You might not be curious about how an HPC/AI cluster actually works — especially if you've already considered renting one instead of building from scratch. But here’s the real question: How do you actually build an AI-ready HPC cluster that can handle your most demanding workloads?

Without understanding how the system operates under the hood, it's incredibly hard to know whether your infrastructure is truly optimized for, or even capable of, solving your AI challenges.

As one of the first teams to build a large-scale AI cluster in Asia, we’re here to share our journey — the real, gritty, behind-the-scenes lessons learned. This is part of an ongoing blog series where we walk you through the entire process, from uncertainty to execution.

So how did we decide to take on something this huge and risky? Stay tuned for what's next.

Starting from What People Need

If you’re running serious AI workloads — training large language models, processing massive datasets, or running production inference at scale — sooner or later, you’ll hit a breaking point. That’s when the real question surfaces: “Should I keep renting infrastructure, or is it time to build my own AI cluster?”

At first, renting feels easy. You spin up instances, scale on demand, and avoid the upfront costs of hardware. But for teams pushing boundaries with high-performance compute, the honeymoon phase doesn't last. Here’s what that journey usually looks like — emotionally and financially:

Stage 1: Denial

"The bill is expensive, but we’ll optimize it next month." You see your cloud invoice rising. It stings a little, but you justify it. Maybe it was the experiment last week. Maybe you just need to automate shutdowns. Maybe next month will be better.

Stage 2: Anger

"Why is the bill still not reduced?" You start digging into usage reports. You realize how hard it is to track GPU burn. And worse — you’re paying top dollar for machines that often sit idle between jobs.

Stage 3: Optimization

"Let’s try reserved instances, use spot pricing, prune idle notebooks, optimize training loops..." You try every trick in the book. And it works — a little. But it adds complexity, time, and risk. Your infra team is now spending half their time managing cost instead of performance.

Stage 4: Disappointment

"Spot instances aren’t reliable. Reserved instances are too rigid. None of this feels right." You start realizing the tools aren’t built for sustained, high-efficiency AI work. The cost-performance curve is plateauing, and no amount of tuning seems to close the gap.

Stage 5: Acceptance (with stress)

"Cloud is expensive. AI cloud is really expensive." You accept the truth: Running state-of-the-art AI workloads on general-purpose cloud infrastructure isn’t cheap, and won’t be anytime soon. The pricing models just don’t scale in your favor.

No matter how promising it seems, constructing an AI cluster isn't easy; we've lived through the chaos firsthand. But when done right, it unlocks long-term cost efficiency, performance control, and deep infrastructure visibility. It was also what allowed us to find and serve our customers within a few months, a pace that compared well with competitors around the world.
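To make the "keep renting or build" question a little more concrete, here is a minimal break-even sketch. It is not our actual cost model: the `CostModel` fields and the `break_even_months` helper are hypothetical names, and the example figures are purely illustrative, not real GreenNode costs. It assumes a flat monthly cloud bill for the workload, a one-time upfront cost for owning equivalent hardware, and a flat monthly operating cost (power, colocation, staff, support) once you own it.

```python
import math
from dataclasses import dataclass


@dataclass
class CostModel:
    """Hypothetical inputs for a rent-vs-build comparison (all values are assumptions)."""
    upfront_capex: float       # one-time hardware + deployment cost of an owned cluster
    monthly_cloud_cost: float  # monthly cost of running the same workload on rented GPUs
    monthly_owned_opex: float  # monthly power, colocation, staff, and support for the owned cluster


def break_even_months(m: CostModel) -> float:
    """Months until cumulative cloud spend equals capex plus cumulative owned opex.

    Solves: monthly_cloud_cost * t = upfront_capex + monthly_owned_opex * t
    Returns math.inf when owning never pays off (monthly savings <= 0).
    """
    monthly_savings = m.monthly_cloud_cost - m.monthly_owned_opex
    if monthly_savings <= 0:
        return math.inf
    return m.upfront_capex / monthly_savings


if __name__ == "__main__":
    # Purely illustrative numbers, not real costs.
    example = CostModel(upfront_capex=1_200_000,
                        monthly_cloud_cost=100_000,
                        monthly_owned_opex=40_000)
    print(f"Break-even after ~{break_even_months(example):.1f} months")
```

With these made-up inputs, owning pays for itself after 20 months. The sketch deliberately leaves out utilization, hardware depreciation, and financing, all of which shift the break-even point in practice.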

If you’re a hyperscaler — armed with massive budgets, a world-class team, and a lineup of global partners ready to do the heavy lifting — this post probably isn’t for you. But if you’re building a project where internal AI workloads and customer-serving infrastructure need to coexist and scale, this may be the most comprehensive guide you’ll find.

We Took Stock of the Experience We Had Built Up

With decades of experience delivering infrastructure services for internal and enterprise customers, we’ve grown used to solving scale. But the rise of AI brought a completely different kind of challenge — one that traditional infrastructure models weren’t designed for.

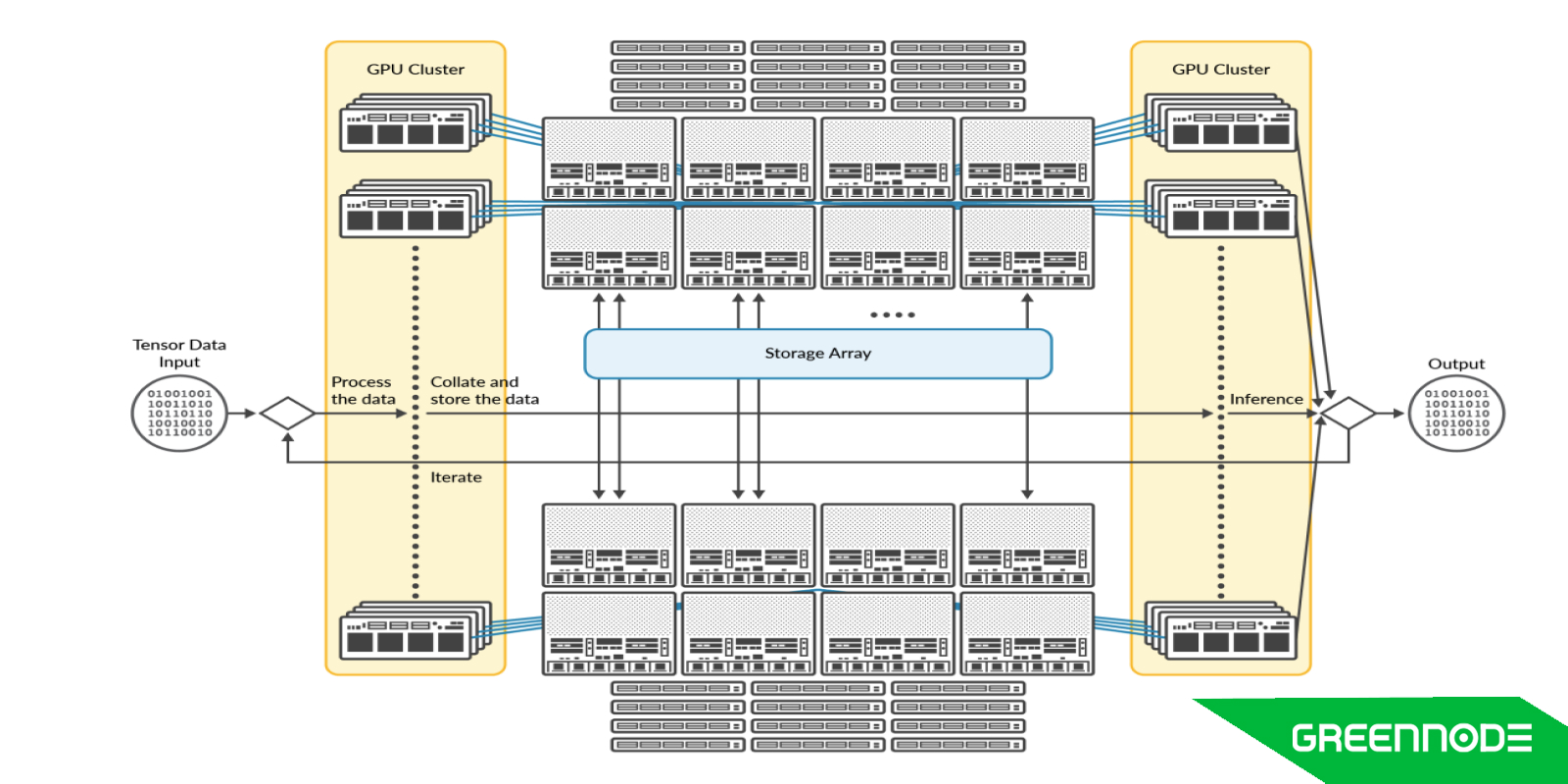

AI workloads demand a new breed of architecture: extremely high compute density, ultra-low-latency communication between GPUs, and storage that can sustain terabyte-scale data throughput. It's a different game. We knew what we were good at, and what we weren't. Instead of pretending to be AI infrastructure experts overnight, we leaned on our internal experience and sought out best practices from those who had walked this path before.

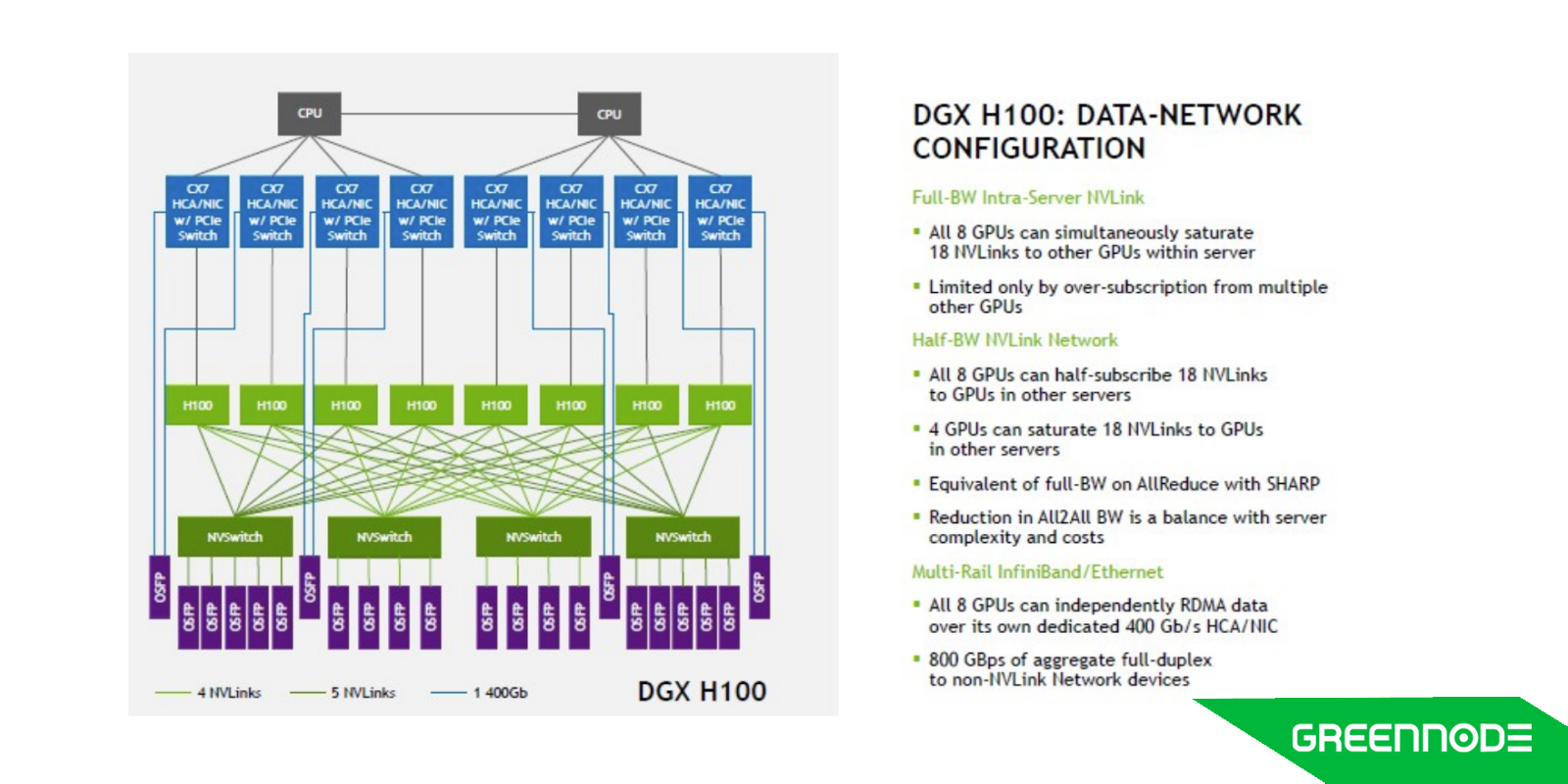

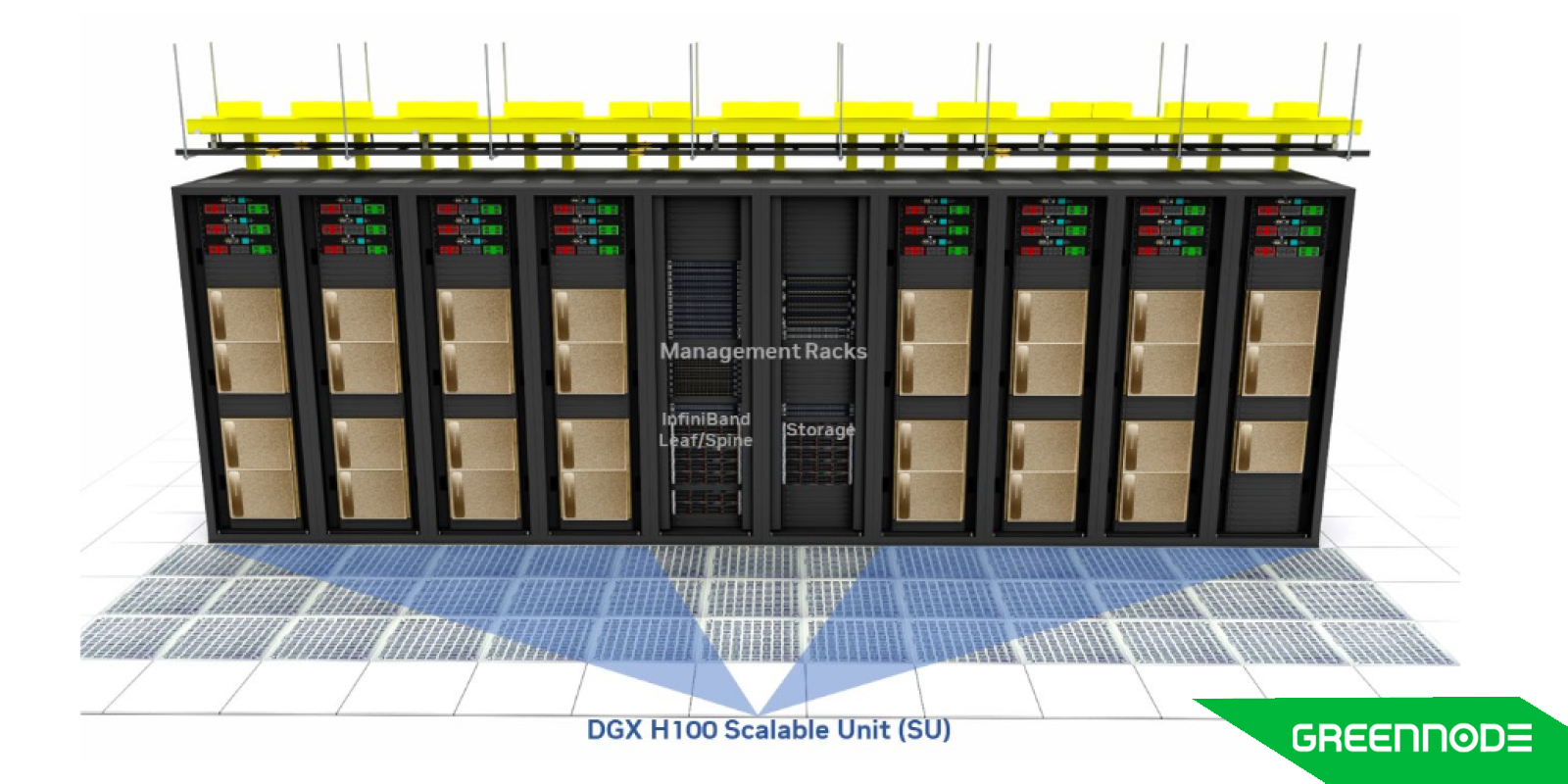

Thanks to earlier internal projects across business units, we already had access to DGX H100 systems, which gave us a solid starting point. This setup, following NVIDIA's classic plug-and-play architecture, gave us early familiarity with data center preparation, networking fabric, and storage design aligned with NVIDIA's best practices.

Throughout the process, we also supported the deployment of a small-scale production cluster powering AI chatbot and virtual assistant workloads for an internal initiative. This gave us firsthand insights into the demands of real AI applications — from workload patterns to operational challenges — and helped us understand how infrastructure must adapt to support them effectively.

However, while NVIDIA DGX SuperPOD offers a fast and fully supported path to deployment, scaling it would have been prohibitively expensive for us. Instead, we took the more complex route: building a cluster on HGX hardware under the NVIDIA Cloud Partner (NCP) program.

This path came with significant architectural differences. DGX is a complete system that NVIDIA designs, validates, and supports end to end, whereas HGX is a GPU baseboard platform that server partners build into their own designs. The GPUs inside each node are still connected through NVLink and NVSwitch, but the surrounding integration and tuning that make DGX feel plug-and-play fell largely to us and our partners. That alone introduced a whole new layer of complexity. Beyond the hardware, we found ourselves in unfamiliar territory, especially while setting up the very first rack. Many of these challenges, and how we approached them, will be covered in upcoming parts of this series.

Throughout this journey, we engaged with partners from across the globe. Their input not only helped us technically, but also exposed us to vastly different working styles and tech cultures — each leaving behind lessons far beyond just architecture and infrastructure.

Then We Took a Leap into Uncertainty

When we started, things weren’t exactly crystal clear. In fact, we were confused. Most of the time, our team was connecting the dots based on previous experience, reference architectures, and a lot of guesswork. The tech we worked with was cutting-edge — new hardware, new software — and too rare and expensive to fully test in a lab environment. A full-cluster POC simply wasn’t an option.

So we took a practical approach: test what we could, and learn from those ahead of us.

Along the way, we dug through every document we could find, only to realize how scarce good resources were.

We drew insights from:

- NVIDIA DGX SuperPOD documentation

- Hyperscaler architectures from Azure and Meta

- "Designing Data Centers for AI Clusters" from Juniper Networks

- Many conversations with KSC, CoreWeave, and Lambda Labs

- Our own 20 years of experience operating large-scale Internet services

- NVIS (formerly NVPS, NVIDIA Professional Services), who supported us along the way

The lack of official documentation and reference architecture guides was a major challenge. We had to make numerous assumptions along the way — and that proved to be incredibly time-consuming.

In the end, our key takeaways were simple but hard-earned:

- Necessity is the mother of invention

- Assumptions waste time

- Open communication saves time

- Failures are part of the process, so learn from them quickly

- Balance matters — especially between experienced engineers and fresh perspectives

Throughout this journey, we owe special thanks to our partners at STT Bangkok, the NVIDIA technical team, VAST Data, Gigabyte, Hydra Host, and the other friends and vendors who stood by us for months, across time zones, cultures, and countless late-night calls, to make this project possible.

Final Verdict

So, while we had a strong head start, building a truly scalable, production-grade AI cluster was far from plug-and-play. It demanded custom architecture, constant iteration, and a willingness to embrace trial and error at every step.

This wasn’t just about deploying servers or wiring up GPUs — it was about rethinking infrastructure for a new class of workloads, where performance bottlenecks and architectural mismatches can quietly erode everything you're trying to achieve.

In the upcoming parts of this series, we’ll dive deeper into the technical design, hardware considerations, network architecture, storage strategy, and operational lessons that shaped our build. If you have any questions about the GreenNode AI Cluster — or simply want support so you don’t feel as lost as we once did — join our community on Discord and connect with our team directly: GreenNode Discord.

You can also explore available GreenNode GPU instances and other AI-ready products at: GreenNode - One stop solution for your AI journey