According to NVIDIA, the GPU cloud market is projected to surpass $25 billion by 2030, with demand for H100- and H200-class GPUs driving much of this growth. Enterprises, startups, and research labs are now asking: Should we stick with the proven H100, or upgrade to the H200?

This blog provides a full breakdown of the specifications, performance benchmarks, real-world use cases, pricing, and adoption trends for the NVIDIA H100 vs H200, helping you make the right decision for 2025 and beyond.

Why the NVIDIA H100 vs H200 Comparison Matters

The demand for GPU-accelerated computing is exploding. From training large language models (LLMs) to powering high-performance computing (HPC) and enabling real-time inference, GPUs are now the backbone of modern AI infrastructure.

NVIDIA’s H100 Tensor Core GPU, launched in 2022, quickly became the industry’s flagship for AI training and inference, powering state-of-the-art models like OpenAI’s GPT-4 and Anthropic’s Claude. In fact, the H100 became so essential that analysts estimated over 500,000 H100s were shipped in 2023 alone, generating billions in revenue for NVIDIA.

But the evolution didn’t stop there. In late 2023, NVIDIA unveiled the H200 GPU, built on the same Hopper architecture, but enhanced with next-generation HBM3e memory technology. With more memory, higher bandwidth, and better efficiency, the H200 is designed for the next wave of generative AI and exascale HPC workloads.

Overview of NVIDIA H100 GPU

Specs and Architecture

The NVIDIA H100 GPU is built on the Hopper microarchitecture and optimized for AI and HPC.

Key Specifications (NVIDIA, 2023):

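- CUDA Cores: 16,896 on the SXM version (14,592 on the PCIe version)

- Tensor Cores: 4th Gen Tensor Cores, delivering up to 67 teraflops of FP64 and roughly 1,000 teraflops of dense FP16 performance (about 2,000 teraflops with sparsity)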

- Memory: 80 GB HBM3

- Memory Bandwidth: 3.35 TB/s

- Process Technology: TSMC 4N

NVIDIA positions this as up to a 3× jump in performance over the previous-generation A100, especially in AI training throughput.

Use cases of H100

The NVIDIA H100 Tensor Core GPU is built for high-performance, multi-purpose AI workloads, ranging from training massive foundation models to powering real-time inference systems in production. It represents a generational leap in compute density, memory bandwidth, and scalability across AI, data analytics, and scientific computing.

AI Model Training

The H100’s Hopper architecture and Transformer Engine are optimized for training extremely large-scale AI models.

- Large Language Models (LLMs): Enables efficient training of models such as GPT-class, LLaMA 3, Mistral, or GreenMind, with training up to 4× faster than on the A100.

- Computer Vision: Accelerates convolutional and transformer-based architectures used for autonomous driving perception stacks and satellite image analysis.

- Speech and NLP: Reduces latency in speech recognition and translation models like Whisper or BERT-based systems, crucial for real-time communication tools.

Example: Enterprises deploying multi-node DGX H100 clusters report up to 30% lower cost per training run for LLM fine-tuning compared to A100 clusters, thanks to mixed-precision optimization and NVLink bandwidth.

AI Inference and Real-Time Serving

The H100 excels at low-latency inference for generative AI, delivering massive throughput for deployed models.

- Generative AI Applications: Supports high-volume API serving for chatbots, copilots, and multimodal assistants built on open LLMs like Falcon, GreenMind, or Mistral.

- Recommendation Systems: Powers personalized content delivery in streaming and e-commerce platforms, handling billions of inferences per day.

- Multi-Model Serving: When paired with NVIDIA NIM or Triton Inference Server, the H100 enables efficient multi-model serving in enterprise or cloud environments.

Example: Inference throughput on the H100 using vLLM or Triton can exceed 10,000 tokens per second on optimized LLMs, ideal for high-traffic generative AI APIs.

Enterprise Data Analytics and AI Pipelines

With GPU acceleration for data frameworks such as RAPIDS, Spark RAPIDS, and cuDF, the H100 extends its utility beyond model training.

- Data Engineering: Enables real-time ETL and feature engineering for terabyte-scale data lakes.

- Business Intelligence (BI): Accelerates dashboard rendering, analytics queries, and anomaly detection.

- AI-Powered Databases: Integrates with GPU-accelerated systems like Heavy.AI and BlazingSQL for analytics at unprecedented scale.

Example: Financial institutions using RAPIDS on H100 GPUs reported up to 45× speed improvements in portfolio risk simulations compared to CPU-only clusters.

Multi-Modal and Generative AI Workflows

The H100’s high memory bandwidth (HBM3 up to 3.35 TB/s) and support for massive parallelism make it ideal for multi-modal workloads that combine text, image, and audio.

- Video Understanding: Real-time captioning and object detection in video surveillance or media workflows.

- Healthcare Imaging: AI-powered diagnostics combining radiology and text reports.

- Creative AI: Supports diffusion models like Stable Diffusion XL and text-to-video generation pipelines.

Example: When paired with NVIDIA’s TensorRT optimization, diffusion-based image generation on the H100 achieves 2–3× faster rendering times than on the A100.

Overview of NVIDIA H200 GPU

Specs and New Improvements

The NVIDIA H200, introduced in late 2023, is an upgraded Hopper GPU featuring HBM3e memory, which delivers both higher capacity and bandwidth.

Key Specifications (NVIDIA, 2024):

- CUDA Cores: Same as the H100 SXM (16,896)

- Tensor Cores: 4th Gen Tensor Cores (same Hopper generation as the H100)

- Memory: 141 GB HBM3e (vs 80 GB on H100)

- Memory Bandwidth: 4.8 TB/s (43% higher than H100)

- Energy Efficiency and TCO: NVIDIA claims up to 50% lower energy use and total cost of ownership (TCO) than the H100 for LLM inference workloads

Performance Benchmarks

- Memory Advantage: The H200 offers 76% more memory and 43% higher bandwidth than H100

- LLM Benchmarking: MLPerf tests on Llama2-70B show the H200 reaching 31,712 tokens/second versus 21,806 tokens/second for the H100, a ~45% improvement

- AI Inference: NVIDIA reports up to 1.9x faster inference performance for generative AI workloads

- HPC Workloads: NVIDIA reports up to 110x faster time to results than CPU-only systems for memory-intensive scientific simulations
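The percentage gains quoted here follow directly from the raw figures. As a quick sanity check, the sketch below recomputes them from the memory, bandwidth, and MLPerf numbers cited in this article; the dictionary keys and the `percent_gain` helper are illustrative names, not part of any NVIDIA tool.

```python
# Figures quoted in this article for the H100 and H200.
H100 = {"memory_gb": 80, "bandwidth_tb_s": 3.35, "llama2_70b_tok_s": 21_806}
H200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8, "llama2_70b_tok_s": 31_712}


def percent_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


# One gain per metric, H200 relative to H100.
gains = {key: percent_gain(H200[key], H100[key]) for key in H100}

for key, gain in gains.items():
    print(f"{key}: +{gain:.0f}%")
```

Running it gives roughly +76% memory, +43% bandwidth, and +45% Llama2-70B throughput, which is why the bandwidth gain is best read as "about 43%".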

Use Cases of the NVIDIA H200 GPU

The NVIDIA H200 Tensor Core GPU is the next evolution of the Hopper architecture, designed to push the limits of AI inference, fine-tuning, and high-performance computing. With 141 GB of HBM3e memory and 4.8 TB/s of bandwidth, it delivers about 1.76x the memory capacity and 1.43x the bandwidth of the H100, making it ideal for massive AI and HPC workloads that demand both scale and efficiency.

AI Inference at Scale

The H200 is purpose-built for high-throughput, low-latency inference, enabling organizations to deploy large-scale AI services efficiently.

- Large Language Models (LLMs): Ideal for hosting massive open or proprietary LLMs on a single GPU, reducing the need for model parallelism.

- Generative AI Applications: Optimized for serving real-time chatbots, copilots, and content-generation systems, processing more tokens per second with lower latency.

- Enterprise Inference APIs: When paired with NVIDIA Triton Inference Server or NIM microservices, H200 accelerates model deployment in production across industries.

Example: Benchmarks show H200 can deliver up to 60% higher inference throughput than the H100 on large transformer models, enabling faster, cheaper serving for generative AI platforms.
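Whether a model can be hosted on a single GPU comes down mostly to the size of its weights. The sketch below is a rough back-of-the-envelope estimate, not a sizing tool: it assumes weights dominate memory use, reserves a hypothetical 10% headroom for KV cache and activations, and uses an illustrative 50B-parameter model. The function names are made up for this example.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}
# Memory capacities quoted in this article.
GPU_MEMORY_GB = {"H100": 80, "H200": 141}


def weights_gb(params_billions: float, dtype: str) -> float:
    """Weight footprint in GB (1 GB = 1e9 bytes); ignores KV cache and activations."""
    return params_billions * BYTES_PER_PARAM[dtype]


def fits_on_single_gpu(params_billions: float, dtype: str, gpu: str,
                       headroom: float = 0.10) -> bool:
    """True if the weights fit while keeping `headroom` (an assumed 10%) free."""
    usable_gb = GPU_MEMORY_GB[gpu] * (1.0 - headroom)
    return weights_gb(params_billions, dtype) <= usable_gb


# Hypothetical 50B-parameter model served in FP16 (about 100 GB of weights).
for gpu in GPU_MEMORY_GB:
    print(gpu, fits_on_single_gpu(50, "fp16", gpu))
```

Under these assumptions the 50B FP16 model needs sharding on the H100 but fits on one H200. Real deployments also need room for long contexts and batching, so treat the headroom value as a starting guess.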

Fine-Tuning and Adaptation of LLMs

The H200’s expanded memory (141 GB HBM3e) makes it a powerhouse for fine-tuning and adaptation of foundation models, particularly where large context windows or long sequences are required.

- Domain-Specific Fine-Tuning: Enables efficient retraining of base models for fields like finance, healthcare, or legal AI without sharding across GPUs.

- Parameter-Efficient Methods (LoRA, Adapters): Supports larger batch sizes and longer context fine-tuning with better memory utilization.

- Training Efficiency: Combined with NVIDIA NeMo for fine-tuning (and TensorRT-LLM for serving the resulting model), teams can fine-tune billion-parameter models with lower power and time costs.

Example: Enterprises report that H200 clusters can reduce fine-tuning time for multi-billion parameter models by up to 40% compared to H100 configurations.
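To see why parameter-efficient methods such as LoRA are so light on memory, it helps to count the trainable parameters. LoRA freezes a weight matrix of shape `d_out × d_in` and trains two small matrices of rank `r` instead, which adds `r × (d_in + d_out)` parameters. The sketch below uses a hypothetical 4096 × 4096 projection layer and rank 8 purely for illustration.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Parameters in a dense weight matrix of shape (d_out, d_in)."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds: A is (rank, d_in), B is (d_out, rank)."""
    return rank * (d_in + d_out)


# Hypothetical layer size and rank, chosen only for illustration.
d_in, d_out, rank = 4096, 4096, 8
full = full_params(d_in, d_out)
lora = lora_trainable_params(d_in, d_out, rank)
print(f"full: {full:,}  lora: {lora:,}  share: {lora / full:.2%}")
```

Because only a fraction of a percent of each adapted layer is trained, the optimizer state stays small, and the extra memory on the H200 can go toward larger batches or longer sequences instead.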

High-Performance Computing (HPC) and Scientific Simulation

The H200 also extends NVIDIA’s dominance in HPC and scientific modeling, delivering record memory throughput for large-scale simulations.

- Climate and Weather Modeling: Supports exascale workloads with improved precision and reduced time-to-solution.

- Physics and Engineering Simulations: Enables larger mesh resolutions for CFD, materials science, and energy simulations.

- Genomics and Bioinformatics: Accelerates large-scale sequencing, molecule discovery, and AI-driven protein folding.

Example: Research clusters such as Japan’s ABCI 3.0 supercomputer use H200 GPUs to combine AI and HPC, achieving unprecedented simulation speed for both scientific and generative workloads.

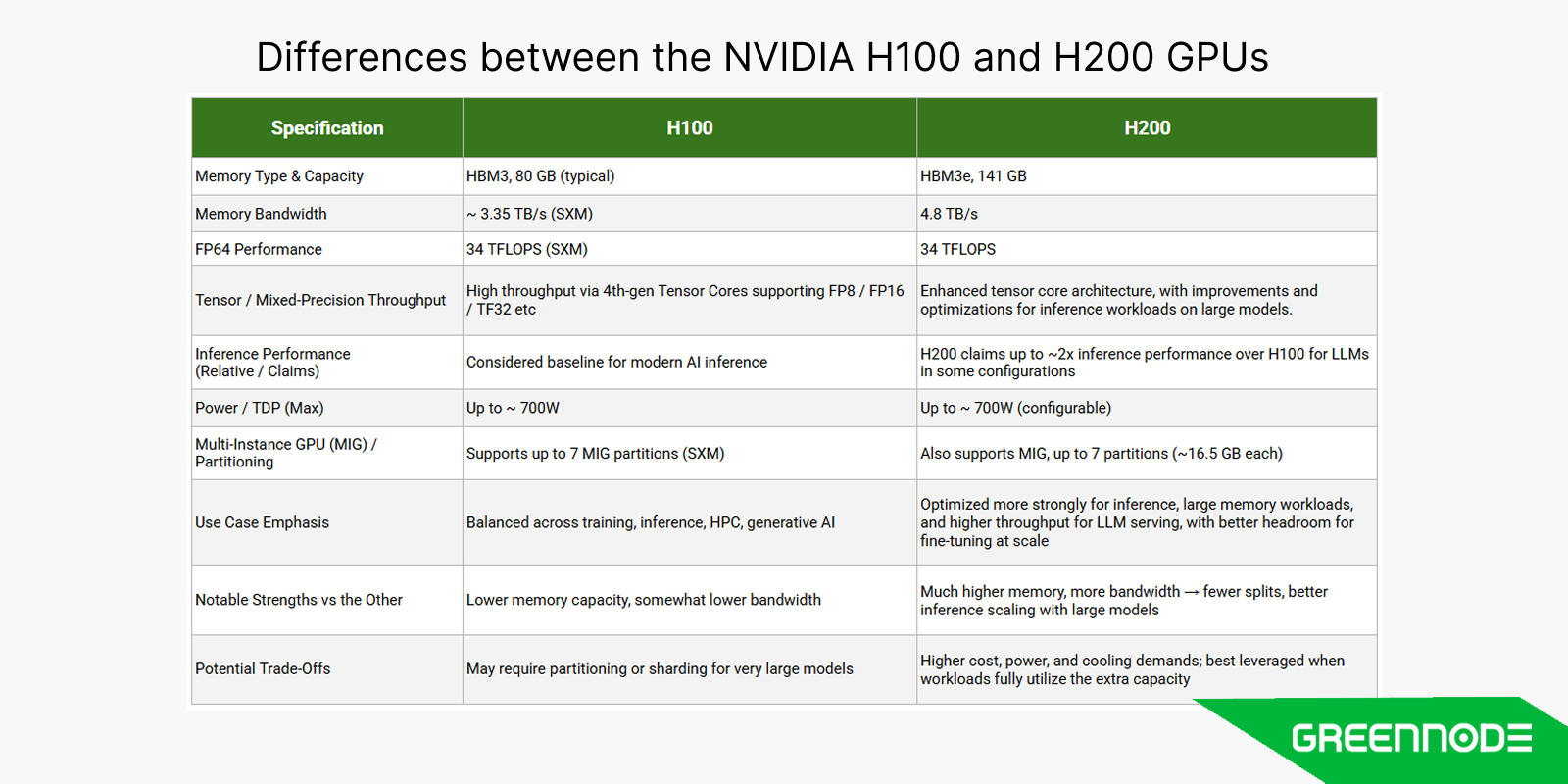

What’s the Difference Between the NVIDIA H100 and H200?

Memory Capacity and Bandwidth

H100: 80GB HBM3, 3.35 TB/s

H200: 141GB HBM3e, 4.8 TB/s

The H200 nearly doubles memory capacity and increases bandwidth by over 40%. This upgrade allows the H200 to handle larger AI models and data-intensive HPC tasks more efficiently.
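One way to see why bandwidth matters for LLM serving: at small batch sizes, generating each token requires reading roughly all of the model weights from memory, so memory bandwidth sets a ceiling on tokens per second. The sketch below applies that common rule of thumb. It is an upper bound under simplifying assumptions (batch size 1, weights read once per token, no KV cache traffic), and the 70 GB model size is a hypothetical example.

```python
# Memory bandwidth figures quoted in this article, in TB/s.
BANDWIDTH_TB_S = {"H100": 3.35, "H200": 4.8}


def decode_ceiling_tok_s(bandwidth_tb_s: float, weights_gb: float) -> float:
    """Rough upper bound on batch-1 decode speed: bandwidth / bytes read per token."""
    return bandwidth_tb_s * 1000.0 / weights_gb


# Hypothetical model with 70 GB of weights (e.g. 70B parameters at 1 byte each).
weights_gb = 70.0
ceilings = {gpu: decode_ceiling_tok_s(bw, weights_gb)
            for gpu, bw in BANDWIDTH_TB_S.items()}

for gpu, ceiling in ceilings.items():
    print(f"{gpu}: at most ~{ceiling:.0f} tokens/s per sequence")
```

Whatever the model size, the ratio between the two ceilings equals the bandwidth ratio, about 1.43. Measured throughput depends on batching, kernels, and the serving stack, so real numbers will differ.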

AI Training Performance Comparison

While the NVIDIA H100 excels at training state-of-the-art AI models, the H200 goes further by improving throughput and cutting training times for LLMs and generative AI workloads that exceed the H100’s memory capacity.

Efficiency and Scalability for LLMs

The NVIDIA H100 remains highly efficient for standard AI models, while the H200 is purpose-built for scaling beyond today’s models, supporting larger batch sizes and more efficient parameter handling.

Pricing and Availability in 2025

H100 GPUs are widely available in cloud instances and DGX systems, with prices that remain high but are stabilizing. The H200 is expected to cost more due to its advanced memory technology, and availability will initially be limited to premium cloud providers and high-end DGX systems.
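A higher hourly price does not automatically mean a higher cost per token. The sketch below works out the break-even point using the MLPerf Llama2-70B throughput figures cited earlier in this article. No real prices are used: the H100 price is normalized to 1.0, and the calculation assumes your workload sees the same speedup as the benchmark and keeps the GPU fully utilized.

```python
# MLPerf Llama2-70B throughput figures quoted in this article (tokens/second).
H100_TOK_S = 21_806
H200_TOK_S = 31_712


def cost_per_million_tokens(hourly_price: float, tokens_per_second: float) -> float:
    """Cost to generate one million tokens at full utilization."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price / tokens_per_hour * 1_000_000


# The H200 is cheaper per token as long as its hourly price is below
# this multiple of the H100's hourly price.
break_even_ratio = H200_TOK_S / H100_TOK_S

# Normalized prices (H100 = 1.0 per hour); not real market prices.
h100_cost = cost_per_million_tokens(1.0, H100_TOK_S)
h200_cost = cost_per_million_tokens(break_even_ratio, H200_TOK_S)

print(f"Break-even H200/H100 hourly price ratio: {break_even_ratio:.2f}")
```

In other words, if an H200 instance costs less than about 1.45 times an H100 instance and your workload resembles the benchmark, the H200 comes out cheaper per token.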

NVIDIA H100 vs H200: Which GPU Should You Choose?

Choosing between the NVIDIA H100 and H200 largely depends on your workload requirements, budget, and scale of deployment.

Choose H100 if:

- You need a widely available, cost-optimized GPU.

- Your workloads fit within 80GB HBM3 memory.

- You’re running AI inference or mid-sized model training.

Choose H200 if:

- You’re training LLMs with billions of parameters.

- You require larger memory capacity (141GB) for big datasets.

- You need maximum throughput for generative AI or HPC.

- Budget allows for premium pricing.
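The two checklists above can be condensed into a small helper. This is only a sketch of the rules of thumb in this article, not an official sizing guide; `recommend_gpu` and its parameters are hypothetical names introduced for illustration.

```python
# Memory capacities quoted in this article.
H100_MEMORY_GB = 80
H200_MEMORY_GB = 141


def recommend_gpu(required_memory_gb: float,
                  needs_max_throughput: bool = False,
                  premium_budget: bool = False) -> str:
    """Apply the article's rules of thumb to pick a GPU for one workload."""
    if required_memory_gb > H200_MEMORY_GB:
        # Too large for any single GPU discussed here; needs sharding.
        return "multi-GPU"
    if required_memory_gb > H100_MEMORY_GB:
        # Fits a single H200 but not a single H100.
        return "H200"
    if needs_max_throughput and premium_budget:
        # Fits either card; pay for the extra bandwidth only if it is needed.
        return "H200"
    return "H100"


print(recommend_gpu(60))
print(recommend_gpu(120))
print(recommend_gpu(60, needs_max_throughput=True, premium_budget=True))
```

Memory need is checked first because it is the hard constraint; throughput and budget only decide the outcome when the workload fits on either card.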

In conclusion, the H100 remains the most practical choice for many enterprises and startups, while the H200 is positioned as the future-ready GPU for organizations pushing the limits of AI scale.

Ready to Harness the Power of NVIDIA H100 and H200 with GreenNode?

The future of AI, cloud, and high-performance computing is being shaped by GPUs like the NVIDIA H100 and H200. Whether you’re scaling AI training, running HPC simulations, or deploying real-time inference, success depends not only on hardware, but on having the right partner to deliver it.

At GreenNode, we provide:

- Lower pricing compared to major cloud GPU providers

- Instant access to GPU compute infrastructure (H100 & H200)

- 24/7 dedicated support from experts who understand AI workloads

Accelerate your AI journey today with GreenNode’s GPU compute solutions. Talk to our team to book a brief consultation.

FAQs

1. What are the main differences between NVIDIA H100 and H200 GPUs?

The H200 nearly doubles memory (141GB HBM3e vs 80GB HBM3) and boosts bandwidth by about 43%, making it better for large AI models and HPC workloads.

2. Is the NVIDIA H200 better than the H100 for AI training?

Yes, for workloads limited by memory capacity or bandwidth. The H200’s HBM3e memory and higher bandwidth improve training throughput and make it possible to handle larger datasets and models than the H100.

3. How much will NVIDIA H200 cost compared to H100 in 2025?

The H200 will be priced higher due to advanced memory tech and initial limited availability. Cloud providers are expected to offer premium-priced instances.

4. Can startups access H100 and H200 GPUs through cloud providers?

Yes. GreenNode provides access to H100 instances, with H200 availability rolling out in 2025. This makes GPUs accessible without hardware investment.