Large language models (LLMs) like Gemini and GPT-4 have revolutionized how we interact with technology. They generate human-like text, assist in customer service, power search engines, and even help with creative tasks. But did you know that training these powerful models comes with significant costs—both in terms of money and resources?

But just how expensive are we talking about? Whether you're a startup dreaming big or a tech-savvy business scaling up, understanding these costs can help you make smart decisions.

In this article, we're diving into three main options for training LLMs: building your own GPU cluster, going with a hyperscaler service, or teaming up with a local GPU provider like GreenNode. Each has its quirks, its perks, and yes, its costs. Let’s break it down!

How Do LLMs Work Their Magic?

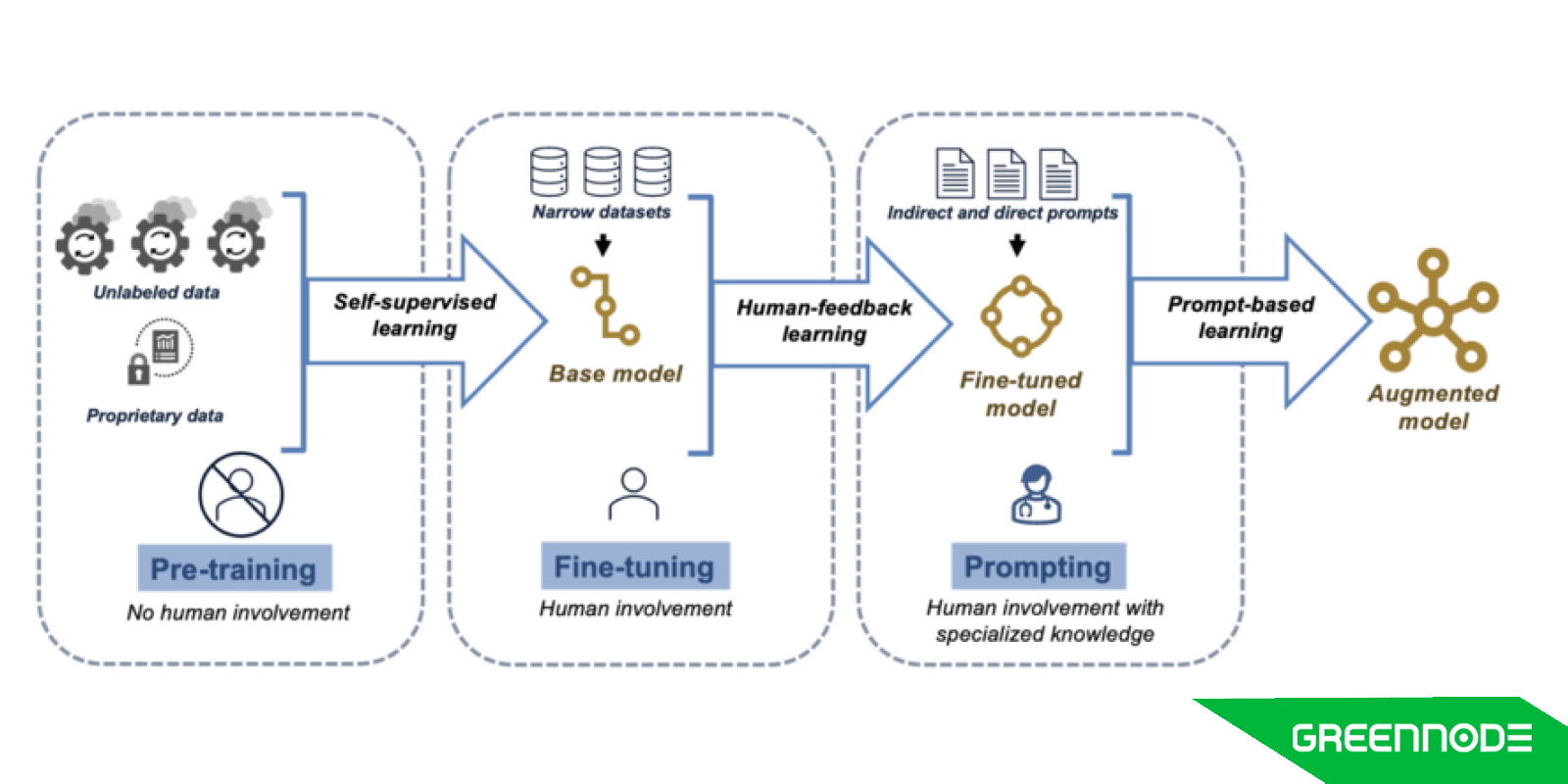

Before we jump into the nitty-gritty of costs, let’s take a quick detour to understand what makes an LLM an LLM. A Large Language Model is essentially a type of artificial intelligence designed to understand, generate, and manipulate human language. Imagine having a machine that can not only comprehend text but also generate responses that sound like they came from a real person. That’s the magic of an LLM.

These models are built on deep learning techniques and trained on vast amounts of data: think of every book, article, picture, and website you’ve ever seen, multiplied by a thousand.

The training process involves teaching the model to recognize patterns in this data so it can predict what word or phrase comes next in a sentence. Building on that skill, the model can answer questions or even write entire articles.
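To make the "predict what comes next" idea concrete, here is a deliberately tiny sketch. It is not how LLMs are built: real models use neural networks over subword tokens with billions of parameters. This toy simply counts which word follows which in a small corpus and predicts the most frequent follower. The names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count which word follows which: a toy stand-in for 'learning patterns'."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "the model predicts the next word",
    "the model learns patterns from data",
    "training data shapes the model",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))
```

With this corpus, `predict_next(model, "the")` returns `"model"`, because "model" follows "the" three times versus once for "next".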

Why Bother with a Custom LLM? Here’s What You’re Missing Out On

In a world where AI is becoming integral to business operations, having a customized LLM can offer significant advantages. While general AI services like ChatGPT are powerful and convenient, they’re designed for broad, generic use cases.

If your business has specific needs—whether it’s handling industry-specific jargon, understanding unique customer interactions, or generating content that aligns perfectly with your brand voice—off-the-shelf models might fall short.

Building your own LLM gives you the flexibility to tailor the model to your exact requirements. You can fine-tune it with your own data, optimize it for specific tasks, and ensure it integrates seamlessly with your existing systems.

This level of customization can result in more accurate predictions, more relevant responses, and a better overall user experience. Plus, it gives you full control over your data and how it’s used.

Now that we know what makes an LLM tick and why you might need your own, let’s talk about how you can train one.

So, What Are Your Options?

Whether you’re thinking about building your own setup, going with a big-name cloud provider, or choosing a specialized local service, each approach has its own set of considerations. Let’s break them down, starting with the DIY route.

Option 1: Building Your Own GPU Cluster – The DIY Route

If you’re the kind of person who prefers to do everything yourself, building your own GPU cluster might sound appealing. It’s like building a custom gaming PC but on a much larger, more expensive scale.

What You’ll Need

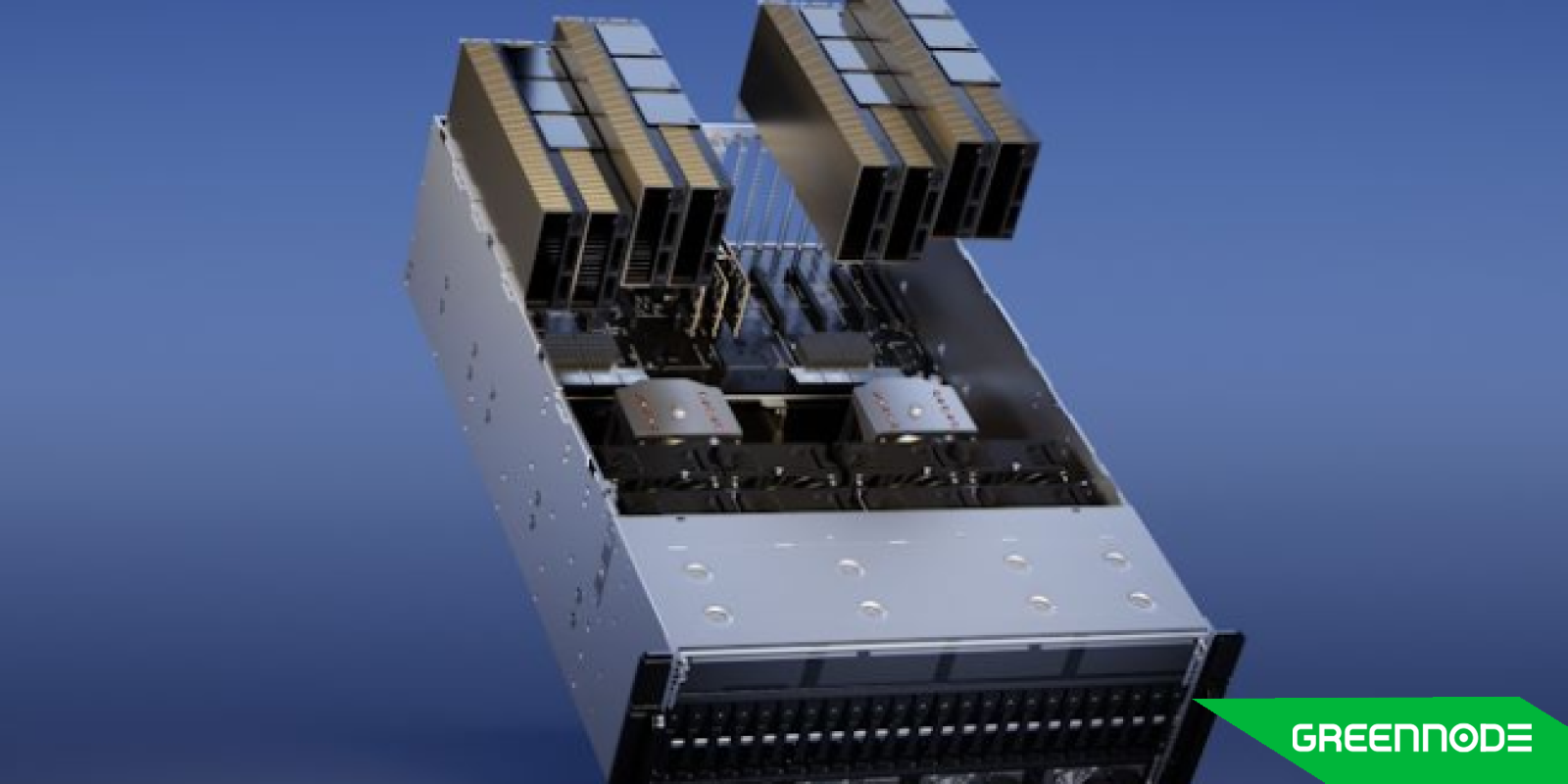

To train an LLM, you’ll need a serious amount of computing power. We’re talking about GPUs (the brains behind AI processing), and not just one or two. Think bigger—like 8 NVIDIA H100 GPUs working together in harmony. But there’s more! You’ll also need a massive dataset, probably ranging from hundreds of gigabytes to a few terabytes, depending on how sophisticated you want your model to be.
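How big a dataset you use drives how long those GPUs stay busy. A commonly cited rule of thumb puts training compute at roughly 6 × (model parameters) × (training tokens) floating-point operations. The sketch below applies it under assumptions that do not come from this article: a hypothetical 7B-parameter model, 1 TB of raw text at about 4 bytes per token, roughly 989 TFLOPS dense BF16 peak per H100 (SXM variant), and 40% utilization. Treat the result as an order-of-magnitude estimate, not a quote.

```python
def training_days(params: float, tokens: float, num_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Rough wall-clock days using the ~6 * params * tokens FLOPs rule of thumb."""
    total_flops = 6 * params * tokens
    effective_flops_per_sec = num_gpus * peak_flops_per_gpu * utilization
    seconds = total_flops / effective_flops_per_sec
    return seconds / 86_400  # seconds per day


# Assumptions (hypothetical, not from the article):
DATASET_BYTES = 1e12       # 1 TB of raw text
BYTES_PER_TOKEN = 4        # rough rule of thumb for English text
tokens = DATASET_BYTES / BYTES_PER_TOKEN  # 2.5e11 tokens

days = training_days(
    params=7e9,                 # a 7B-parameter model
    tokens=tokens,
    num_gpus=8,                 # the 8x H100 cluster described above
    peak_flops_per_gpu=989e12,  # approx. H100 SXM dense BF16 peak
    utilization=0.4,            # assumed fraction of peak actually achieved
)
print(f"~{days:.0f} days on 8 GPUs")
```

Under these assumptions the run takes a little over five weeks; doubling either the dataset or the model size roughly doubles that time.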

What It’ll Cost You

Ready for some sticker shock? Each NVIDIA H100 GPU will set you back around $30,000. For a cluster with 8 of these, you’re looking at a cool $240,000 just for the GPUs. Throw in networking gear, storage, and other essentials, and your total might climb to $300,000 or even $350,000. That’s a lot of zeros!
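The arithmetic behind these figures is easy to script, which makes it simple to rerun with your own quotes. This sketch uses the article's approximate prices; `cluster_capex` is a hypothetical helper, and the $300,000 to $350,000 totals are the article's estimates rather than vendor quotes.

```python
def cluster_capex(num_gpus: int, gpu_price: float, other_hardware: float) -> float:
    """Up-front hardware cost: GPUs plus networking, storage, and other essentials."""
    return num_gpus * gpu_price + other_hardware


gpu_only = cluster_capex(num_gpus=8, gpu_price=30_000, other_hardware=0)
print(f"GPUs alone: ${gpu_only:,.0f}")

# The article's $300k-$350k totals imply roughly $60k-$110k of non-GPU hardware.
for total in (300_000, 350_000):
    other = total - gpu_only
    print(f"${total:,} total -> ${other:,.0f} non-GPU ({other / total:.0%})")
```

In other words, networking, storage, and the rest account for roughly a fifth to a third of the up-front bill in the article's estimate.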

Once you’ve built your dream GPU cluster, keeping it operational can become a significant financial burden. Power bills alone can be sky-high, not to mention the cooling costs required to prevent overheating. Maintenance and upgrades are ongoing expenses you can’t ignore.

Additionally, if you choose to host your cluster in a Tier III data center for enhanced reliability, you’ll need to factor in the rental costs. These can reach $23,000/kW, depending on the specific location and services offered. This doesn’t include other operational costs, which can further inflate your expenses.

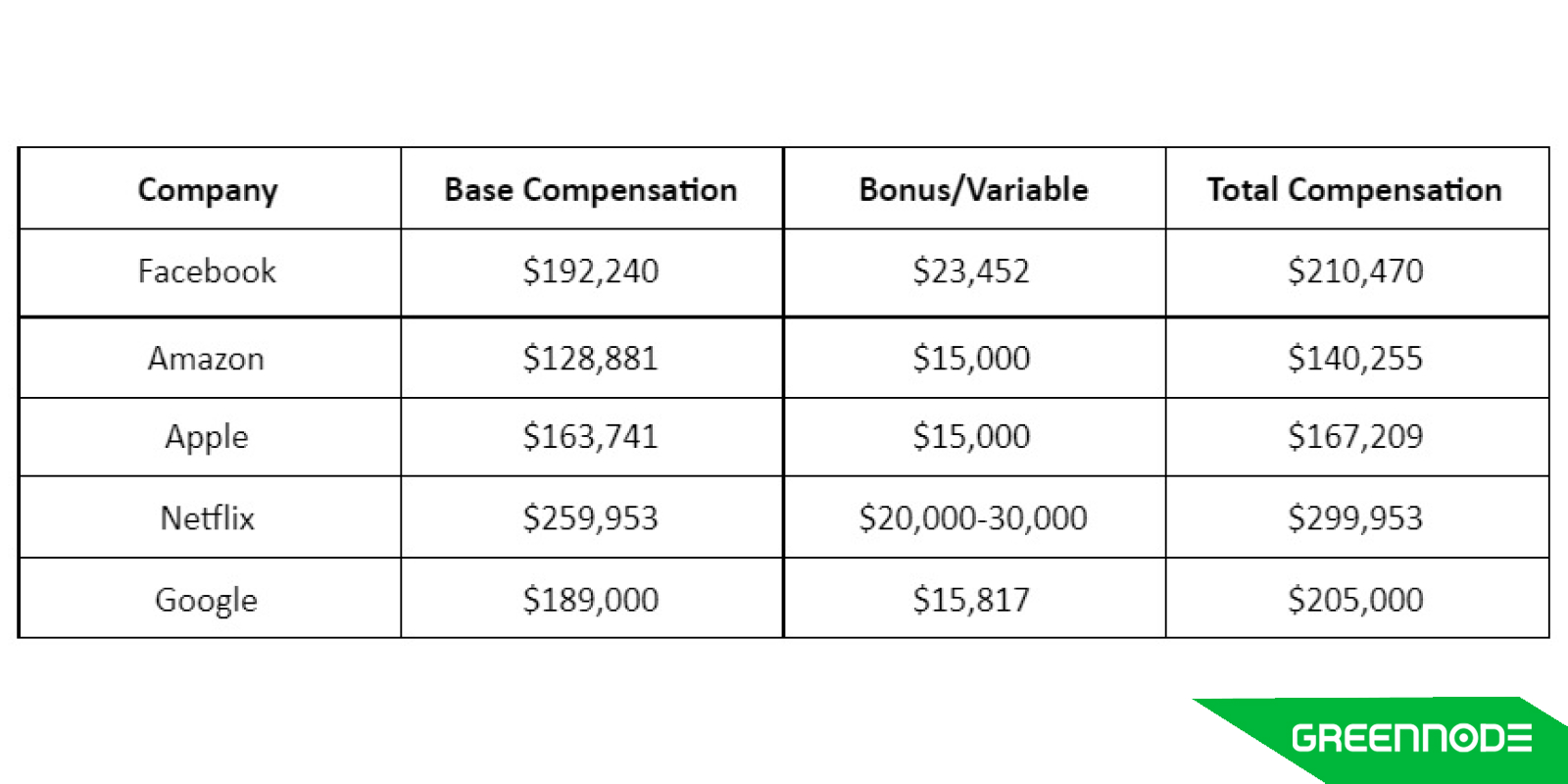

Building a cluster is one thing, but running it? That’s a whole other level. You’ll need a team of AI pros and IT gurus to keep everything humming along smoothly, and you can expect to pay $100,000 to $250,000 per year per expert.
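Staffing quickly rivals the hardware bill. The sketch below assumes a hypothetical team of three experts, a number the article does not specify, and combines their salaries with the article's $300,000 to $350,000 hardware estimate for a rough first-year figure. It still leaves out power, cooling, and data center fees.

```python
def first_year_cost(capex: float, num_experts: int, salary: float) -> float:
    """Hardware purchase plus one year of salaries (excludes power, cooling, hosting)."""
    return capex + num_experts * salary


TEAM_SIZE = 3  # hypothetical team size; substitute your own

low = first_year_cost(capex=300_000, num_experts=TEAM_SIZE, salary=100_000)
high = first_year_cost(capex=350_000, num_experts=TEAM_SIZE, salary=250_000)
print(f"First year: ${low:,.0f} to ${high:,.0f}")
```

With three experts, salaries alone come to $300,000 to $750,000 a year, so the first year lands between about $600,000 and $1.1 million before any operating costs.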

The upside? You’re in control, and you can tweak everything to your heart’s content. The downside? It’s expensive, complex, and requires serious know-how. Unless you’ve got deep pockets and a top-notch tech team, this option might not be the best fit.

Option 2: Using a Hyperscaler Service – The Cloudy, Pay-As-You-Go Option

If the DIY route sounds like too much work (and money), you might want to consider renting someone else’s hardware. That’s where hyperscaler services like AWS, Google Cloud, and Microsoft Azure come in. They let you access top-tier GPUs on a pay-as-you-go basis, so you only pay for what you use.

Scalability and Data Flexibility

One of the biggest perks of using a hyperscaler is flexibility. If your data needs are all over the place, big one month and smaller the next, you can scale your resources accordingly. Need more power? Just rent more GPUs. Are your training needs in flux? No problem; scale up or down as needed.
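A quick way to see why elastic capacity matters: compare paying only for the hours you use against keeping enough capacity for your busiest month all year round. The sketch assumes a hypothetical $100 per hour for an 8-GPU instance and a made-up three-month usage pattern; neither figure comes from a real price list.

```python
RATE_PER_HOUR = 100               # hypothetical price for an 8-GPU instance
monthly_hours = [720, 200, 450]   # hypothetical usage: busy, quiet, moderate

# Pay only for the hours actually used each month.
pay_as_you_go = sum(h * RATE_PER_HOUR for h in monthly_hours)
# Keep peak-month capacity running every month, idle or not.
provisioned_for_peak = max(monthly_hours) * RATE_PER_HOUR * len(monthly_hours)

print(f"Pay as you go:        ${pay_as_you_go:,}")
print(f"Provisioned for peak: ${provisioned_for_peak:,}")
```

Here, scaling down in the quieter months avoids paying for 790 idle instance-hours, or $79,000.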

How Much It’ll Cost You

Hyperscalers charge based on the resources you use. Want to rent the equivalent of an 8-GPU H100 cluster? That might cost you anywhere from $50 to $150 per hour, depending on the provider. Over a 30-day month of 24/7 usage (720 hours), you’re looking at $36,000 to $108,000. Not chump change, but it may still be cheaper than buying your own hardware.
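The monthly range follows from multiplying the hourly rate by the 720 hours in a 30-day month of continuous use. A minimal sketch using the article's $50 and $150 endpoints:

```python
HOURS_PER_MONTH = 24 * 30  # 720 hours of 24/7 use in a 30-day month


def monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Compute-only rental cost; excludes storage, networking, and other extras."""
    return hourly_rate * hours


for rate in (50, 150):
    print(f"${rate}/hour -> ${monthly_cost(rate):,.0f}/month")
```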

But wait, there’s more! Hyperscalers might also hit you with charges for data storage, network usage, and other extras like security and backup. These can add up, especially if you’re working with large datasets.

Hyperscalers give you the tools, but you still need someone who can use them. Hiring AI consultants or cloud experts on an hourly basis can get pricey—think $200 to $500 an hour.
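Outside expertise adds directly to the monthly bill. The sketch below assumes a hypothetical 40 consulting hours per month at the article's $200 to $500 rates, on top of the $50 to $150 hourly compute cost for a full month. Storage, networking, and other extras are left as an `extras` parameter defaulting to zero, because the article gives no figure for them.

```python
def monthly_hyperscaler_bill(compute_rate: float, compute_hours: float,
                             consultant_rate: float, consultant_hours: float,
                             extras: float = 0.0) -> float:
    """Compute + outside expertise + storage/network/other extras (all inputs are assumptions)."""
    return compute_rate * compute_hours + consultant_rate * consultant_hours + extras


CONSULTING_HOURS = 40  # hypothetical monthly consulting load

low = monthly_hyperscaler_bill(50, 720, 200, CONSULTING_HOURS)
high = monthly_hyperscaler_bill(150, 720, 500, CONSULTING_HOURS)
print(f"Monthly bill before extras: ${low:,.0f} to ${high:,.0f}")
```

Even a modest consulting load adds $8,000 to $20,000 a month under these assumptions.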

The beauty of hyperscalers is flexibility. You get access to powerful GPUs without the big upfront costs. But beware: the pay-as-you-go model can turn expensive over time, especially if your project drags on longer than expected. And don’t forget, you might still need to bring in outside experts to help manage everything.
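One way to judge when pay-as-you-go "turns expensive" is a break-even estimate: how many months of renting cost as much as buying. This simplified sketch pairs the article's $300,000 to $350,000 cluster estimate with its $36,000 to $108,000 monthly rental range and assumes 24/7 use. It ignores the power, staffing, and hosting costs of owning hardware, which would push break-even later, as well as any resale value.

```python
def breakeven_months(upfront_cost: float, monthly_rental: float) -> float:
    """Months of continuous rental whose total equals the up-front purchase price."""
    return upfront_cost / monthly_rental


earliest = breakeven_months(300_000, 108_000)  # cheaper cluster vs. pricier rental
latest = breakeven_months(350_000, 36_000)     # pricier cluster vs. cheaper rental
print(f"Break-even after roughly {earliest:.1f} to {latest:.1f} months")
```

On these simplified numbers, renting around the clock for longer than about 3 to 10 months costs more than the hardware itself; adding ownership's running costs would move that point later.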

Option 3: Teaming Up with a Local GPU Provider – The Best of Both Worlds

If you’re looking for a sweet spot between the high costs of building your own cluster and the potentially expensive, unpredictable costs of hyperscalers, consider a local GPU provider. These companies specialize in providing the computing power you need for AI projects, without the hassle of managing your own hardware or dealing with sky-high cloud bills.

Balancing Resources and Data Needs

You don’t have to commit to an entire cluster with local GPU providers. Instead, you can rent pre-built instances starting from just one core, allowing you to scale up as your project requires. With costs as low as $3.89/hour, it’s a more affordable option than hyperscalers, offering flexibility without breaking the bank.
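To compare this with the hyperscaler figures, the sketch below assumes, hypothetically, that $3.89 is a per-GPU hourly rate and that pricing scales linearly up to 8 GPUs. Neither assumption is stated in the article, so check actual pricing before relying on the result.

```python
HOURS_PER_MONTH = 720     # 24/7 for a 30-day month
RATE_PER_GPU_HOUR = 3.89  # article's starting price; per-GPU basis is an assumption


def local_monthly_cost(num_gpus: int, rate: float = RATE_PER_GPU_HOUR,
                       hours: float = HOURS_PER_MONTH) -> float:
    """Linear-pricing estimate for a month of continuous use."""
    return num_gpus * rate * hours


for gpus in (1, 8):
    print(f"{gpus} GPU(s): ${local_monthly_cost(gpus):,.2f}/month")
```

Under those assumptions, a month of 8 GPUs comes to about $22,400, below the $36,000 to $108,000 hyperscaler range quoted earlier.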

Features like GreenNode’s dynamic endpoints make it easy to adjust your resources on the fly, ensuring you have exactly what you need when you need it.
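Adjusting resources on the fly generally comes down to a scaling rule: work out how many instances current demand requires, then clamp that number between a minimum and a maximum. The sketch below is a generic, hypothetical rule, not GreenNode's implementation or API; `desired_replicas` and its parameters are illustrative names.

```python
import math


def desired_replicas(pending_requests: int, requests_per_replica: int,
                     min_replicas: int = 1, max_replicas: int = 8) -> int:
    """Replicas needed to absorb current demand, kept within [min_replicas, max_replicas]."""
    if requests_per_replica <= 0:
        raise ValueError("requests_per_replica must be positive")
    needed = math.ceil(pending_requests / requests_per_replica)
    return max(min_replicas, min(max_replicas, needed))


print(desired_replicas(0, 10))      # 1: never scale below the floor
print(desired_replicas(25, 10))     # 3
print(desired_replicas(1_000, 10))  # 8: capped at the ceiling
```

The floor keeps at least one instance ready to serve, while the ceiling caps spending during traffic spikes.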

What Local GPU Providers Bring to the Table

Local GPU cloud providers like GreenNode offer powerful GPUs such as the NVIDIA H100 at rates that are often more budget-friendly than hyperscalers. Their pricing is transparent, so you won’t be hit with surprise bills. For businesses with long-term projects, this can translate into significant cost savings and less stress.

One standout feature of services like GreenNode is the expert support included with their offerings. Unlike hyperscalers, where you might need to bring in outside help, GreenNode provides assistance with setup and optimization as part of their service, at no extra cost. This is especially beneficial for companies without a large in-house AI team.

Local GPU providers offer a balanced solution: powerful GPUs, expert support, and fair pricing. It’s an excellent choice for businesses that need high-performance computing without the high costs and complexities of other options. However, if your project is highly specialized or requires a unique setup, switching providers later can be difficult, and you could lose time reconfiguring. In such cases, committing to a multi-year agreement could help you avoid repeated migrations and keep your setup optimized.

Final Thoughts

So, what’s the best way to train your LLMs? It depends on your needs, budget, and available resources. Building your own GPU cluster gives you control but comes with high costs and complexity. Hyperscalers offer flexibility but can get expensive quickly, especially if you need outside help.

Local GPU providers, like GreenNode, offer a balanced, cost-effective solution with the added bonus of free expert support. If you’re looking for a powerful, budget-friendly way to train your LLMs, local GPU providers might just be your new best friend. They provide the tools, support, and flexibility to take your AI projects to the next level—without breaking the bank.

Ready to elevate your AI projects? Whether you’re building a custom LLM or scaling up your existing models, the right setup can make all the difference. Don’t let high costs or complex setups hold you back. Explore GreenNode’s powerful, budget-friendly GPU solutions today and see how easy it can be to train your LLMs with expert support by your side. Unlock the full potential of your AI with GreenNode now!