Training large models, such as modern Large Language Models (LLMs), on a single bare-metal server is both time-consuming and inefficient in today’s fast-moving AI environment. It can be done, but manually repeating that setup across multiple servers requires a massive investment in manpower and resources, making it a less-than-ideal approach.

In this article, GreenNode shares its experience with multinode setups, specifically using NVIDIA’s H100 GPUs and InfiniBand technology, to address specific use cases for our clients, such as those in the music production industry. We'll also explore the tangible benefits of multinode configurations, how to configure these setups on our AI platform, and why hyperscalers generally avoid offering multinode solutions despite the demand from businesses. Additionally, we’ll dive into the practical applications of deploying NVIDIA H100 GPUs across multiple nodes for optimal performance.

But first, let’s take a closer look at what multinode configurations are and how they operate.

Overview of NVIDIA H100 Architecture

Looking back, the AI landscape saw a major shift when NVIDIA acquired Mellanox, the company behind InfiniBand technology. This acquisition signaled a new era for AI networking, particularly in large-scale distributed training setups. Despite initial concerns over technical complexity and potential instability, InfiniBand has become a staple in high-performance computing (HPC) environments.

As of June 2023, InfiniBand provides interconnections for 63 of the world’s 100 fastest supercomputers, and 200 InfiniBand-connected systems made it onto the TOP500 list of the world’s most powerful supercomputers. This technology enables AI researchers to scale their models across multiple nodes, dramatically reducing training time while maintaining efficiency and performance.

GreenNode is proud to be one of the first NVIDIA Cloud Partners (NCPs) to adopt InfiniBand in our cluster infrastructure, in what is considered the first hyperscale cluster set up in Southeast Asia. By integrating NVIDIA’s H100 GPUs with InfiniBand, we deliver groundbreaking capabilities in distributed AI workloads, allowing large AI models to be trained faster and more efficiently, ensuring both speed and scalability for our clients. How much faster? Read on.

Why Does Multinode Configuration Matter for H100, and Is It Impossible on Other Architectures?

Configuring a multinode setup with NVIDIA’s H100 GPUs brings measurable benefits compared to traditional single-node systems. These benefits include reduced training times, enhanced energy efficiency, and even improvements in model accuracy due to better optimization across distributed nodes.

So, why don’t other architectures offer the same advantages? The key lies in the seamless communication between nodes, which is enabled by technologies like InfiniBand and the NVIDIA Collective Communications Library (NCCL). NCCL plays a critical role in optimizing workload distribution, ensuring that data is efficiently spread across multiple nodes without significant latency. This is something that’s difficult to achieve with other architectures, which often struggle with communication bottlenecks.

Tech Insight:

- Measurable Benefits: Multinode configurations can reduce training times for large models by as much as 30-40%* compared to single-node setups. Energy consumption is also optimized through efficient workload balancing across nodes, which saves resources and reduces operational costs.

- Real-world Benchmarks: In one example, a multinode H100 setup achieved a training time reduction of 35% for a GPT-4 scale model when compared to a single-node setup with the same total GPU count.

| | Other GPU Cloud Providers | GreenNode AI GPU Cloud | GreenNode AI GPU Cloud |
|---|---|---|---|
| Type | Single node training | Single node training | Multinode training |
| Hardware | 8xH100 SXM | 8xH100 SXM | 8xH100 SXM x 2 |
| Total training time | 9.3 hrs | 9.2 hrs | 4.5 hrs |
| Cost per GPU per hour | From $2.99 | $2.99 (sale!) | $3.69 (for multi-node) |
| Total cost (on sale!) | 2.99 * 8 * 9.3 = $222 | 2.99 * 8 * 9.2 = $220 | 3.6 * 16 * 4.5 = $259 |
| Efficiency | Baseline | 1% faster, 1% cheaper | 106% faster, 16.6% more expensive |
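To make the arithmetic behind the table easier to follow, here is a small sketch that recomputes the totals. It treats the quoted hourly rate as a per-GPU price, which is how the table's own formulas use it, and it reuses the 3.6 rate that appears in the multinode total-cost formula. The function names are illustrative only and are not part of any GreenNode tooling.

```python
def total_cost(price_per_gpu_hour: float, gpus: int, hours: float) -> float:
    """Total spend = hourly price per GPU x number of GPUs x wall-clock hours."""
    return price_per_gpu_hour * gpus * hours


def percent_faster(baseline_hours: float, new_hours: float) -> float:
    """Speed gain over the baseline, in percent (2x as fast = 100% faster)."""
    return (baseline_hours / new_hours - 1.0) * 100.0


def percent_more_expensive(baseline_cost: float, new_cost: float) -> float:
    """Extra cost over the baseline, in percent (negative means cheaper)."""
    return (new_cost / baseline_cost - 1.0) * 100.0


# Figures copied from the comparison table.
baseline = total_cost(2.99, 8, 9.3)   # other providers, single node
single = total_cost(2.99, 8, 9.2)     # GreenNode, single node
multi = total_cost(3.6, 16, 4.5)      # GreenNode, two nodes (rate as used in the table's formula)

for name, cost, hours in [
    ("single node", single, 9.2),
    ("multinode", multi, 4.5),
]:
    print(
        f"{name}: ${cost:.0f} total, "
        f"{percent_faster(9.3, hours):.1f}% faster, "
        f"{percent_more_expensive(baseline, cost):+.1f}% cost vs baseline"
    )
```

The trade-off the table describes falls out directly: doubling the GPU count roughly halves the wall-clock time, while the higher multinode rate raises the total bill by a much smaller margin.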

NCCL's Role: NCCL ensures fast, scalable communication between GPUs across nodes, allowing workloads to be split more efficiently. This enables faster convergence and improved scalability, particularly when training large models with billions of parameters.
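As a rough illustration of what this looks like in practice, the sketch below builds the `torchrun` command that each node in a two-node, 8-GPU-per-node PyTorch job might run. The host name, port, and `train.py` script are placeholders rather than GreenNode defaults, and the training script itself is assumed to call `torch.distributed.init_process_group(backend="nccl")` so that NCCL carries the GPU-to-GPU traffic.

```python
import shlex


def world_size(nnodes: int, gpus_per_node: int) -> int:
    """Total number of training processes (one per GPU)."""
    return nnodes * gpus_per_node


def torchrun_command(
    node_rank: int,
    nnodes: int = 2,
    gpus_per_node: int = 8,
    master_addr: str = "node0.example.internal",  # placeholder host name
    master_port: int = 29500,                     # placeholder port
    script: str = "train.py",                     # placeholder training script
) -> str:
    """Build the launch command for one node of a multinode job."""
    args = [
        "torchrun",
        f"--nnodes={nnodes}",
        f"--nproc_per_node={gpus_per_node}",
        f"--node_rank={node_rank}",
        f"--master_addr={master_addr}",
        f"--master_port={master_port}",
        script,
    ]
    return shlex.join(args)


# Every node runs the same command; only --node_rank differs.
for rank in range(2):
    print(f"node {rank}: {torchrun_command(rank)}")
print("world size:", world_size(2, 8))
```

Setting the environment variable `NCCL_DEBUG=INFO` before launching is a common way to have NCCL log which network transport it selected, which helps confirm that traffic is not silently falling back to plain TCP sockets.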

The Challenges of Setting Up Multinode Systems

Installation requires a clear understanding of the network connectivity between nodes and careful tuning of the system configuration for performance.

It is necessary to collaborate with the systems team to monitor the LLM as it runs and to check whether the H100 nodes are connected via InfiniBand or Ethernet TCP. The systems team must also ensure that resources such as memory, networking, and CPU are sufficient to avoid limiting GPU performance.
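One way such a connectivity check might be scripted is sketched below. It assumes Linux hosts with the RDMA drivers loaded, where the kernel lists adapters under `/sys/class/infiniband` and each port exposes `state` and `link_layer` files. The function names are hypothetical, and this is only a first-pass check, not a substitute for the systems team's own tooling.

```python
from pathlib import Path


def _read(path: Path) -> str:
    """Return the stripped contents of a sysfs file, or '' if unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        return ""


def infiniband_ports(sysfs_root: str = "/sys/class/infiniband") -> list:
    """List every RDMA port found under sysfs with its state and link layer."""
    root = Path(sysfs_root)
    ports = []
    if not root.is_dir():
        return ports  # no RDMA devices visible on this host
    for dev in sorted(root.iterdir()):
        ports_dir = dev / "ports"
        if not ports_dir.is_dir():
            continue
        for port in sorted(ports_dir.iterdir()):
            ports.append({
                "device": dev.name,
                "port": port.name,
                "state": _read(port / "state"),            # e.g. "4: ACTIVE"
                "link_layer": _read(port / "link_layer"),  # "InfiniBand" or "Ethernet"
            })
    return ports


def has_active_infiniband(ports: list) -> bool:
    """True if at least one port is up and uses the InfiniBand link layer."""
    return any(
        p["link_layer"] == "InfiniBand" and p["state"].endswith("ACTIVE")
        for p in ports
    )


if __name__ == "__main__":
    found = infiniband_ports()
    for p in found:
        print(p["device"], p["port"], p["state"], p["link_layer"])
    print("Active InfiniBand link found:", has_active_infiniband(found))
```

A port that reports `Ethernet` as its link layer is an RDMA-capable adapter running over Ethernet rather than native InfiniBand, so it is worth distinguishing the two when diagnosing slow multinode runs.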

Network connectivity plays a crucial role here: the links between nodes must offer high speed, low latency, and reliable synchronization. However, the network environment can sometimes be unstable or poorly configured, leading to data loss or slowdowns in the AI model training process.

On the software side, each customer uses different AI frameworks such as FSDP (Fully Sharded Data Parallel), DeepSpeed, and Accelerate, along with fine-tuning techniques like full-parameter fine-tuning, LoRA, and QLoRA. Significant effort is required to ensure that these various frameworks can be integrated with the AI platform and H100 hardware. For instance, EMVN requires DeepSpeed combined with full-parameter fine-tuning, while Gearvn requires FSDP and QLoRA.

Finally, deploying a multi-node setup means establishing a distributed system, which includes keeping data synchronized between nodes and distributing it quickly and accurately. The system also needs to monitor logs so that errors can be handled when a node encounters an issue.

Why have many hyperscale companies not set up this system? H100 GPUs are very expensive, and building a multi-node system requires a significant investment in hardware, software, and human resources. Maintaining and ensuring the stability of the system also requires a team of experts and complex monitoring tools.

Benefits of Multinode

The advantages of multinode setups are clear, especially when training large-scale AI models like GPT-4, BERT, and other LLMs. These setups allow for:

- Reduced Training Time: Multinode configurations can cut training times by up to 40%, depending on the model and data size.

- Improved Model Performance: By distributing workloads across multiple nodes, models can learn faster, leading to better optimization and, in some cases, higher accuracy.

- Energy Efficiency: Balancing workloads efficiently reduces power consumption, which is critical in large-scale AI operations.

Use Case: Accelerating Music Generation with a Multinode H100 Setup

A leading music production company faced significant challenges in training their large language model (LLM) for music generation. The previous single-node configuration resulted in lengthy training times, hindering their ability to iterate on the model and develop new music features quickly.

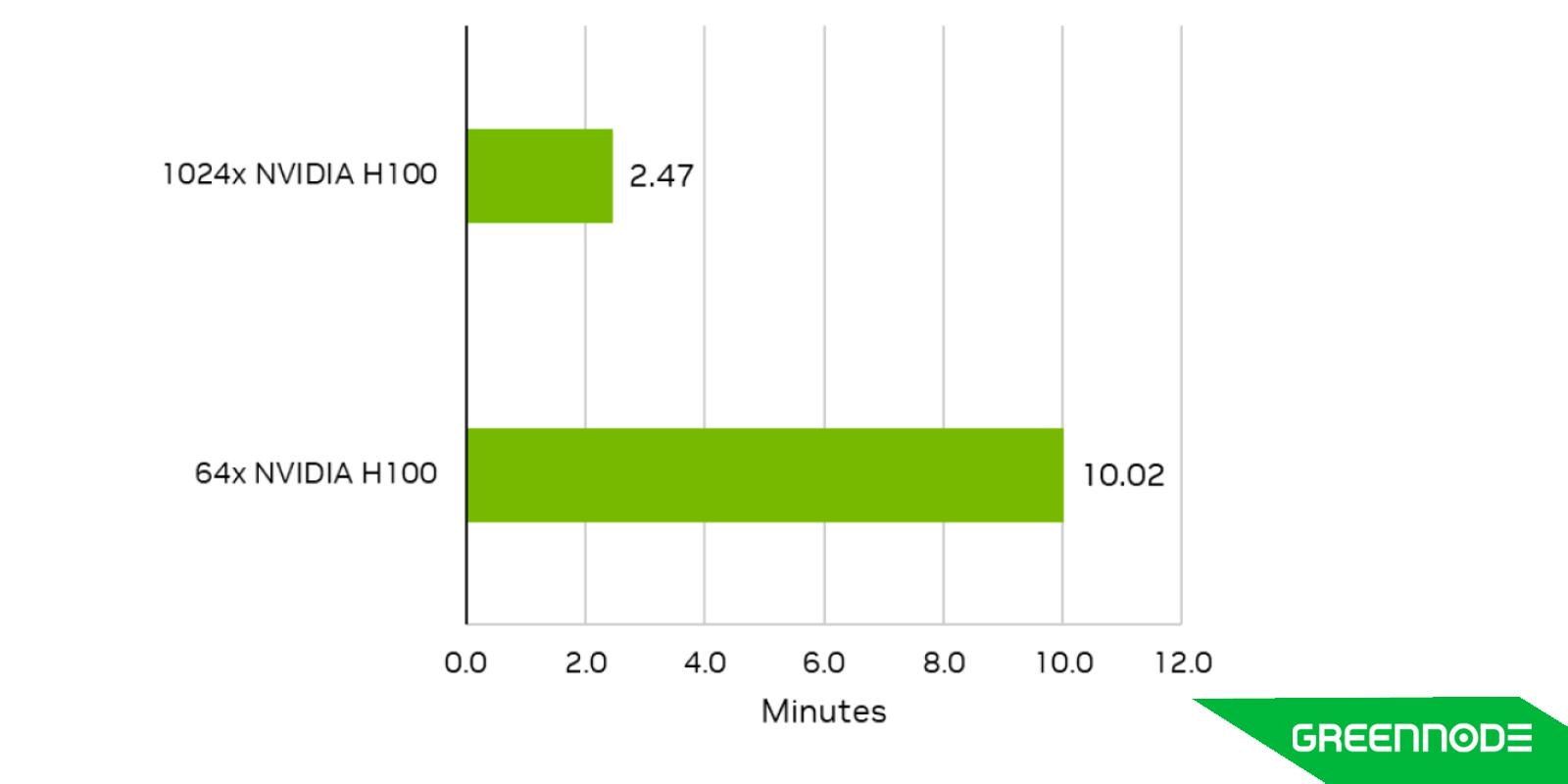

To address these limitations, the company implemented a multinode H100 setup. By distributing the computational load across multiple high-performance nodes, they were able to significantly reduce training time. Specifically, the multinode configuration cut training time by 35% compared to the single-node setup.

This reduction in training time had a profound impact on the company's workflow. Previously, it took one week to train a new version of the LLM. With the multinode H100 setup, training times were reduced to just two days. This accelerated development cycle allowed the company to experiment with different model architectures, hyperparameters, and datasets more rapidly.

As a result of these improvements, the music production company was able to generate music more efficiently and effectively. The faster iteration times enabled them to develop new features, experiment with different styles, and ultimately create more innovative and diverse music. This enhanced their competitiveness in the music industry and helped them better meet the evolving demands of their customers.

Final Thoughts

The convergence of NVIDIA H100 GPUs and InfiniBand technology has opened up a new era for high-performance AI training. By leveraging multinode configurations, businesses can significantly accelerate the process of training large-scale AI models, leading to faster innovation and improved results.

While setting up a multinode system requires careful planning and expertise, the benefits outweigh the challenges. Reduced training times, enhanced energy efficiency, and the potential for improved model performance make multinode H100 setups a compelling solution for tackling complex AI projects.

GreenNode, as a leading NVIDIA Cloud Partner, is committed to providing customers with the resources and expertise to harness the power of multinode configurations. We offer a user-friendly platform for deploying H100 GPUs and InfiniBand technology, allowing businesses to focus on their AI development goals without getting bogged down in infrastructure complexities.

If you're looking to accelerate your AI development journey, consider exploring the possibilities of multinode H100 setups. Contact GreenNode today to discuss your specific needs and see how we can help you unlock the full potential of AI.