Introduction

Large Language Models (LLMs) are pushing the boundaries of artificial intelligence, capable of generating human-like text and understanding complex concepts. However, training these powerhouses requires immense computational resources. This blog delves into the world of distributed training, enabling you to leverage the power of multiple GPUs for efficient LLM training.

We'll guide you through a step-by-step setup process, exploring both single-node and multi-node configurations. Using PyTorch and the Unified Collective Communication (UCC) library, we'll demonstrate how to harness the high-performance capabilities of InfiniBand for efficient communication between GPUs.

Purpose

This blog provides a proof of concept (PoC) for building and training Large Language Models (LLMs) using open-source tools. The setup is designed to be easy to debug and easy to adapt to other frameworks that support distributed training.

The Rise of Large Language Models

Large Language Models (LLMs) have significantly advanced artificial intelligence, especially in natural language processing. Models like GPT-2, GPT-3, and the recent LLaMA2 can understand and generate human-like text with impressive accuracy. These state-of-the-art (SoTA) models are pushing the boundaries of AI capabilities, but they require substantial computational power to train.

The Need for Distributed Training

Training LLMs involves processing vast amounts of data and running complex computations, which demands powerful hardware and efficient communication protocols. Single-GPU setups are often insufficient for handling the scale and complexity of these models. Distributed training across clusters of GPUs, such as NVIDIA’s H100, becomes essential to enable faster training and manage larger datasets effectively.

Why High Performance is Necessary

With the rapid growth and increasing complexity of LLMs, there is a pressing need for newer hardware like the NVIDIA H100 GPUs and high-performance interconnects such as InfiniBand (IB). These technologies provide the bandwidth and low latency that LLM training requires, enabling faster communication between GPUs and more efficient training. The H100 GPUs offer significant improvements in performance, memory bandwidth, and efficiency, making them well suited to the demanding workloads of modern LLMs.

What You Will Learn

In this blog, we will guide you through the setup process for building a simple LLM, specifically GPT-2, on a server. We’ll cover:

- Single-node and multi-node configurations.

- How to configure the environment.

- How to utilize high-speed communication protocols.

- How to implement Distributed Data Parallel (DDP) with 8xH100 GPUs.

Model: GPT-2

We will use GPT-2 for this PoC because it has lower resource requirements compared to larger models like GPT-3 or LLaMA2. This makes it suitable for initial testing and experimentation. However, the setup described here can also be adapted to train larger models using frameworks that are based on PyTorch.

Communication Backends: UCX and NCCL

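UCX (Unified Communication X): UCX is a community-driven, open-source project that provides flexibility and support for various communication protocols. Its extensibility and scalability make it a good fit for PoC work and heterogeneous environments. In this setup, PyTorch uses it through the UCC (Unified Collective Communication) backend, which is built on top of UCX.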

NCCL (NVIDIA Collective Communications Library): NCCL is optimized for NVIDIA GPUs and offers excellent performance in homogeneous environments. It can also be used in this setup for high-performance communication on NVIDIA hardware.

Implementation

In a distributed setup, the process driving each GPU, on every node, must be initialized and synchronized correctly. The scripts in this walkthrough demonstrate how to set up and run distributed training using PyTorch’s Distributed Data Parallel (DDP) and a GPT-2 model on a multi-GPU cluster.

Specifications

Each node in the setup is equipped with the following specifications:

- GPUs: 8 NVIDIA H100 GPUs, each with 80GB HBM3 memory and 700W power consumption.

- OS: Ubuntu 22.04 LTS

- Driver Version: 550.44.15+

- CUDA Version: 12.2.1+

- Docker Version: 26.1.2+

- NVIDIA Toolkit: 1.15.0-1+

- Built with Docker using nvidia-toolkit-cli

Step 1: Preparing the Environment and Code

First, check that Docker can see the GPUs, then clone the repository from GitHub:

Check environment: docker run --rm --gpus all nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04 nvidia-smi
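The same check can be scripted, which is handy when validating several nodes. The sketch below is an illustration only: the helper names `parse_gpu_names` and `list_gpus` are hypothetical, and it assumes `nvidia-smi` is on the PATH wherever it runs (for example, inside the CUDA container above).

```python
import shutil
import subprocess


def parse_gpu_names(smi_output: str) -> list[str]:
    """Turn `nvidia-smi --query-gpu=name --format=csv,noheader` output into a list."""
    return [line.strip() for line in smi_output.splitlines() if line.strip()]


def list_gpus() -> list[str]:
    """Return the GPU names reported by nvidia-smi, or [] if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,  # raise if the driver is installed but not working
    )
    return parse_gpu_names(result.stdout)
```

On a node matching the specifications above, `len(list_gpus())` would be expected to return 8; any other value suggests the driver or container toolkit needs attention before moving on.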

Git clone: https://github.com/egoldne7/ml-exec-instruction

Preparing the Dataset

For the purposes of this proof of concept (PoC), we are using randomly generated data instead of a real dataset. This approach is chosen to focus on the setup and training process without being affected by CPU and I/O workload associated with data loading and preprocessing in a real-world scenario. The random data generation allows us to simulate the training workflow of a Large Language Model (LLM) without the overhead of actual dataset handling.

# Create random input IDs (tokens)
input_ids = torch.randint(0, configuration.vocab_size, (batch_size, sequence_length))

# Create random attention masks
attention_mask = torch.randint(0, 2, (batch_size, sequence_length))

# Create random start and end positions for the answers
start_positions = torch.randint(0, sequence_length, (batch_size,))
end_positions = torch.randint(0, sequence_length, (batch_size,))

With this data we can exercise the full training loop and verify the distributed training configuration without the complexities of real data handling.

Preparing the Model

In a distributed setup, the process driving each GPU, on every node, must be initialized and synchronized correctly. The scripts demonstrate how to set up and run distributed training using PyTorch’s Fully Sharded Data Parallel (FSDP), PyTorch’s Distributed Data Parallel (DDP), and a GPT-2 model for question answering on a multi-GPU cluster.

from transformers import GPT2Config, GPT2ForQuestionAnswering

configuration = GPT2Config()
model = GPT2ForQuestionAnswering(configuration)

The script begins by creating a GPT-2 model with the default configuration and a question-answering head. This model is then used for the training process.

Step 2: Running on a Single Node with 8xH100 GPUs

Converting the Model to FSDP

To train the model in distributed mode using Fully Sharded Data Parallel (FSDP), you need to modify the training script to convert the model. Here’s how you can convert the model to FSDP:

import torch
from torch.distributed.fsdp import FullyShardedDataParallel as FSDP

# Move the model to this process's GPU before wrapping it
device = torch.device("cuda", local_rank)
model = model.to(device)
print(f'Using FSDP on cuda device: {next(model.parameters()).device}')

model = FSDP(model)

For more details on converting a model to FSDP, please refer to this example.
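The snippet above uses `local_rank` without showing where it comes from. When a script is launched with torchrun, each process receives `RANK`, `LOCAL_RANK`, and `WORLD_SIZE` as environment variables. A minimal sketch of reading them follows; the `DistEnv` class and `read_dist_env` helper are hypothetical names, not part of the repository, and the single-process defaults are an assumption made so the script can also run without torchrun.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class DistEnv:
    rank: int         # global rank across all nodes
    local_rank: int   # index of this process (and its GPU) on the node
    world_size: int   # total number of processes in the job


def read_dist_env(env: Optional[Mapping[str, str]] = None) -> DistEnv:
    """Read the variables torchrun exports; fall back to a 1-process job."""
    env = os.environ if env is None else env
    return DistEnv(
        rank=int(env.get("RANK", "0")),
        local_rank=int(env.get("LOCAL_RANK", "0")),
        world_size=int(env.get("WORLD_SIZE", "1")),
    )


if __name__ == "__main__":
    dist_env = read_dist_env()
    # local_rank is the value passed to torch.device("cuda", local_rank)
    print(dist_env)
```

Passing the environment as an argument keeps the helper easy to test without launching a real distributed job.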

Running on a Single Node Using Docker and torchrun

image_name="huggingface/transformers-pytorch-gpu:latest"

docker run \
    --rm -it \
    --cap-add CAP_SYS_PTRACE --shm-size="8g" \
    --ipc host \
    --gpus all \
    --name fsdp_stresstest \
    -v $(pwd)/ml-exec-instruction:/workspace \
    $image_name \
    torchrun --nnodes=1 \
    --nproc_per_node=8 \
    --rdzv_id=1 \
    --rdzv_endpoint=127.0.0.1:29400 \
    --rdzv_backend=c10d \
    /workspace/benchmark/single_node_GPT2ForQA_fakedata.py --backend nccl --epochs 5000 --batch-size 80

Description of the torchrun Command

torchrun: The launcher for distributed training in PyTorch. More details can be found in the PyTorch Elastic documentation.

- --nnodes=1: The number of nodes to use. A value of 1 means a single-node setup.

- --nproc_per_node=8: The number of processes to launch per node. Here it is 8, one process per GPU.

- --rdzv_id=1: The rendezvous ID, which identifies the distributed job.

- --rdzv_endpoint=127.0.0.1:29400: The address and port of the rendezvous server, which the processes use to find each other when the job initializes.

- --rdzv_backend=c10d: The rendezvous backend. c10d is built into PyTorch's distributed package.

- /workspace/benchmark/single_node_GPT2ForQA_fakedata.py: The path to the training script inside the container.

- --backend nccl: A script argument that selects the communication backend for distributed training. NCCL is highly optimized for NVIDIA GPUs.

- --epochs 5000: A script argument that sets the number of training epochs to 5000.

- --batch-size 80: A script argument that sets the batch size to 80.
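One consequence of these settings is worth spelling out. Assuming `--batch-size` is applied per process (the usual convention in data-parallel PyTorch scripts, though the repository's script is not reproduced here to confirm it), the effective global batch is the per-process batch multiplied by the number of processes. A quick sketch of the arithmetic, with a hypothetical helper name:

```python
def global_batch_size(per_process_batch: int, nproc_per_node: int, nnodes: int = 1) -> int:
    """Samples consumed per optimizer step across the whole job."""
    if min(per_process_batch, nproc_per_node, nnodes) < 1:
        raise ValueError("all arguments must be positive")
    return per_process_batch * nproc_per_node * nnodes


# Values from the torchrun command above: 8 processes on 1 node, batch size 80.
print(global_batch_size(80, nproc_per_node=8, nnodes=1))  # 640
```

Keeping this number in mind matters when comparing runs: doubling the node count doubles the global batch unless the per-process batch is halved.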

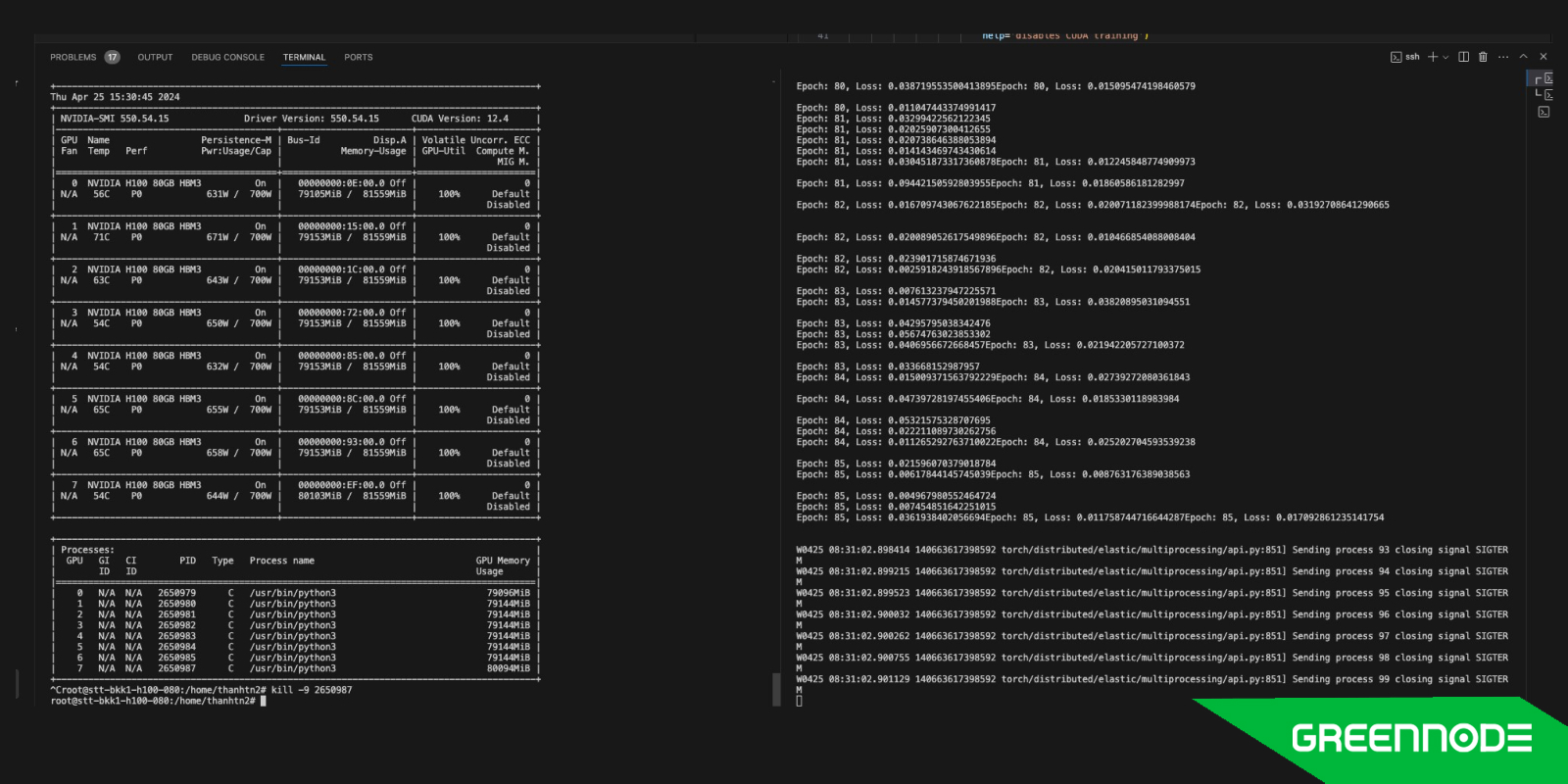

Output Log

The output log should look similar to this:

This log indicates that the training is running across 8 GPUs in a single node. If you kill one process on one GPU, the training process will stop, as indicated by the closing process using the signal SIGTERM.

In the provided log, you can see the training progress and resource utilization across all GPUs. The log also shows the synchronization and communication between GPUs, ensuring that the model training is distributed and efficient.

Step 3: Running a Multi-Node Configuration with 8xH100 GPUs per Node

On node 1:

NODE_ID=0 N_GPU_EACH_NODE=8 N_NODES=2 EPOCHS=100 python3 /tmp/ml-exec-instruction/script/multinode.py

On node 2:

NODE_ID=1 N_GPU_EACH_NODE=8 N_NODES=2 EPOCHS=100 python3 /tmp/ml-exec-instruction/script/multinode.py

Explanation of the environment variables:

- NODE_ID: Unique identifier for each node (0 for Node 1, 1 for Node 2).

- N_GPU_EACH_NODE: Number of GPUs per node (8).

- N_NODES: Total number of nodes (2).

- EPOCHS: Number of epochs for training (100).
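To make the relationship between these variables concrete, here is a sketch of how a launcher could derive the world size and the global ranks owned by each node. This is an assumption about the general approach, not a copy of multinode.py; the `NodeConfig` class and its methods are hypothetical, and the node-by-node rank layout is the common convention rather than something the source states.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class NodeConfig:
    node_id: int
    gpus_per_node: int
    n_nodes: int
    epochs: int

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "NodeConfig":
        cfg = cls(
            node_id=int(env["NODE_ID"]),
            gpus_per_node=int(env["N_GPU_EACH_NODE"]),
            n_nodes=int(env["N_NODES"]),
            epochs=int(env["EPOCHS"]),
        )
        if not 0 <= cfg.node_id < cfg.n_nodes:
            raise ValueError("NODE_ID must be in [0, N_NODES)")
        return cfg

    @property
    def world_size(self) -> int:
        # One process per GPU across every node.
        return self.n_nodes * self.gpus_per_node

    def global_ranks(self) -> range:
        # Assumed layout: ranks are handed out node by node, so with
        # 8 GPUs per node, node 0 owns 0..7 and node 1 owns 8..15.
        start = self.node_id * self.gpus_per_node
        return range(start, start + self.gpus_per_node)


node2 = NodeConfig.from_env(
    {"NODE_ID": "1", "N_GPU_EACH_NODE": "8", "N_NODES": "2", "EPOCHS": "100"}
)
print(node2.world_size, list(node2.global_ranks()))
```

With the values used in this step, the world size is 16 and the second node owns ranks 8 through 15.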

Running the Multi-Node Configuration

When you run the ml-exec-instruction/script/multinode.py script on each node, it creates a Docker container with the following command, which sets up the environment and prepares the nodes to communicate with each other.

docker run \
    --rm \
    --ulimit memlock=-1 \
    --network host \
    --cap-add CAP_SYS_PTRACE --shm-size="8g" \
    --ipc host \
    --gpus '"device=all"' \
    --device=/dev/infiniband \
    --volume /tmp/ml-exec-instruction:/workspace \
    --name ddp_stresstest \
    --detach \
    --env MASTER_ADDR={head_node_ip} \
    --env MASTER_PORT=1234 \
    --env WORLD_SIZE={WORLD_SIZE} \
    egoldne7/pytorch_ucc_transformers:latest \
    tail -f /dev/null

Important Configuration Details

- --ulimit memlock=-1: Removes the limit on how much memory the container may lock. InfiniBand (RDMA) transfers need their buffers pinned in memory, so this setting is important for performance.

- --device=/dev/infiniband: Binds the InfiniBand devices into the container so that high-performance networking is available to the training processes.

- --network host: Uses the host’s network stack, allowing direct communication between nodes.

- --env MASTER_ADDR={head_node_ip}: Sets the IP address of the master (head) node.
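The `{head_node_ip}` and `{WORLD_SIZE}` placeholders in the command are filled in by the launcher. The sketch below shows one way such a command line could be assembled in Python. It is illustrative only: the function name `build_docker_command` is hypothetical, it is not taken from multinode.py, and the IP address in the usage line is a made-up example.

```python
import shlex


def build_docker_command(head_node_ip: str, world_size: int,
                         image: str = "egoldne7/pytorch_ucc_transformers:latest",
                         master_port: int = 1234) -> list[str]:
    """Assemble a `docker run` argument list mirroring the command above."""
    return [
        "docker", "run", "--rm", "--detach",
        "--ulimit", "memlock=-1",        # no cap on locked (pinned) memory
        "--network", "host",             # share the host network stack
        "--cap-add", "CAP_SYS_PTRACE",
        "--shm-size", "8g",
        "--ipc", "host",
        "--gpus", '"device=all"',
        "--device=/dev/infiniband",      # expose the InfiniBand devices
        "--volume", "/tmp/ml-exec-instruction:/workspace",
        "--name", "ddp_stresstest",
        "--env", f"MASTER_ADDR={head_node_ip}",
        "--env", f"MASTER_PORT={master_port}",
        "--env", f"WORLD_SIZE={world_size}",
        image,
        "tail", "-f", "/dev/null",       # keep the container alive
    ]


cmd = build_docker_command("10.0.0.1", world_size=16)
print(shlex.join(cmd))
```

Building the command as a list rather than a single string means it can be handed to `subprocess.run(cmd, check=True)` without any shell quoting concerns.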

Docker Image Configuration

The Docker image egoldne7/pytorch_ucc_transformers:latest is based on the NVIDIA PyTorch image and includes additional configurations to support UCC (Unified Collective Communication) for PyTorch distributed training. This image has been customized to include necessary dependencies and configurations for high-performance distributed training with InfiniBand.

UCC is not included in the default PyTorch builds. To use UCC, you need to build PyTorch with the USE_C10D_UCC flag enabled. However, the simplest way to use PyTorch with UCC is to start from a pre-built image. NVIDIA’s image nvcr.io/nvidia/pytorch:23.06-py3 provides the experimental UCC process group for the distributed backend. You can find the details in the NVIDIA PyTorch Release Notes for 23.06.

To specify the UCC backend in your PyTorch training script, you can add an argument like the one in this example:

import torch.distributed as dist
from torch.nn.parallel import DistributedDataParallel as DDP

parser.add_argument('--backend', type=str, help='Distributed backend',
                    choices=[dist.Backend.GLOO, dist.Backend.NCCL, dist.Backend.MPI, dist.Backend.UCC],
                    default=dist.Backend.UCC)

# ...

model = DDP(model)

When running the script, use the --backend argument to select UCC, which this PoC uses because it is easy to understand and troubleshoot:

python3 /workspace/benchmark/multi_node_GPT2ForQA_fakedata.py --backend ucc

Output Log

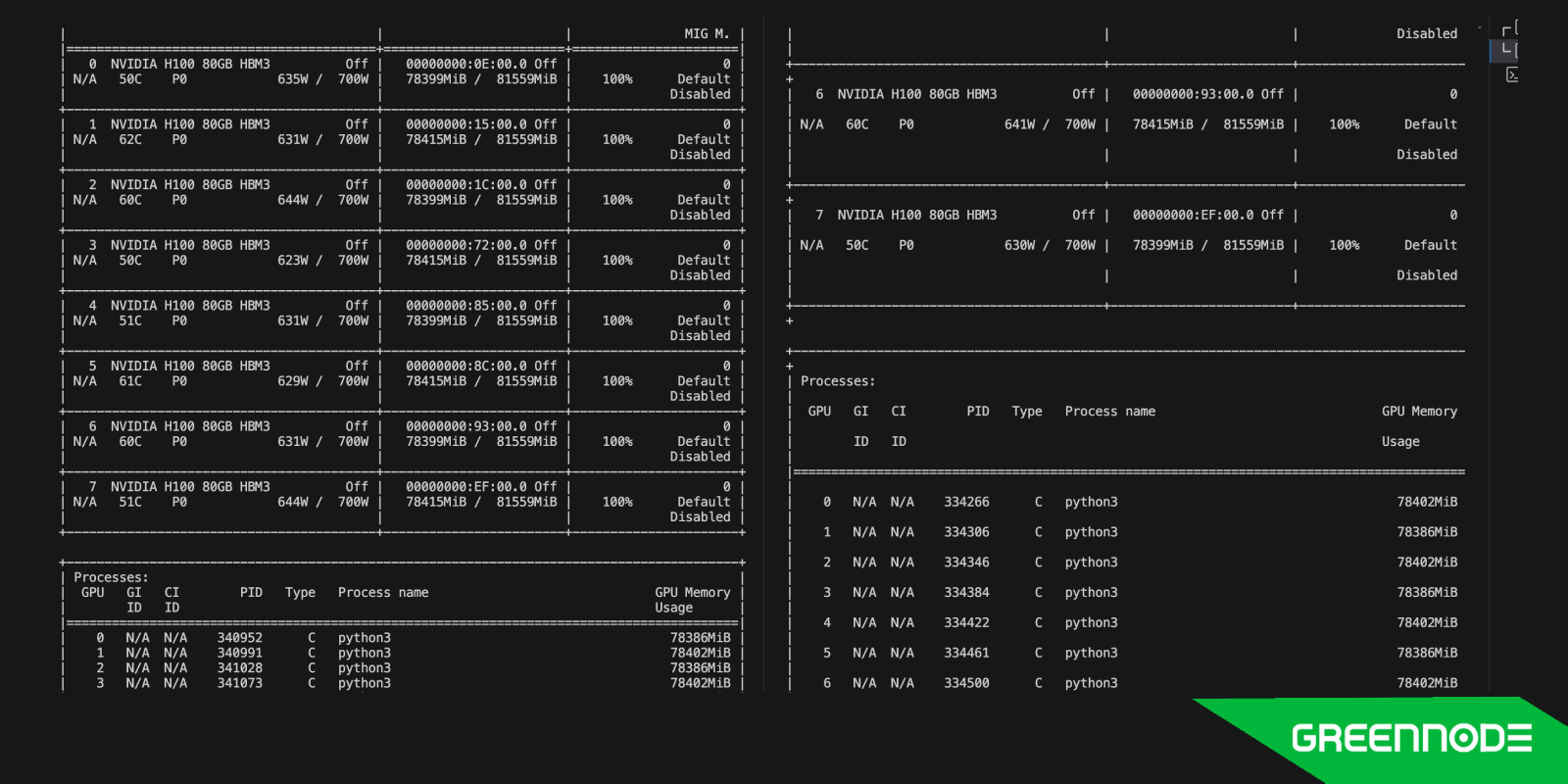

The output log should look similar to this:

This indicates a high-intensity training workload spread across two nodes using a total of 16 H100 GPUs.
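For a rough sense of the gradient traffic behind such a run, the sketch below estimates how many bytes each GPU sends per step under a ring all-reduce, a common algorithm for gradient averaging in which each of N participants sends 2(N-1)/N times the buffer size. Whether the backend actually uses a ring depends on its configuration, so treat this as a back-of-the-envelope model. The figure of 124 million parameters is the commonly quoted approximate size of the smallest GPT-2, and the helper name is hypothetical.

```python
def ring_allreduce_bytes_per_gpu(num_params: int, world_size: int,
                                 bytes_per_param: int = 4) -> float:
    """Bytes each rank sends to all-reduce one full set of gradients."""
    if world_size < 1:
        raise ValueError("world_size must be >= 1")
    buffer_bytes = num_params * bytes_per_param
    # Reduce-scatter then all-gather: (N - 1) / N of the buffer in each phase.
    return 2 * (world_size - 1) / world_size * buffer_bytes


approx_gpt2_params = 124_000_000  # assumption: smallest GPT-2, fp32 gradients
per_step = ring_allreduce_bytes_per_gpu(approx_gpt2_params, world_size=16)
print(f"{per_step / 1e9:.2f} GB sent per GPU per step")
```

Under these assumptions each GPU sends about 0.93 GB per step, which illustrates why the interconnect between nodes matters even for a small model.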

Note: When using another framework based on PyTorch, consider using Fully Sharded Data Parallel (FSDP) instead of DDP for model sharding, and NCCL instead of UCC for better performance. For more details, please view this blog on faster AI training with fewer GPUs, which describes how FSDP works and how it makes training possible with fewer GPUs.

Issues in Distributed Training

While distributed training offers numerous benefits, it also comes with its own set of challenges:

- Communication Overhead: Synchronizing data and gradients across multiple GPUs can introduce significant communication overhead. This overhead can negate the performance gains from parallelism if not managed properly. Techniques such as gradient compression and asynchronous updates are often used to mitigate this issue.

- Synchronization Issues: Ensuring that all GPUs are synchronized at various stages of training is crucial. Desynchronization can lead to inconsistent updates and suboptimal training outcomes. Frameworks often provide built-in mechanisms to handle synchronization, but fine-tuning these settings can be complex.

- Fault Tolerance: In a distributed setup, the failure of a single node can disrupt the entire training process. Implementing robust fault tolerance mechanisms, such as checkpointing and redundant computations, is essential to ensure the reliability and continuity of the training process.
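As an illustration of the checkpointing idea, the sketch below writes training state atomically, so that a crash in the middle of a write never leaves a corrupt file, and resumes from the last saved epoch. It is framework-agnostic and uses `pickle` for brevity; in a PyTorch script, `torch.save` and `torch.load` applied to the model and optimizer `state_dict()` would take its place, and typically only rank 0 would write. All names here are hypothetical.

```python
import os
import pickle
import tempfile
from typing import Any, Optional


def save_checkpoint(path: str, state: dict[str, Any]) -> None:
    """Write to a temp file in the same directory, then rename atomically."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as handle:
            pickle.dump(state, handle)
            handle.flush()
            os.fsync(handle.fileno())
        os.replace(tmp_path, path)  # atomic when both are on one filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path: str) -> Optional[dict[str, Any]]:
    """Return the saved state, or None when no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path, "rb") as handle:
        return pickle.load(handle)


def train(path: str, total_epochs: int) -> int:
    """Toy loop: resume from the checkpoint and return the epoch it started at."""
    state = load_checkpoint(path) or {"epoch": 0}
    start_epoch = state["epoch"]
    for epoch in range(start_epoch, total_epochs):
        # ... one epoch of training would run here ...
        save_checkpoint(path, {"epoch": epoch + 1})
    return start_epoch
```

If a job that was asked for 100 epochs dies after epoch 40, calling `train` again with the same path picks up at epoch 40 instead of starting over.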

Conclusion

Distributed training in an H100 cluster using Docker provides the computational power necessary to train large language models efficiently. By understanding the setup process, implementing robust training loops, and addressing common challenges, you can harness the full potential of distributed training to advance your deep learning projects. As the field continues to evolve, staying updated with the latest techniques and tools will be key to maintaining a competitive edge.

Next Steps

To further enhance your understanding and skills in distributed training, consider exploring the following topics:

- Implement Code with Slurm: Learn how to use Slurm, a workload manager, to schedule and manage jobs on large compute clusters. This includes writing Slurm scripts to handle job submission, resource allocation, and job dependencies, which are crucial for efficient use of HPC resources.

- Benchmark Transformer Engine in H100 Cluster: Integrate the Transformer Engine into your H100 cluster environment. This involves optimizing transformer models for performance and scalability, leveraging the advanced capabilities of H100 GPUs for faster and more efficient training of large language models.

- Benchmark Performance of Different LLMs on H100: Conduct performance benchmarks of various large language models (LLMs) on H100 GPUs using both the PyTorch-based Hugging Face Transformers library and the NVIDIA NeMo framework. This benchmarking helps in understanding the strengths and weaknesses of different models and frameworks, guiding the selection of the best tools for specific tasks.