Explore the importance of the document retrieval system in enhancing the efficiency of RAG for LLMs.

Retrieval Augmented Generation (RAG) is a powerful technique that combines the strengths of large language models (LLMs) with an external knowledge base to provide accurate and contextually relevant responses. Central to the effectiveness of a RAG system is the document retrieval system, which maintains a vast database of information that the LLM can query to answer specific questions.

But how is a document retrieval system built, and how are documents ingested into a RAG system?

After reading this blog, we hope you will understand GreenNode’s RAG and document retrieval in more detail.

What is Retrieval-Augmented Generation (RAG)?

Definition and Architecture

Imagine telling a powerful Large Language Model (LLM) what you need and giving it the relevant facts it hasn’t seen yet. That’s the core idea behind RAG: rather than letting the model rely solely on its pre-training (which can be outdated or incomplete), you retrieve current, domain-specific data and add it to the model’s prompt before it generates its answer.

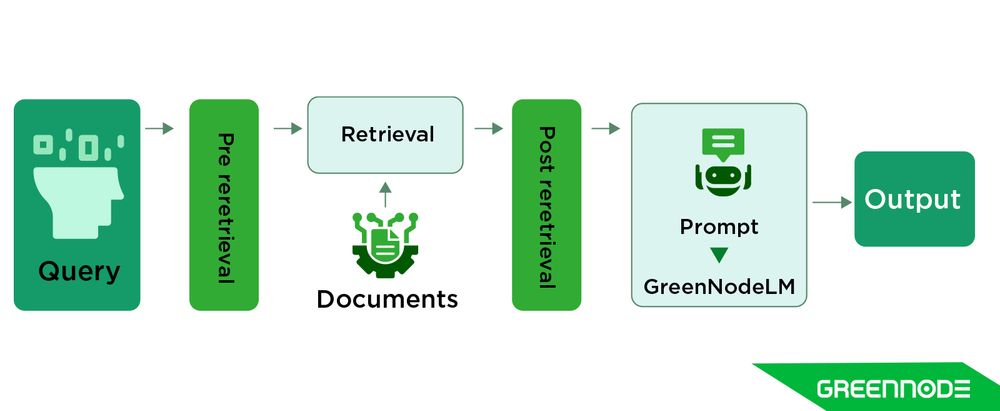

In practice, a typical RAG workflow looks like this:

- Retriever: Given your query, the system searches a knowledge base (documents, vector embeddings, company data) and pulls relevant context.

- Augmentation: That retrieved data is appended to the model’s prompt or fed into it in a structured fashion (e.g., “Here’s the user query + these supporting facts”).

- Generator: The LLM uses both its internal knowledge and the fresh context to produce a response that is more accurate, domain-aware, and up-to-date.
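To make the three steps concrete, here is a minimal Python sketch of the retrieve, augment, and generate flow. It is not GreenNode’s implementation: the prompt template, the word-overlap `toy_retrieve` function, and the `echo_generate` stub (which returns the prompt instead of calling an LLM) are hypothetical placeholders for a real vector store and LLM client.

```python
import re
from typing import Callable, List

def build_augmented_prompt(query: str, passages: List[str]) -> str:
    """Augmentation: place numbered retrieved passages ahead of the user's question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

def rag_answer(
    query: str,
    retrieve: Callable[[str, int], List[str]],
    generate: Callable[[str], str],
    k: int = 3,
) -> str:
    passages = retrieve(query, k)                     # 1. Retriever
    prompt = build_augmented_prompt(query, passages)  # 2. Augmentation
    return generate(prompt)                           # 3. Generator

# --- Hypothetical stand-ins for a real knowledge base, retriever, and LLM ---
KNOWLEDGE_BASE = [
    "Berlin has a population of about 3.7 million.",
    "Paris hosts the Louvre museum.",
]

def toy_retrieve(query: str, k: int) -> List[str]:
    """Rank passages by the number of words they share with the query."""
    q_words = set(re.findall(r"\w+", query.lower()))
    return sorted(
        KNOWLEDGE_BASE,
        key=lambda p: len(q_words & set(re.findall(r"\w+", p.lower()))),
        reverse=True,
    )[:k]

def echo_generate(prompt: str) -> str:
    """Placeholder generator: a real system would send the prompt to an LLM."""
    return prompt

print(rag_answer("What is the population of Berlin?", toy_retrieve, echo_generate, k=1))
```

Keeping the retriever and generator as plain callables means either end can be swapped (a different vector store, a different LLM) without touching the orchestration.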

This two-part architecture (retrieval + generation) allows you to mitigate the “knowledge cutoff” of standard LLMs and anchor responses in reliable data. That’s why many enterprises now consider RAG a go-to pattern for production AI.

Benefits of RAG for Enterprise AI

RAG offers several compelling advantages for organizations looking to deploy AI in real-world settings:

- Improved relevance and accuracy: By incorporating fresh and domain-specific information, RAG helps reduce hallucinations (i.e., made-up or incorrect answers) and makes responses more grounded.

- Up-to-date knowledge without full retraining: Instead of retraining the entire model whenever new data appears, you can simply update the retrieval database, saving time, cost, and compute.

- Enterprise control and traceability: Because retrieved documents can be cited or traced, enterprises gain better auditability and control, which is important in regulated domains (finance, healthcare, legal).

- Customization for specific domains: RAG enables the LLM to “plug in” your internal documentation, manuals, or proprietary knowledge, meaning it becomes tailored to your business context quickly.

- Cost-effective enhancements: Instead of scaling up to ever-larger LLMs, RAG lets you pair a smaller model with a strong retrieval component, which can yield better ROI.

In short, when you implement RAG well, you turn your generic model into a domain-aware assistant with speed, relevance, and trust.

What are the Key Roles of Document Retrieval in RAG Performance?

What is Document Retrieval?

Document retrieval is the process of finding and ranking the most relevant pieces of information (documents, text passages, or records) from a large collection in response to a user’s query.

In Retrieval-Augmented Generation (RAG) systems, document retrieval plays a crucial role. It’s the “retriever” part of the retriever–generator architecture, fetching supporting context from a knowledge base before the large language model (LLM) generates an answer.

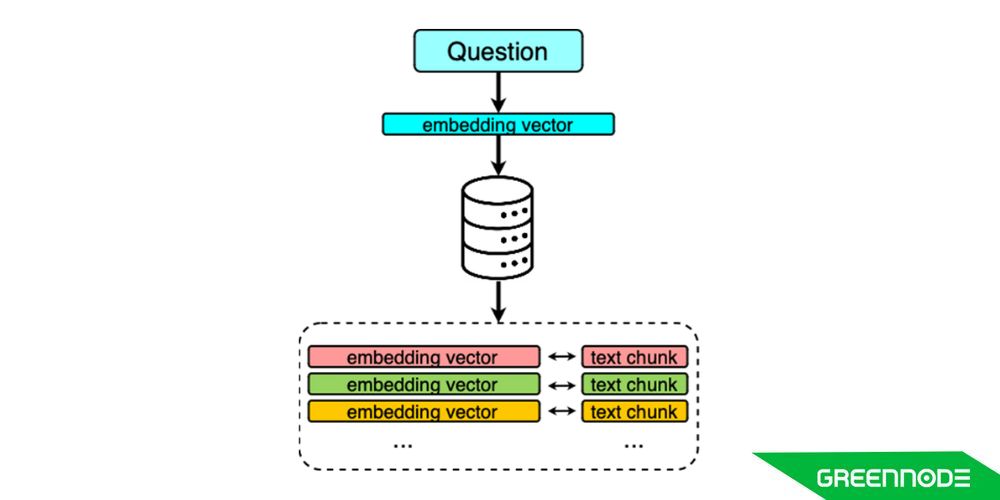

To retrieve context for a particular question, we first encode (embed) the question into an embedding vector. With that query vector in hand, we retrieve its nearest neighbors among the document vectors. The advantage is that we don’t need to match specific words or phrases: we only need the underlying idea, and the search returns semantically similar passages.
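The nearest-neighbor step can be sketched in plain Python. This sketch assumes cosine similarity as the closeness measure and uses made-up 3-dimensional vectors in place of real embeddings. It is a brute-force scan over every vector; production systems typically use an approximate nearest-neighbor index (for example HNSW) to search millions of vectors quickly.

```python
import math
from typing import List, Sequence, Tuple

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_neighbors(
    query_vec: Sequence[float], doc_vecs: List[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Brute-force k-nearest-neighbor search: score every document, keep the top k."""
    scored = [(i, cosine_similarity(query_vec, d)) for i, d in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical toy "embeddings"; a real embedding model outputs
# hundreds or thousands of dimensions per passage.
doc_vecs = [
    [0.9, 0.1, 0.0],  # passage 0
    [0.1, 0.9, 0.0],  # passage 1
    [0.0, 0.2, 0.9],  # passage 2
]
query_vec = [0.8, 0.2, 0.1]  # the embedded question
print(nearest_neighbors(query_vec, doc_vecs, k=2))
```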

Why is Document Retrieval Important?

“RAG is a hybrid model that merges the best of both worlds: the deep understanding of language from LLMs and the vast knowledge encoded in external data sources. By integrating these two elements, RAG-powered chatbots can deliver precise, relevant, and context-aware responses to users.”

From “Revolutionizing AI Conversations with GreenNode's Advanced RAG Technology”

Every LLM’s baseline knowledge has gaps, because it is limited to its training data. Ask an LLM about the latest trends or news and it will have no idea what you’re talking about; at worst, it will craft a response out of hallucinations. These problems stem from several key issues:

- Training data is out of date.

- The model tends to extrapolate when facts aren’t available (known as hallucination).

- Training LLMs is very expensive. According to Hackernoon, training OpenAI’s GPT-3 (175 billion parameters) cost over $4.6 million, while Bloomberg’s BloombergGPT (50 billion parameters) cost $2.7 million.

But LLMs are powerful at generating answers when the relevant knowledge is supplied in their context, even without task-specific training (often described as zero-shot learning). By using embedding models to build an embedding database, we can exploit semantic similarity to retrieve the relevant or custom knowledge we want the LLM to answer from. Building a document embedding database also typically costs far less than fine-tuning an LLM.

Key Roles of Document Retrieval in RAG Performance

If the Large Language Model (LLM) is the brain of a RAG system, then document retrieval is its bloodstream, continuously feeding it fresh, relevant information to think with. The quality of this retrieval layer directly determines whether your model generates answers that are accurate, insightful, and trustworthy, or generic and misleading.

At its core, document retrieval serves three critical roles in shaping RAG performance:

Providing Relevant Context at the Right Time: The retrieval system ensures that only the most relevant documents are surfaced for a given query. This relevance is measured not just by keyword matching but by semantic similarity, identifying passages that align conceptually with what the user is asking. When done well, retrieval reduces hallucination rates and grounds the model’s output in verified knowledge.

Acting as a Dynamic Knowledge Bridge: Unlike a static LLM, a retriever constantly pulls from a live or updated knowledge base such as enterprise documents, technical manuals, or proprietary datasets. This means your model can stay current without retraining. The retrieval layer effectively extends the LLM’s memory, giving it access to your organization’s collective intelligence in real time.

Balancing Recall and Precision for Accuracy: High recall ensures the retriever finds all potentially relevant documents; high precision ensures it delivers only what matters. A well-optimized retriever balances both; too much recall floods the model with noise, while too much precision risks missing key facts. Fine-tuning this tradeoff is what separates a production-grade RAG system from a prototype.
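The recall/precision trade-off can be measured with precision@k and recall@k. The sketch below assumes you have a labeled set of relevant document IDs for a query (the IDs here are made up): precision@k is the fraction of the top k results that are relevant, and recall@k is the fraction of all relevant documents that appear in the top k.

```python
from typing import List, Set, Tuple

def precision_recall_at_k(retrieved: List[str], relevant: Set[str], k: int) -> Tuple[float, float]:
    """precision@k = hits / k; recall@k = hits / |relevant|."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    precision = hits / k
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

retrieved = ["d3", "d1", "d7", "d2", "d9"]  # ranked retriever output (hypothetical)
relevant = {"d1", "d2", "d4"}                # ground-truth labels (hypothetical)
for k in (2, 5):
    p, r = precision_recall_at_k(retrieved, relevant, k)
    print(f"k={k}: precision={p:.2f}, recall={r:.2f}")
```

Widening k from 2 to 5 raises recall from 0.33 to 0.67 but lowers precision from 0.50 to 0.40, which is exactly the tension a well-tuned retriever has to balance.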

When retrieval falters, even the best LLM will stumble. Irrelevant or low-quality documents confuse the model, increase token costs, and degrade accuracy. But when retrieval is well-engineered, leveraging embedding models, vector databases, and re-ranking algorithms, it becomes the hidden force that elevates RAG from “smart autocomplete” to a domain-aware reasoning system.

Advanced Document Retrieval Methods

The performance of any RAG system depends on how effectively it retrieves and ranks the most relevant information for the model to reason with. Traditional keyword-based methods are fast but limited in context understanding, while newer dense retrieval methods capture meaning more deeply, though often at a higher computational cost.

To bridge these trade-offs, modern RAG pipelines use advanced retrieval architectures that blend linguistic precision with semantic intelligence. Let’s break down the most common and effective methods powering today’s best systems.

Dense Retrieval with Embeddings

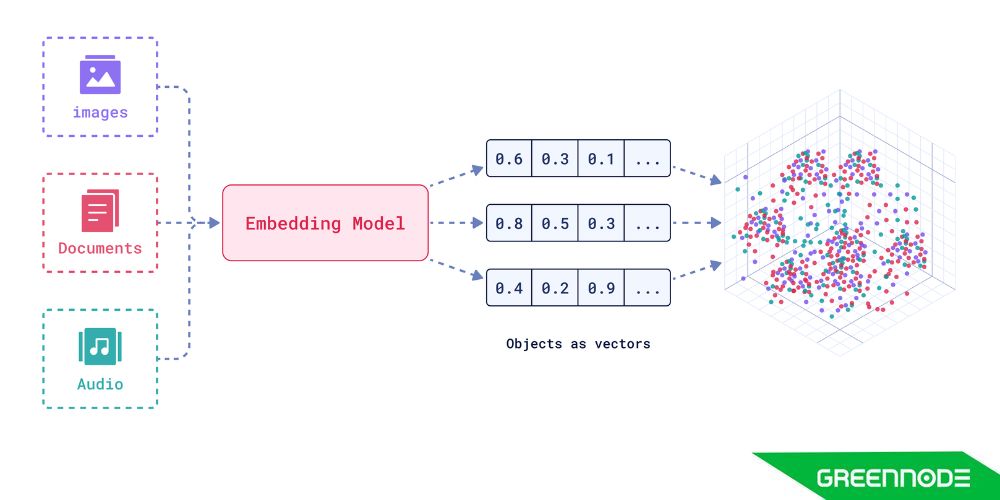

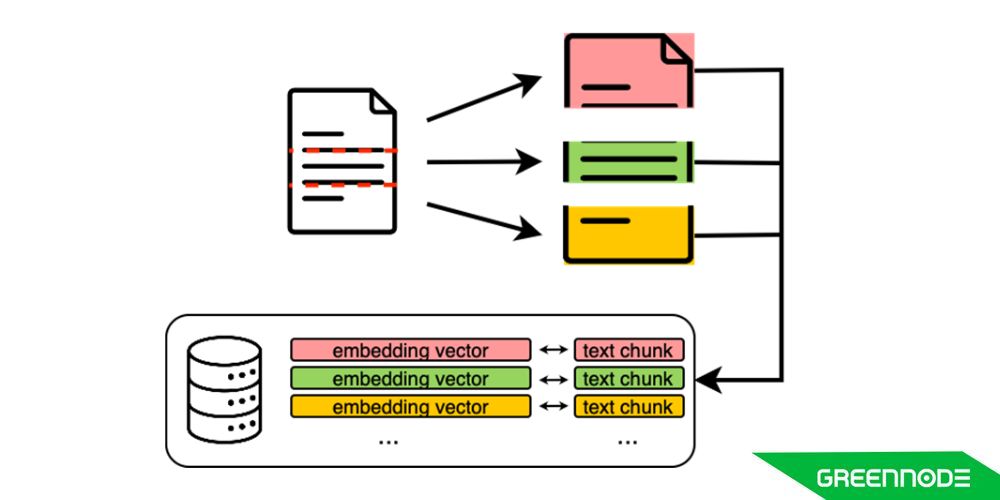

In GreenNode’s RAG system, documents are split into chunks, which you can think of as paragraphs. Each chunk is then converted into a multidimensional vector. In this blog, we treat the embedding model as a black box and focus on the main concepts of the document retrieval system.
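A simple way to produce paragraph-sized chunks is to split on blank lines and merge neighboring paragraphs up to a size limit. This is a hypothetical sketch, not GreenNode’s actual chunking logic; the `max_chars` limit of 500 characters is an arbitrary assumption, and real pipelines often measure chunk size in tokens and add overlap between chunks.

```python
from typing import List

def chunk_by_paragraph(text: str, max_chars: int = 500) -> List[str]:
    """Split on blank lines, then merge consecutive paragraphs up to max_chars."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: List[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate  # paragraph still fits in the current chunk
        else:
            if current:
                chunks.append(current)
            current = para  # start a new chunk; an oversized paragraph stays whole
    if current:
        chunks.append(current)
    return chunks

sample = "First paragraph.\n\nSecond paragraph.\n\nThird paragraph."
print(chunk_by_paragraph(sample, max_chars=40))
```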

Multidimensional vectors are another way to represent documents, one that captures their meaning. Why does this work? In natural language processing, a sentence embedding is a numeric representation of a sentence as a vector of real numbers that encodes meaningful semantic information.

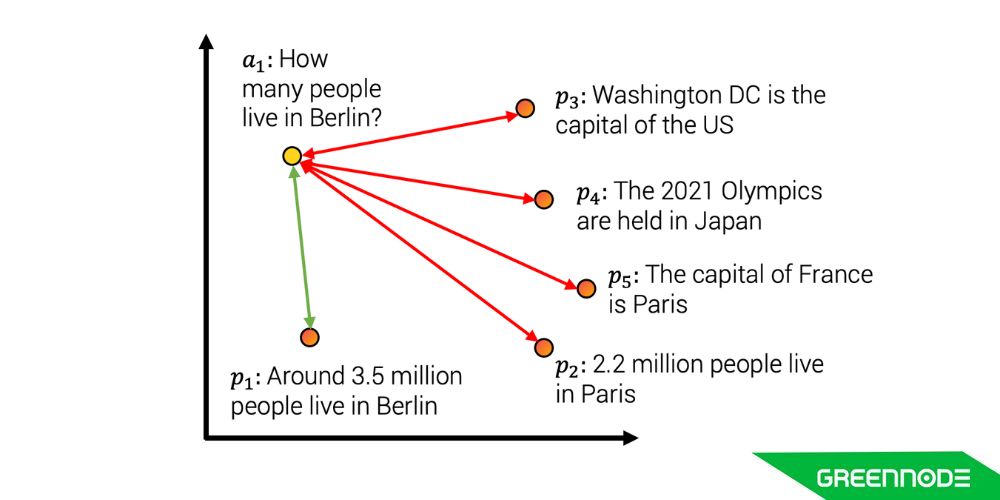

The image above illustrates the semantic concept behind embeddings: a1 is a vector representing a question about the population of Berlin, and p1, p2, p3, etc. are vectors representing passages in the information database. A document embedding system must ensure that a query vector lies closer to relevant passage vectors than to irrelevant ones. In the figure above, the distance between question a1 and paragraph p1 is smaller than the distances to the other paragraphs.
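One common way to make “closer” precise is cosine similarity; the figure may be drawn with a different distance, so treat this choice as an assumption. For question vector a1 and passage vectors p1, p2, p3, the condition the figure illustrates is that p1 scores highest, and for unit-length vectors this is the same as p1 being the nearest in Euclidean distance:

```latex
\operatorname{sim}(a_1, p_j) = \frac{a_1 \cdot p_j}{\lVert a_1 \rVert \, \lVert p_j \rVert},
\qquad
\operatorname{sim}(a_1, p_1) > \operatorname{sim}(a_1, p_j) \ \text{for all } j \neq 1

\text{If } \lVert a_1 \rVert = \lVert p_j \rVert = 1:\quad
\lVert a_1 - p_j \rVert^2 = 2 - 2\,\operatorname{sim}(a_1, p_j)
```

Because the squared distance decreases as the similarity increases, the passage with the highest cosine similarity is also the nearest one when vectors are normalized.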

Computers don’t understand the meaning of text; all information in a computer is represented as bits and bytes, signals and numbers. That is why, in GreenNode’s RAG system, each document is split into chunks and every chunk is processed into an embedding vector.
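The ingestion path (chunk, embed, store, then search) can be sketched end to end as below. Everything here is an illustrative assumption: `toy_embed` is a hypothetical hashed bag-of-words function standing in for a real embedding model (it only captures word overlap, not meaning), and `InMemoryVectorIndex` is a minimal stand-in for a vector database.

```python
import hashlib
import math
import re
from typing import List, Tuple

DIM = 256  # toy dimensionality; real embedding models use hundreds to thousands

def toy_embed(text: str, dim: int = DIM) -> List[float]:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized.
    It reflects shared words only, not true semantic meaning."""
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

class InMemoryVectorIndex:
    """Minimal vector store: keeps (chunk, vector) pairs and searches by similarity."""

    def __init__(self, embed=toy_embed):
        self.embed = embed
        self.chunks: List[str] = []
        self.vectors: List[List[float]] = []

    def ingest(self, chunks: List[str]) -> None:
        for chunk in chunks:
            self.chunks.append(chunk)
            self.vectors.append(self.embed(chunk))

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        q = self.embed(query)
        # Vectors are unit-length, so the dot product equals cosine similarity.
        scored = [(c, sum(a * b for a, b in zip(q, v))) for c, v in zip(self.chunks, self.vectors)]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]

index = InMemoryVectorIndex()
index.ingest([
    "Berlin has a population of about 3.7 million people.",
    "The Louvre in Paris is the world's most visited museum.",
])
print(index.search("population of Berlin", k=1))
```

Swapping `toy_embed` for a real embedding model, and the list-based store for a vector database, leaves the ingest-then-search shape unchanged.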

Sparse Retrieval (BM25, TF-IDF)

Sparse retrieval methods like TF-IDF (Term Frequency–Inverse Document Frequency) and BM25 form the foundation of classical search. They represent documents as collections of discrete words and rank results based on keyword frequency and document relevance.
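For reference, here is a compact Okapi BM25 scorer in Python. It is a sketch under common defaults (k1 = 1.5, b = 0.75, the non-negative IDF variant ln(1 + (N - n + 0.5) / (n + 0.5)), and simple lowercase word tokenization); production search engines differ in tokenization, IDF details, and parameter values. The example documents are made up.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def tokenize(text: str) -> List[str]:
    return re.findall(r"\w+", text.lower())

class BM25:
    """Okapi BM25: sums IDF-weighted, length-normalized term frequencies per query term."""

    def __init__(self, docs: List[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        doc_tokens = [tokenize(d) for d in docs]
        self.tfs = [Counter(toks) for toks in doc_tokens]
        self.doc_lens = [len(toks) for toks in doc_tokens]
        self.N = len(docs)
        self.avgdl = sum(self.doc_lens) / self.N
        df = Counter(term for toks in doc_tokens for term in set(toks))  # document frequency
        self.idf = {t: math.log(1 + (self.N - n + 0.5) / (n + 0.5)) for t, n in df.items()}

    def score(self, query: str, i: int) -> float:
        tf, dl = self.tfs[i], self.doc_lens[i]
        total = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue  # terms absent from the document contribute nothing
            f = tf[term]
            denom = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / denom
        return total

    def rank(self, query: str) -> List[Tuple[int, float]]:
        scores = [(i, self.score(query, i)) for i in range(self.N)]
        return sorted(scores, key=lambda p: p[1], reverse=True)

docs = [
    "The tenant must pay rent before the fifth day of each month.",
    "The landlord is responsible for structural repairs.",
    "Late rent incurs a penalty of five percent.",
]
bm25 = BM25(docs)
print(bm25.rank("late rent penalty"))
```

Because the score is a sum of per-term contributions, you can print each term’s share to explain exactly why a document ranked where it did.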

Simple and computationally efficient, sparse retrieval excels with structured or domain-specific text such as legal documents, research abstracts, or code. Its main advantages are precision and explainability: you can easily trace why a document was retrieved from its keyword overlap and scoring.

However, sparse retrieval struggles when users phrase queries differently from the document’s wording, which is a common issue in natural language search. This is where dense and hybrid approaches come in.

Hybrid Retrieval Approaches

Hybrid retrieval combines the semantic depth of dense embeddings with the lexical precision of traditional sparse methods. In this setup:

- Dense retrieval uses embedding models (like OpenAI’s text-embedding-3-large or GreenMind Embeddings) to represent text as high-dimensional vectors capturing semantic meaning.

- Sparse retrieval provides keyword-based grounding that ensures important exact matches aren’t overlooked.

By merging the results from both systems, often via score fusion or weighted ranking, hybrid retrieval aims for the best of both worlds:

- High recall from semantic search, reducing the chance that relevant passages are missed.

- High precision from keyword matching, filtering out irrelevant noise.
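Weighted score fusion can be sketched as follows. Because dense and sparse scores live on different scales (cosine similarities versus unbounded BM25 scores), this sketch assumes min-max normalization before mixing, with a hypothetical weight `alpha`; the document IDs and scores are made up. Reciprocal rank fusion, which combines ranks instead of raw scores, is a common alternative.

```python
from typing import Dict, List, Tuple

def min_max(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale scores to [0, 1] so different retrievers become comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}

def hybrid_fuse(
    dense: Dict[str, float], sparse: Dict[str, float], alpha: float = 0.5
) -> List[Tuple[str, float]]:
    """Weighted fusion: alpha * dense + (1 - alpha) * sparse, after normalization.
    A document missing from one result list gets 0 from that retriever."""
    d, s = min_max(dense), min_max(sparse)
    fused = {doc: alpha * d.get(doc, 0.0) + (1 - alpha) * s.get(doc, 0.0) for doc in set(d) | set(s)}
    return sorted(fused.items(), key=lambda p: p[1], reverse=True)

dense_scores = {"doc_a": 0.82, "doc_b": 0.79, "doc_c": 0.40}  # e.g., cosine similarities
sparse_scores = {"doc_b": 12.1, "doc_d": 9.7, "doc_a": 1.3}   # e.g., BM25 scores
for doc, score in hybrid_fuse(dense_scores, sparse_scores, alpha=0.5):
    print(doc, round(score, 3))
```

Here doc_b comes out on top even though the dense retriever alone prefers doc_a, because both retrievers rank doc_b highly; doc_d, found only by keyword matching, still makes the list.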

In production-grade RAG systems, hybrid retrieval is widely regarded as a best practice. It balances accuracy and cost-effectiveness, especially with enterprise-scale datasets that contain both structured records and unstructured text.

Multi-Stage Retrieval

Even the most advanced retrievers often return a mix of relevant and partially relevant documents. That’s where multi-stage retrieval comes in.

In this architecture, an initial retriever (dense or hybrid) first fetches a broad set of candidate documents, typically the top 50–100 matches. These are then re-ranked by a more powerful cross-encoder model that evaluates the query–document pair jointly to score semantic relevance with higher precision.

Cross-encoders, such as MiniLM-based rerankers, perform deeper relevance modeling by attending to the query and document tokens simultaneously. Though computationally heavier, this second pass helps ensure that only the most relevant documents reach the generation stage, leading to:

- Reduced hallucination and irrelevant outputs.

- Lower token consumption during LLM prompting.

- More factual and context-grounded responses.
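The two-stage flow can be sketched with pluggable scoring functions. The sketch is illustrative: `toy_first_stage` (query-word overlap) and `toy_rerank` (overlap divided by passage length) are hypothetical stand-ins for a dense or hybrid retriever and a cross-encoder, and `n_candidates` and `top_n` are assumed parameter names.

```python
import re
from typing import Callable, List, Set, Tuple

def retrieve_then_rerank(
    query: str,
    docs: List[str],
    first_stage: Callable[[str, List[str]], List[float]],
    rerank_scorer: Callable[[List[Tuple[str, str]]], List[float]],
    n_candidates: int = 50,
    top_n: int = 5,
) -> List[Tuple[str, float]]:
    # Stage 1: cheap scoring of every document; keep the best n_candidates.
    first_scores = first_stage(query, docs)
    candidates = sorted(range(len(docs)), key=lambda i: first_scores[i], reverse=True)[:n_candidates]
    # Stage 2: expensive joint scoring of (query, document) pairs, candidates only.
    pairs = [(query, docs[i]) for i in candidates]
    rerank_scores = rerank_scorer(pairs)
    reranked = sorted(zip((docs[i] for i in candidates), rerank_scores), key=lambda p: p[1], reverse=True)
    return reranked[:top_n]

def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))

def toy_first_stage(query: str, docs: List[str]) -> List[float]:
    """Hypothetical first-stage retriever: number of query words in each document."""
    q = _tokens(query)
    return [float(len(q & _tokens(d))) for d in docs]

def toy_rerank(pairs: List[Tuple[str, str]]) -> List[float]:
    """Hypothetical cross-encoder stand-in: favors passages focused on the query terms."""
    return [len(_tokens(q) & _tokens(d)) / max(len(_tokens(d)), 1) for q, d in pairs]

docs = [
    "Rent is due monthly. Parking, utilities, pets, and guests are covered elsewhere in this long lease document.",
    "Rent is due on the first day.",
    "The gym is open daily.",
]
for doc, score in retrieve_then_rerank("When is rent due?", docs, toy_first_stage, toy_rerank, n_candidates=2, top_n=2):
    print(round(score, 3), doc)
```

The first stage ties the two rent passages and drops the gym passage; the reranker then promotes the shorter, more focused passage. With the sentence-transformers library, the `predict` method of a `CrossEncoder` (for example one loaded from `cross-encoder/ms-marco-MiniLM-L-6-v2`) accepts a list of (query, passage) pairs and returns scores, so it should be usable as `rerank_scorer`.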

Conclusion

Document retrieval plays a crucial role in GreenNode’s RAG system because it maintains the data and contextual knowledge that LLMs need to understand questions and return accurate answers.

Document retrieval in GreenNode’s advanced RAG solution represents a significant leap forward in the realm of conversational AI. By prioritizing accuracy, context-awareness, and user engagement, we're not just creating chatbots; we're creating digital conversationalists: knowledgeable, efficient, and highly intuitive. Join us on this exciting journey as we continue to redefine the boundaries of AI communications.