The GreenNode AI Platform update introduces key enhancements to improve AI model training, inference speed, and deployment efficiency. This release focuses on performance optimization, expanded framework support, enhanced security, and better integration tools, making AI workflows more seamless and cost-effective.

With faster model loading, flexible authentication options, and broader framework compatibility, users can now deploy and manage models with greater ease. Additionally, new tools and SDK updates streamline the integration of GreenNode AI into existing applications, reducing complexity for developers and enterprises.

These improvements reinforce GreenNode AI Platform’s commitment to delivering high-performance, scalable, and accessible AI infrastructure, empowering users to train, deploy, and optimize AI solutions with greater speed and efficiency.

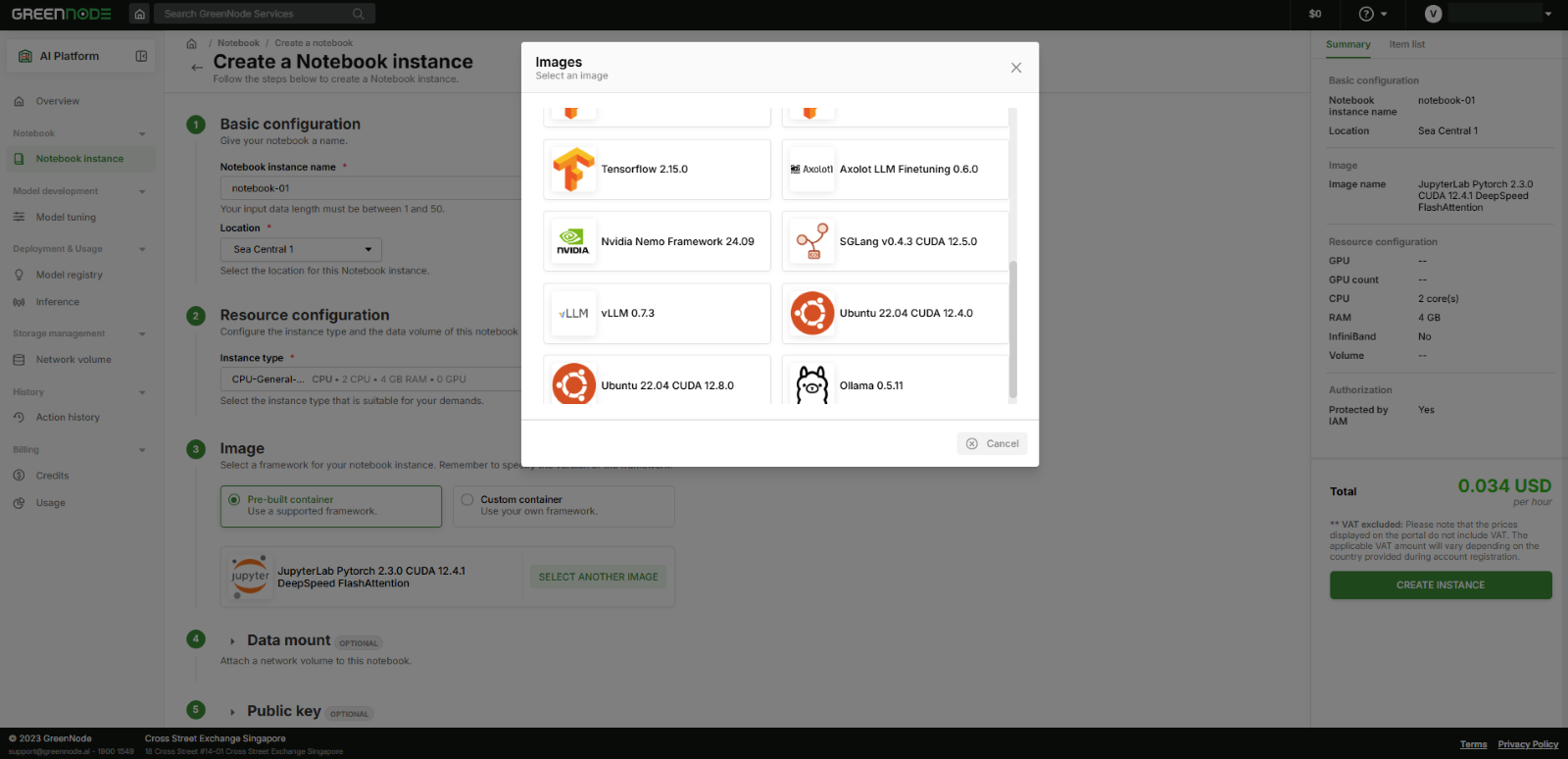

Expanded Pre-Configured Notebook Images for Faster Deployment

Setting up an AI development environment can be a significant bottleneck: developers often spend 3-5 hours installing frameworks, managing dependencies, and ensuring CUDA compatibility before they can even start training a model. Manually installing libraries, tuning GPU settings, and troubleshooting errors slows down research and adds unnecessary complexity.

To remove these roadblocks, GreenNode AI Platform now offers pre-configured Notebook images with the latest AI frameworks and dependencies. With just one click, users can instantly launch an optimized AI/ML environment—no more tedious setup, no more wasted hours. This means researchers and developers can focus on building and innovating, rather than managing infrastructure.

Whether working with PyTorch, TensorFlow, or specialized frameworks, GreenNode AI Platform provides multiple ready-to-use environments, ensuring flexibility and efficiency for any AI/ML project.

- PyTorch 2.5.0, 2.4.0, 2.3.0, 2.2.0, 2.1.0, 1.13.0

- JupyterLab PyTorch 2.3.0 CUDA 12.4.1 with DeepSpeed & FlashAttention

- TensorFlow 2.17.0, 2.16.1, 2.15.0

- Axolotl LLM Finetuning 0.6.0

- NVIDIA NeMo Framework 24.09

- vLLM 0.7.3 (for high-speed inference)

- SGLang v0.4.3 CUDA 12.5.0

- Ollama 0.5.11

- Ubuntu 22.04 with CUDA 12.4.0 & 12.8.0

By providing a comprehensive selection of pre-installed AI frameworks, optimized CUDA environments, and flexible OS configurations, GreenNode AI Platform empowers AI developers to work faster, scale efficiently, and push the boundaries of innovation. Learn more: https://aiplatform.console.greennode.ai/.
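Once a notebook has launched from one of these images, a quick sanity check can confirm that the framework and CUDA build match what was selected. The snippet below is a minimal sketch, assuming a PyTorch image; the helper name `describe_environment` is illustrative and not part of any GreenNode tooling. It degrades gracefully when PyTorch is absent, so it can also be run on the plain Ubuntu images.

```python
import importlib.util
import platform


def describe_environment() -> dict:
    """Collect basic facts about the current notebook environment."""
    info = {
        "python": platform.python_version(),
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "torch_version": None,
        "cuda_available": False,
        "cuda_version": None,
        "gpu_names": [],
    }
    if info["torch_installed"]:
        import torch  # imported lazily so the script also runs without PyTorch

        info["torch_version"] = torch.__version__
        info["cuda_available"] = torch.cuda.is_available()
        info["cuda_version"] = torch.version.cuda  # None on CPU-only builds
        if info["cuda_available"]:
            info["gpu_names"] = [
                torch.cuda.get_device_name(i)
                for i in range(torch.cuda.device_count())
            ]
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

If `cuda_available` is false on a GPU instance, the mismatch is usually between the image's CUDA build and the driver, which is exactly the class of problem the pre-configured images are meant to avoid.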

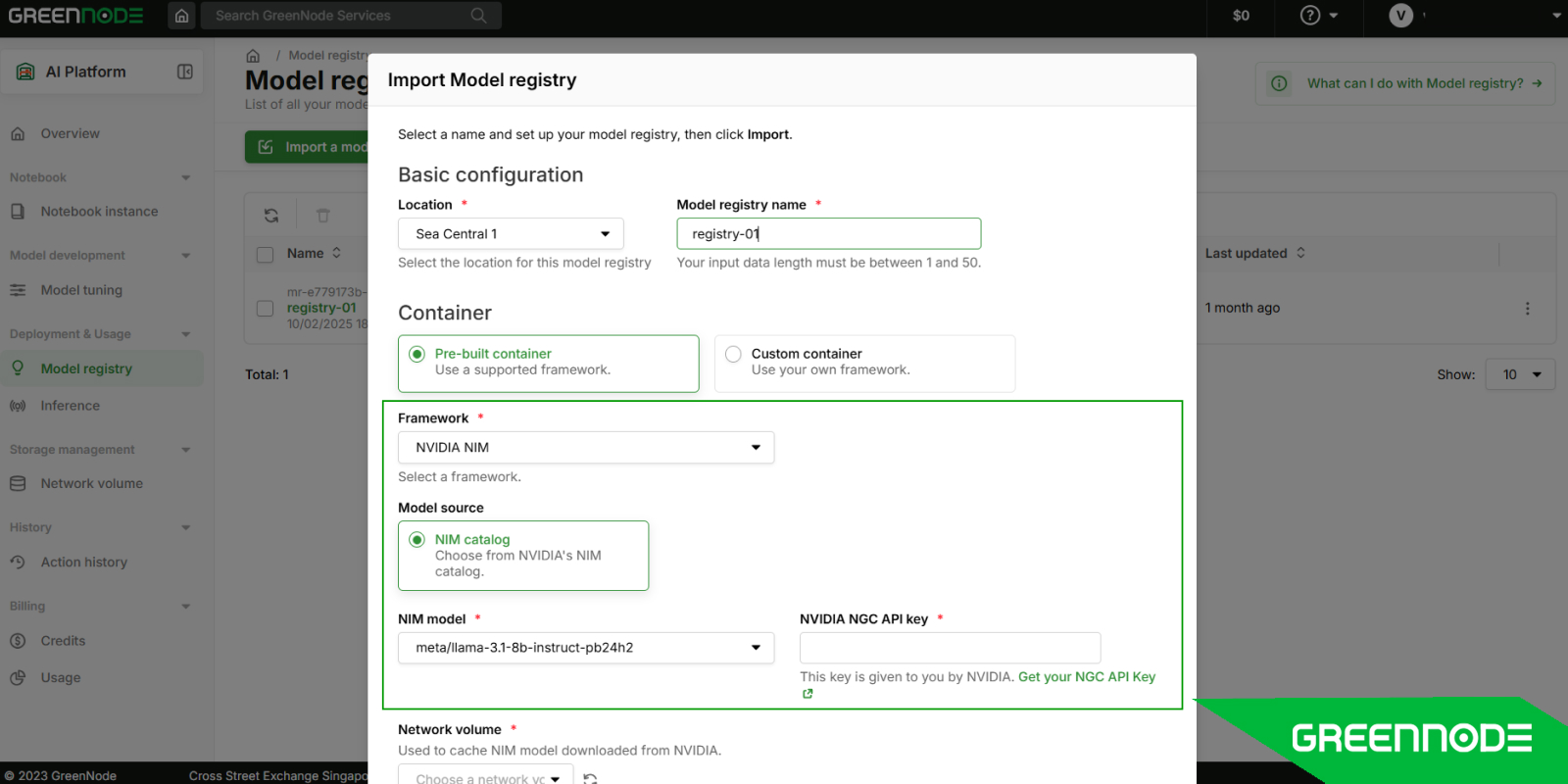

Seamless Model Registry Integration with Extended NIM and vLLM Framework

Effortless NVIDIA NIM deployment on GreenNode AI Platform

NVIDIA Inference Microservices (NIM) is a suite of containerized, optimized AI inference microservices designed to streamline the deployment of large AI models on NVIDIA GPUs. Traditionally, businesses using NIM have had to build and manage their own infrastructure, a complex and time-consuming task that can increase costs and cause delays. Challenges include manual infrastructure setup, complex configurations, and the need for specialized expertise, all of which can hinder innovation and efficiency.

To address these challenges, GreenNode AI Platform now offers seamless integration with NVIDIA NIM, enabling users to deploy NIM models effortlessly using their NVIDIA accounts. This integration adheres to NVIDIA’s best practices, ensuring scalability and high efficiency.

NVIDIA NIM is widely adopted by enterprises aiming to accelerate GPU workloads with high efficiency. With GreenNode, deploying a NIM model takes three steps: select the NIM framework, choose a model, and deploy it. This streamlined approach eliminates manual setup complexities and allows AI teams to focus on core model development.

The impact of this integration is substantial—deploying NIM models on GreenNode reduces deployment time by up to 60% compared to traditional setups. By removing infrastructure bottlenecks, GreenNode enables businesses to bring AI-driven solutions to market faster and more cost-effectively.

Accelerating model inference with vLLM on GreenNode AI Platform

AI developers require high-speed inference solutions to efficiently serve large language models (LLMs). However, traditional inference methods often struggle with performance bottlenecks, memory inefficiencies, and slow startup times—especially when handling large-scale models. Previously, vLLM, an optimized open-source framework designed for high-speed LLM inference, was not supported on GreenNode AI. This limitation forced developers to rely on less efficient alternatives, increasing resource consumption and operational complexity.

To address this, GreenNode AI now fully integrates vLLM, providing faster inference speeds, optimized memory usage, and improved overall efficiency. This enhancement ensures that developers can deploy and serve large models with minimal latency and reduced infrastructure costs. Try this now: https://aiplatform.console.greennode.ai/.
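As an illustration of what calling a vLLM-backed deployment might look like, the sketch below builds a request for vLLM's OpenAI-compatible `/v1/completions` route using only the standard library. It assumes the deployed endpoint exposes that route; `ENDPOINT_URL` and `MODEL_NAME` are placeholders, not real GreenNode values, and should be replaced with whatever the console shows for your deployment.

```python
import json
import urllib.request

# Placeholders (assumptions): substitute the URL and model name of your own endpoint.
ENDPOINT_URL = "https://example.invalid/v1/completions"
MODEL_NAME = "my-deployed-model"


def build_completion_request(prompt, max_tokens=64, token=None):
    """Build a POST request in the OpenAI-compatible completions format."""
    payload = {
        "model": MODEL_NAME,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": 0.0,
    }
    headers = {"Content-Type": "application/json"}
    if token:
        # Only needed when the endpoint has token authentication enabled.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def complete(prompt, **kwargs):
    """Send the request and return the generated text of the first choice."""
    request = build_completion_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["text"]
```

Building the request separately from sending it keeps the payload easy to inspect and test before any traffic reaches the endpoint.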

Model Deployment Options: Performance Comparison

| Model Source | Initial Load Time | Subsequent Load Time (With Caching) | Best For |
|---|---|---|---|
| Hugging Face | 15–20 min (e.g., LLaMA 3.3-70B, ~150GB) | 1–2 min | Accessing the latest open-source AI models |
| GreenNode Model Catalog | 1–2 min (Pre-cached) | Near-instant | Running pre-optimized models with minimal setup |
| Network Volume | Varies (user-managed) | Optimized for storage efficiency | Custom model deployments with flexible storage options |
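To put the Hugging Face row in perspective, the short calculation below derives the average transfer rate implied by loading roughly 150 GB in 15 to 20 minutes. It is a back-of-the-envelope sketch that assumes the whole load time is spent moving data and uses decimal units (1 GB = 1000 MB); real loads also include time to place weights on the GPU.

```python
def implied_throughput_mb_s(size_gb: float, minutes: float) -> float:
    """Average rate in MB/s needed to move size_gb within the given minutes."""
    return size_gb * 1000 / (minutes * 60)


if __name__ == "__main__":
    size_gb = 150  # approximate model size quoted in the table
    for minutes in (20, 15):
        rate = implied_throughput_mb_s(size_gb, minutes)
        print(f"{size_gb} GB in {minutes} min -> about {rate:.0f} MB/s")
```

The result, roughly 125 to 167 MB/s, shows why a pre-cached catalog or a local cache shortens startup so sharply: the bulk of the first load is simply moving bytes.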

With vLLM support, GreenNode AI drastically reduces inference latency and enhances scalability, allowing AI teams to focus on innovation rather than infrastructure management.

Upgrade Your AI Project with GreenNode’s Inference Logs and Authentication Mechanisms

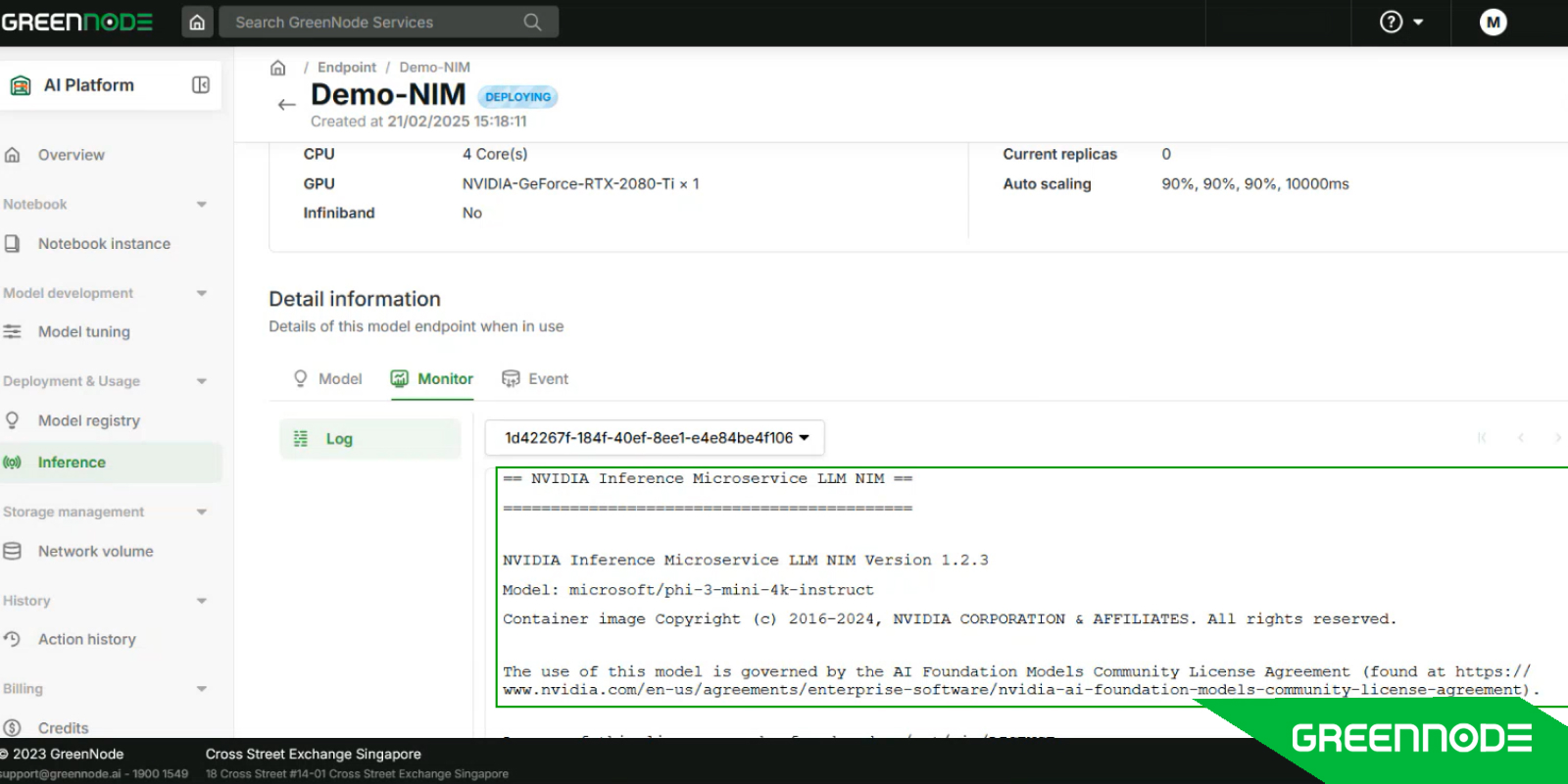

Enhanced log console for better AI inference monitoring

Monitoring AI inference activity is crucial for maintaining performance, security, and efficiency. However, many users struggle to track inference requests, troubleshoot errors, and prevent unauthorized access due to the lack of real-time logging. A report by Coralogix indicates that developers spend approximately 75% of their time on debugging, equating to about 1,500 hours annually. Without proper logging, identifying issues can take hours or even days, leading to delays in deployment and increased operational costs.

To address these challenges, GreenNode AI Platform now provides a built-in Log Console for inference monitoring. This feature automatically records all inference activities, allowing users to review logs in real time for troubleshooting, security auditing, and performance optimization. By offering detailed insights into inference requests, GreenNode helps users detect unauthorized access attempts, identify inefficient resource usage, and quickly debug failing models.
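The kind of triage this enables can be sketched with a small script that summarizes exported log lines. The log format here is purely hypothetical (one JSON object per line with `status` and `latency_ms` fields); the actual Log Console output may look different, so treat the field names as assumptions.

```python
import json
from collections import Counter

# Hypothetical sample records; the real Log Console format may differ.
SAMPLE_LOG_LINES = [
    '{"path": "/v1/completions", "status": 200, "latency_ms": 120}',
    '{"path": "/v1/completions", "status": 401, "latency_ms": 5}',
    '{"path": "/v1/completions", "status": 200, "latency_ms": 340}',
    '{"path": "/v1/completions", "status": 500, "latency_ms": 30}',
]


def summarize(lines):
    """Count requests, rejected requests, and server errors in JSON log lines."""
    statuses = Counter()
    latencies = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines in the export
        record = json.loads(line)
        statuses[record["status"]] += 1
        latencies.append(record["latency_ms"])
    return {
        "total": sum(statuses.values()),
        # 401/403 responses hint at unauthorized access attempts.
        "unauthorized": statuses[401] + statuses[403],
        # 5xx responses point at failing models or infrastructure.
        "server_errors": sum(n for code, n in statuses.items() if code >= 500),
        "max_latency_ms": max(latencies, default=None),
    }


if __name__ == "__main__":
    print(summarize(SAMPLE_LOG_LINES))
```

A summary like this separates the three concerns named above: rejected requests for security auditing, server errors for debugging, and latency outliers for performance work.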

Security advancement with inference endpoint authorization

In the realm of AI development, securing inference endpoints is paramount, as unauthorized access can lead to data breaches and compromised model integrity. A recent assessment by the UK's Department for Science, Innovation, and Technology highlighted specific vulnerabilities at each stage of the AI lifecycle, emphasizing the critical need for robust security measures.

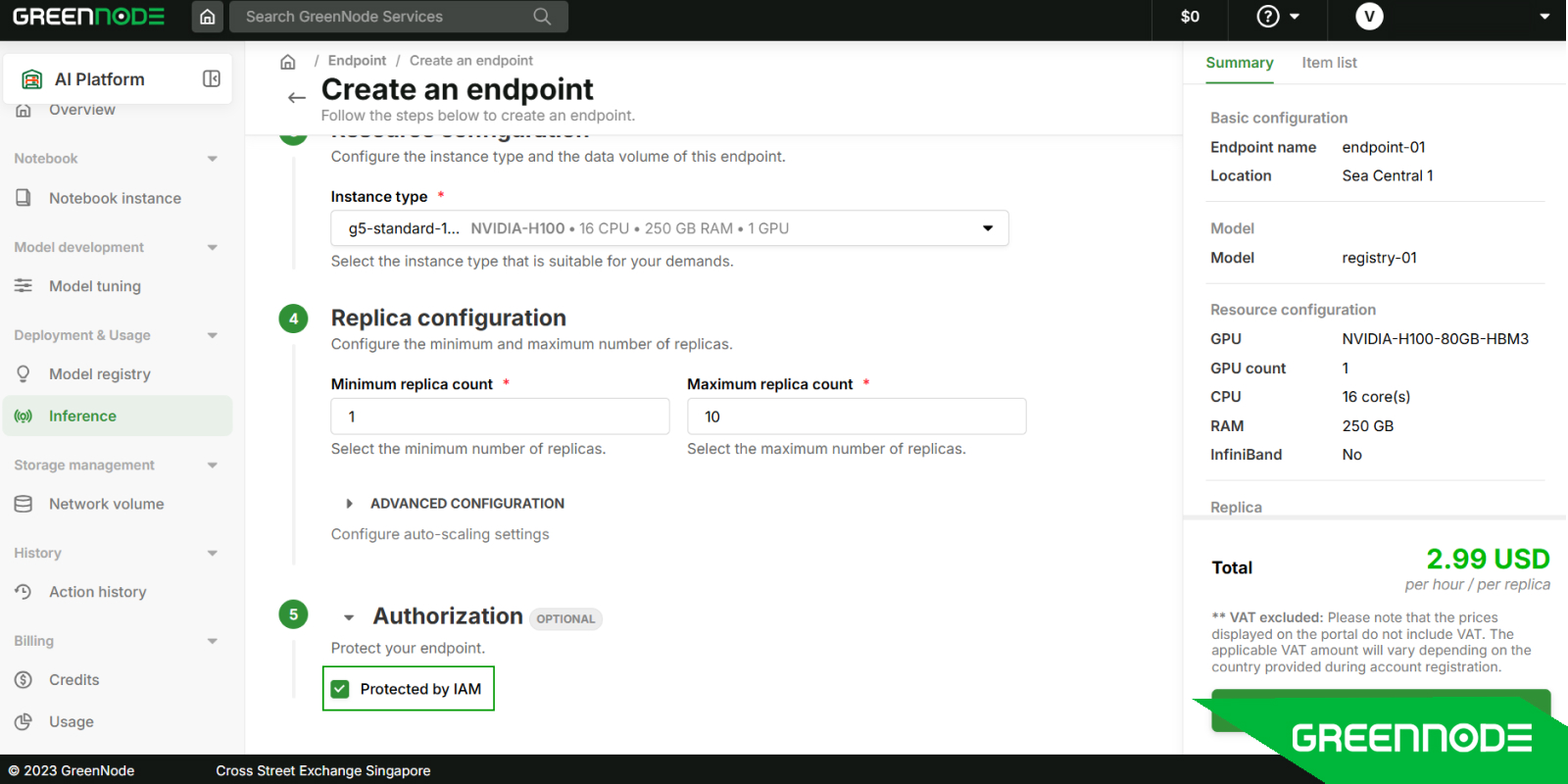

To address these challenges, the GreenNode AI Platform now offers flexible authentication mechanisms for inference endpoints. Users can select from the following methods:

- Username & Password Authentication: A traditional approach requiring users to provide valid credentials before accessing the endpoint.

- Token-Based Authentication: Enables secure and stateless authentication, improving both scalability and security. Currently, users can authenticate using 30-minute IAM Access Tokens. Coming soon: to further enhance security and usability, GreenNode is developing an API Key Management system that will let users generate permanent API keys for seamless and persistent authentication.

- No Authentication (Public Access): Allows open access to the endpoint without any authentication, suitable for non-sensitive applications.

This flexibility enables users to tailor security measures to their specific needs, balancing accessibility and protection. The importance of such adaptable authentication methods is underscored by the increasing prevalence of AI-powered cyber threats, including phishing and ransomware attacks.
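From the client side, the three options differ mainly in the `Authorization` header sent with each request. The sketch below is illustrative only: it assumes username and password are sent as standard HTTP Basic credentials and tokens as Bearer tokens, which the platform documentation should confirm. `build_auth_headers` and `TokenCache` are hypothetical helpers, and the `fetch_token` callable stands in for whatever call issues an IAM Access Token.

```python
import base64
import time


def build_auth_headers(method, username=None, password=None, token=None):
    """Return request headers for 'none', 'basic', or 'token' authentication."""
    if method == "none":
        return {}
    if method == "basic":
        if username is None or password is None:
            raise ValueError("basic authentication needs a username and password")
        raw = f"{username}:{password}".encode("utf-8")
        return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}
    if method == "token":
        if not token:
            raise ValueError("token authentication needs a token")
        return {"Authorization": f"Bearer {token}"}
    raise ValueError(f"unknown authentication method: {method}")


class TokenCache:
    """Reuse a short-lived token and refresh it shortly before it expires."""

    def __init__(self, fetch_token, lifetime_s=30 * 60, margin_s=60, clock=time.monotonic):
        self._fetch_token = fetch_token  # hypothetical callable returning a fresh token
        self._lifetime_s = lifetime_s    # 30 minutes, per the token lifetime above
        self._margin_s = margin_s        # refresh this many seconds early
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self):
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._margin_s:
            self._token = self._fetch_token()
            self._expires_at = now + self._lifetime_s
        return self._token
```

Because the tokens expire after 30 minutes, a long-running client benefits from a cache like this: it avoids requesting a token per call while still refreshing before requests start failing.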

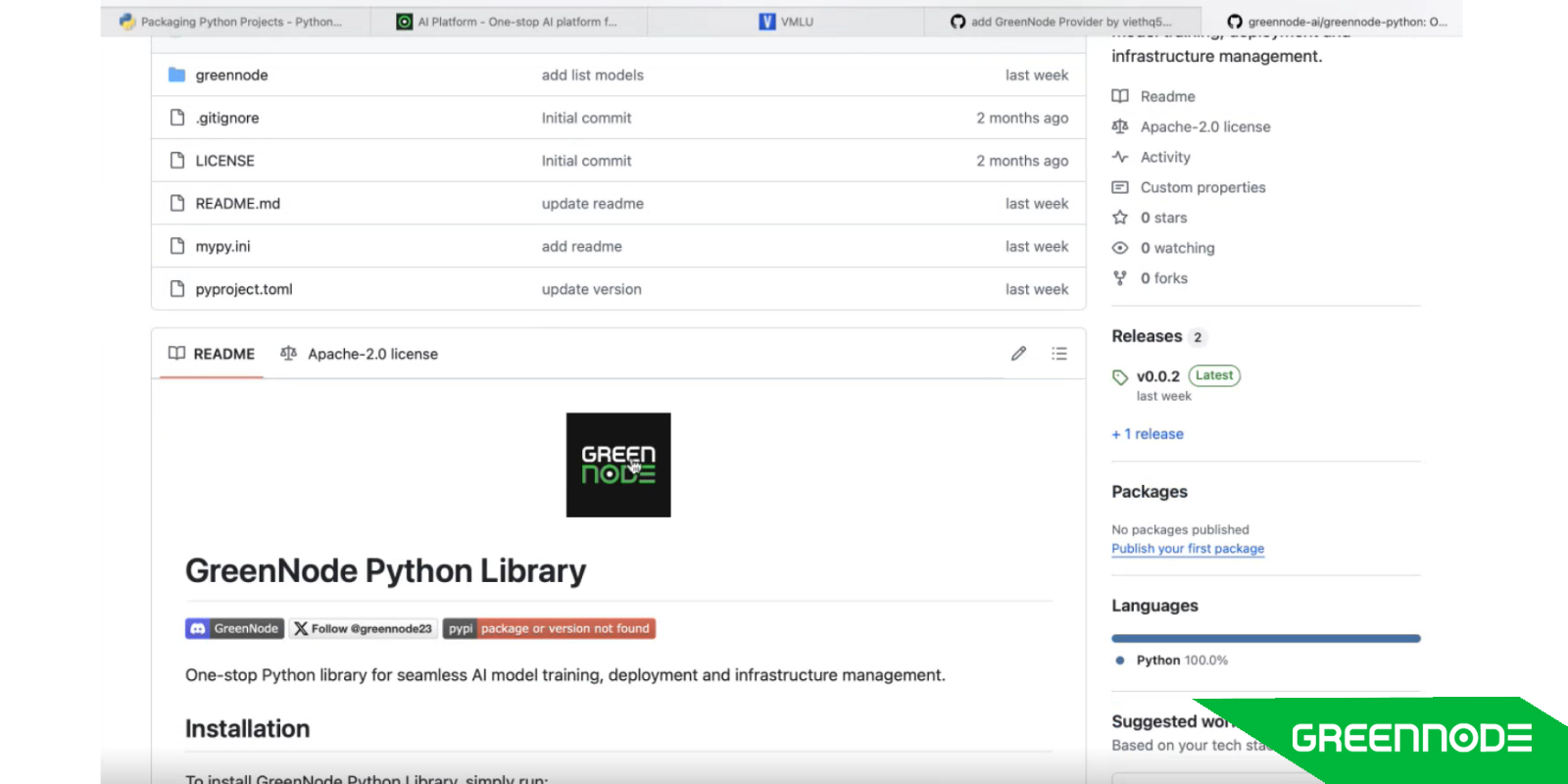

GreenNode SDK is now live for multiple use cases

An SDK is a collection of tools and libraries that enables developers to interact with a platform through API integration, simplifying the development process and reducing potential errors. According to IBM, SDKs provide faster and smoother integrations by simplifying standard processes and offering ready access to necessary information.

This March, GreenNode launched its Software Development Kit (SDK), designed to streamline the integration of GreenNode AI into various applications. With the GreenNode SDK, developers can run AI models locally or connect their applications to the GreenNode AI Platform with minimal effort. Learn more: GreenNode on GitHub.

By adopting the GreenNode SDK, developers not only streamline the integration process but also position themselves to leverage the efficiency gains associated with AI-assisted development, ultimately leading to more robust and innovative applications.

Conclusion

The latest release of the GreenNode AI Platform marks a significant leap forward in AI deployment, training, and scalability. With seamless NVIDIA NIM integration, high-speed vLLM inference, advanced logging, and a powerful SDK, GreenNode empowers developers and enterprises to streamline their AI/ML workflows like never before.

Whether you're optimizing inference, enhancing security, or accelerating development, GreenNode AI provides the tools you need to innovate faster and more efficiently.

Experience the future of AI—start building with GreenNode AI Platform today!