Prompting is usually the first step in putting a new pre-trained model to work, and techniques like prompt engineering help you get more out of it. These techniques have limits, however, and there comes a point where fine-tuning becomes essential to achieve more targeted results.

For instance, prompting the pre-trained Llama-3.1-8B model with, “What should I do on a trip to Europe?” yielded a generic response:

This is the most common question that I get from friends and family. I have been to Europe a few times now and I have found that the best way to see Europe is to travel as much as possible. You can’t see everything, but you can see a lot. And you can do it all in one trip. If you are planning a trip to Europe, here are some of the best things to do in Europe.

Europe is a continent with many different cultures, languages, and customs. It is also home to some of the world’s most beautiful cities and natural wonders.

While fluent, the response was not the specific list of European destinations I was looking for. After fine-tuning Meta's Llama-3.1-8B model using GreenNode, the model's answer to the same prompt changed substantially.

In this guide, I’ll share my journey of fine-tuning the Llama-3.1-8B model to address such challenges. Stay tuned to see how the model’s response to the same prompt changes post-fine-tuning. Let’s dive in!

Prepare Your Dataset

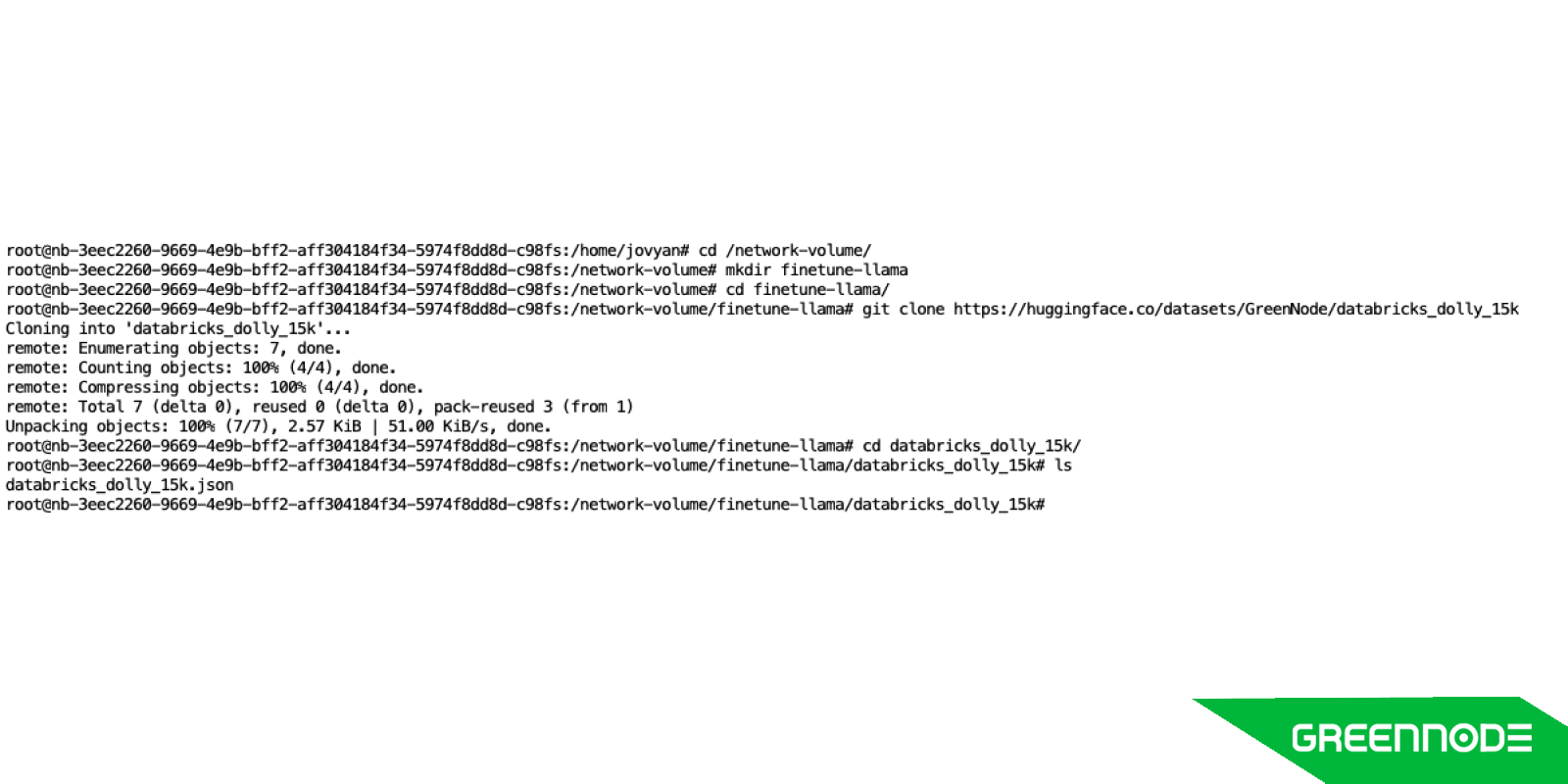

The first step in fine-tuning a model is preparing the dataset it will learn from. For this guide, I used the HuggingFaceH4/databricks_dolly_15k dataset, originally in Alpaca format, and converted it into the GreenNode format, now available as GreenNode/databricks_dolly_15k. You can learn more about the GreenNode format here.

The Dolly dataset comprises about 15,000 instruction-response pairs, categorized into types such as closed QA, classification, and summarization. It also includes a “context” column, which supplies reference text when an instruction needs it, making the dataset useful for a range of fine-tuning tasks.
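To make the role of the “context” column concrete, here is a minimal sketch of turning one Dolly-style record into a training prompt. The field names (`instruction`, `context`, `response`, `category`) follow the Dolly dataset; the prompt template and the example record are hypothetical, and this is not the GreenNode format.

```python
def build_prompt(record: dict) -> str:
    """Format a Dolly-style record as a prompt; the template is illustrative only."""
    instruction = record["instruction"].strip()
    context = record.get("context", "").strip()
    if context:
        # Include the context block only when the record actually has one.
        return (
            f"### Instruction:\n{instruction}\n\n"
            f"### Context:\n{context}\n\n"
            f"### Response:\n"
        )
    return f"### Instruction:\n{instruction}\n\n### Response:\n"


# Hypothetical record for illustration; not copied from the dataset.
example = {
    "instruction": "What should I do on a trip to Europe?",
    "context": "",
    "response": "(response text)",
    "category": "brainstorming",
}

print(build_prompt(example) + example["response"])
```

Records with an empty context simply skip that section, so one template can serve every category.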

Here are key steps:

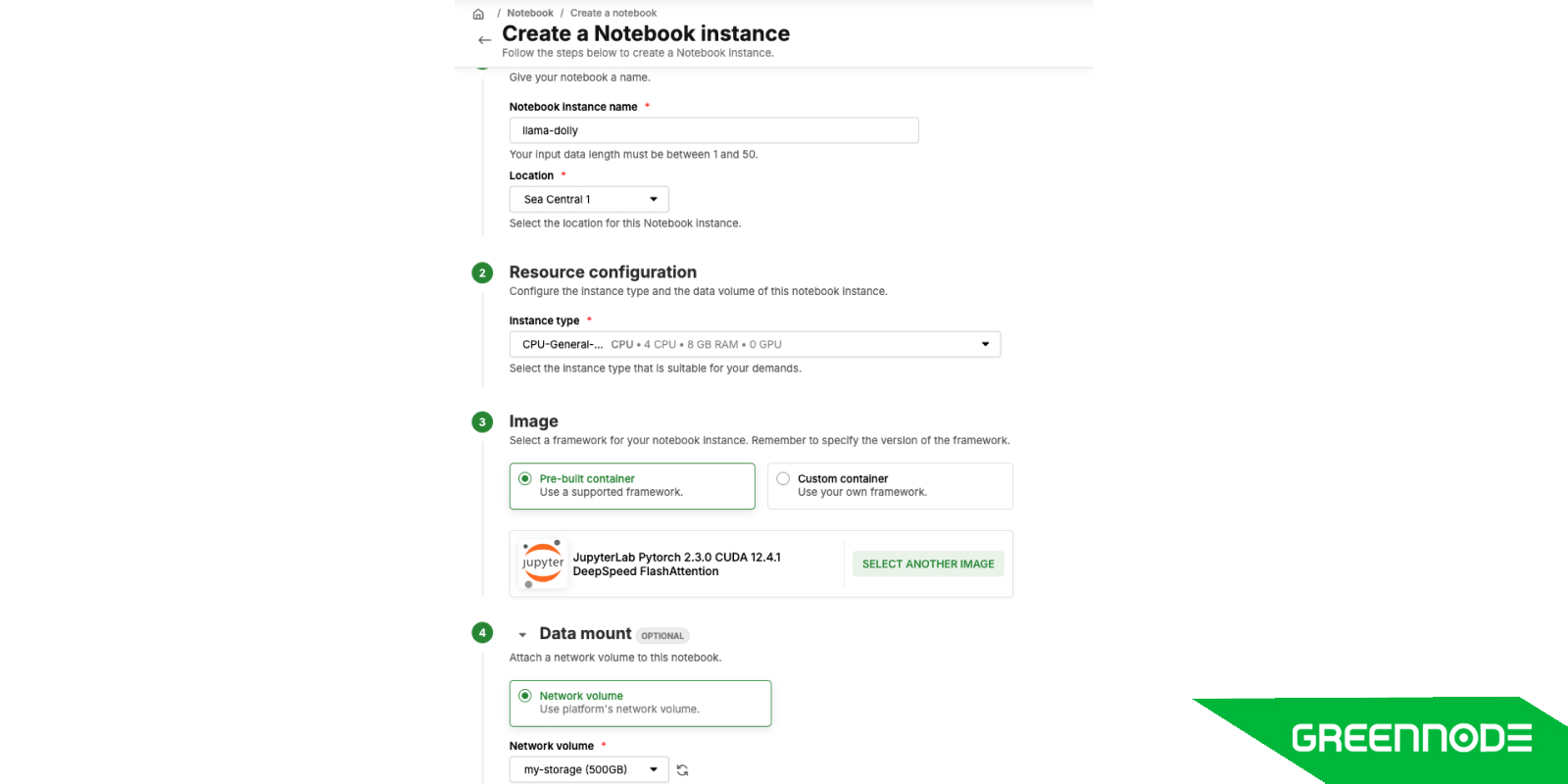

- Create a CPU Instance to pull data from HuggingFace to our Network Volume

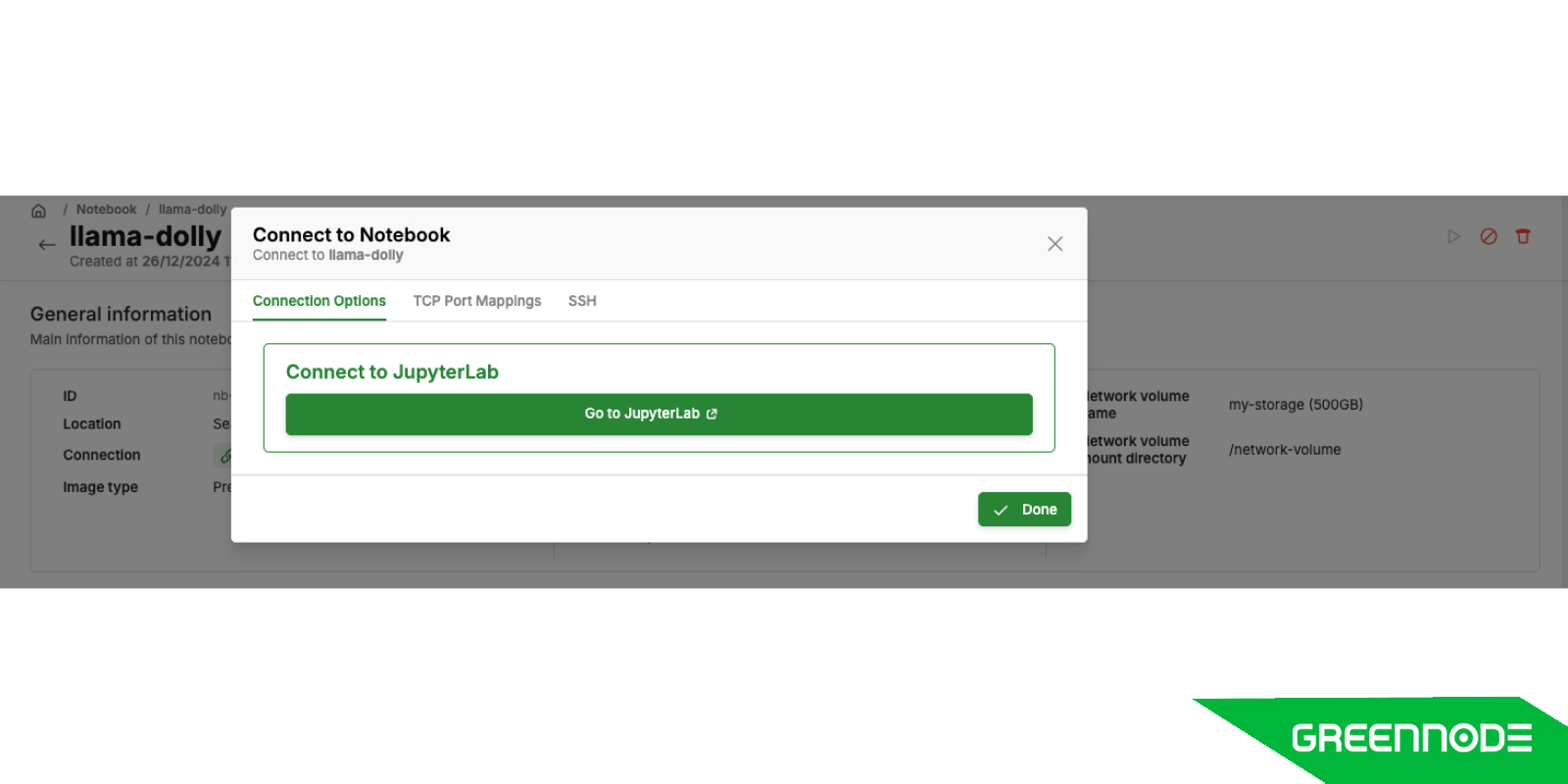

- Connect to this instance

- Pull data from HuggingFace to Network Volume
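The last step can be sketched roughly as follows, assuming the `huggingface_hub` package is installed on the CPU instance and the Network Volume is mounted at `/network-volume`. The helper names are hypothetical, and the file layout inside the dataset repository is not something this sketch verifies.

```python
from pathlib import Path


def dataset_dir(volume_root: str, repo_id: str) -> Path:
    """Map a Hub repo id such as 'org/name' to '<volume_root>/name'."""
    return Path(volume_root) / repo_id.split("/")[-1]


def pull_dataset(repo_id: str, volume_root: str) -> Path:
    """Download a dataset repository from the Hugging Face Hub onto the volume."""
    # Imported lazily so dataset_dir() works even without the package installed.
    from huggingface_hub import snapshot_download

    target = dataset_dir(volume_root, repo_id)
    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(repo_id=repo_id, repo_type="dataset", local_dir=str(target))
    return target


# Example call (performs a network download, so it is left commented out):
# pull_dataset("GreenNode/databricks_dolly_15k", "/network-volume/finetune-llama")
```

With the arguments shown in the comment, the files would land in `/network-volume/finetune-llama/databricks_dolly_15k`.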

Fine-tune with Model Tuning

Model tuning, or hyperparameter optimization, is the process of adjusting a model’s hyperparameters to maximize its performance. These settings, which govern the learning process but are not derived from the data itself, can significantly influence the model's accuracy, precision, and overall effectiveness. Fine-tuning ensures the model adapts to specific tasks while maintaining high performance.

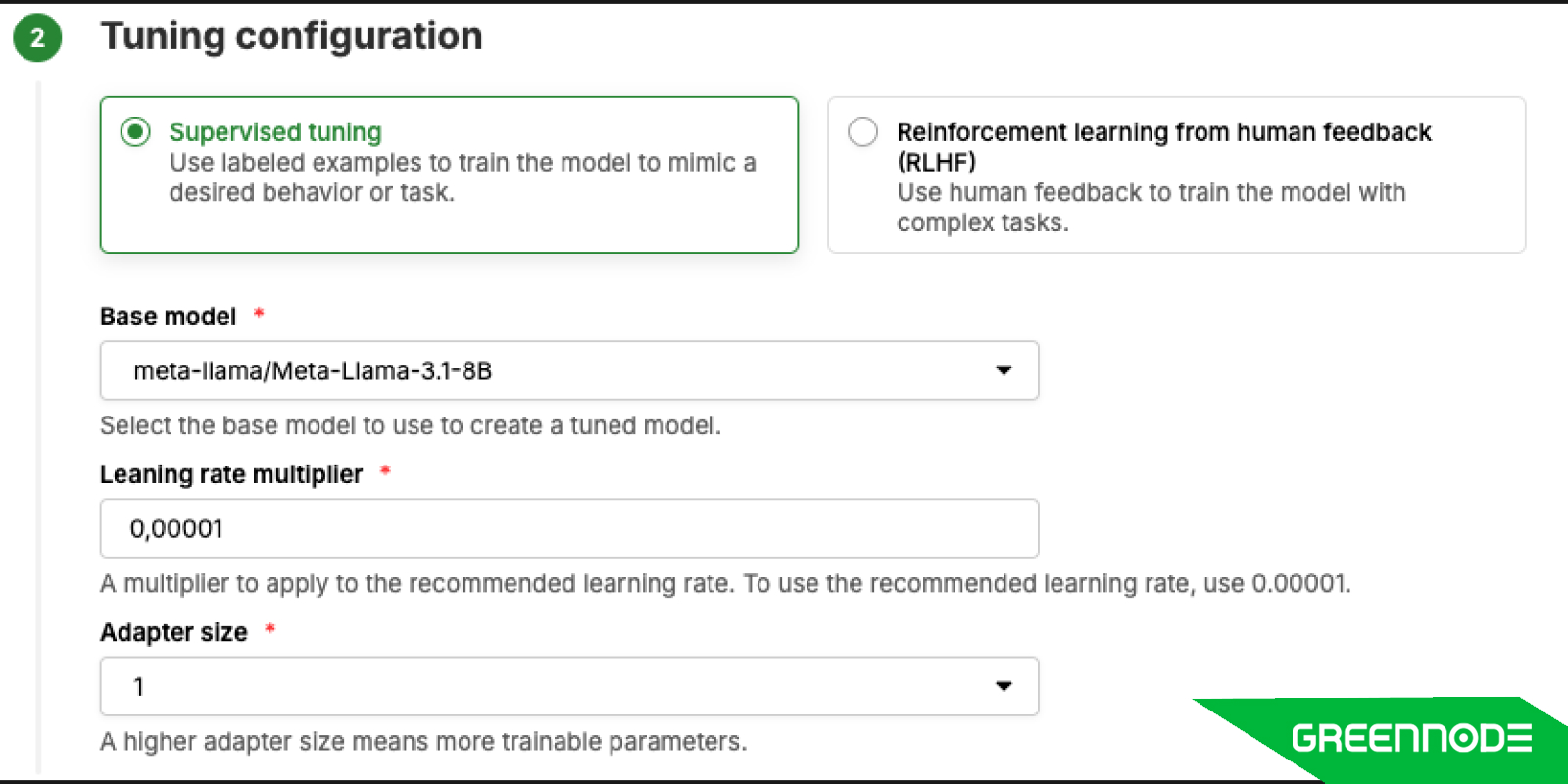

Defining the Parameters

To fine-tune effectively, we need to configure parameters for LoRA, training, and Supervised Fine-Tuning (SFT). Here are key steps and considerations based on my experience:

- Select a Model: Choose a base model suited to your task. For this guide, I selected Meta-Llama-3.1-8B.

Learning Rate Multiplier & Adapter Size:

- Learning rate multiplier: I used 1e-5, the recommended value.

- Adapter size: Determines the number of trainable parameters. If no adapter size is specified, all model parameters are fine-tuned. For this setup, I chose an adapter size of 1.

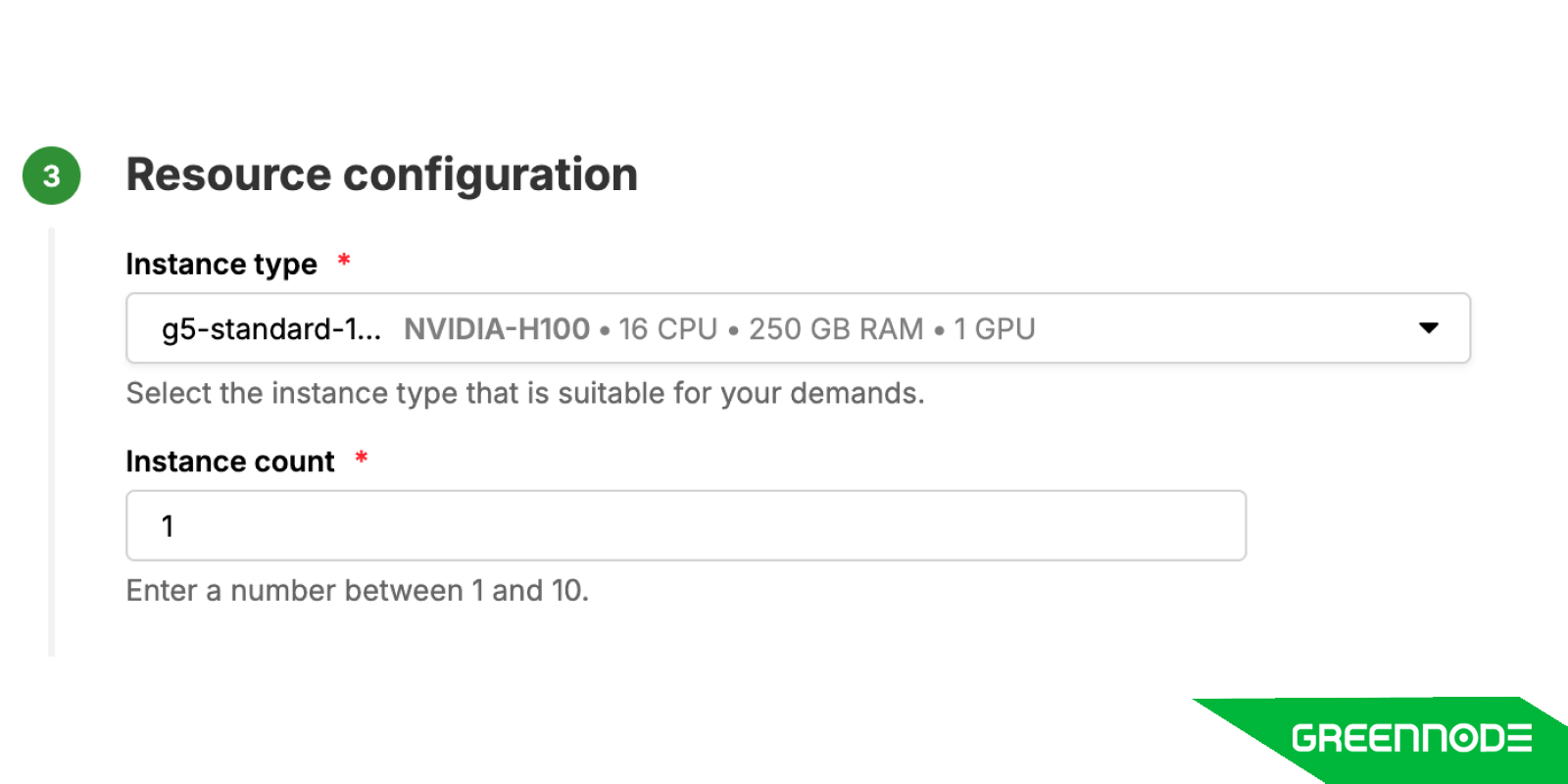
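To get a feel for how the adapter size controls the number of trainable parameters, here is a rough sketch. It assumes the adapter size corresponds to the LoRA rank, and that adapters are attached only to the query and value projections of each attention layer; the platform's actual target modules are not stated here, so treat both as assumptions. The layer shapes are those of Llama-3.1-8B (hidden size 4096, 32 layers, 1024-wide key/value projections).

```python
HIDDEN = 4096      # model hidden size
KV_DIM = 1024      # value projection width (8 KV heads x 128 dims)
NUM_LAYERS = 32    # transformer layers


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """A LoRA adapter adds two matrices: A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


def trainable_params(rank: int) -> int:
    """Trainable parameters with adapters on q_proj and v_proj only (assumption)."""
    per_layer = lora_params(HIDDEN, HIDDEN, rank) + lora_params(HIDDEN, KV_DIM, rank)
    return NUM_LAYERS * per_layer


for rank in (1, 8, 16):
    print(f"rank={rank}: {trainable_params(rank):,} trainable parameters")
```

Under these assumptions, rank 1 trains roughly 0.4 million parameters, a tiny fraction of the 8 billion in the base model, and the count grows linearly with the rank.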

- Set Up Your Running Resource: Specify the training instance type and count. For this task, I used a single GPU (NVIDIA H100).

- Mount Your Dataset: Define the dataset location.

  - Input: /network-volume/finetune-llama/databricks_dolly_15k/databricks_dolly_15k.json

  - Output: /network-volume/finetune-llama/output/llama31-dolly

- Set up advanced tuning hyperparameters:

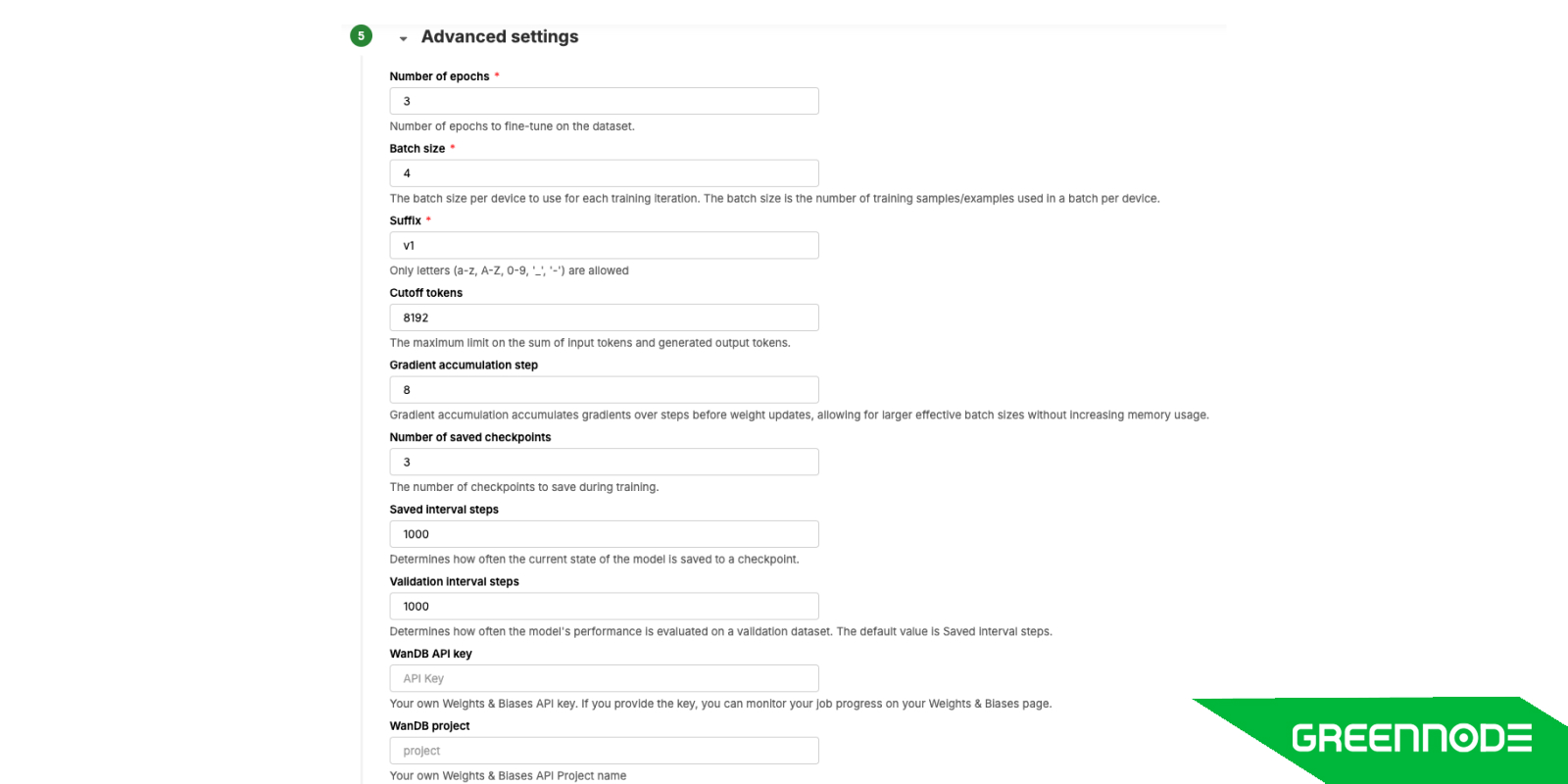

For this example, I ran the training for 3 epochs using a batch size of 4 and 8 gradient accumulation steps, which gives an effective batch size of 32 on the single GPU. Gradient accumulation sums gradients over several small batches before each weight update, so a larger effective batch fits in the same amount of memory.
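The arithmetic behind these settings can be sketched as follows. The training-set size of 14,264 examples is not stated in this guide; it is inferred from the epoch and step values in the training logs, and the rounding rule (dropping a trailing partial accumulation step each epoch) is likewise an assumption.

```python
def plan_training(num_samples, batch_size, grad_accum_steps, epochs, num_gpus=1):
    """Return (effective batch size, total optimizer steps)."""
    effective_batch = batch_size * grad_accum_steps * num_gpus
    # Assumes a trailing partial accumulation step is dropped each epoch.
    steps_per_epoch = num_samples // effective_batch
    return effective_batch, steps_per_epoch * epochs


effective_batch, total_steps = plan_training(
    num_samples=14_264,  # inferred from the logs, not stated in the guide
    batch_size=4,
    grad_accum_steps=8,
    epochs=3,
)
print(effective_batch, total_steps)  # 32 1335
```

The result matches the `total_steps` value of 1335 reported in the logs.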

Key training configurations included:

- Number of Checkpoints: Set to save 3 checkpoints during the training process.

- Checkpoint Save Interval: Configured to save the model state every 1,000 steps.

- Validation Interval Steps: Aligned with the save interval, evaluating the model's performance on the validation dataset every 1,000 steps.

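These settings balance evaluation frequency against checkpointing overhead. Note that because this run has only 1,335 steps in total, a 1,000-step interval triggers a single mid-run checkpoint and evaluation, at step 1,000; choose a smaller interval if you want more frequent evaluations on a dataset of this size.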

To monitor training progress, integrate Weights & Biases (W&B) by providing your W&B API key and specifying the W&B project.

Result of fine-tuning

Training logs:

{"current_steps": 10, "total_steps": 1335, "loss": 1.9954, "learning_rate": 1.4925373134328358e-06, "epoch": 0.022434099831744252, "percentage": 0.75, "elapsed_time": "0:00:35", "remaining_time": "1:17:55"}

{"current_steps": 20, "total_steps": 1335, "loss": 1.8703, "learning_rate": 2.9850746268656716e-06, "epoch": 0.044868199663488505, "percentage": 1.5, "elapsed_time": "0:01:05", "remaining_time": "1:12:06"}

{"current_steps": 30, "total_steps": 1335, "loss": 2.0236, "learning_rate": 4.477611940298508e-06, "epoch": 0.06730229949523275, "percentage": 2.25, "elapsed_time": "0:01:34", "remaining_time": "1:08:15"}

{"current_steps": 40, "total_steps": 1335, "loss": 1.8937, "learning_rate": 5.970149253731343e-06, "epoch": 0.08973639932697701, "percentage": 3.0, "elapsed_time": "0:02:01", "remaining_time": "1:05:31"}

{"current_steps": 50, "total_steps": 1335, "loss": 1.8384, "learning_rate": 7.46268656716418e-06, "epoch": 0.11217049915872125, "percentage": 3.75, "elapsed_time": "0:02:31", "remaining_time": "1:05:00"}

{"current_steps": 60, "total_steps": 1335, "loss": 1.751, "learning_rate": 8.955223880597016e-06, "epoch": 0.1346045989904655, "percentage": 4.49, "elapsed_time": "0:03:04", "remaining_time": "1:05:23"}

{"current_steps": 70, "total_steps": 1335, "loss": 1.778, "learning_rate": 9.99986188471085e-06, "epoch": 0.15703869882220975, "percentage": 5.24, "elapsed_time": "0:03:31", "remaining_time": "1:03:49"}

{"current_steps": 80, "total_steps": 1335, "loss": 1.7791, "learning_rate": 9.997406714054536e-06, "epoch": 0.17947279865395402, "percentage": 5.99, "elapsed_time": "0:03:59", "remaining_time": "1:02:42"}

{"current_steps": 90, "total_steps": 1335, "loss": 1.7549, "learning_rate": 9.991884049424443e-06, "epoch": 0.20190689848569826, "percentage": 6.74, "elapsed_time": "0:04:28", "remaining_time": "1:01:59"}

{"current_steps": 100, "total_steps": 1335, "loss": 1.6916, "learning_rate": 9.983297280726868e-06, "epoch": 0.2243409983174425, "percentage": 7.49, "elapsed_time": "0:04:55", "remaining_time": "1:00:48"}

{"current_steps": 110, "total_steps": 1335, "loss": 1.5813, "learning_rate": 9.971651678667743e-06, "epoch": 0.24677509814918677, "percentage": 8.24, "elapsed_time": "0:05:30", "remaining_time": "1:01:21"}

{"current_steps": 120, "total_steps": 1335, "loss": 1.6788, "learning_rate": 9.956954391517399e-06, "epoch": 0.269209197980931, "percentage": 8.99, "elapsed_time": "0:06:08", "remaining_time": "1:02:08"}

{"current_steps": 130, "total_steps": 1335, "loss": 1.6393, "learning_rate": 9.939214440722828e-06, "epoch": 0.2916432978126753, "percentage": 9.74, "elapsed_time": "0:06:41", "remaining_time": "1:02:01"}

{"current_steps": 140, "total_steps": 1335, "loss": 1.6242, "learning_rate": 9.918442715370163e-06, "epoch": 0.3140773976444195, "percentage": 10.49, "elapsed_time": "0:07:15", "remaining_time": "1:02:00"}

{"current_steps": 150, "total_steps": 1335, "loss": 1.6327, "learning_rate": 9.894651965500774e-06, "epoch": 0.33651149747616377, "percentage": 11.24, "elapsed_time": "0:07:48", "remaining_time": "1:01:41"}

{"current_steps": 160, "total_steps": 1335, "loss": 1.6702, "learning_rate": 9.86785679428507e-06, "epoch": 0.35894559730790804, "percentage": 11.99, "elapsed_time": "0:08:16", "remaining_time": "1:00:46"}

{"current_steps": 170, "total_steps": 1335, "loss": 1.6276, "learning_rate": 9.838073649058831e-06, "epoch": 0.38137969713965225, "percentage": 12.73, "elapsed_time": "0:08:46", "remaining_time": "1:00:06"}

{"current_steps": 180, "total_steps": 1335, "loss": 1.5712, "learning_rate": 9.805320811227534e-06, "epoch": 0.4038137969713965, "percentage": 13.48, "elapsed_time": "0:09:16", "remaining_time": "0:59:31"}

{"current_steps": 190, "total_steps": 1335, "loss": 1.5741, "learning_rate": 9.769618385044931e-06, "epoch": 0.4262478968031408, "percentage": 14.23, "elapsed_time": "0:09:55", "remaining_time": "0:59:47"}

{"current_steps": 200, "total_steps": 1335, "loss": 1.5271, "learning_rate": 9.730988285272705e-06, "epoch": 0.448681996634885, "percentage": 14.98, "elapsed_time": "0:10:23", "remaining_time": "0:58:56"}

{"current_steps": 210, "total_steps": 1335, "loss": 1.5655, "learning_rate": 9.68945422372881e-06, "epoch": 0.4711160964666293, "percentage": 15.73, "elapsed_time": "0:10:50", "remaining_time": "0:58:03"}

{"current_steps": 220, "total_steps": 1335, "loss": 1.5073, "learning_rate": 9.64504169473276e-06, "epoch": 0.49355019629837354, "percentage": 16.48, "elapsed_time": "0:11:18", "remaining_time": "0:57:19"}

{"current_steps": 230, "total_steps": 1335, "loss": 1.5426, "learning_rate": 9.597777959456764e-06, "epoch": 0.5159842961301178, "percentage": 17.23, "elapsed_time": "0:11:45", "remaining_time": "0:56:27"}

{"current_steps": 240, "total_steps": 1335, "loss": 1.5696, "learning_rate": 9.54769202919237e-06, "epoch": 0.538418395961862, "percentage": 17.98, "elapsed_time": "0:12:15", "remaining_time": "0:55:57"}

{"current_steps": 250, "total_steps": 1335, "loss": 1.6195, "learning_rate": 9.494814647542816e-06, "epoch": 0.5608524957936063, "percentage": 18.73, "elapsed_time": "0:12:46", "remaining_time": "0:55:25"}

{"current_steps": 260, "total_steps": 1335, "loss": 1.5734, "learning_rate": 9.439178271552096e-06, "epoch": 0.5832865956253506, "percentage": 19.48, "elapsed_time": "0:13:11", "remaining_time": "0:54:33"}

{"current_steps": 270, "total_steps": 1335, "loss": 1.591, "learning_rate": 9.380817051782258e-06, "epoch": 0.6057206954570948, "percentage": 20.22, "elapsed_time": "0:13:39", "remaining_time": "0:53:53"}

{"current_steps": 280, "total_steps": 1335, "loss": 1.5749, "learning_rate": 9.319766811351209e-06, "epoch": 0.628154795288839, "percentage": 20.97, "elapsed_time": "0:14:11", "remaining_time": "0:53:28"}

{"current_steps": 290, "total_steps": 1335, "loss": 1.5479, "learning_rate": 9.256065023943868e-06, "epoch": 0.6505888951205833, "percentage": 21.72, "elapsed_time": "0:14:43", "remaining_time": "0:53:05"}

{"current_steps": 300, "total_steps": 1335, "loss": 1.6346, "learning_rate": 9.18975079081018e-06, "epoch": 0.6730229949523275, "percentage": 22.47, "elapsed_time": "0:15:12", "remaining_time": "0:52:29"}

{"current_steps": 310, "total_steps": 1335, "loss": 1.5251, "learning_rate": 9.12086481676409e-06, "epoch": 0.6954570947840718, "percentage": 23.22, "elapsed_time": "0:15:44", "remaining_time": "0:52:03"}

{"current_steps": 320, "total_steps": 1335, "loss": 1.6126, "learning_rate": 9.049449385198237e-06, "epoch": 0.7178911946158161, "percentage": 23.97, "elapsed_time": "0:16:17", "remaining_time": "0:51:39"}

{"current_steps": 330, "total_steps": 1335, "loss": 1.6549, "learning_rate": 8.975548332129678e-06, "epoch": 0.7403252944475603, "percentage": 24.72, "elapsed_time": "0:16:51", "remaining_time": "0:51:18"}

{"current_steps": 340, "total_steps": 1335, "loss": 1.4961, "learning_rate": 8.899207019292594e-06, "epoch": 0.7627593942793045, "percentage": 25.47, "elapsed_time": "0:17:22", "remaining_time": "0:50:49"}

{"current_steps": 350, "total_steps": 1335, "loss": 1.6111, "learning_rate": 8.82047230629447e-06, "epoch": 0.7851934941110488, "percentage": 26.22, "elapsed_time": "0:17:44", "remaining_time": "0:49:57"}

{"current_steps": 360, "total_steps": 1335, "loss": 1.4798, "learning_rate": 8.739392521852875e-06, "epoch": 0.807627593942793, "percentage": 26.97, "elapsed_time": "0:18:13", "remaining_time": "0:49:20"}

{"current_steps": 370, "total_steps": 1335, "loss": 1.6104, "learning_rate": 8.656017434130457e-06, "epoch": 0.8300616937745373, "percentage": 27.72, "elapsed_time": "0:18:43", "remaining_time": "0:48:49"}

{"current_steps": 380, "total_steps": 1335, "loss": 1.5655, "learning_rate": 8.570398220186396e-06, "epoch": 0.8524957936062816, "percentage": 28.46, "elapsed_time": "0:19:10", "remaining_time": "0:48:11"}

{"current_steps": 390, "total_steps": 1335, "loss": 1.6217, "learning_rate": 8.482587434563046e-06, "epoch": 0.8749298934380259, "percentage": 29.21, "elapsed_time": "0:19:36", "remaining_time": "0:47:29"}

{"current_steps": 400, "total_steps": 1335, "loss": 1.6265, "learning_rate": 8.392638977027055e-06, "epoch": 0.89736399326977, "percentage": 29.96, "elapsed_time": "0:20:10", "remaining_time": "0:47:09"}

{"current_steps": 410, "total_steps": 1335, "loss": 1.5607, "learning_rate": 8.300608059484762e-06, "epoch": 0.9197980931015143, "percentage": 30.71, "elapsed_time": "0:20:41", "remaining_time": "0:46:41"}

{"current_steps": 420, "total_steps": 1335, "loss": 1.5637, "learning_rate": 8.20655117209219e-06, "epoch": 0.9422321929332585, "percentage": 31.46, "elapsed_time": "0:21:10", "remaining_time": "0:46:08"}

{"current_steps": 430, "total_steps": 1335, "loss": 1.5723, "learning_rate": 8.110526048580402e-06, "epoch": 0.9646662927650028, "percentage": 32.21, "elapsed_time": "0:21:40", "remaining_time": "0:45:37"}

{"current_steps": 440, "total_steps": 1335, "loss": 1.5894, "learning_rate": 8.012591630817563e-06, "epoch": 0.9871003925967471, "percentage": 32.96, "elapsed_time": "0:22:10", "remaining_time": "0:45:05"}

{"current_steps": 450, "total_steps": 1335, "loss": 1.5678, "learning_rate": 7.912808032629407e-06, "epoch": 1.0095344924284912, "percentage": 33.71, "elapsed_time": "0:22:35", "remaining_time": "0:44:25"}

{"current_steps": 460, "total_steps": 1335, "loss": 1.5251, "learning_rate": 7.81123650290033e-06, "epoch": 1.0319685922602355, "percentage": 34.46, "elapsed_time": "0:23:07", "remaining_time": "0:43:59"}

{"current_steps": 470, "total_steps": 1335, "loss": 1.5316, "learning_rate": 7.707939387977785e-06, "epoch": 1.0544026920919798, "percentage": 35.21, "elapsed_time": "0:23:43", "remaining_time": "0:43:38"}

{"current_steps": 480, "total_steps": 1335, "loss": 1.514, "learning_rate": 7.602980093403019e-06, "epoch": 1.076836791923724, "percentage": 35.96, "elapsed_time": "0:24:11", "remaining_time": "0:43:04"}

{"current_steps": 490, "total_steps": 1335, "loss": 1.6545, "learning_rate": 7.496423044991662e-06, "epoch": 1.0992708917554683, "percentage": 36.7, "elapsed_time": "0:24:40", "remaining_time": "0:42:32"}

{"current_steps": 500, "total_steps": 1335, "loss": 1.5146, "learning_rate": 7.3883336492880645e-06, "epoch": 1.1217049915872126, "percentage": 37.45, "elapsed_time": "0:25:09", "remaining_time": "0:42:00"}

{"current_steps": 510, "total_steps": 1335, "loss": 1.5534, "learning_rate": 7.2787782534176265e-06, "epoch": 1.1441390914189569, "percentage": 38.2, "elapsed_time": "0:25:40", "remaining_time": "0:41:32"}

{"current_steps": 520, "total_steps": 1335, "loss": 1.6577, "learning_rate": 7.167824104361807e-06, "epoch": 1.1665731912507011, "percentage": 38.95, "elapsed_time": "0:26:07", "remaining_time": "0:40:57"}

{"current_steps": 530, "total_steps": 1335, "loss": 1.6113, "learning_rate": 7.055539307680762e-06, "epoch": 1.1890072910824454, "percentage": 39.7, "elapsed_time": "0:26:35", "remaining_time": "0:40:23"}

{"current_steps": 540, "total_steps": 1335, "loss": 1.6219, "learning_rate": 6.9419927857089905e-06, "epoch": 1.2114413909141897, "percentage": 40.45, "elapsed_time": "0:27:00", "remaining_time": "0:39:46"}

{"current_steps": 550, "total_steps": 1335, "loss": 1.5156, "learning_rate": 6.827254235249616e-06, "epoch": 1.2338754907459337, "percentage": 41.2, "elapsed_time": "0:27:41", "remaining_time": "0:39:30"}

{"current_steps": 560, "total_steps": 1335, "loss": 1.4985, "learning_rate": 6.711394084793303e-06, "epoch": 1.256309590577678, "percentage": 41.95, "elapsed_time": "0:28:18", "remaining_time": "0:39:10"}

{"current_steps": 570, "total_steps": 1335, "loss": 1.4869, "learning_rate": 6.594483451288032e-06, "epoch": 1.2787436904094223, "percentage": 42.7, "elapsed_time": "0:29:02", "remaining_time": "0:38:58"}

{"current_steps": 580, "total_steps": 1335, "loss": 1.6156, "learning_rate": 6.476594096486302e-06, "epoch": 1.3011777902411665, "percentage": 43.45, "elapsed_time": "0:29:32", "remaining_time": "0:38:27"}

{"current_steps": 590, "total_steps": 1335, "loss": 1.5712, "learning_rate": 6.357798382896532e-06, "epoch": 1.3236118900729108, "percentage": 44.19, "elapsed_time": "0:30:10", "remaining_time": "0:38:06"}

{"current_steps": 600, "total_steps": 1335, "loss": 1.6358, "learning_rate": 6.238169229365717e-06, "epoch": 1.346045989904655, "percentage": 44.94, "elapsed_time": "0:30:39", "remaining_time": "0:37:33"}

{"current_steps": 610, "total_steps": 1335, "loss": 1.5848, "learning_rate": 6.117780066320583e-06, "epoch": 1.3684800897363993, "percentage": 45.69, "elapsed_time": "0:31:04", "remaining_time": "0:36:55"}

{"current_steps": 620, "total_steps": 1335, "loss": 1.5229, "learning_rate": 5.996704790694739e-06, "epoch": 1.3909141895681436, "percentage": 46.44, "elapsed_time": "0:31:36", "remaining_time": "0:36:27"}

{"current_steps": 630, "total_steps": 1335, "loss": 1.5532, "learning_rate": 5.875017720569461e-06, "epoch": 1.4133482893998879, "percentage": 47.19, "elapsed_time": "0:32:01", "remaining_time": "0:35:50"}

{"current_steps": 640, "total_steps": 1335, "loss": 1.5044, "learning_rate": 5.752793549555985e-06, "epoch": 1.4357823892316322, "percentage": 47.94, "elapsed_time": "0:32:27", "remaining_time": "0:35:14"}

{"current_steps": 650, "total_steps": 1335, "loss": 1.5541, "learning_rate": 5.6301073009472915e-06, "epoch": 1.4582164890633762, "percentage": 48.69, "elapsed_time": "0:32:54", "remaining_time": "0:34:40"}

{"current_steps": 660, "total_steps": 1335, "loss": 1.5324, "learning_rate": 5.507034281667511e-06, "epoch": 1.4806505888951205, "percentage": 49.44, "elapsed_time": "0:33:28", "remaining_time": "0:34:13"}

{"current_steps": 670, "total_steps": 1335, "loss": 1.4164, "learning_rate": 5.383650036047248e-06, "epoch": 1.5030846887268647, "percentage": 50.19, "elapsed_time": "0:33:57", "remaining_time": "0:33:42"}

{"current_steps": 680, "total_steps": 1335, "loss": 1.5258, "learning_rate": 5.260030299453176e-06, "epoch": 1.525518788558609, "percentage": 50.94, "elapsed_time": "0:34:28", "remaining_time": "0:33:12"}

{"current_steps": 690, "total_steps": 1335, "loss": 1.6005, "learning_rate": 5.1362509518003656e-06, "epoch": 1.5479528883903533, "percentage": 51.69, "elapsed_time": "0:34:58", "remaining_time": "0:32:41"}

{"current_steps": 700, "total_steps": 1335, "loss": 1.5907, "learning_rate": 5.012387970975897e-06, "epoch": 1.5703869882220975, "percentage": 52.43, "elapsed_time": "0:35:25", "remaining_time": "0:32:07"}

{"current_steps": 710, "total_steps": 1335, "loss": 1.4976, "learning_rate": 4.8885173862023215e-06, "epoch": 1.5928210880538418, "percentage": 53.18, "elapsed_time": "0:35:56", "remaining_time": "0:31:38"}

{"current_steps": 720, "total_steps": 1335, "loss": 1.5863, "learning_rate": 4.7647152313696276e-06, "epoch": 1.615255187885586, "percentage": 53.93, "elapsed_time": "0:36:26", "remaining_time": "0:31:07"}

{"current_steps": 730, "total_steps": 1335, "loss": 1.5431, "learning_rate": 4.641057498364333e-06, "epoch": 1.6376892877173304, "percentage": 54.68, "elapsed_time": "0:36:55", "remaining_time": "0:30:36"}

{"current_steps": 740, "total_steps": 1335, "loss": 1.4806, "learning_rate": 4.517620090424356e-06, "epoch": 1.6601233875490746, "percentage": 55.43, "elapsed_time": "0:37:24", "remaining_time": "0:30:05"}

{"current_steps": 750, "total_steps": 1335, "loss": 1.509, "learning_rate": 4.3944787755483156e-06, "epoch": 1.682557487380819, "percentage": 56.18, "elapsed_time": "0:37:53", "remaining_time": "0:29:33"}

{"current_steps": 760, "total_steps": 1335, "loss": 1.5581, "learning_rate": 4.271709139987823e-06, "epoch": 1.7049915872125632, "percentage": 56.93, "elapsed_time": "0:38:21", "remaining_time": "0:29:01"}

{"current_steps": 770, "total_steps": 1335, "loss": 1.6015, "learning_rate": 4.149386541851354e-06, "epoch": 1.7274256870443074, "percentage": 57.68, "elapsed_time": "0:38:52", "remaining_time": "0:28:31"}

{"current_steps": 780, "total_steps": 1335, "loss": 1.6632, "learning_rate": 4.027586064848144e-06, "epoch": 1.7498597868760517, "percentage": 58.43, "elapsed_time": "0:39:22", "remaining_time": "0:28:01"}

{"current_steps": 790, "total_steps": 1335, "loss": 1.449, "learning_rate": 3.906382472200511e-06, "epoch": 1.772293886707796, "percentage": 59.18, "elapsed_time": "0:39:50", "remaining_time": "0:27:28"}

{"current_steps": 800, "total_steps": 1335, "loss": 1.5676, "learning_rate": 3.78585016075293e-06, "epoch": 1.7947279865395402, "percentage": 59.93, "elapsed_time": "0:40:16", "remaining_time": "0:26:56"}

{"current_steps": 810, "total_steps": 1335, "loss": 1.5119, "learning_rate": 3.6660631153059467e-06, "epoch": 1.8171620863712845, "percentage": 60.67, "elapsed_time": "0:40:47", "remaining_time": "0:26:26"}

{"current_steps": 820, "total_steps": 1335, "loss": 1.5329, "learning_rate": 3.5470948632030588e-06, "epoch": 1.8395961862030286, "percentage": 61.42, "elapsed_time": "0:41:17", "remaining_time": "0:25:56"}

{"current_steps": 830, "total_steps": 1335, "loss": 1.5028, "learning_rate": 3.4290184291983573e-06, "epoch": 1.8620302860347728, "percentage": 62.17, "elapsed_time": "0:41:43", "remaining_time": "0:25:23"}

{"current_steps": 840, "total_steps": 1335, "loss": 1.5665, "learning_rate": 3.311906290632688e-06, "epoch": 1.884464385866517, "percentage": 62.92, "elapsed_time": "0:42:13", "remaining_time": "0:24:52"}

{"current_steps": 850, "total_steps": 1335, "loss": 1.545, "learning_rate": 3.1958303329458125e-06, "epoch": 1.9068984856982614, "percentage": 63.67, "elapsed_time": "0:42:40", "remaining_time": "0:24:20"}

{"current_steps": 860, "total_steps": 1335, "loss": 1.4895, "learning_rate": 3.080861805551892e-06, "epoch": 1.9293325855300056, "percentage": 64.42, "elapsed_time": "0:43:07", "remaining_time": "0:23:49"}

{"current_steps": 870, "total_steps": 1335, "loss": 1.5222, "learning_rate": 2.967071278105378e-06, "epoch": 1.95176668536175, "percentage": 65.17, "elapsed_time": "0:43:37", "remaining_time": "0:23:18"}

{"current_steps": 880, "total_steps": 1335, "loss": 1.572, "learning_rate": 2.854528597184141e-06, "epoch": 1.974200785193494, "percentage": 65.92, "elapsed_time": "0:44:06", "remaining_time": "0:22:48"}

{"current_steps": 890, "total_steps": 1335, "loss": 1.6228, "learning_rate": 2.74330284341645e-06, "epoch": 1.9966348850252382, "percentage": 66.67, "elapsed_time": "0:44:35", "remaining_time": "0:22:17"}

{"current_steps": 900, "total_steps": 1335, "loss": 1.6197, "learning_rate": 2.6334622890780915e-06, "epoch": 2.0190689848569825, "percentage": 67.42, "elapsed_time": "0:45:04", "remaining_time": "0:21:46"}

{"current_steps": 910, "total_steps": 1335, "loss": 1.4866, "learning_rate": 2.5250743561856856e-06, "epoch": 2.0415030846887268, "percentage": 68.16, "elapsed_time": "0:45:33", "remaining_time": "0:21:16"}

{"current_steps": 920, "total_steps": 1335, "loss": 1.5393, "learning_rate": 2.4182055751118816e-06, "epoch": 2.063937184520471, "percentage": 68.91, "elapsed_time": "0:46:03", "remaining_time": "0:20:46"}

{"current_steps": 930, "total_steps": 1335, "loss": 1.5802, "learning_rate": 2.3129215437478837e-06, "epoch": 2.0863712843522153, "percentage": 69.66, "elapsed_time": "0:46:36", "remaining_time": "0:20:18"}

{"current_steps": 940, "total_steps": 1335, "loss": 1.6246, "learning_rate": 2.209286887238334e-06, "epoch": 2.1088053841839596, "percentage": 70.41, "elapsed_time": "0:47:03", "remaining_time": "0:19:46"}

{"current_steps": 950, "total_steps": 1335, "loss": 1.6094, "learning_rate": 2.1073652183133e-06, "epoch": 2.131239484015704, "percentage": 71.16, "elapsed_time": "0:47:31", "remaining_time": "0:19:15"}

{"current_steps": 960, "total_steps": 1335, "loss": 1.6133, "learning_rate": 2.0072190982416755e-06, "epoch": 2.153673583847448, "percentage": 71.91, "elapsed_time": "0:47:59", "remaining_time": "0:18:44"}

{"current_steps": 970, "total_steps": 1335, "loss": 1.5259, "learning_rate": 1.908909998430013e-06, "epoch": 2.1761076836791924, "percentage": 72.66, "elapsed_time": "0:48:28", "remaining_time": "0:18:14"}

{"current_steps": 980, "total_steps": 1335, "loss": 1.4899, "learning_rate": 1.812498262690311e-06, "epoch": 2.1985417835109367, "percentage": 73.41, "elapsed_time": "0:48:59", "remaining_time": "0:17:44"}

{"current_steps": 990, "total_steps": 1335, "loss": 1.5945, "learning_rate": 1.71804307019995e-06, "epoch": 2.220975883342681, "percentage": 74.16, "elapsed_time": "0:49:27", "remaining_time": "0:17:14"}

{"current_steps": 1000, "total_steps": 1335, "loss": 1.5789, "learning_rate": 1.6256023991765063e-06, "epoch": 2.243409983174425, "percentage": 74.91, "elapsed_time": "0:49:58", "remaining_time": "0:16:44"}

{"current_steps": 1000, "total_steps": 1335, "eval_loss": 1.5424456596374512, "epoch": 2.243409983174425, "percentage": 74.91, "elapsed_time": "0:50:25", "remaining_time": "0:16:53"}

{"current_steps": 1010, "total_steps": 1335, "loss": 1.4356, "learning_rate": 1.5352329912897067e-06, "epoch": 2.2658440830061695, "percentage": 75.66, "elapsed_time": "0:50:54", "remaining_time": "0:16:23"}

{"current_steps": 1020, "total_steps": 1335, "loss": 1.594, "learning_rate": 1.446990316832445e-06, "epoch": 2.2882781828379137, "percentage": 76.4, "elapsed_time": "0:51:23", "remaining_time": "0:15:52"}

{"current_steps": 1030, "total_steps": 1335, "loss": 1.5321, "learning_rate": 1.36092854067214e-06, "epoch": 2.310712282669658, "percentage": 77.15, "elapsed_time": "0:51:47", "remaining_time": "0:15:20"}

{"current_steps": 1040, "total_steps": 1335, "loss": 1.5043, "learning_rate": 1.2771004890034322e-06, "epoch": 2.3331463825014023, "percentage": 77.9, "elapsed_time": "0:52:14", "remaining_time": "0:14:48"}

{"current_steps": 1050, "total_steps": 1335, "loss": 1.563, "learning_rate": 1.1955576169225369e-06, "epoch": 2.3555804823331465, "percentage": 78.65, "elapsed_time": "0:52:42", "remaining_time": "0:14:18"}

{"current_steps": 1060, "total_steps": 1335, "loss": 1.6485, "learning_rate": 1.1163499768432412e-06, "epoch": 2.378014582164891, "percentage": 79.4, "elapsed_time": "0:53:20", "remaining_time": "0:13:50"}

{"current_steps": 1070, "total_steps": 1335, "loss": 1.5246, "learning_rate": 1.0395261877738705e-06, "epoch": 2.400448681996635, "percentage": 80.15, "elapsed_time": "0:53:47", "remaining_time": "0:13:19"}

{"current_steps": 1080, "total_steps": 1335, "loss": 1.4818, "learning_rate": 9.651334054740996e-07, "epoch": 2.4228827818283794, "percentage": 80.9, "elapsed_time": "0:54:17", "remaining_time": "0:12:49"}

{"current_steps": 1090, "total_steps": 1335, "loss": 1.5432, "learning_rate": 8.932172935099448e-07, "epoch": 2.4453168816601236, "percentage": 81.65, "elapsed_time": "0:54:46", "remaining_time": "0:12:18"}

{"current_steps": 1100, "total_steps": 1335, "loss": 1.4881, "learning_rate": 8.238219952246807e-07, "epoch": 2.4677509814918674, "percentage": 82.4, "elapsed_time": "0:55:16", "remaining_time": "0:11:48"}

{"current_steps": 1110, "total_steps": 1335, "loss": 1.4595, "learning_rate": 7.569901066429042e-07, "epoch": 2.4901850813236117, "percentage": 83.15, "elapsed_time": "0:55:44", "remaining_time": "0:11:17"}

{"current_steps": 1120, "total_steps": 1335, "loss": 1.5592, "learning_rate": 6.927626503243551e-07, "epoch": 2.512619181155356, "percentage": 83.9, "elapsed_time": "0:56:13", "remaining_time": "0:10:47"}

{"current_steps": 1130, "total_steps": 1335, "loss": 1.5417, "learning_rate": 6.311790501835724e-07, "epoch": 2.5350532809871003, "percentage": 84.64, "elapsed_time": "0:56:41", "remaining_time": "0:10:17"}

{"current_steps": 1140, "total_steps": 1335, "loss": 1.6007, "learning_rate": 5.722771072908156e-07, "epoch": 2.5574873808188445, "percentage": 85.39, "elapsed_time": "0:57:08", "remaining_time": "0:09:46"}

{"current_steps": 1150, "total_steps": 1335, "loss": 1.5243, "learning_rate": 5.160929766691198e-07, "epoch": 2.579921480650589, "percentage": 86.14, "elapsed_time": "0:57:36", "remaining_time": "0:09:15"}

{"current_steps": 1160, "total_steps": 1335, "loss": 1.5966, "learning_rate": 4.626611451017271e-07, "epoch": 2.602355580482333, "percentage": 86.89, "elapsed_time": "0:58:07", "remaining_time": "0:08:46"}

{"current_steps": 1170, "total_steps": 1335, "loss": 1.4661, "learning_rate": 4.1201440996349537e-07, "epoch": 2.6247896803140773, "percentage": 87.64, "elapsed_time": "0:58:34", "remaining_time": "0:08:15"}

{"current_steps": 1180, "total_steps": 1335, "loss": 1.5985, "learning_rate": 3.6418385908932316e-07, "epoch": 2.6472237801458216, "percentage": 88.39, "elapsed_time": "0:59:08", "remaining_time": "0:07:46"}

{"current_steps": 1190, "total_steps": 1335, "loss": 1.53, "learning_rate": 3.1919885169188924e-07, "epoch": 2.669657879977566, "percentage": 89.14, "elapsed_time": "0:59:45", "remaining_time": "0:07:16"}

{"current_steps": 1200, "total_steps": 1335, "loss": 1.4997, "learning_rate": 2.7708700034047244e-07, "epoch": 2.69209197980931, "percentage": 89.89, "elapsed_time": "1:00:15", "remaining_time": "0:06:46"}

{"current_steps": 1210, "total_steps": 1335, "loss": 1.4739, "learning_rate": 2.3787415401187895e-07, "epoch": 2.7145260796410544, "percentage": 90.64, "elapsed_time": "1:00:47", "remaining_time": "0:06:16"}

{"current_steps": 1220, "total_steps": 1335, "loss": 1.6267, "learning_rate": 2.0158438222389775e-07, "epoch": 2.7369601794727987, "percentage": 91.39, "elapsed_time": "1:01:17", "remaining_time": "0:05:46"}

{"current_steps": 1230, "total_steps": 1335, "loss": 1.6008, "learning_rate": 1.6823996026102074e-07, "epoch": 2.759394279304543, "percentage": 92.13, "elapsed_time": "1:01:47", "remaining_time": "0:05:16"}

{"current_steps": 1240, "total_steps": 1335, "loss": 1.5112, "learning_rate": 1.3786135550148626e-07, "epoch": 2.781828379136287, "percentage": 92.88, "elapsed_time": "1:02:21", "remaining_time": "0:04:46"}

{"current_steps": 1250, "total_steps": 1335, "loss": 1.507, "learning_rate": 1.1046721485405365e-07, "epoch": 2.8042624789680315, "percentage": 93.63, "elapsed_time": "1:02:54", "remaining_time": "0:04:16"}

{"current_steps": 1260, "total_steps": 1335, "loss": 1.5695, "learning_rate": 8.607435331221048e-08, "epoch": 2.8266965787997758, "percentage": 94.38, "elapsed_time": "1:03:25", "remaining_time": "0:03:46"}

{"current_steps": 1270, "total_steps": 1335, "loss": 1.5616, "learning_rate": 6.46977436328422e-08, "epoch": 2.84913067863152, "percentage": 95.13, "elapsed_time": "1:03:53", "remaining_time": "0:03:16"}

{"current_steps": 1280, "total_steps": 1335, "loss": 1.4343, "learning_rate": 4.635050714569489e-08, "epoch": 2.8715647784632643, "percentage": 95.88, "elapsed_time": "1:04:22", "remaining_time": "0:02:45"}

{"current_steps": 1290, "total_steps": 1335, "loss": 1.4591, "learning_rate": 3.104390569927895e-08, "epoch": 2.8939988782950086, "percentage": 96.63, "elapsed_time": "1:04:52", "remaining_time": "0:02:15"}

{"current_steps": 1300, "total_steps": 1335, "loss": 1.6285, "learning_rate": 1.878733474815375e-08, "epoch": 2.9164329781267524, "percentage": 97.38, "elapsed_time": "1:05:23", "remaining_time": "0:01:45"}

{"current_steps": 1310, "total_steps": 1335, "loss": 1.5196, "learning_rate": 9.588317585835227e-09, "epoch": 2.9388670779584967, "percentage": 98.13, "elapsed_time": "1:06:02", "remaining_time": "0:01:15"}

{"current_steps": 1320, "total_steps": 1335, "loss": 1.4836, "learning_rate": 3.4525007268720876e-09, "epoch": 2.961301177790241, "percentage": 98.88, "elapsed_time": "1:06:33", "remaining_time": "0:00:45"}

{"current_steps": 1330, "total_steps": 1335, "loss": 1.5183, "learning_rate": 3.8365044091381864e-10, "epoch": 2.983735277621985, "percentage": 99.63, "elapsed_time": "1:07:08", "remaining_time": "0:00:15"}

{"current_steps": 1335, "total_steps": 1335, "epoch": 2.994952327537858, "percentage": 100.0, "elapsed_time": "1:07:26", "remaining_time": "0:00:00"}
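Logs in this one-JSON-object-per-line form are easy to inspect programmatically. The sketch below parses a few trimmed entries copied from the log above and compares the logged learning rate with a linear-warmup, cosine-decay schedule. The schedule shape and the 67 warmup steps are inferred from the logged values, not stated anywhere in this guide, so treat them as assumptions.

```python
import json
import math

# Trimmed copies of four entries from the log above (only the fields used here).
SAMPLE_LOG = """
{"current_steps": 10, "total_steps": 1335, "loss": 1.9954, "learning_rate": 1.4925373134328358e-06}
{"current_steps": 70, "total_steps": 1335, "loss": 1.778, "learning_rate": 9.99986188471085e-06}
{"current_steps": 1000, "total_steps": 1335, "loss": 1.5789, "learning_rate": 1.6256023991765063e-06}
{"current_steps": 1000, "total_steps": 1335, "eval_loss": 1.5424456596374512}
"""


def parse_log(text):
    """Parse one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def warmup_cosine_lr(step, total_steps, peak_lr=1e-5, warmup_steps=67):
    """Linear warmup to peak_lr, then cosine decay to zero (assumed schedule)."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


entries = parse_log(SAMPLE_LOG)
train = [e for e in entries if "loss" in e]        # training-step entries
evals = [e for e in entries if "eval_loss" in e]   # evaluation entries

for e in train:
    predicted = warmup_cosine_lr(e["current_steps"], e["total_steps"])
    print(e["current_steps"], e["loss"], e["learning_rate"], predicted)

for e in evals:
    print("eval at step", e["current_steps"], "loss", e["eval_loss"])
```

The same `parse_log` helper works on the full log, for example to plot loss against step or to compare the final training loss with the evaluation loss.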

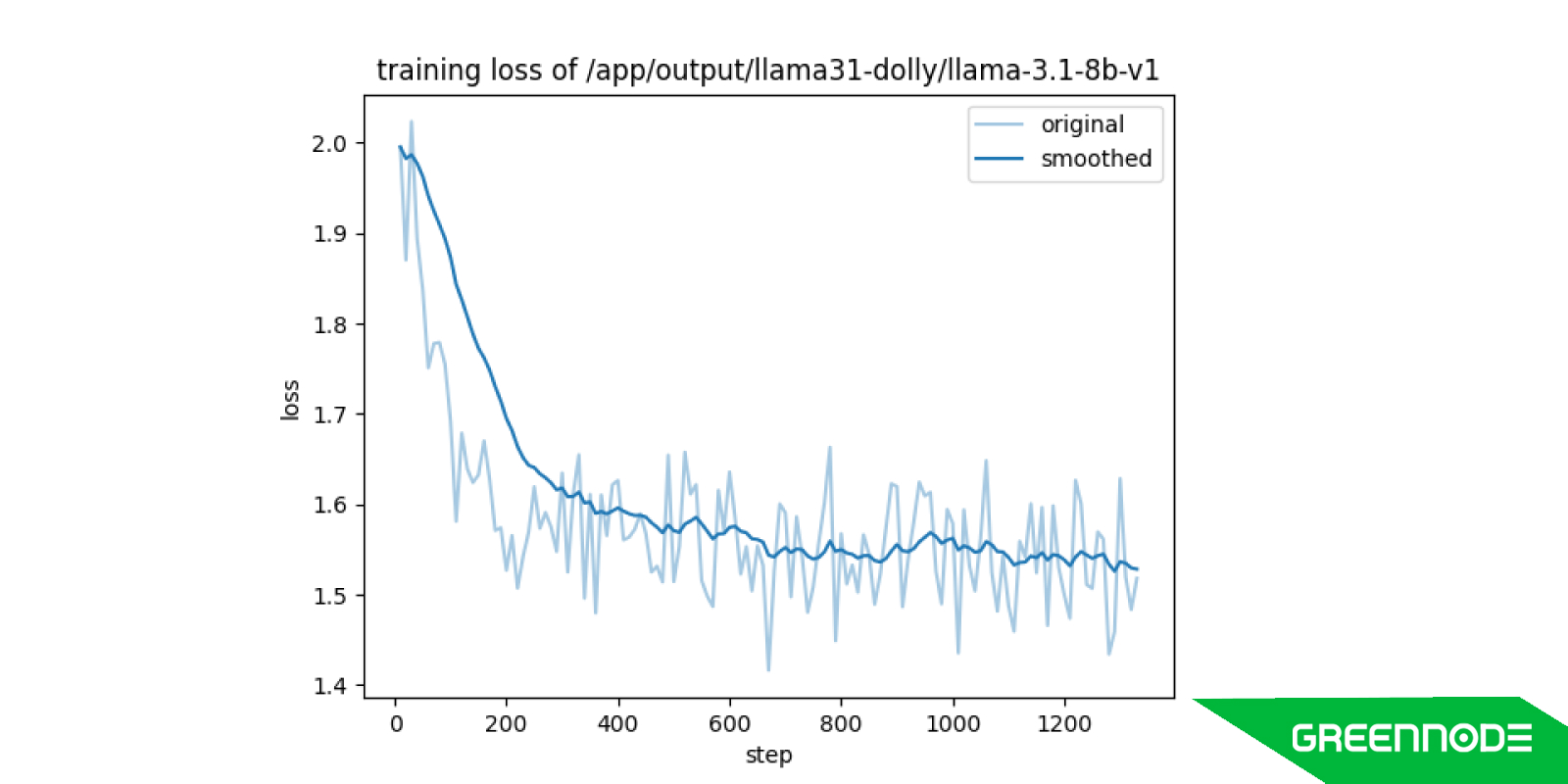

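The fine-tuning process took about 1 hour and 7 minutes. Training loss fell from roughly 2.0 in the first steps to around 1.5, and the evaluation loss at step 1,000 was 1.54. Prompting the fine-tuned model with the same question from the introduction now produces the specific, targeted response I was hoping for.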

There are many things to do in Europe. You can visit the Eiffel Tower in Paris, France, the Colosseum in Rome, Italy, or the Leaning Tower of Pisa in Pisa, Italy. You can also visit the Louvre Museum in Paris, France, the Vatican Museums in Vatican City, or the Uffizi Gallery in Florence, Italy. You can also visit the Acropolis in Athens, Greece, the Parthenon in Athens, Greece, or the Acropolis Museum in Athens, Greece. You can also visit the Grand Bazaar in Istanbul, Turkey, the Hagia Sophia in Istanbul, Turkey, or the Blue Mosque in Istanbul, Turkey. You can also visit the Palace of Versailles in Versailles, France, the Palace of Fontainebleau in Fontainebleau, France, or the Palace of Versailles in Versailles, France.

Conclusion

Fine-tuning Llama 3.1 on the GreenNode platform bridges cutting-edge AI capabilities with actionable marketing insights. By following this guide, businesses can gain a competitive edge, delivering personalized, data-driven marketing solutions that resonate with their audiences. Whether you are an AI professional, marketer, or decision-maker, the combination of Llama 3.1 and GreenNode offers a pathway to innovation and success.

Try fine-tuning Llama 3.1 with GreenNode today!