Prompt engineering has become one of the most active frontiers in AI, gaining momentum as large language models (LLMs) move into everyday use. Research on the topic has grown rapidly over the past few years, especially since consumer applications like ChatGPT brought LLMs to a mass audience.

At its core, prompt engineering is the process of formulating inputs for foundation models such as LLMs so that they produce the desired outputs. Whether the goal is understanding a research paper, translating foreign text, or writing code for a personal version of Angry Birds, well-crafted prompts are the key to getting useful results and to solving problems that were previously hard to automate.

Because LLMs largely behave as "black boxes" from the outside, learning to prompt them well is essential for getting reliable results.

In this blog, we delve into the fundamental principles, techniques, and best practices of prompt engineering, equipping you to harness the full potential of contemporary AI tools and navigate this transformative era with confidence. Let us embark on this journey together.

What is Prompt Engineering?

Envision having Tony Stark's J.A.R.V.I.S. as your personal assistant - an incredibly intelligent entity with extensive knowledge spanning every imaginable topic. Despite its brilliance, there are instances where it fails to provide the precise or accurate answer you seek. This is where the concept of prompt engineering becomes invaluable. Prompt engineering entails furnishing your all-knowing robotic assistant with a specific set of instructions or guidelines, enhancing its comprehension of the task at hand.

Prompt engineering is the craft of creating and refining prompts, and it plays a pivotal role in improving the performance of Large Language Models (LLMs) across diverse tasks, from question answering to arithmetic reasoning. It spans a range of techniques for interacting effectively with LLMs and the tools around them.

Yet prompt engineering goes beyond writing prompts. It is a broader set of skills and methods that help researchers and developers work with LLMs more effectively and understand what they can and cannot do. These skills are also valuable for improving the safety and reliability of LLMs and for extending their capabilities with domain knowledge and external tools.

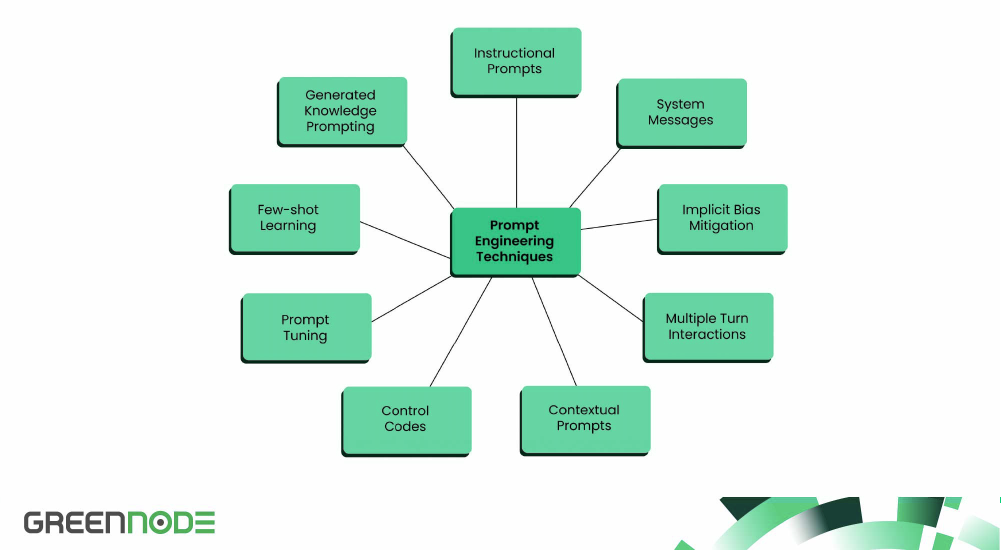

Prompt Engineering Techniques

Prompt engineering is a fast-moving research area, driven by the widespread adoption of foundation models and LLMs across industries and disciplines. Let's look at some of the techniques and best practices that characterize the field.

Instructional Prompts

These prompts give explicit instructions that direct the language model's behavior. By specifying the desired task or output format, you steer the model toward responses that follow those instructions, which makes instructional prompts a key tool for keeping output relevant and accurate.

Example: "Compose a persuasive essay presenting arguments either for or against the use of renewable energy sources". This prompt guides the model to generate an essay advocating a stance on renewable energy with supporting arguments.

System Messages

System messages define the role or context the language model should adopt. With a clear persona or context in place, the model tailors its responses accordingly, creating a conversational framework that leads to more coherent and pertinent replies.

Example: System message: "You are an AI language model specializing in psychology." User message: "Provide advice to someone grappling with anxiety." The system message casts the model as a knowledgeable psychology assistant, so it frames its advice to the user from that perspective.
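Many chat APIs represent this split as a list of role/content messages; OpenAI's Chat Completions API, for instance, accepts `system`, `user`, and `assistant` roles. The minimal sketch below only builds such a list (the helper name `build_messages` is hypothetical, and no API is called):

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Return a role/content message list with the system message first (hypothetical helper)."""
    return [
        {"role": "system", "content": system_prompt},  # sets the persona and context
        {"role": "user", "content": user_prompt},      # the actual request
    ]


messages = build_messages(
    "You are an AI language model specializing in psychology.",
    "Provide advice to someone grappling with anxiety.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the persona in its own message means it can be reused unchanged across many user requests.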

Contextual Prompts

Contextual prompts include relevant information or preceding dialogue so the model has the context it needs to respond. With that context, the model better understands the user's intent and produces more appropriate, coherent replies.

Example: "User: Can you recommend a good restaurant in Paris?" Even this short query carries essential context (the city), letting the model offer a pertinent recommendation; adding details such as budget, preferred cuisine, or earlier turns of the conversation narrows the answer further.

Implicit Bias Mitigation

Careful prompt engineering can help mitigate biases in the language model's responses. By constructing prompts thoughtfully and avoiding leading or biased language, you can encourage fairer, more balanced outputs and more inclusive, objective responses from the model.

Example:

- Biased prompt: “Why are all teenagers so lazy?”

- Unbiased prompt: “Discuss the factors that contribute to teenage behavior and motivation.”

By reframing the prompt to eliminate generalizations and stereotypes, the model is guided to provide a more balanced and nuanced response.

Multiple-turn Interactions

This strategy involves engaging in a multi-turn conversation with the model. Through successive turns or exchanges, each interaction contributes additional context, enabling the model to generate more coherent and contextually aware responses.

Example:

- Turn 1: “User: What are the top tourist attractions in New York City?” <model output>

- Turn 2: “User: Thanks! Can you also suggest some popular Broadway shows?”

This multi-turn interaction enables the model to understand the user's follow-up question and respond with relevant suggestions for Broadway shows in New York City.
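With stateless chat APIs, a multi-turn exchange usually means the client resends the accumulated history with every new request, so the model can see earlier turns. A minimal sketch, assuming the role/content message format used by chat APIs such as OpenAI's; the `Conversation` class is hypothetical:

```python
Message = dict[str, str]


class Conversation:
    """Hypothetical helper that keeps the running message history for a chat model."""

    def __init__(self) -> None:
        self.history: list[Message] = []

    def add_user(self, text: str) -> list[Message]:
        """Append a user turn and return the full history to send to the model."""
        self.history.append({"role": "user", "content": text})
        return list(self.history)  # a copy, so callers cannot mutate the stored history

    def add_assistant(self, text: str) -> None:
        """Record the model's reply so later turns can refer back to it."""
        self.history.append({"role": "assistant", "content": text})


chat = Conversation()
chat.add_user("What are the top tourist attractions in New York City?")
chat.add_assistant("<model output>")
request = chat.add_user("Thanks! Can you also suggest some popular Broadway shows?")
print(len(request))  # 3: both user turns plus the earlier reply
```

Because the second request carries the first question and its answer, the model can resolve "also" and infer that the shows should be in New York City.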

Control Codes

Control codes modify the language model's behavior by specifying attributes such as sentiment, style, or topic, giving finer control over responses and making it easier to tailor output to specific requirements. Some models, such as Salesforce's CTRL, were trained to recognize specific control codes; with general-purpose chat models, a bracketed tag like the one below works as a compact instruction.

Example: “[Sentiment: Negative] Express your disappointment regarding the recent product quality issues.”

Including the sentiment control code directs the model to generate a response expressing negative sentiment about the mentioned product quality issues.
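A prompt with control tags can be assembled programmatically. This is a hypothetical helper that follows the `[Key: Value]` bracket convention from the example above; a model trained with specific control codes would expect its own codes instead:

```python
def with_control_codes(instruction: str, **codes: str) -> str:
    """Prefix an instruction with bracketed control tags such as [Sentiment: Negative]."""
    tags = "".join(f"[{key.capitalize()}: {value}] " for key, value in codes.items())
    return tags + instruction


prompt = with_control_codes(
    "Express your disappointment regarding the recent product quality issues.",
    sentiment="Negative",
)
print(prompt)
# [Sentiment: Negative] Express your disappointment regarding the recent product quality issues.
```

Passing several keyword arguments (for example `sentiment="Negative", style="Formal"`) stacks the tags in the order given.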

Prompt Tuning

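A highly parameter-efficient technique, prompt tuning adapts large pre-trained language models (PLMs) to downstream tasks. The PLM's weights stay frozen; only a small set of "soft prompts" is trained. These are continuous vectors prepended to the input embeddings rather than human-readable words, and they are learned through backpropagation from labeled examples. The tasks below illustrate the kind of adaptation involved. Each is written as the hand-crafted text prompt a user would otherwise prepend; prompt tuning learns a soft-prompt counterpart of that prefix for the task: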

- Sentiment Analysis: User attaches the prompt “Is the following movie review positive or negative?” before inputting “This movie was amazing!” The model generates a response indicating the sentiment of the review.

- Text Translation: User attaches the prompt “Translate the following text from English to French:” before inputting “The cat is grey.” The model translates the text into French.

- Text Summarization: User attaches the prompt “Summarize the following poem in one sentence:” before inputting a poem. The model generates a summary of the poem in a single sentence.

Because only the small soft prompt is trained while the model itself stays frozen, prompt tuning saves time and computational resources compared with fine-tuning the entire model, and a single frozen model can serve many tasks by swapping in a different learned prompt for each.
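To make the mechanics concrete, here is a deliberately tiny, hypothetical sketch in plain Python. The "model" (`EMBED`, `HEAD`) is an invented stand-in for a frozen PLM that mean-pools token embeddings instead of running a transformer. Only the soft-prompt vectors are updated by gradient descent on labeled examples, which is the defining idea of prompt tuning; real implementations use a deep-learning framework and much larger models and prompts.

```python
import math

# Hypothetical frozen "model": fixed 2-d token embeddings plus a fixed linear head.
# A real PLM is a transformer; mean pooling keeps this toy small enough to read.
EMBED = {
    "great": [1.0, 0.2], "amazing": [0.9, 0.1],
    "awful": [-1.0, 0.3], "boring": [-0.8, 0.2],
    "movie": [0.0, 1.0],
}
HEAD = [2.0, 1.5]  # frozen weights; never updated below


def forward(soft_prompt, tokens):
    """Prepend soft-prompt vectors to the token embeddings, mean-pool, apply the frozen head."""
    seq = soft_prompt + [EMBED[t] for t in tokens]
    pooled = [sum(vec[d] for vec in seq) / len(seq) for d in range(2)]
    logit = sum(h * p for h, p in zip(HEAD, pooled))
    return 1 / (1 + math.exp(-logit)), len(seq)  # P(positive), sequence length


def loss(soft_prompt, data):
    """Mean binary cross-entropy over labeled (tokens, label) pairs."""
    total = 0.0
    for tokens, label in data:
        p, _ = forward(soft_prompt, tokens)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(data)


def train_soft_prompt(data, k=2, lr=0.5, steps=200):
    """Learn k soft-prompt vectors by gradient descent; HEAD and EMBED stay frozen."""
    soft_prompt = [[0.0, 0.0] for _ in range(k)]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for tokens, label in data:
            p, seq_len = forward(soft_prompt, tokens)
            # dLoss/dlogit = p - label, and dlogit/d(prompt vector) = HEAD / seq_len
            for d in range(2):
                grad[d] += (p - label) * HEAD[d] / seq_len / len(data)
        for vec in soft_prompt:  # in this toy every prompt vector gets the same gradient
            for d in range(2):
                vec[d] -= lr * grad[d]
    return soft_prompt


DATA = [(["great", "movie"], 1), (["amazing", "movie"], 1),
        (["awful", "movie"], 0), (["boring", "movie"], 0)]
untuned = [[0.0, 0.0], [0.0, 0.0]]
tuned = train_soft_prompt(DATA)
print(round(loss(untuned, DATA), 3), "->", round(loss(tuned, DATA), 3))
```

With the all-zero prompt, the frozen model scores "boring movie" as slightly positive. After training, the model's weights are unchanged, yet the learned prompt shifts its predictions enough to classify all four examples correctly.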

Few-shot Learning

Few-shot learning lets a language model adapt to a specific task or domain from only a handful of examples. In prompt engineering, those examples are placed directly in the prompt (few-shot or in-context prompting): the model infers the pattern from them and applies it to new inputs, without any change to its weights. This is particularly useful when data for a task is scarce or when fine-tuning is too costly or impractical.

Example: Including a handful of dialogue samples from a movie in the prompt helps the model capture the style, speech patterns, and personality of a particular character, so that its responses align with that character's traits and behavior.
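Few-shot prompts are usually assembled from a template. The sketch below assumes an `Input:`/`Output:` layout and a hypothetical `few_shot_prompt` helper; it reuses the sentiment task from the prompt-tuning examples rather than movie dialogue to keep it short:

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble the instruction, worked input/output pairs, and the new input into one prompt."""
    parts = [instruction, ""]
    for source, target in examples:
        parts += [f"Input: {source}", f"Output: {target}", ""]
    parts += [f"Input: {query}", "Output:"]  # the model continues from here
    return "\n".join(parts)


prompt = few_shot_prompt(
    "Classify the sentiment of each movie review as Positive or Negative.",
    [("This movie was amazing!", "Positive"), ("A dull, forgettable plot.", "Negative")],
    "The acting was superb.",
)
print(prompt)
```

Ending the prompt with an open `Output:` invites the model to continue the pattern set by the examples.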

Generated Knowledge Prompting

Generated knowledge prompting uses the model's own ability to generate knowledge relevant to a task. The model is first prompted, often with a few demonstrations, to produce facts related to the question; that generated knowledge is then included in a second prompt that asks for the final answer.

Consider the following examples:

- Commonsense Reasoning: Suppose a user poses a question that demands commonsense knowledge, such as “Do fish sleep?” The model can then generate knowledge about the behavior of fish, drawing on its understanding of the subject, and deliver an informed response to the question.

- Fact-Checking: Imagine a user presents a statement that requires fact-checking, such as “The capital of the United States is Berlin.” The model can first generate knowledge about the capitals of countries and then use it to determine whether the statement is true or false and give an accurate answer.
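The two stages can be wired together as below. This is a sketch under assumptions: `generate` stands for any function that sends a prompt to an LLM and returns its text, and `fake_llm` is a canned stand-in so the example runs offline; its replies are illustrative, not real model output.

```python
from typing import Callable

# Any function that maps a prompt string to the model's text reply (hypothetical).
Generate = Callable[[str], str]


def generated_knowledge_answer(question: str, generate: Generate) -> str:
    """Two-stage prompting: first ask for relevant facts, then answer using them."""
    knowledge = generate(f"List a few facts that are relevant to this question:\n{question}")
    answer_prompt = (
        f"Knowledge:\n{knowledge}\n\n"
        f"Using the knowledge above, answer the question.\nQuestion: {question}\nAnswer:"
    )
    return generate(answer_prompt)


calls: list[str] = []  # records every prompt sent, to show the two stages


def fake_llm(prompt: str) -> str:
    """Canned stand-in for a real LLM call."""
    calls.append(prompt)
    if prompt.startswith("List a few facts"):
        return "Most fish lack eyelids, but many species have regular periods of rest."
    return "Yes. Many fish have rest periods that resemble sleep."


result = generated_knowledge_answer("Do fish sleep?", fake_llm)
print(result)
```

The second prompt contains the knowledge produced by the first, which is what lets the final answer draw on it.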

Navigating Token Limits in LLMs

LLMs operate within a fixed "budget" of tokens, the subword units that text is split into (a token is often a whole word or part of one). This budget, known as the context window, covers both the tokens in the prompt and the tokens in the response, so prompt engineers must leave enough room for the answer without exceeding the limit.

Token limits vary among LLMs, so you need to know the constraints of the model at hand. For instance, the original GPT-3 models had a context window of 2,048 tokens, later GPT-3.5 models such as text-davinci-003 raised this to about 4,096, and GPT-2 was limited to 1,024 tokens. These limits cap the combined length of the prompt and the response; exceeding them can lead to truncated or incomplete outputs that compromise the coherence and quality of the response.
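A quick budget check can be scripted before sending a prompt. This sketch uses OpenAI's rough rule of thumb of about 4 characters per token for English text; `estimate_tokens` and `response_budget` are hypothetical helpers, and for exact counts you would use the model's own tokenizer instead.

```python
import math

# Rough rule of thumb for English text with OpenAI-style tokenizers: about 4 characters
# per token. Use the model's real tokenizer when you need exact counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate token count, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def response_budget(prompt: str, context_window: int, reserve: int = 0) -> int:
    """Tokens left for the response after the prompt (and an optional safety reserve)."""
    remaining = context_window - estimate_tokens(prompt) - reserve
    if remaining <= 0:
        raise ValueError("Prompt alone would exceed the context window; shorten it.")
    return remaining


prompt = "Summarize the following report in three bullet points:\n" + "x" * 6000
print(estimate_tokens(prompt))                       # 1514
print(response_budget(prompt, context_window=4096))  # 2582
```

The same prompt would not fit at all in a 1,024-token window, which is exactly the situation the check is meant to catch before the model truncates anything.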

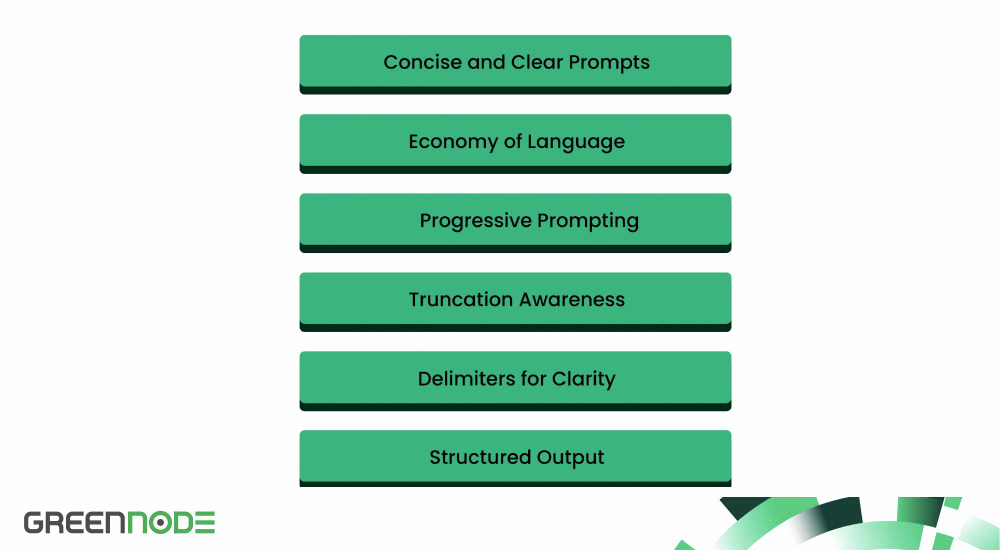

Best Practices for Building Effective Prompts

Prompt engineering blends art and science: effective prompts balance brevity, clarity, and adaptability while staying within the available token budget. Getting there means refining your approach continually, evaluating results objectively, and adapting to the quirks of the specific model you are using.

That said, a foundational set of best practices can guide your prompt engineering toward success:

- Concise and Clear Prompts: Craft prompts that are succinct and clear, delivering necessary information without unnecessary verbosity.

- Economy of Language: Ask for economical responses too, for example by setting a word limit, so that output tokens are spent only on what you need.

- Progressive Prompting: Break down lengthy or multi-step tasks into smaller, sequential prompts for clarity and ease of comprehension.

- Truncation Awareness: Be mindful of potential truncation of input and output when approaching the token limits of the model in use.

- Delimiters for Clarity: Use angle brackets (<>), opening and closing tags (<code></code>), and other delimiters to clearly delineate different portions and subsections of your input.

- Structured Output: Request output in a structured format like bulleted lists, JSON, or HTML to facilitate integration with other applications and processes.

- Tonal Guidelines: Instruct the model to adopt a specific tone, such as 'creative' or 'conversational,' aligning with your desired use case.
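Several of these practices combine naturally in one prompt. The hypothetical sketch below wraps the input in tags, requests JSON with fixed keys and a neutral tone, and validates the reply with Python's `json` module; the review text and the canned `reply` are invented for illustration.

```python
import json


def build_extraction_prompt(document: str) -> str:
    """Wrap the input in tags and ask for JSON with a fixed set of keys."""
    return (
        "Extract the product name and the main complaint from the review inside "
        "<review></review> tags. Respond only with JSON of the form "
        '{"product": "...", "complaint": "..."} and keep the tone neutral.\n'
        f"<review>{document}</review>"
    )


def parse_reply(reply: str) -> dict:
    """Validate that the model's reply is a JSON object with the expected keys."""
    data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on non-JSON output
    if not isinstance(data, dict):
        raise ValueError("Reply is not a JSON object")
    missing = {"product", "complaint"} - set(data)
    if missing:
        raise ValueError(f"Reply is missing keys: {sorted(missing)}")
    return data


prompt = build_extraction_prompt("The AcmePhone battery dies after two hours.")
reply = '{"product": "AcmePhone", "complaint": "short battery life"}'  # canned model reply
print(parse_reply(reply)["complaint"])  # short battery life
```

Validating the structure before using it lets an application retry or re-prompt when the model drifts from the requested format.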

What is the Future of Prompt Engineering?

In the face of AI's rapid evolution and the increasing demand for advanced language models, the future of prompt engineering unfolds with promising prospects. Here are several anticipated scenarios on the horizon:

- Emergence of Prompt Marketplaces: Much as app stores transformed software accessibility, the future of prompt engineering may see the rise of prompt marketplaces. These platforms could serve as hubs where prompt engineers showcase their expertise and offer pre-designed prompts tailored to specific tasks and industries. Beyond simplifying prompt engineering, such marketplaces could encourage collaboration and knowledge sharing within the community.

- Surge in Demand for Prompt Engineers: As reliance on AI models grows and prompts play a pivotal role in shaping their behavior, prompt engineering is poised to become a sought-after skill. Organizations that need language models tailored to specific applications are likely to seek people skilled in crafting effective prompts, optimizing model outputs, and integrating AI systems into diverse domains. Prompt engineers can serve as a crucial bridge between AI models and real-world applications.

- Advancements in Prompt Design Tools: More sophisticated tools and platforms are likely to emerge to help prompt engineers create, refine, and experiment with prompts. Such interfaces could offer real-time model feedback, prompt optimization suggestions, and visualizations of how prompt changes affect model behavior, boosting productivity and speeding up innovation.

As prompt engineering evolves, it is poised to become an indispensable part of AI development. By crafting nuanced prompts, practitioners can unlock more of the potential of language models, enabling more intelligent interactions and new ways of solving problems.

At GreenNode, we are dedicated to aiding enterprises in realizing their AI aspirations and meeting deadlines. Our unwavering support extends throughout every stage of your journey. Join us as we elevate your AI experience and propel your business into a future of innovation and success.

Frequently Asked Questions (FAQs) About Prompt Engineering

1. What exactly is prompt engineering?

Prompt engineering is the practice of designing and refining the inputs (prompts) we give to large language models (LLMs) so they produce better, more useful outputs. Instead of writing long code or rules, you craft the right wording, structure, or context in your prompt to guide the model’s response.

2. Why is prompt engineering important?

Because the quality of a model’s output is heavily influenced by the input it receives. A well-designed prompt can make the difference between a vague, off-topic answer and a clear, accurate response. For businesses, effective prompt engineering can save hours of manual work and reduce errors, especially when scaling AI across teams.

3. Do I need to be a programmer to learn prompt engineering?

Not at all. While technical users often push the boundaries with advanced prompting, anyone can learn the basics. Think of it more like learning how to ask better questions than learning to code. That said, understanding the model’s strengths and limitations does help when you want to design prompts for more complex workflows.

4. What are some best practices for writing effective prompts?

- Be clear and specific with instructions.

- Provide examples (few-shot prompting) to show the model what you expect.

- Use constraints (e.g., “answer in bullet points” or “keep under 200 words”).

- Iterate: test different wordings and see which works best.

- Combine prompts with tools like retrieval-augmented generation (RAG) for better accuracy.
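To show what combining prompts with retrieval looks like, here is a deliberately naive sketch: it ranks documents by word overlap with the question and pastes the best match into a delimited prompt. Real RAG systems typically use embedding similarity and a vector index; the function names and documents here are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (a stand-in for embedding search)."""
    q = tokenize(question)
    return sorted(documents, key=lambda doc: len(q & tokenize(doc)), reverse=True)[:k]


def rag_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """Insert the top-k retrieved documents, wrapped in tags, ahead of the question."""
    context = "\n".join(f"<doc>{d}</doc>" for d in retrieve(question, documents, k))
    return (
        "Answer the question using only the documents below. "
        "If they do not contain the answer, say so.\n"
        f"{context}\nQuestion: {question}"
    )


DOCS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Support is available Monday to Friday, 9am to 5pm.",
]
prompt = rag_prompt("How many days do I have to request a refund?", DOCS)
print(prompt)
```

Grounding the prompt in retrieved text, and telling the model to admit when the documents lack the answer, is what improves accuracy over relying on the model's memory alone.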

5. How is prompt engineering used in real-world applications?

Companies use prompt engineering for everything from writing marketing copy and generating code snippets to automating customer support and analyzing documents. In research and industry alike, it is used to adapt LLMs to specialized domains like law, medicine, or finance, often without retraining the model.

6. Will prompt engineering still matter as AI gets smarter?

Yes, but it will evolve. As models improve, prompts may become shorter and more natural, but the skill of framing a problem well for an AI system is likely to remain valuable. Some experts believe future tools will even “auto-engineer” prompts behind the scenes, but humans will still need to set the right goals and context.