In the fast-evolving world of AI development, moving from model experimentation to production deployment remains a huge hurdle for many teams. Engineers, startups, and enterprises alike face the complexity of provisioning infrastructure, managing cost visibility, and stitching together multiple tools for building modern AI apps.

With our latest platform release, GreenNode is closing that gap.

We’re excited to introduce the GreenNode Serverless AI Model. This game-changing feature lets developers and teams run, experiment with, and integrate 20+ top-tier open-source models directly through a unified API and a no-code Playground. Alongside this, we’re rolling out key upgrades, including SSO login with VNG Cloud accounts and native access to VNG Cloud services right inside the GreenNode portal.

Explore our latest features here: https://aiplatform.console.greennode.ai/

This release is about one thing: simplifying your AI workflow — from idea to production.

New Product Release: GreenNode Serverless AI Model

Building and deploying AI applications today remains a high-friction process—even for experienced teams. While open-source models have accelerated innovation, most organizations still face a long list of operational roadblocks before a single model can be used in production.

From the outset, teams must invest heavily in GPU clusters, compute infrastructure, persistent storage, and highly specialized talent. Perhaps most critically, many product teams lack the ML engineers or AI researchers needed to fine-tune models, optimize performance, or simply deploy models at scale. And as the AI landscape continues to evolve rapidly, the hesitation to invest in an inflexible or overly complex infrastructure stack becomes a key blocker to innovation.

GreenNode's latest release addresses these challenges head-on with a production-ready solution: Model-as-a-Service (MaaS). Instead of building and managing AI infrastructure from scratch, developers can now access a powerful catalog of 20+ AI models and run them instantly via API—with full control, visibility, and cost transparency. Learn more: https://greennode.ai/product/model-as-a-service

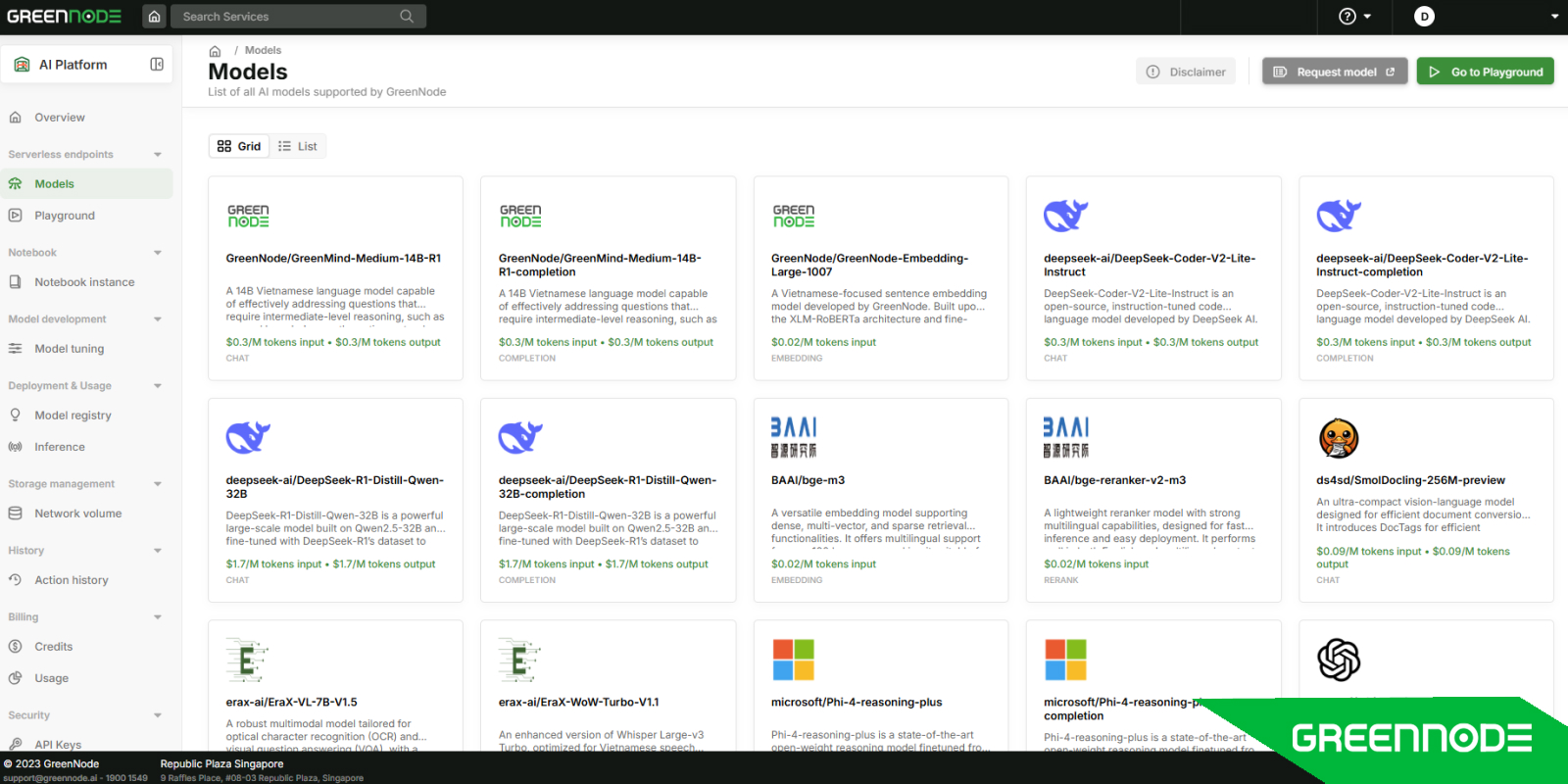

From the GreenNode portal, you can seamlessly access the full Model Catalog, featuring a curated selection of top-performing open-source models across key AI domains — including text generation, vision, speech, embeddings, and reranking.

Some standout models available today include:

- Text generation: DeepSeek-Coder, Qwen3-30B, GreenMind, and Phi-4-Reasoning-Plus — ideal for chat, summarization, code completion, and more.

- Vision models: EraX-VL, Phi-4-Vision, and SmolDocling support tasks like image captioning, OCR, and document understanding.

- Speech processing: Models like Whisper-Large-V3, EraX-WoW-Turbo, and Vietnamese-language Zalo-ASR/Zalo-TTS enable real-time transcription and voice generation.

- Embeddings & reranking: Powerful models such as BAAI/bge-m3 and GreenNode-Embedding-Large are optimized for semantic search, retrieval, and relevance ranking.

Explore the full list of supported models in the GreenNode Model Catalog. You can also request a custom model tailored to your specific use case or domain requirements.

GreenNode AI model playground

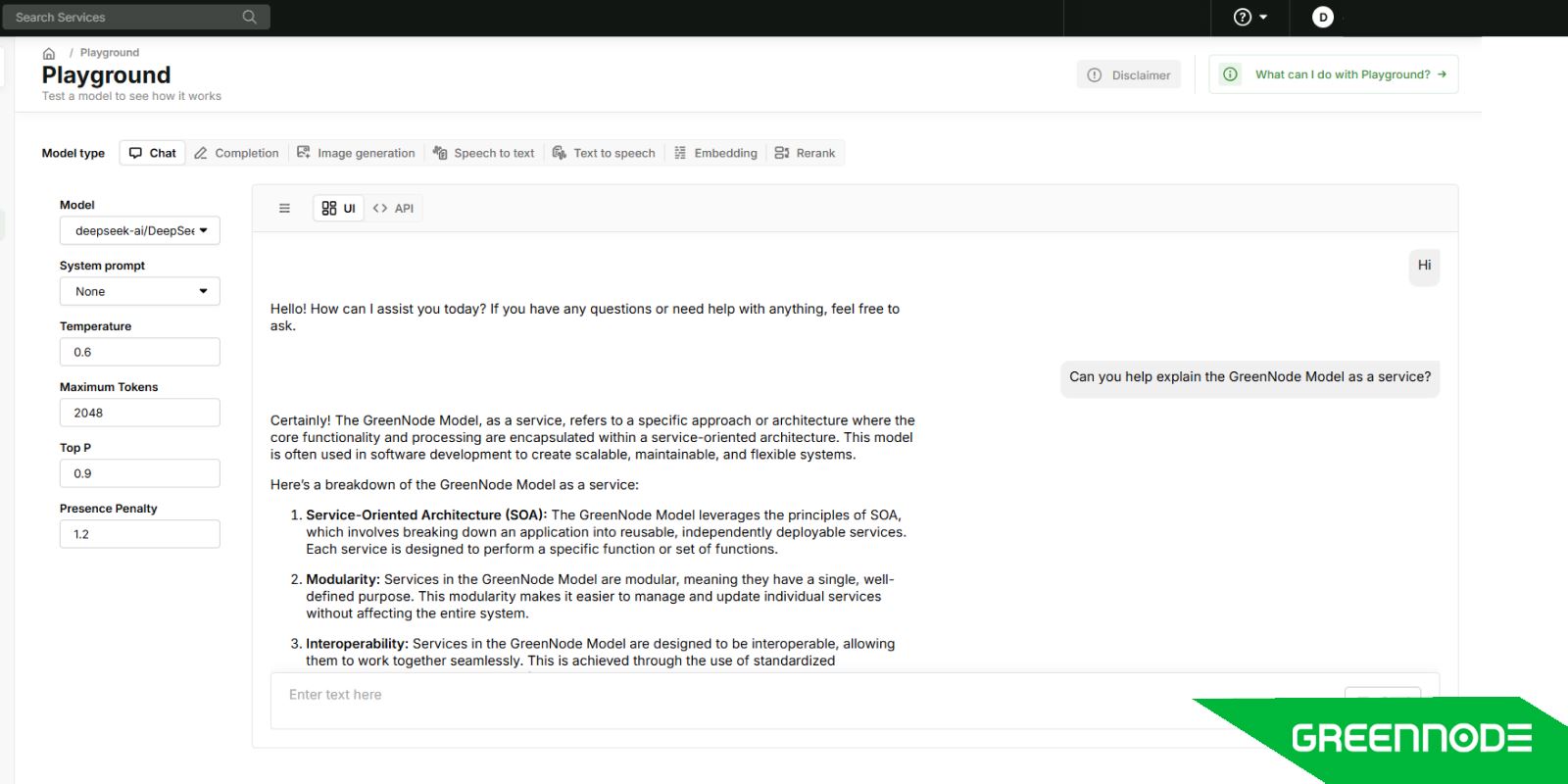

The journey from experimenting with AI models to actually deploying them into production is often filled with friction. Developers and product teams typically bounce between local notebooks, cloud APIs, and infrastructure setup — slowing down innovation. That’s why we built the GreenNode AI Model Playground — a frictionless, zero-setup environment to try out top open-source models, compare results, and instantly prepare for deployment.

With seven distinct playgrounds, you can submit prompts and other inputs and receive live responses from a wide range of models, including chat-based LLMs, text generators, image captioners, speech-to-text models, and more. You can visually inspect outputs and adjust inference settings such as temperature, top-p, and max tokens in real time, all without writing a single line of code.

But the Playground goes beyond just testing. Once you’ve configured a setup that works for your use case, you can instantly export the prompt and configuration into a ready-to-use API payload or SDK snippet, making it effortless to plug into your production pipeline. It bridges the gap between experimentation and real-world application — no infrastructure, no provisioning, just fast iteration.
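To make the "export to API payload" step concrete, here is a minimal sketch of how a saved Playground configuration might map onto a chat request body. The field names mirror the sample request later in this post; the `PlaygroundConfig` class and its `to_payload` method are hypothetical helpers for illustration, not part of a GreenNode SDK.

```python
"""Hypothetical sketch: turn Playground-style settings into a chat request body."""
import json
from dataclasses import dataclass, field


@dataclass
class PlaygroundConfig:
    # Hypothetical container; field names follow the sample request body in this post.
    model: str
    prompt: str
    temperature: float = 0.6
    top_p: float = 0.9
    max_tokens: int = 2048
    extra: dict = field(default_factory=dict)  # other settings, e.g. {"presence_penalty": 1.2}

    def to_payload(self) -> dict:
        """Build a chat-completions style JSON body from the saved settings."""
        payload = {
            "model": self.model,
            "messages": [{"role": "user", "content": self.prompt}],
            "temperature": self.temperature,
            "top_p": self.top_p,
            "max_tokens": self.max_tokens,
        }
        payload.update(self.extra)  # merge any additional sampling settings
        return payload


if __name__ == "__main__":
    cfg = PlaygroundConfig(
        model="deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct",
        prompt="Create a 3-day-a-week workout schedule",
        extra={"presence_penalty": 1.2},
    )
    print(json.dumps(cfg.to_payload(), indent=2))
```

Keeping the settings in one object means the same configuration you tuned interactively can be serialized unchanged into production requests.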

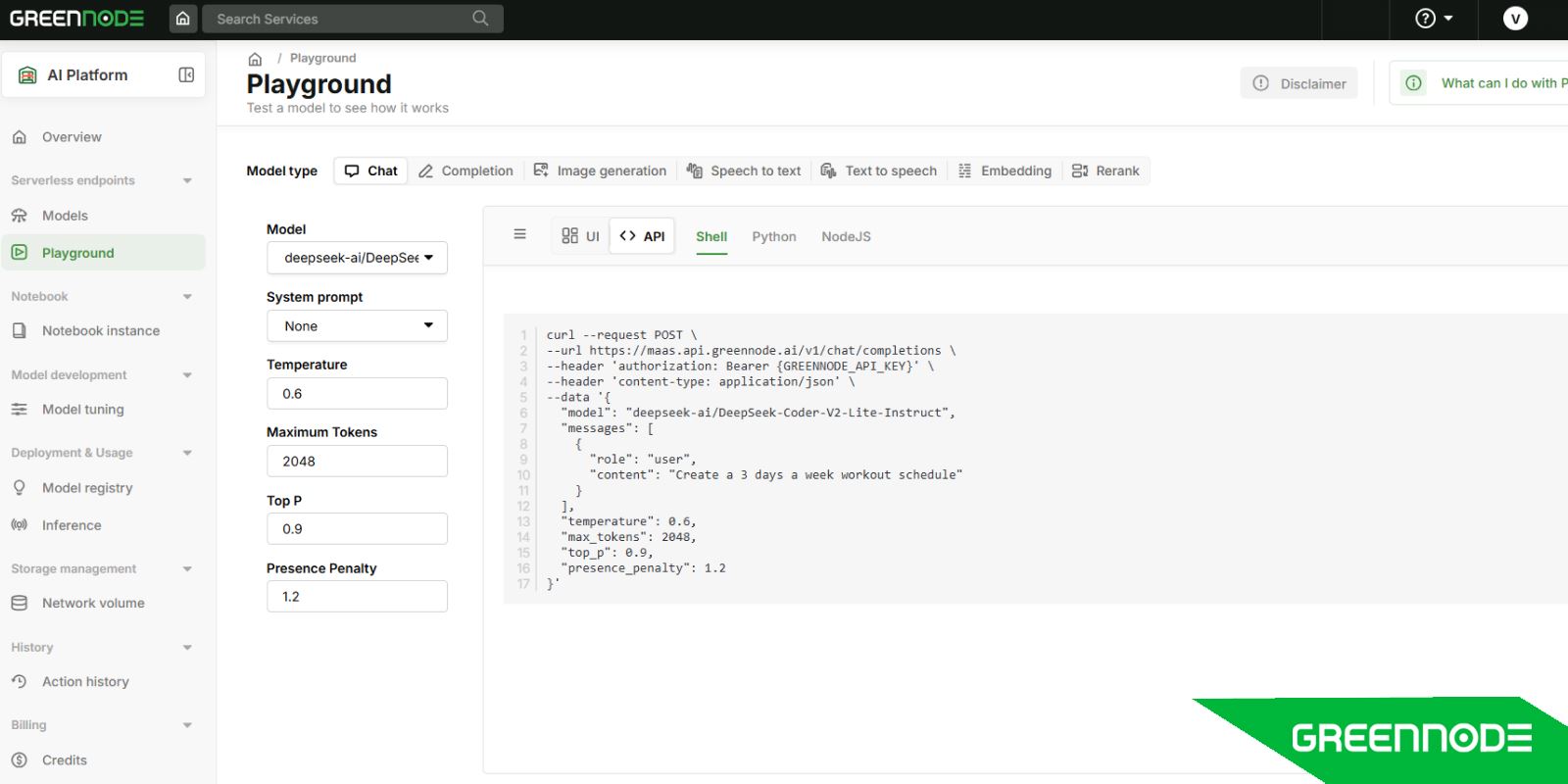

Retrieve and run your AI models easily

Accessing models on GreenNode is seamless. Every model is pre-integrated with the GreenNode runtime, so you can test, evaluate, and deploy directly through the Playground or the standard API endpoints, with ready-made request samples in Shell, Python, and NodeJS. No setup is required.

Whether you're prototyping or moving to production, models can be called over HTTP or integrated using the SDK (currently available for Python only).

Sample API Usage

```shell
# Assumes your API key is exported in the GREENNODE_API_KEY environment variable.
curl --request POST \
  --url https://maas.api.greennode.ai/v1/chat/completions \
  --header "authorization: Bearer $GREENNODE_API_KEY" \
  --header 'content-type: application/json' \
  --data '{
    "model": "deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct",
    "messages": [
      {
        "role": "user",
        "content": "Create a 3-day-a-week workout schedule"
      }
    ],
    "temperature": 0.6,
    "max_tokens": 2048,
    "top_p": 0.9,
    "presence_penalty": 1.2
  }'
```

Get started quickly with full documentation and examples at: GreenNode API Docs
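For teams working in Python without the SDK, the curl request above can be reproduced with the standard library alone. This is a minimal sketch: it reuses the endpoint and headers from the curl sample, assumes the key lives in a `GREENNODE_API_KEY` environment variable, and does not assume any particular response schema beyond "the body is JSON". `build_request` and `send` are hypothetical helper names.

```python
"""Minimal stdlib sketch of the curl request above (helper names are hypothetical)."""
import json
import os
import urllib.request

API_URL = "https://maas.api.greennode.ai/v1/chat/completions"


def build_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Prepare, but do not send, a POST request mirroring the curl example."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    payload = {
        "model": "deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct",
        "messages": [{"role": "user", "content": "Create a 3-day-a-week workout schedule"}],
        "temperature": 0.6,
        "max_tokens": 2048,
        "top_p": 0.9,
        "presence_penalty": 1.2,
    }
    api_key = os.environ.get("GREENNODE_API_KEY")  # assumed variable name
    if api_key:
        print(send(build_request(api_key, payload)))
    else:
        print("Set GREENNODE_API_KEY to send the request.")
```

Separating request construction from sending makes the payload easy to inspect or unit-test before any network call is made.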

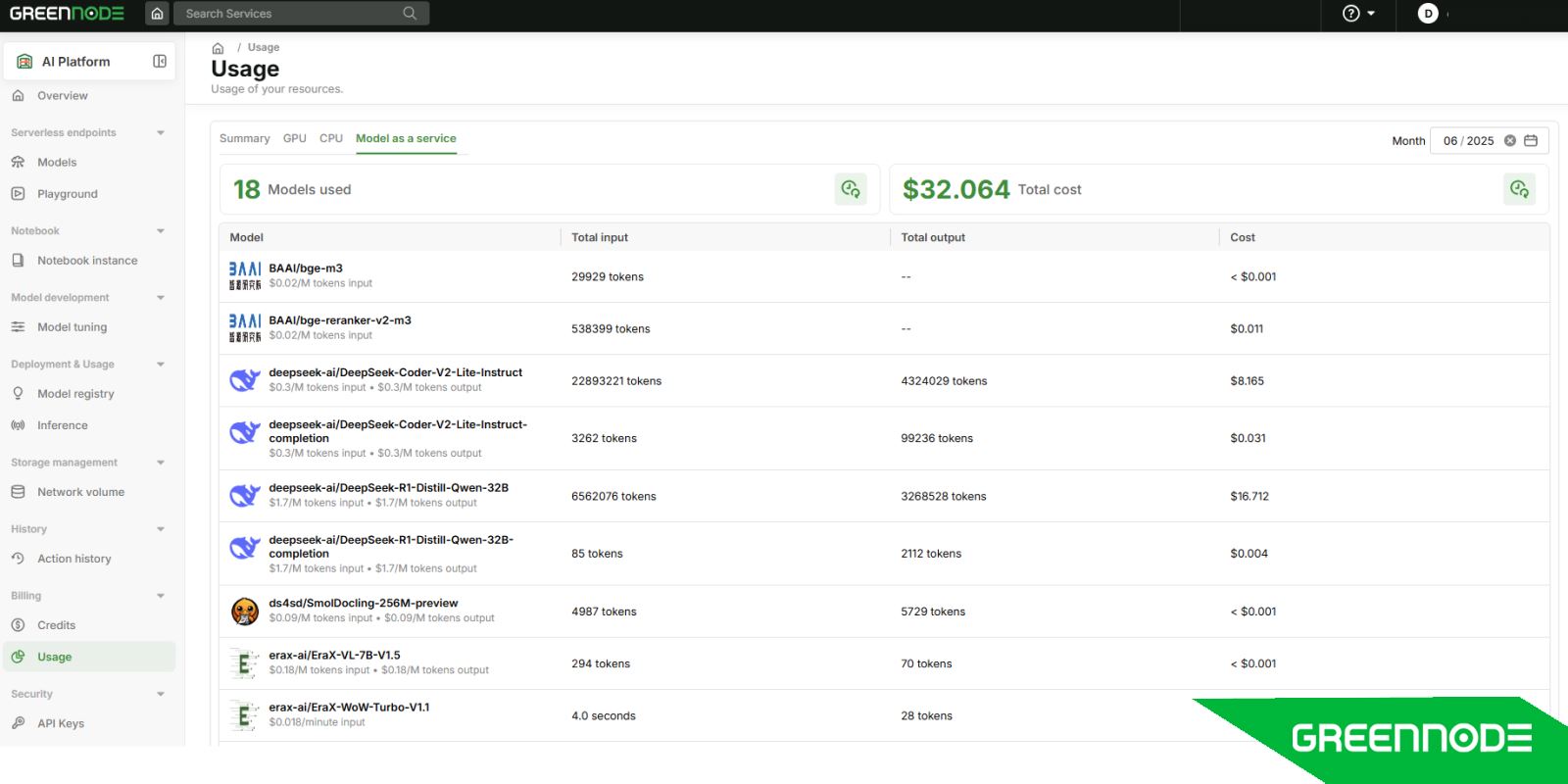

Model tokenization mechanism and billing usage

Tokens are the fundamental units of computation in most transformer-based models. A single token can be as short as one character (e.g., “a”) or as long as a short word (e.g., “chat”). When you send an input like a paragraph or conversation history to a model, it’s first tokenized into chunks, and each chunk represents a unit of work for the model to process. With GreenNode’s Model-as-a-Service (MaaS), we’ve designed a billing model that’s both transparent and developer-friendly, built on a clear tokenization mechanism that applies consistently across all supported token-based models.

GreenNode uses standardized tokenizers based on the model family (e.g., Qwen, DeepSeek, Phi) to ensure accurate and consistent measurement. The total token count for a request includes both:

- Input tokens: The tokens in the prompt or context you send

- Output tokens: The tokens generated by the model in response

Both are counted toward your bill, which is calculated from three values:

- The number of input tokens

- The number of output tokens

- The corresponding model's token price

The platform then calculates your cost automatically and logs the transaction to your real-time usage dashboard, allowing full visibility into token consumption and spend.
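The calculation above boils down to simple arithmetic. The sketch below shows it under stated assumptions: prices are quoted per 1 million tokens, input and output tokens may be priced differently (a model with a single token price would use the same value for both), and the prices in the example are made-up placeholders, not GreenNode rates. The per-minute helper covers the speech models mentioned below and assumes no rounding up to whole minutes.

```python
"""Hypothetical sketch of token-based and per-minute billing arithmetic."""
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    # Assumed pricing unit: currency per 1 million tokens.
    input_per_million: float
    output_per_million: float


def token_cost(input_tokens: int, output_tokens: int, price: TokenPrice) -> float:
    """Cost = input tokens x input price + output tokens x output price."""
    return (
        input_tokens * price.input_per_million
        + output_tokens * price.output_per_million
    ) / 1_000_000


def per_minute_cost(audio_seconds: float, price_per_minute: float) -> float:
    """Per-minute billing for speech models, prorated by the second (assumption)."""
    return audio_seconds / 60 * price_per_minute


if __name__ == "__main__":
    placeholder = TokenPrice(input_per_million=0.20, output_per_million=0.60)  # made-up rates
    print(f"{token_cost(1_200, 800, placeholder):.6f}")
    print(f"{per_minute_cost(90, 0.006):.6f}")
```

Because cost is linear in token counts, trimming either the prompt or the response length reduces spend proportionally.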

For image generation or speech-to-text models, usage is measured per step or per minute, depending on the model’s modality. This ensures fair, model-appropriate billing across use cases. This token-based billing mechanism offers several advantages:

- Predictability: You can estimate a request's cost from its token counts and the model's price before deploying to production

- Transparency: Your billing dashboard clearly breaks down token usage by model, request, and time

- Optimization: Developers can refine prompts or truncate context length to balance performance against cost

- Scalability: Works seamlessly across use cases — from chatbots and summarization to voice transcription or reranking
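One common way to act on the optimization point above is to cap how much conversation history is sent with each request. This is a minimal sketch, not a GreenNode feature: `trim_history` keeps the newest messages that fit a token budget, and `rough_token_count` is a crude placeholder (about 4 characters per token). Real counts depend on the model family's tokenizer, so swap in the appropriate tokenizer for accurate budgeting.

```python
"""Hypothetical sketch: trim chat history to a token budget before sending it."""
from typing import Callable, Dict, List

Message = Dict[str, str]


def rough_token_count(text: str) -> int:
    # Crude placeholder estimate (~4 characters per token); not a real tokenizer.
    return max(1, len(text) // 4)


def trim_history(
    messages: List[Message],
    budget: int,
    count_tokens: Callable[[str], int] = rough_token_count,
) -> List[Message]:
    """Keep the newest messages whose combined estimated tokens fit within `budget`."""
    kept: List[Message] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(msg["content"])
        if used + cost > budget:
            break  # stop at the first message that would exceed the budget
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [{"role": "user", "content": f"message {i} " * 10} for i in range(20)]
    print(len(trim_history(history, budget=100)))
```

Dropping the oldest turns first keeps the most recent context, which is usually what a chat model needs to answer the latest message.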

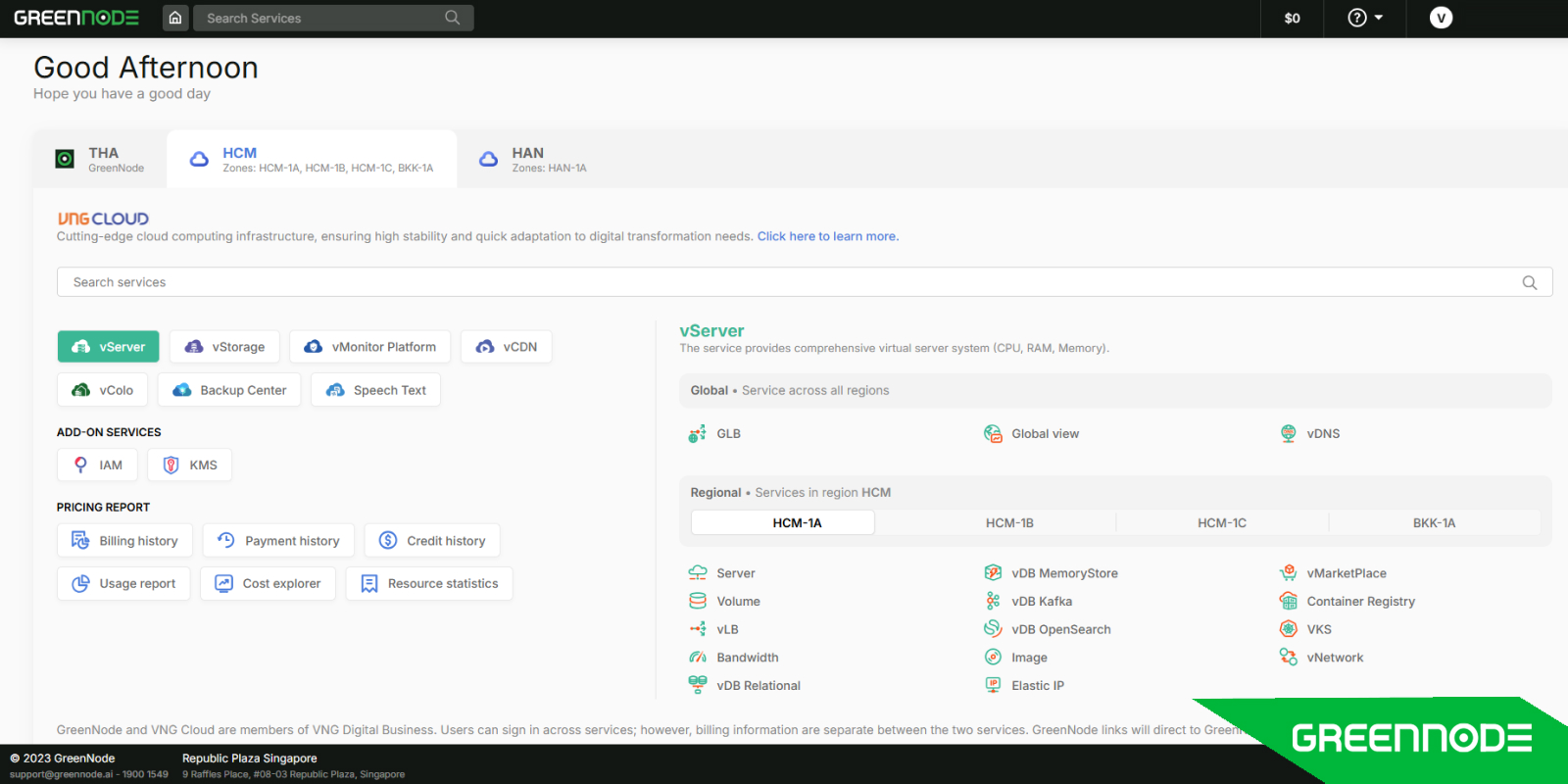

Local cloud services available in the GreenNode unified portal

GreenNode is excited to announce a deeper integration with VNG Cloud, our sister company under the VNG Digital Business ecosystem — designed to bring powerful, regionally-optimized infrastructure directly into your AI development workflow.

With this release, users can now seamlessly access VNG Cloud’s suite of local cloud services right within the GreenNode portal. From provisioning compute instances (vServer), managing data with vStorage, setting up Backup, to leveraging other infrastructure solutions — everything is now unified under one interface, hosted in Vietnam and Thailand for low-latency and compliance-ready performance.

Furthermore, to streamline the user experience, GreenNode now supports Single Sign-On (SSO) via VNG Cloud accounts, allowing developers and IT teams to authenticate once and move between AI and cloud environments with a single identity. The result is simpler account management, unified access control, and better team coordination, especially for organizations scaling AI and cloud-native workloads.

⚠️ Note: VNG Cloud services are currently available only in the Vietnam and Thailand regions. While users can sign in to both GreenNode and VNG Cloud with SSO, billing and usage information are still managed separately for the two services.

Other Platform Enhancements Powered by GreenNode

To reduce latency and improve container loading speed for regional users, GreenNode has successfully migrated its container registry hub from Vietnam to Thailand.

This change enhances the performance of model deployments and image pulls across Southeast Asia, minimizing cold start delays and ensuring faster environment provisioning, especially for teams using custom models or Docker-based AI workflows. The move reflects our ongoing commitment to infrastructure localization and latency-sensitive deployment.


Final Verdict

With the introduction of Model-as-a-Service, integrated SSO, and access to local VNG Cloud services, this release marks a major step forward in delivering a seamless AI development experience tailored for modern enterprises in Southeast Asia.

Looking ahead, GreenNode will continue to expand its model catalog and introduce advanced comparison tools — making it even easier for users to evaluate, choose, and operationalize the right AI models. Start your AI journey with GreenNode TODAY!