Key Trends Driving the Next Wave of Enterprise GPU Adoption

GPU-accelerated computing in the enterprise is on the brink of significant expansion. One trend gaining momentum is generative AI, which can produce human-like text and a wide range of images. Much of this interest is driven by transformer models, which are bringing AI into everyday applications ranging from conversational interfaces to the generation of complex protein structures.

Visualization and 3D computing are also drawing attention, especially in fields like industrial simulation and collaboration. GPUs are poised to make data analytics and machine learning faster and more cost-effective, particularly in core applications like Apache Spark. In addition, AI inference deployments are growing rapidly as smart spaces and industrial automation expand, making GPUs increasingly important in this domain.

To meet these computational demands, a new wave of computing technologies is emerging. It includes new GPU architectures from NVIDIA alongside the latest CPUs from AMD, Intel, and NVIDIA. Global system manufacturers have integrated these technologies into computing platforms built to handle a broad range of accelerated computing workloads.

These systems are certified by NVIDIA for performance, reliability, and scalability in enterprise solutions, and they are available for purchase today. In this blog post, we take a closer look at these technologies and at how enterprises can put them to use.

Optimal Performance for AI and Large Language Models (LLMs)

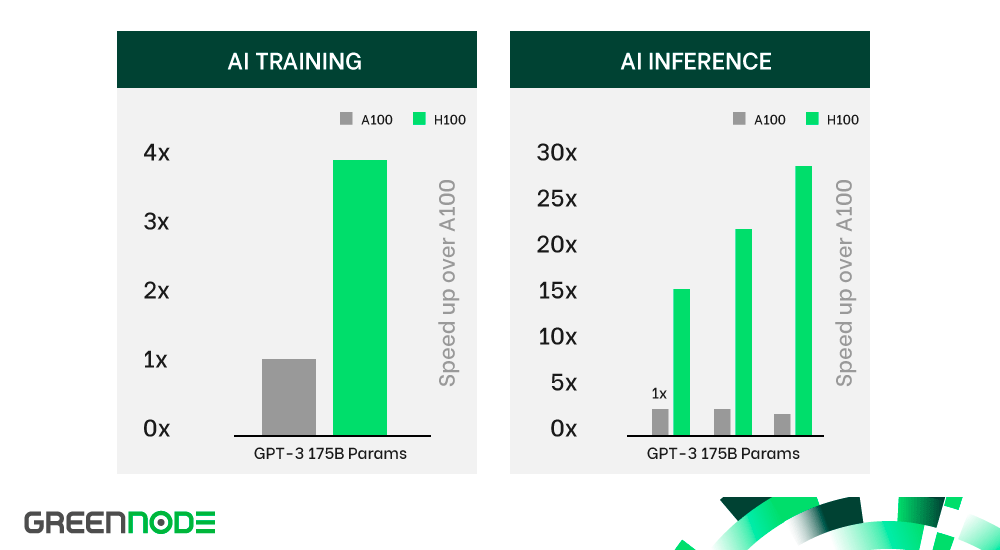

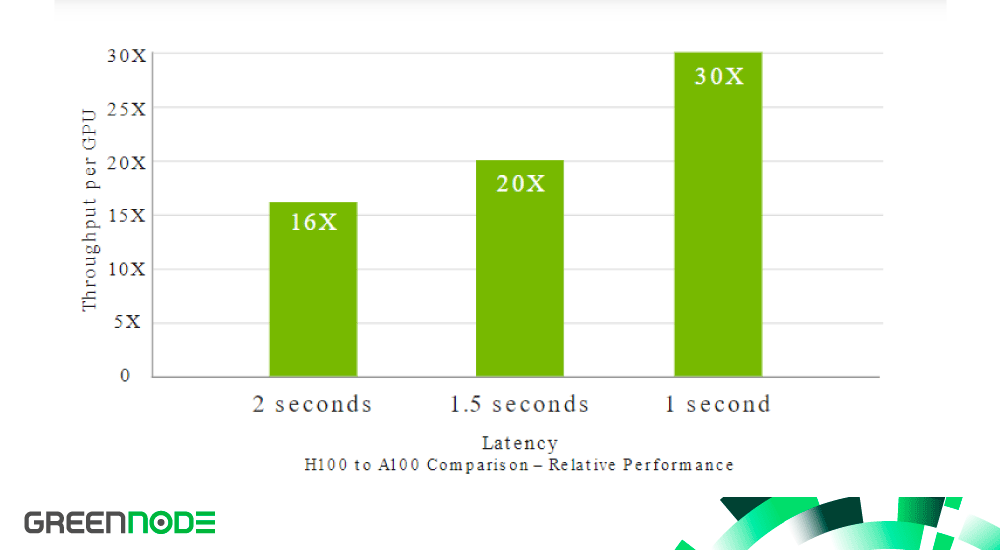

NVIDIA HGX H100 servers are optimized for large language model training and inference. Compared with systems built on the previous-generation NVIDIA A100 Tensor Core GPUs, they deliver up to 4 times faster AI training and up to 30 times faster AI inference.

The latest servers combine powerful GPUs and CPUs to deliver strong performance for both Artificial Intelligence (AI) and High-Performance Computing (HPC) applications. Here is a breakdown of their capabilities:

- 4-way H100 GPUs: Configurations with 4 H100 GPUs collectively deliver up to 268 TFLOPs (TeraFLOPs) of FP64 (double precision) performance.

- 8-way H100 GPUs: For more intensive workloads, configurations with 8 H100 GPUs collectively provide up to 31,664 TFLOPs of FP8 (8-bit floating point) performance.

- NVIDIA SHARP In-Network Compute: NVIDIA SHARP provides 3.6 TFLOPs of FP16 (half precision) in-network compute, which offloads reduction operations into the network fabric.

- Fourth-generation NVLink: Fourth-generation NVLink gives these servers up to 3 times faster all-reduce communications, speeding up data exchange between GPUs.

- PCIe Gen5 End-to-End Connectivity: The servers utilize PCIe Gen5 technology for end-to-end connectivity, ensuring higher data transfer rates between the CPU, GPU, and network components. This results in seamless and rapid data flow across the system.

- Exceptional Memory Bandwidth: Each GPU in these servers boasts an impressive memory bandwidth of 3.35 TB/s, facilitating swift access to data and enhancing overall system responsiveness.
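The aggregate figures in the list above are easier to compare once they are broken down per GPU. The short sketch below does that arithmetic using only the numbers quoted in this section; the helper names are illustrative, and the results are peak values, not measured throughput.

```python
# Peak figures quoted in the list above.
HGX_4WAY_FP64_TFLOPS = 268      # 4-way configuration, FP64
HGX_8WAY_FP8_TFLOPS = 31_664    # 8-way configuration, FP8
MEM_BW_PER_GPU_TBS = 3.35       # memory bandwidth per GPU, TB/s


def per_gpu(aggregate: float, gpu_count: int) -> float:
    """Split an aggregate peak figure evenly across the GPUs in a server."""
    return aggregate / gpu_count


fp64_per_gpu = per_gpu(HGX_4WAY_FP64_TFLOPS, 4)   # 67.0 TFLOPS
fp8_per_gpu = per_gpu(HGX_8WAY_FP8_TFLOPS, 8)     # 3958.0 TFLOPS
aggregate_bw_8way = MEM_BW_PER_GPU_TBS * 8        # about 26.8 TB/s

print(f"FP64 per GPU: {fp64_per_gpu:.0f} TFLOPS")
print(f"FP8 per GPU:  {fp8_per_gpu:.0f} TFLOPS")
print(f"8-way aggregate memory bandwidth: {aggregate_bw_8way:.1f} TB/s")
```

The FP8 result lines up with the per-GPU peak NVIDIA publishes with sparsity enabled, so it is best read as a best-case number.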

During the NVIDIA GTC 2023 keynote, NVIDIA unveiled the NVIDIA H100 NVL, a PCIe product with dual NVLink connections and 94 GB of HBM3 memory. Designed specifically for large language models, it delivers up to 12 times the performance of the NVIDIA HGX A100 on GPT-3 workloads.

For added connectivity and scalability, an NVLink bridge is available as an option. The bridge connects two GPUs at a bandwidth of 600 GB/s, nearly five times that of PCIe Gen5, which makes GPU-to-GPU data transfer considerably more efficient.
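To see where "nearly five times" comes from, the sketch below compares the 600 GB/s NVLink bridge figure with a PCIe Gen5 x16 link. The 128 GB/s PCIe value is an assumption not stated in the text (the commonly quoted bidirectional peak for an x16 slot), and the transfer times are idealized peak-rate estimates for a 94 GB payload, the H100 NVL memory size mentioned above.

```python
NVLINK_BRIDGE_GB_S = 600   # from the text
PCIE_GEN5_X16_GB_S = 128   # assumption: bidirectional peak of a Gen5 x16 link


def bandwidth_ratio(fast_gb_s: float, slow_gb_s: float) -> float:
    """How many times faster the first link is than the second."""
    return fast_gb_s / slow_gb_s


def transfer_seconds(size_gb: float, bandwidth_gb_s: float) -> float:
    """Idealized time to move size_gb at a sustained peak bandwidth."""
    return size_gb / bandwidth_gb_s


ratio = bandwidth_ratio(NVLINK_BRIDGE_GB_S, PCIE_GEN5_X16_GB_S)
nvlink_time = transfer_seconds(94, NVLINK_BRIDGE_GB_S)
pcie_time = transfer_seconds(94, PCIE_GEN5_X16_GB_S)

print(f"NVLink bridge vs PCIe Gen5 x16: {ratio:.2f}x")
print(f"Moving 94 GB: {nvlink_time:.3f} s over NVLink, {pcie_time:.3f} s over PCIe")
```

Real transfers will be slower than either estimate because of protocol overhead and contention, but the ratio between the two links is what matters here.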

The NVIDIA H100 PCIe GPU is designed for mainstream accelerated servers that fit into standard racks and draw less power per server. It works well for applications that use one to four GPUs at a time, including AI inference and High-Performance Computing (HPC).

NVIDIA's partners have already begun shipping NVIDIA-Certified servers featuring the H100 PCIe GPU, so the technology is available to enterprises today. GreenNode, an NVIDIA partner, is among the first providers to offer the H100 PCIe GPU. Later this year, NVIDIA-Certified systems equipped with the NVIDIA H100 PCIe and NVIDIA HGX H100 are expected to further expand the options for running the latest AI and HPC applications with higher performance and scalability, giving enterprises in Vietnam access to a significant step up in computing capability.

Accelerated High-Performance Computing with H100

The H100 extends NVIDIA's lead in inference, accelerating inference by up to 30 times while delivering the lowest latency. Its fourth-generation Tensor Cores speed up all precisions, including FP64, TF32, FP32, FP16, INT8, and now FP8, which reduces memory usage and increases performance while maintaining accuracy for Large Language Models (LLMs). The H100 also introduces AI capabilities that strengthen the combination of High-Performance Computing (HPC) and AI, helping researchers who are tackling global challenges reach results sooner.

The H100 triples the Floating-Point Operations Per Second (FLOPS) of its double-precision Tensor Cores relative to the previous generation, delivering 60 teraflops of FP64 computing power for HPC. AI-integrated HPC applications can also use the H100's TF32 precision to reach one petaflop of throughput for single-precision matrix-multiply operations without any code modifications.
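TF32 can stand in for FP32 without code changes because it keeps FP32's 8-bit exponent (the same numeric range) and shortens the mantissa from 23 bits to 10. The sketch below emulates that loss of precision on the CPU by clearing the low 13 mantissa bits of an FP32 value. It is a simplification for illustration only: the function name is hypothetical, and real Tensor Core hardware may round differently.

```python
import struct


def truncate_to_tf32(x: float) -> float:
    """Approximate TF32 precision by zeroing the low 13 mantissa bits of FP32."""
    # Reinterpret the FP32 encoding of x as a 32-bit unsigned integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Keep sign (1 bit), exponent (8 bits), and the top 10 mantissa bits.
    bits &= 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# 2**-10 is the smallest step above 1.0 that survives; 2**-11 is dropped.
print(truncate_to_tf32(1.0 + 2**-10))
print(truncate_to_tf32(1.0 + 2**-11))
```

Values keep their magnitude and about three decimal digits of precision, which is often enough for the matrix multiplies inside AI workloads.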

The H100 also introduces new DPX instructions, which deliver up to 7 times the performance of the A100 and up to 40 times the performance of CPUs on dynamic programming algorithms such as Smith-Waterman for DNA sequence alignment and protein alignment for protein structure prediction.
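For readers unfamiliar with Smith-Waterman, the sketch below is a minimal CPU reference version that returns only the best local-alignment score. The scoring values (match 2, mismatch -1, linear gap -2) are illustrative assumptions, and this is not NVIDIA's implementation. The inner loop is the kind of add-then-max recurrence that dynamic programming algorithms repeat for every cell of the table.

```python
def smith_waterman_score(a: str, b: str, match: int = 2,
                         mismatch: int = -1, gap: int = -2) -> int:
    """Best local-alignment score between sequences a and b (linear gap penalty)."""
    prev = [0] * (len(b) + 1)   # previous row of the DP table
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            curr[j] = max(
                0,                   # start a new local alignment
                prev[j - 1] + sub,   # align a[i-1] with b[j-1]
                prev[j] + gap,       # gap in b
                curr[j - 1] + gap,   # gap in a
            )
            best = max(best, curr[j])
        prev = curr
    return best


# The shared "ACGT" core scores 4 matches x 2 = 8.
print(smith_waterman_score("GGACGTCC", "TTACGTAA"))
```

Each cell depends only on its three neighbors, so cells along an anti-diagonal can be computed in parallel, which is what makes the algorithm a good fit for GPUs.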

In AI application development, data analytics often consumes a significant amount of time. Because large datasets are scattered across multiple servers, scale-out solutions built on commodity CPU-only servers are held back by limited scalability of computing performance.

Accelerated servers with the H100 address this challenge by combining substantial computing power with more than 3 terabytes per second (TB/s) of memory bandwidth per GPU and scalability through NVLink and NVSwitch. This lets them run data analytics at high performance and scale to massive datasets. Combined with NVIDIA Quantum-2 InfiniBand, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, the NVIDIA data center platform is built to accelerate these large workloads with high performance and efficiency.
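As a rough idea of what GPU-accelerated Spark looks like in practice, the sketch below builds the settings typically used to enable the RAPIDS Accelerator plugin and turns them into `spark-submit` arguments. The helper names and the resource values are illustrative assumptions; it also assumes the RAPIDS Accelerator jar is already on the cluster's classpath, and a real deployment needs tuning beyond these four keys.

```python
def rapids_spark_conf(gpus_per_executor: int = 1, tasks_per_gpu: int = 4) -> dict:
    """Minimal Spark settings for the RAPIDS Accelerator (values are examples)."""
    return {
        # Load the RAPIDS Accelerator SQL plugin.
        "spark.plugins": "com.nvidia.spark.SQLPlugin",
        # Allow supported SQL and DataFrame operations to run on the GPU.
        "spark.rapids.sql.enabled": "true",
        # Spark 3 resource scheduling: GPUs per executor and share per task.
        "spark.executor.resource.gpu.amount": str(gpus_per_executor),
        "spark.task.resource.gpu.amount": str(gpus_per_executor / tasks_per_gpu),
    }


def to_submit_args(conf: dict) -> list:
    """Render a settings dict as spark-submit --conf arguments."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


print(" ".join(to_submit_args(rapids_spark_conf())))
```

Because the plugin works at the configuration level, existing Spark SQL and DataFrame jobs can usually be tried on GPUs without rewriting application code.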

Final thoughts

The evolution of GPU-accelerated computing, and in particular the introduction of the H100, marks a pivotal moment for artificial intelligence and high-performance computing. These advances improve the efficiency and scalability of data analytics and accelerate the development of large language models and other AI applications.

The H100 opens a new era of computational capability in which tasks that once consumed significant time and resources can be completed far more quickly. Going forward, these advances open new horizons for scientific research and give businesses powerful tools for addressing real-world challenges.