By now, most of us in the AI world agree: generic, English-only models don’t cut it anymore.

Real-world voice data is messy, multilingual, and emotionally charged. If you’ve ever tried transcribing customer support calls in Thai or deciphering Vietnamese with regional accents, you know exactly what I mean. Both languages are tonal and vary widely from region to region, and that dialect diversity is a real barrier for any AI practitioner.

PopTech, a forward-leaning tech company in Asia, decided to stop waiting for global models to catch up—and started building their own.

This is the story of how their team is fine-tuning Whisper Large V3 (roughly 1.55B parameters) to make voice AI work for Southeast Asia, using GreenNode as their infrastructure partner.

The Problem: Call Data Is an Untapped Goldmine—Until It’s Not

PopTech works across banking, energy, and insurance. In each case, they faced a similar issue: call centers were overflowing with rich but unused voice data.

The dream?

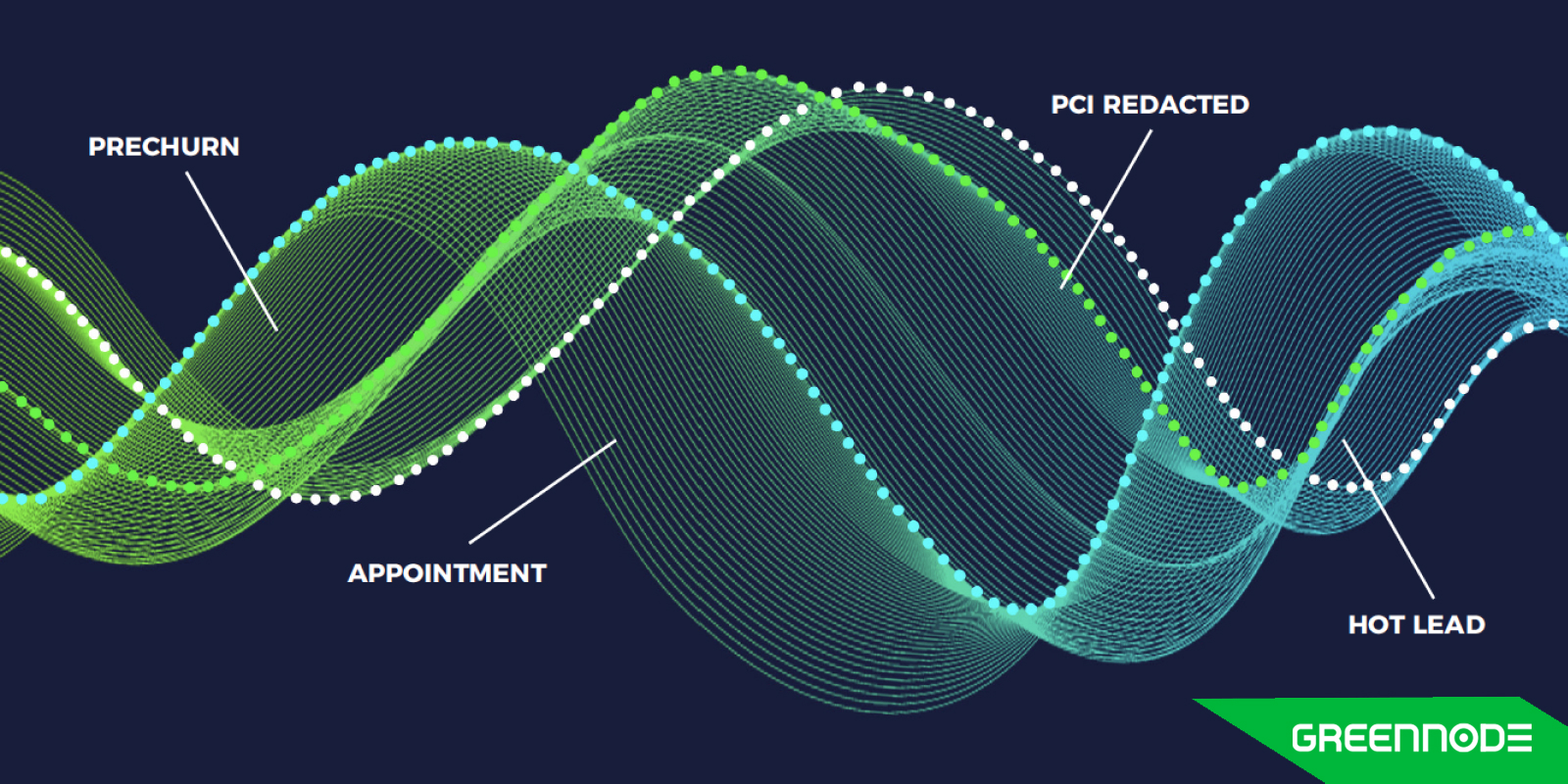

→ Transcribe voice calls with high accuracy

→ Analyze customer sentiment and agent quality

→ Do it in near real-time, across multiple languages (but first Vietnamese and Thai)

The reality?

→ No off-the-shelf model performed well on Thai or Vietnamese

→ Regional accents reduce accuracy

→ GPU bills from big cloud providers weren’t sustainable in the long run

The solution wasn’t just about a better model. It required:

- Infrastructure that scales but doesn’t break the budget

- A fine-tuning loop tailored to their data and goals

- A team willing to get their hands dirty and do the hard work

The Experiment: Fine-Tuning Whisper on GreenNode

PopTech chose OpenAI’s Whisper Large V3 (roughly 1.55B parameters) as their base model, primarily because it is open source and has strong multilingual support.

They kicked off a fine-tuning pipeline using voice data from an insurance company’s customer support centers in Thailand and Vietnam. Insurance is a complex financial product that depends heavily on human interaction, so continuously evaluating and improving customer care is essential for staying competitive.

The goal was clear: improve transcription accuracy for local dialects and extract richer metadata, such as sentiment and compliance signals, on top of an open-source voice model (Whisper, in this case). To make that happen, they needed GPU compute, plus a platform that freed them from manual setup.

Here’s where GreenNode came in.

Instead of wrangling credits from different hyperscalers, as many startups do, they knew exactly what they needed and asked for it clearly:

- A40 instances for dev/test workloads (handling 5 concurrent voice streams efficiently)

- H100 and A100 instances for a large production environment (able to handle 4,000+ calls per day)
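To make the sizing trade-off concrete, here is a minimal back-of-the-envelope sketch based on Little’s law (average concurrency = arrival rate × average duration). The 4,000 calls per day and the 5 streams per GPU come from the figures above, but note that the 5 streams were measured on A40 dev/test instances. The average call length, the length of the business day, and the peak factor are hypothetical assumptions, not PopTech’s numbers, and the function names are illustrative.

```python
import math


def concurrent_streams(calls_per_day: float, avg_call_minutes: float,
                       active_hours: float, peak_factor: float = 1.0) -> float:
    """Estimate concurrent voice streams with Little's law: L = lambda * W."""
    # Arrival rate in calls per minute, assuming (hypothetically) that calls
    # are spread evenly over the active business hours of the day.
    calls_per_minute = calls_per_day / (active_hours * 60)
    # Average concurrency, scaled by an assumed peak-to-average factor.
    return calls_per_minute * avg_call_minutes * peak_factor


def required_gpus(calls_per_day: float, avg_call_minutes: float,
                  active_hours: float, peak_factor: float,
                  streams_per_gpu: int) -> int:
    """Round up to whole GPUs (no MIG slicing assumed)."""
    streams = concurrent_streams(calls_per_day, avg_call_minutes,
                                 active_hours, peak_factor)
    return math.ceil(streams / streams_per_gpu)


if __name__ == "__main__":
    # Hypothetical inputs: 5-minute calls, an 8-hour day, 2x peak load,
    # and the A40 dev/test figure of 5 streams per GPU.
    print(required_gpus(4000, 5, 8, 2.0, 5))
```

With these assumed inputs the script prints 17. A card that sustains more streams per GPU would shrink that number, which is exactly the kind of question worth settling before provisioning.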

“GreenNode’s deep understanding of GPU sizing saved us from overprovisioning and unnecessary cost. Setting up our environment felt just like using Docker—intuitive, fast, and familiar. Deploying a notebook took only minutes, mounting data via volumes was seamless, and the local support team was responsive and helpful. No more waiting 24 hours for a vague Zendesk response.”

— Quan, AI Engineer, PopTech

What's Next: From Demo to Deployment

The fine-tuned models are now in the final stages of review, with teams in Thailand and Vietnam validating outputs against local dialects. PopTech is also exploring how to push this into production pipelines where calls can be transcribed and scored in near real-time.
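Validating a fine-tuned speech model usually comes down to error rates measured against human reference transcripts. The article does not say which metric PopTech uses. A common choice is word error rate (WER), and for Thai, which is written without spaces between words, character error rate (CER) is often preferred. The sketch below computes both from a plain Levenshtein edit distance; the function names and the normalization choices (Unicode NFC, lowercasing, ignoring whitespace for CER) are illustrative assumptions.

```python
import unicodedata
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance: substitutions + deletions + insertions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion: ref token missing from hyp
                curr[j - 1] + 1,         # insertion: extra token in hyp
                prev[j - 1] + (r != h),  # substitution, or free match if equal
            )
        prev = curr
    return prev[-1]


def normalize(text: str) -> str:
    # NFC so Vietnamese diacritics compare equal whether precomposed or not.
    return unicodedata.normalize("NFC", text).lower()


def wer(reference: str, hypothesis: str) -> float:
    ref_words = normalize(reference).split()
    if not ref_words:
        raise ValueError("reference transcript is empty")
    hyp_words = normalize(hypothesis).split()
    return edit_distance(ref_words, hyp_words) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    # Drop whitespace so word-segmentation differences (common in Thai) don't count.
    ref_chars = list("".join(normalize(reference).split()))
    if not ref_chars:
        raise ValueError("reference transcript is empty")
    hyp_chars = list("".join(normalize(hypothesis).split()))
    return edit_distance(ref_chars, hyp_chars) / len(ref_chars)


if __name__ == "__main__":
    # A missing diacritic ("chao" vs "chào") is one wrong word out of four.
    print(wer("xin chào các bạn", "xin chao các bạn"))
```

Whatever metric is used, comparisons between the base and fine-tuned models are only meaningful if both are scored against the same references with the same normalization.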

In the long run, PopTech plans to turn this voice data into analytics that help evaluate and improve product quality and shape the development of future financial services.

To get there, the development team wants more robust infrastructure and a more stable, optimized processing pipeline. Their wishlist includes:

- GPU slicing (MIG) to optimize for lightweight workloads

- Slack/Telegram notifications for when resources are ready

- Pre-built fine-tuning pipelines for voice tasks

This level of ambition shows one thing clearly: Southeast Asia isn’t waiting around for Big Tech to localize its AI. They’re building it themselves—with the right tools.

Why This Use Case Matters (Especially in 2025)

This isn’t just about PopTech. It’s about a shift happening across the AI world. We have moved past the first phase of simply training models. Now it’s the “inference era,” where the key question is what business outcomes a running model actually delivers, and the answers are getting clearer by the day.

- Local-language AI is no longer optional—it’s essential

- Open-source + local compute is beating out the old “just use an API” model

- Engineers want fine-grained control, visibility, and customization

- Companies need infrastructure that feels like their own, not just a black box on a cloud invoice

If you're an LLM engineer working on speech, or you're struggling to make multilingual models actually work for your region or domain, this use case should hit home.

Want to Try It Yourself?

GreenNode is designed for AI builders—not just researchers, but engineers shipping real products to production.

Whether you’re fine-tuning Whisper, LLaMA, or experimenting with open models from Hugging Face, GreenNode gives you:

- Full GPU control (H100, L40S, RTX 4090)

- Easy onboarding through a fully self-serve portal, even for teams without dedicated DevOps staff

- Transparent pricing & flexible payments (especially if you’re in APAC). View our pricing here: https://greennode.ai/pricing