Every modern analytics and AI project starts with one question: how do I get the data in?

Before insights can be generated or models can be trained, data has to be collected, transported, and delivered to the right place in a clean, structured, ready-to-use form. That process is called data ingestion, and it forms the backbone of every data pipeline. It is also a frequent pain point: according to a figure attributed to Databricks, 80% of teams running cloud data warehouses identify ingestion as the most common bottleneck in scaling analytics workloads.

In this article, we’ll unpack what data ingestion really means: its definition, types, and core processes, and highlight the top tools used by data teams today.

What Is Data Ingestion?

According to IBM, data ingestion is the process of collecting and importing data from various sources into a centralized storage or processing system, such as a data warehouse, data lake, or real-time analytics engine. It is the first stage of the data pipeline, ensuring that information, whether structured, semi-structured, or unstructured, is made available for downstream tasks like transformation, analytics, and machine learning.

In simpler terms, data ingestion is how raw data travels from where it’s created (applications, IoT devices, APIs, sensors, logs, or external feeds) to where it’s analyzed. Without it, data scientists, analysts, and business tools would have nothing to work with.

The core purpose of data ingestion is to create a reliable and automated flow of data across the organization. This enables:

- Timely access to information for decision-making.

- Consistency between systems and departments.

- Scalability as data sources grow in number and volume.

- Accuracy by ensuring the same version of truth feeds analytics and AI models.

Why Data Ingestion Matters for Modern Data Architecture

Data is being generated everywhere, from customer interactions and connected devices to internal systems and cloud services. Effective data ingestion serves as the bridge between raw data and actionable insight, making it a cornerstone of modern data architecture. Here’s why it matters:

Centralization and Accessibility: Data ingestion allows organizations to unify disparate data sources, from CRM systems and SaaS apps to IoT devices and on-prem databases, into a single, centralized repository. This creates a unified view of business operations and eliminates data silos that often lead to duplicated or conflicting insights.

Real-Time Insights and Responsiveness: With the rise of streaming ingestion, businesses can now act on data the moment it’s generated. Real-time ingestion supports use cases like fraud detection, predictive maintenance, and personalized customer experiences, where milliseconds can make the difference between success and missed opportunity.

Foundation for Analytics, AI, and Compliance: Every dashboard, model, or AI recommendation depends on reliable data ingestion. It ensures that analytics tools always pull fresh, consistent, and governed data, which is critical for maintaining accuracy and compliance with data regulations like GDPR or PDPD.

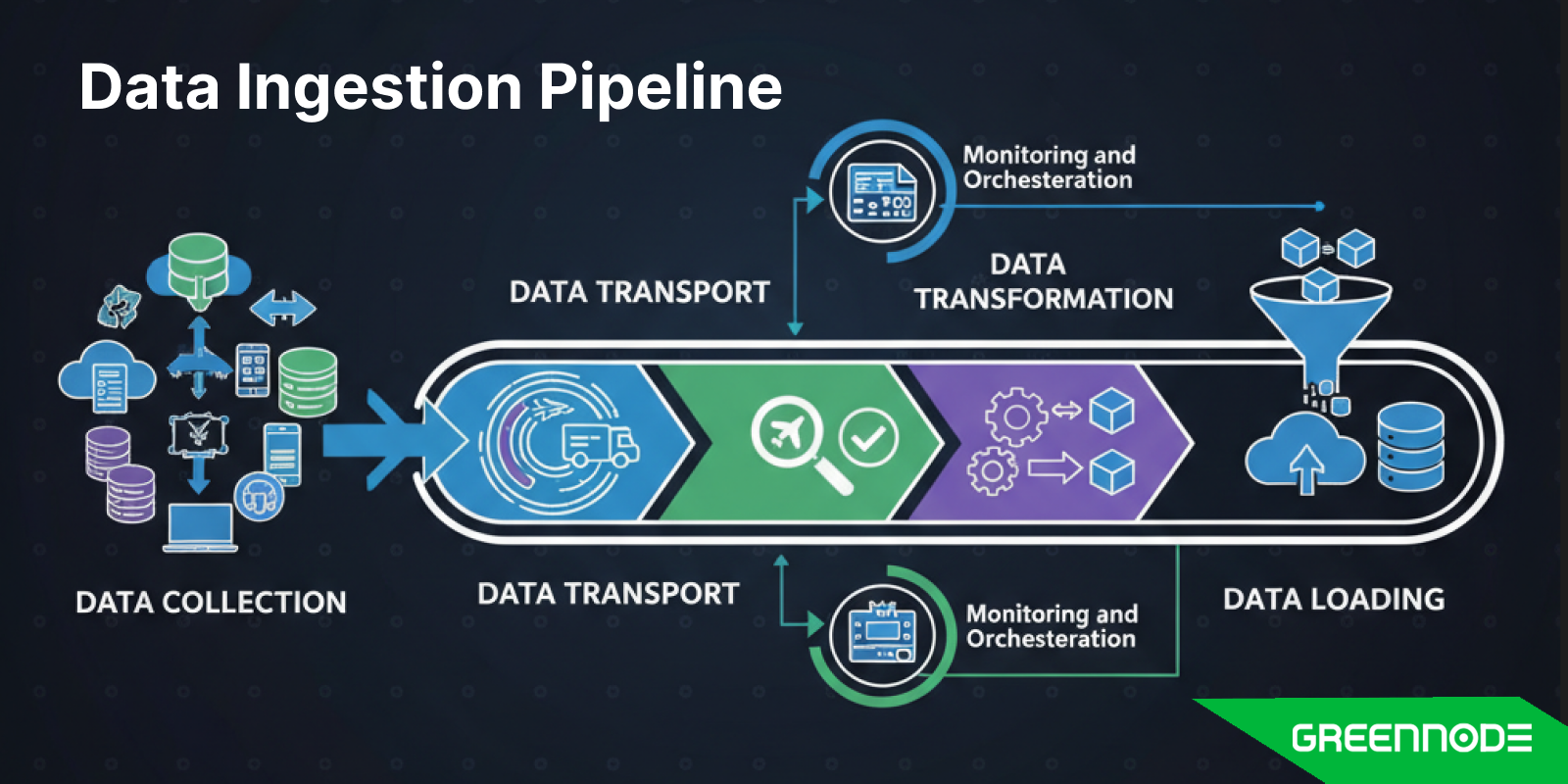

Data Ingestion Pipeline

A data ingestion pipeline is the foundation of any modern data architecture. It’s the structured flow that moves information from where it’s created, such as applications, sensors, or databases, to where it’s analyzed, often in a data warehouse, data lake, or real-time analytics system. A well-designed ingestion pipeline ensures that data reaches its destination quickly, securely, and in a usable format, forming the first step toward meaningful analytics and AI.

Key stages of a modern data ingestion pipeline include:

- Data Collection

- Data Transport

- Data Validation

- Data Transformation

- Data Loading

- Monitoring and Orchestration

Now, let’s dive deeper into each stage of the data ingestion pipeline.

Data Collection: This is the first step of the pipeline, where information is gathered from a wide range of sources including APIs, SaaS platforms, IoT devices, log files, or traditional databases. Depending on business requirements, data can be ingested in batches at scheduled intervals or streamed continuously in real time. Batch ingestion is often used for large but infrequent updates, such as daily sales or weekly performance reports, while streaming ingestion handles data that needs immediate attention, like financial transactions, IoT sensor readings, or user activity logs. The goal at this stage is to establish consistent, scalable connections to all data sources so that no valuable information is missed.

Data Transport: Once the data is collected, it moves into the transport stage, where it is transferred from its source to an ingestion layer or staging environment. This phase often relies on message brokers and data movement tools to ensure data flows continuously and securely. Reliability, speed, and fault tolerance are crucial here because the system must handle network interruptions, retries, and encryption without losing any records. In essence, this stage acts as the high-speed highway connecting raw data to the systems that will eventually make sense of it.
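
To make the retry behaviour concrete, here is a minimal sketch of delivering one record with exponential backoff. It is an illustration rather than a production transport layer: `send_with_retry`, `flaky_send`, and the delay values are hypothetical names and numbers chosen for the example, and a real pipeline would usually lean on the retry features of its message broker or client library.

```python
import time

def send_with_retry(send, record, max_attempts=4, base_delay=0.01, sleep=time.sleep):
    """Try to deliver one record, backing off exponentially between attempts."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send(record)
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up and surface the failure to the caller
            sleep(base_delay * 2 ** (attempt - 1))  # 0.01s, 0.02s, 0.04s, ...

# Hypothetical sender that fails twice before succeeding.
attempts = {"count": 0}

def flaky_send(record):
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("simulated network interruption")
    return f"delivered:{record['id']}"

# The sleep function is swapped for a no-op so the example runs instantly.
result = send_with_retry(flaky_send, {"id": 42}, sleep=lambda seconds: None)
```

Passing `sleep` as a parameter keeps the backoff logic testable without real waiting.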

Data Validation: After the data arrives in the staging area, it goes through validation, which ensures that the information is accurate, complete, and consistent. This is a critical quality checkpoint: data is examined for missing fields, incorrect types, out-of-range values, or duplicated entries. If errors are found, the pipeline either corrects them automatically or flags them for review. Validation ensures that only clean, reliable data progresses downstream.
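
The checks listed above (missing fields, incorrect types, out-of-range values, duplicates) can be sketched in a few lines. The field names `id` and `amount`, the allowed range, and the `validate` helper are assumptions made for this example; real pipelines typically express such rules in a dedicated data quality tool.

```python
def validate(records, required=("id", "amount"), amount_range=(0, 10_000)):
    """Split records into (valid, rejected) using four basic quality checks."""
    valid, rejected, seen_ids = [], [], set()
    for rec in records:
        missing = [f for f in required if rec.get(f) is None]
        if missing:
            rejected.append((rec, f"missing fields: {missing}"))
        elif not isinstance(rec["amount"], (int, float)) or isinstance(rec["amount"], bool):
            rejected.append((rec, "amount has wrong type"))
        elif not amount_range[0] <= rec["amount"] <= amount_range[1]:
            rejected.append((rec, "amount out of range"))
        elif rec["id"] in seen_ids:
            rejected.append((rec, "duplicate id"))
        else:
            seen_ids.add(rec["id"])
            valid.append(rec)
    return valid, rejected

batch = [
    {"id": 1, "amount": 25.0},
    {"id": 2, "amount": None},   # missing value
    {"id": 3, "amount": "12"},   # wrong type
    {"id": 4, "amount": -5},     # out of range
    {"id": 1, "amount": 25.0},   # duplicate of the first record
]
valid, rejected = validate(batch)
```

Here rejected records are kept alongside a reason, which corresponds to the "flag for review" path rather than automatic correction.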

Data Transformation: Here, validated data is converted into a usable, standardized format that’s optimized for analysis or machine learning. This may include normalization to remove redundancies, aggregation to summarize large datasets, or standardization to ensure uniform naming conventions, units, and formats. In many systems, transformation happens inside the ingestion pipeline itself, a pattern known as ETL (Extract, Transform, Load), while in others it occurs after loading, known as ELT (Extract, Load, Transform). The purpose is the same: to make the data consistent, structured, and easy to analyze.
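
As a small illustration of standardization followed by aggregation, the sketch below maps two differently named source fields onto one convention, converts cents to dollars, and sums revenue per store. The field names (`StoreID`, `store`, `revenue_cents`) and the sample rows are hypothetical.

```python
from collections import defaultdict

def standardize(row):
    """Map source-specific field names and units onto one convention."""
    return {
        "store_id": str(row.get("StoreID") or row.get("store")).strip().upper(),
        "revenue_usd": round(row["revenue_cents"] / 100, 2),
    }

def aggregate_revenue(rows):
    """Sum revenue per store after standardization."""
    totals = defaultdict(float)
    for row in map(standardize, rows):
        totals[row["store_id"]] += row["revenue_usd"]
    return dict(totals)

raw = [
    {"StoreID": " hn-01 ", "revenue_cents": 1250},
    {"store": "HN-01", "revenue_cents": 750},
    {"store": "sg-02", "revenue_cents": 300},
]
totals = aggregate_revenue(raw)
```

Without the standardization step, `" hn-01 "` and `"HN-01"` would be counted as two different stores.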

Data Loading: In this step, the prepared data is written into its final destination such as a cloud data warehouse, a data lake, or a lakehouse platform. Here, the data is organized for performance and accessibility, often through partitioning, indexing, or caching. A well-optimized loading process ensures that business intelligence tools, dashboards, and machine learning systems can access the latest data without delay.
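
Partitioning is one of the simpler loading optimizations to picture. The sketch below groups records under date-based keys, similar in spirit to the directory layouts many data lakes use. The `year=/month=/day=` key format, the `sales` prefix, and the `ts` field are assumptions made for the example, not a requirement of any specific platform.

```python
from collections import defaultdict
from datetime import datetime

def partition_by_day(records, prefix="sales"):
    """Group records under a date-based key so readers can skip unrelated days."""
    partitions = defaultdict(list)
    for rec in records:
        day = datetime.fromisoformat(rec["ts"]).date()
        key = f"{prefix}/year={day.year}/month={day.month:02d}/day={day.day:02d}"
        partitions[key].append(rec)
    return dict(partitions)

records = [
    {"ts": "2024-03-01T08:15:00", "total": 19.9},
    {"ts": "2024-03-01T17:40:00", "total": 5.0},
    {"ts": "2024-03-02T09:05:00", "total": 42.0},
]
partitions = partition_by_day(records)
```

A query that only needs 2 March can then read a single partition instead of scanning every record.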

Monitoring and Orchestration: Finally, a mature data ingestion pipeline includes monitoring and orchestration to maintain its reliability over time. Monitoring tools track metrics like latency, throughput, and data quality, while orchestration frameworks schedule workflows and manage dependencies. Continuous monitoring allows teams to detect failures early, maintain data freshness, and ensure compliance with data governance policies.
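
At its core, dependency management means running stages in an order that respects what each one needs. The sketch below uses the standard library's `graphlib` module (Python 3.9+) to derive a run order for the stages described in this section. It only illustrates the idea; orchestration frameworks add scheduling, retries, and monitoring on top. The stage names are simply labels chosen for the example.

```python
from graphlib import TopologicalSorter

# Each stage maps to the set of stages that must finish before it starts.
dependencies = {
    "transport": {"collect"},
    "validate": {"transport"},
    "transform": {"validate"},
    "load": {"transform"},
}

# static_order() yields the stages so that every dependency comes first.
run_order = list(TopologicalSorter(dependencies).static_order())
```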

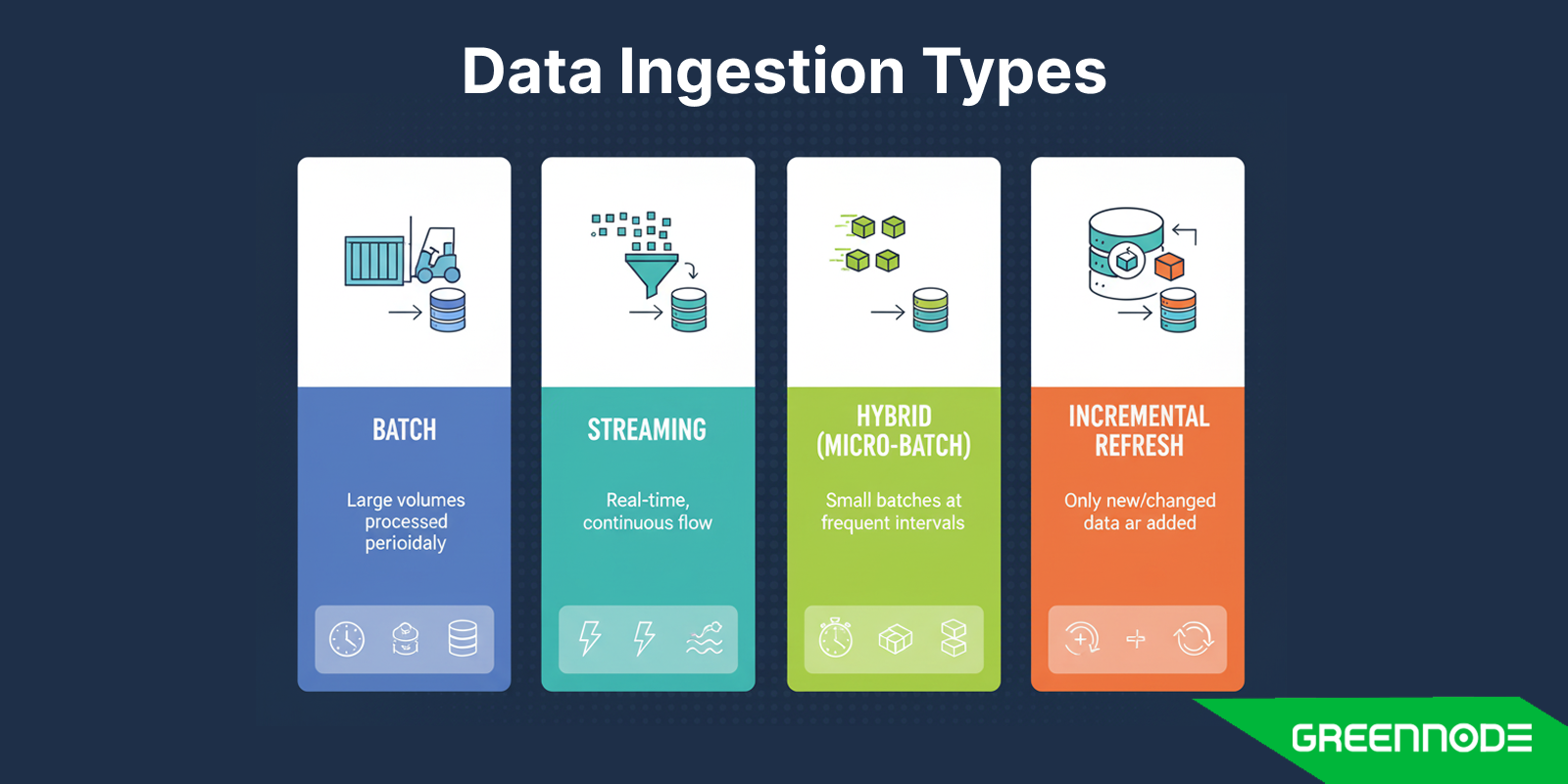

Data Ingestion Types

Data ingestion can take several forms depending on how frequently data is collected and how quickly it needs to be available for analysis. Broadly, ingestion methods fall into three categories: batch, streaming, and hybrid (or micro-batch). A separate choice, incremental loading versus full refresh, determines how much data each run moves. Understanding each approach helps data teams choose the right strategy for their business goals, performance requirements, and infrastructure.

Batch Ingestion

Batch ingestion refers to the process of collecting and transferring data at scheduled intervals such as hourly, daily, or weekly rather than continuously. In this approach, data from multiple sources is grouped together, processed, and loaded into the destination system as a single large batch.

Batch ingestion is particularly useful for workloads where real-time updates are unnecessary or impractical. For example, a retailer might aggregate daily sales transactions overnight, or a finance team might process end-of-day reports to update dashboards the next morning. This method is simple, cost-efficient, and well-suited for handling large historical datasets or periodic data synchronization between systems.

However, batch ingestion has its limitations: it introduces latency between when data is generated and when it becomes available for analysis. In scenarios that demand up-to-the-minute accuracy, organizations often turn to streaming or hybrid approaches.

Streaming (Real-Time) Ingestion

In contrast, streaming ingestion (sometimes called real-time ingestion) involves continuously capturing and delivering data as it is generated.

This approach is essential in use cases like IoT sensor monitoring, fraud detection, online advertising, or real-time user personalization. For example, a logistics company may track live GPS signals from thousands of vehicles, or a bank may flag suspicious transactions within seconds.

Streaming ingestion relies on technologies such as Apache Kafka or Amazon Kinesis that can handle high-throughput, low-latency data streams. While it demands more complex infrastructure, it provides the agility that modern analytics and AI systems require, ensuring that decisions are based on the latest available information.
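
The defining trait of streaming is that each event is handled as it arrives rather than after a batch is complete. The sketch below mimics that with Python generators. `transaction_stream` is a stand-in for a real event source such as a message broker, and the fixed amount threshold is a deliberately naive, hypothetical fraud rule.

```python
def transaction_stream():
    """Stand-in for an unbounded event source; yields one event at a time."""
    yield {"account": "A", "amount": 40}
    yield {"account": "B", "amount": 9_500}
    yield {"account": "A", "amount": 15}

def flag_suspicious(events, threshold=5_000):
    """Inspect each event as it arrives instead of waiting for a full batch."""
    for event in events:
        if event["amount"] > threshold:
            yield event

# list() drains the finite demo stream; a real consumer would loop indefinitely.
alerts = list(flag_suspicious(transaction_stream()))
```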

Hybrid or Micro-Batch Ingestion

Between batch and streaming lies hybrid ingestion, also known as micro-batch ingestion. This method blends the best of both worlds: it collects data in small, frequent batches, for instance, every few minutes, allowing near-real-time updates without the overhead of continuous streaming.

Hybrid ingestion provides flexibility for organizations that want up-to-date data but don’t need second-by-second processing. It’s often used in business intelligence pipelines, monitoring systems, or log analytics where the goal is to balance freshness with cost efficiency.

Hybrid ingestion is especially valuable when dealing with mixed workloads. For instance, a company might use streaming for critical events (like error alerts) while relying on micro-batches for less time-sensitive metrics.
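
A micro-batcher can be sketched as a loop that flushes whenever a batch is full or has been open too long. The `micro_batches` function, the size limit of 3, and the 60-second age limit are assumptions for illustration. Note that this simplified version only checks age when the next event arrives, whereas real systems usually flush on a timer as well.

```python
def micro_batches(events, max_size=3, max_age=60.0):
    """Group (timestamp, payload) events into small batches by size or age."""
    batch, opened_at = [], None
    for ts, payload in events:
        # Flush the open batch if it has been open for max_age seconds or more.
        if batch and ts - opened_at >= max_age:
            yield batch
            batch = []
        if not batch:
            opened_at = ts  # this event opens a new batch
        batch.append(payload)
        # Flush as soon as the batch reaches its size limit.
        if len(batch) >= max_size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush whatever is left when the input ends

events = [(0, "a"), (10, "b"), (20, "c"), (30, "d"), (100, "e"), (105, "f")]
batches = list(micro_batches(events))
```

The first batch is flushed because it is full, the second because it aged out, and the third because the input ended.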

Incremental vs. Full Refresh

Another important distinction in ingestion strategy is between incremental loading and full refresh.

A full refresh replaces all existing data in the target system with the latest version from the source. This approach ensures data completeness but can be time-consuming and resource-intensive, especially for large datasets. It’s typically used when the underlying schema changes or when the data volume is manageable enough for complete reloads.

Incremental ingestion, on the other hand, only processes new or updated records since the last load. This method significantly reduces processing time and bandwidth, making it ideal for large-scale or continuously changing datasets. Modern ETL tools and ingestion frameworks often support incremental loading using timestamps, change-data-capture (CDC), or version tracking.

By combining incremental logic with batch or streaming ingestion, organizations can optimize both speed and efficiency, ensuring that pipelines stay up to date without wasting compute or storage resources.
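
Timestamp-based incremental loading can be sketched with two in-memory SQLite databases standing in for a source and a target. The `orders` table, its columns, and the `incremental_load` helper are hypothetical. The approach assumes every change updates `updated_at`, and it does not capture deletes, which is one reason CDC is often preferred for critical data.

```python
import sqlite3

source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL, updated_at TEXT)")

source.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, 10.0, "2024-01-01T09:00:00"),
    (2, 20.0, "2024-01-02T09:00:00"),
])

def incremental_load(source, target, watermark):
    """Copy only rows changed after the watermark; return the new watermark."""
    rows = source.execute(
        "SELECT id, total, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # INSERT OR REPLACE upserts on the primary key, so updated rows overwrite old ones.
    target.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    target.commit()
    return rows[-1][2] if rows else watermark

# First run: an empty watermark sorts before every timestamp, so all rows are copied.
watermark = incremental_load(source, target, "")

# The source changes: one row is updated and one is added.
source.execute("UPDATE orders SET total = 25.0, updated_at = '2024-01-03T09:00:00' WHERE id = 2")
source.execute("INSERT INTO orders VALUES (3, 30.0, '2024-01-03T10:00:00')")

# Second run: only the two changed rows are read and written.
watermark = incremental_load(source, target, watermark)
loaded = target.execute("SELECT id, total FROM orders ORDER BY id").fetchall()
```

A full refresh, by contrast, would delete everything in the target and copy all source rows on every run.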

Business Benefits of a Streamlined Data Ingestion Process

A well-designed data ingestion process shapes how fast and how effectively an organization can act on its data. When ingestion pipelines are reliable, automated, and scalable, they unlock a series of measurable business benefits that reach far beyond the IT department.

Enhanced Data Democratization

Streamlined data ingestion breaks down data silos, making information readily accessible across departments and business units. Instead of isolated teams managing separate datasets, everyone can draw from the same unified, trusted source of truth. This accessibility fosters a data-driven culture, where analysts, marketers, and executives can explore insights directly without waiting for technical intervention.

Reduced Operational Costs and Manual Effort

Automated ingestion pipelines eliminate repetitive manual data handling, freeing engineering teams from writing endless scripts or fixing failed transfers. This not only lowers operational costs but also reduces the risk of human error in critical processes.

A real example comes from a global energy company that partnered with Grant Thornton to automate audit-data ingestion. The result was dramatic: processing time dropped from 24 hours to just 4 hours per quarter, an 83% improvement, allowing finance teams to focus on analysis instead of data preparation. Automation at the ingestion layer directly translates into measurable time and cost savings.

Faster and More Accurate Decision-Making

Data loses value as it ages. A streamlined ingestion process ensures that fresh, validated data reaches business users and AI systems in near real time, improving the speed and quality of insights.

Improved Data Quality and Reliability

An efficient ingestion pipeline enforces validation, cleaning, and consistency checks as data moves from source to destination. This ensures that the information feeding analytics dashboards or machine learning models is accurate and trustworthy.

Scalability and Future-Proofing

A streamlined ingestion framework allows organizations to scale gracefully as data sources multiply and data volumes grow. Whether onboarding a new SaaS platform, integrating IoT feeds, or expanding into new markets, a flexible ingestion architecture supports growth without disrupting existing pipelines.

Exploring Data Ingestion Tools

Choosing the right data ingestion tool is essential for building a reliable, scalable, and efficient data pipeline. Below are the main categories of ingestion tools and examples widely used across the industry.

Open-Source Data Ingestion Tools

Open-source ingestion tools provide flexibility and customization for engineering teams that prefer full control over data flows. They are ideal for organizations with skilled technical resources and a need for on-premise or hybrid deployment.

Recommended Tools:

Apache Kafka – A distributed streaming platform for handling real-time data feeds and event-driven pipelines.

Apache NiFi – A visual tool for building data flows, supporting drag-and-drop design and fine-grained routing.

Airbyte – An open-source ELT platform with hundreds of prebuilt connectors for APIs, databases, and SaaS tools.

Cloud-Native & Managed Ingestion Services

Cloud-native ingestion tools offer fully managed services that handle scalability, monitoring, and infrastructure maintenance. These platforms are best for organizations operating in cloud ecosystems such as AWS, Azure, or Google Cloud.

Recommended Tools:

Amazon Kinesis (AWS) – Manages large-scale real-time streaming for applications like clickstream analytics and IoT telemetry.

Azure Data Factory – A serverless data integration service for batch and streaming ingestion with drag-and-drop workflows.

Google Cloud Dataflow – Provides unified batch and stream processing built on Apache Beam, integrating seamlessly with BigQuery.

ETL and ELT Integration Platforms

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) platforms include ingestion as part of broader data integration. They help automate data collection from diverse sources, applying transformations and pushing the data into analytics-ready environments.

Recommended Tools:

Fivetran – Delivers automated, incremental data ingestion from SaaS apps and databases into cloud warehouses.

Matillion – Focuses on cloud-native ETL and in-warehouse transformations for Snowflake, BigQuery, and Redshift.

Talend Data Integration – Provides enterprise-grade governance and real-time ingestion with built-in data quality tools.

Real-Time Streaming and Event-Driven Platforms

Streaming ingestion platforms enable continuous data movement and low-latency analytics. They are built for applications that require instant visibility and response, such as fraud detection, IoT monitoring, or live dashboards.

Recommended Tools:

Confluent Platform – An enterprise distribution of Kafka that adds connectors, a schema registry, and management tooling; Confluent Cloud is the fully managed counterpart.

Redpanda – A high-performance, Kafka-compatible streaming engine optimized for low latency and efficiency.

Striim – Real-time data integration and event streaming platform supporting hybrid cloud environments.

Orchestration and Workflow Management Tools

Orchestration tools coordinate ingestion jobs, manage dependencies, and automate retries in case of failure. They ensure data pipelines run reliably and on schedule.

Recommended Tools:

Apache Airflow – A widely adopted workflow scheduler for data pipelines, in which workflows are defined as Directed Acyclic Graphs (DAGs).

Prefect – A modern orchestration tool that combines simplicity with observability and hybrid execution.

Dagster – An orchestration framework designed for data assets, emphasizing modularity and testing.

How to Choose the Right Data Ingestion Tools and Strategy

Selecting the right data ingestion strategy and tool is critical to building an analytics or AI ecosystem that is fast, scalable, and trustworthy. The process involves understanding your data, setting performance expectations, balancing costs, and ensuring governance. Below is a step-by-step guide to help you evaluate your options effectively.

Define Your Business Use Case & Data Characteristics: Start by identifying what problem your data pipeline needs to solve. Understand the type, volume, and frequency of data your organization generates.

Evaluate Throughput, Latency & Scale Requirements: The right ingestion method depends on how much data you need to move and how quickly you need it available.

Consider Cost & Infrastructure Constraints: Cost-effectiveness often depends on how ingestion is managed and where it’s hosted.

Assess Governance, Security, Compliance & Data Quality: A streamlined ingestion pipeline must maintain security and compliance while ensuring the data remains clean and reliable.

Choosing the right ingestion strategy comes down to aligning technology with business goals. Batch systems deliver simplicity and cost savings; streaming enables immediacy and agility; hybrid methods balance both. By weighing your use case, performance targets, infrastructure limits, and compliance needs, you can design a pipeline that makes your data not only accessible but also dependable.

Common Data Ingestion Challenges and How to Overcome Them

Even with the best tools and strategies, building a data ingestion pipeline isn’t without challenges. As data volumes, formats, and business needs evolve, organizations must anticipate technical and operational bottlenecks. Below are some of the most common data ingestion challenges and practical ways to address them.

Data Silos, Schema Drift & Format Diversity: One of the biggest hurdles in data ingestion is dealing with fragmented data sources that store information in different formats and structures. Data silos make it difficult to maintain consistency, while schema drift, when data structures change without notice, can break pipelines or cause errors.

- Adopt a schema registry (e.g., Confluent Schema Registry) to version schemas and enforce compatibility rules, so structure changes are caught before they break downstream consumers.

- Use standardized data formats such as Parquet, Avro, or JSON for better interoperability across systems.

- Encourage cross-department collaboration to prevent isolated systems and ensure that new data sources follow defined ingestion standards.
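
To show what catching schema drift can look like in its simplest form, the sketch below compares an incoming record against an expected schema and reports added, missing, and retyped fields. This is a hand-rolled illustration, not how a schema registry is implemented. The `detect_drift` helper and the example fields are hypothetical.

```python
def detect_drift(expected, record):
    """Compare a record against an expected {field: type} schema."""
    added = sorted(set(record) - set(expected))
    missing = sorted(set(expected) - set(record))
    retyped = sorted(
        field for field in set(expected) & set(record)
        if not isinstance(record[field], expected[field])
    )
    return {"added": added, "missing": missing, "retyped": retyped}

expected_schema = {"user_id": int, "email": str, "signup_date": str}

# The source now sends user_id as a string, dropped signup_date, and added plan.
incoming = {"user_id": "1001", "email": "a@example.com", "plan": "pro"}
drift = detect_drift(expected_schema, incoming)
```

A pipeline could run such a check on a sample of each load and raise an alert when any of the three lists is non-empty.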

Latency vs Cost Trade-Off: Faster data ingestion often comes at a higher cost. Real-time streaming systems require constant compute resources, whereas batch ingestion can be more cost-efficient but introduces delay. Finding the right balance between performance and budget is key.

- Define clear SLAs for data freshness because not all use cases need real-time data.

- Use a hybrid or micro-batch approach to get near-real-time performance without continuous compute costs.

- Optimize data routing with event filtering or message queues to reduce unnecessary traffic.

- Monitor utilization and scale resources dynamically with cloud features.
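
One way to make the freshness SLA idea actionable is to write the targets down and derive an ingestion mode from them. The dataset names, SLA values, and cut-off thresholds below are purely hypothetical. The point is only that the slowest mode that still meets the SLA is usually the cheapest one.

```python
from datetime import timedelta

# Hypothetical freshness targets per dataset.
FRESHNESS_SLA = {
    "fraud_events": timedelta(seconds=5),
    "sales_dashboard": timedelta(minutes=15),
    "finance_reports": timedelta(hours=24),
}

def suggest_mode(sla):
    """Map a freshness target to an ingestion mode (thresholds are assumptions)."""
    if sla < timedelta(minutes=1):
        return "streaming"
    if sla < timedelta(hours=1):
        return "micro-batch"
    return "batch"

plan = {name: suggest_mode(sla) for name, sla in FRESHNESS_SLA.items()}
```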

Monitoring, Observability & Failures in Ingestion: Data pipelines are prone to silent failures: incomplete loads, duplicate records, or dropped messages. Without strong observability, these issues can go unnoticed until they impact analytics or AI outcomes.

- Implement centralized pipeline monitoring dashboards using tools like Apache Airflow, Prefect, or Datadog.

- Log and track ingestion metrics such as throughput, latency, and error rates in real time.

- Set up automated alerts and retry mechanisms to handle transient failures gracefully.

- Regularly validate ingested data against source counts to detect data loss or duplication early.
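
The last check above, reconciling ingested data against the source, is worth sketching because raw counts alone can hide problems. In this hypothetical example, the source and target both hold five records, yet one record was dropped and another was written twice. The `reconcile` helper and the integer ids are assumptions for illustration.

```python
from collections import Counter

def reconcile(source_ids, target_ids):
    """Compare record ids seen at the source with ids that reached the target."""
    target_counts = Counter(target_ids)
    return {
        "source_count": len(source_ids),
        "target_count": len(target_ids),
        "missing": sorted(set(source_ids) - set(target_counts)),
        "duplicated": sorted(i for i, n in target_counts.items() if n > 1),
    }

report = reconcile(source_ids=[1, 2, 3, 4, 5], target_ids=[1, 2, 2, 4, 5])

# Counts match (5 and 5), but the load is still unhealthy.
healthy = not report["missing"] and not report["duplicated"]
```

Comparing identifiers (or checksums) in addition to counts is what turns a silent failure into a visible one.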

Scalability & Changing Sources: As organizations evolve, new data sources, APIs, and business systems are constantly added. Static ingestion pipelines can’t easily adapt to this growth.

- Design ingestion with modularity; each source should operate independently with reusable connectors or templates.

- Use cloud-native and elastic architectures to scale automatically as data volumes grow.

- Employ open frameworks like Apache Beam or Kubernetes-based orchestration to support multiple environments and future integrations.

- Conduct quarterly ingestion reviews to evaluate whether tools, formats, and APIs still align with business needs.
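
Modularity can be as simple as giving every source its own small connector behind a shared interface. The sketch below uses a registry so that adding a source means adding one function, without touching the pipeline code. The `connector` decorator, the source names, and the sample records are all hypothetical.

```python
CONNECTORS = {}

def connector(name):
    """Register a source reader under a name so pipelines can look it up."""
    def register(func):
        CONNECTORS[name] = func
        return func
    return register

@connector("crm")
def read_crm():
    # A real connector would call the CRM's API here.
    return [{"source": "crm", "customer": "Acme"}]

@connector("iot")
def read_iot():
    # A real connector would read from a device gateway or message queue.
    return [{"source": "iot", "sensor": "t-17", "temp_c": 21.4}]

def ingest(sources):
    """Run each requested connector independently and collect its records."""
    records = []
    for name in sources:
        records.extend(CONNECTORS[name]())
    return records

records = ingest(["crm", "iot"])
```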

FAQs About Data Ingestion

1. How is data ingestion different from ETL or ELT?

Data ingestion focuses on moving data efficiently from one place to another, while ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) add transformation and cleansing steps.

In other words, data ingestion is about getting the data in, and ETL/ELT is about making it usable. In modern data pipelines, ingestion often works alongside ETL tools to automate the entire flow.

2. What are the main types of data ingestion?

There are three primary types of data ingestion:

- Batch ingestion – Transfers data at fixed intervals (e.g., hourly, daily).

- Streaming ingestion – Moves data continuously in real time as events occur.

- Hybrid or micro-batch ingestion – Combines both, sending frequent small batches for near real-time insights.

Choosing the right type depends on how fresh your data needs to be and how much volume you process.

3. How can companies ensure data quality during ingestion?

Data quality starts at the ingestion layer. Organizations should:

- Validate data types and formats during ingestion.

- Deduplicate and filter invalid or corrupted records.

- Track data lineage to trace errors back to the source.

- Use built-in quality checks from tools like Informatica, Databricks Auto Loader, or Talend to catch inconsistencies early.