Artificial intelligence (AI) is becoming pervasive in the enterprise. To support AI applications, businesses look toward optimizing AI servers and high-performance networks. Many enterprises prioritize GPU acceleration while overlooking a critical component: storage infrastructure. According to Gartner, most enterprises will not have to build new storage infrastructure for generative artificial intelligence (GenAI) because they will fine-tune existing large language models (LLMs) instead of training new models. However, large-scale GenAI deployments will still require distinct storage performance and data management capabilities for the data ingestion, training, inference, and archiving stages of the GenAI workflow, rather than relying on traditional cloud storage.

If the storage system is not designed properly, it can become a bottleneck, slowing down data transmission, prolonging model validation, and wasting valuable GPU resources. This not only impacts performance but also hinders innovation in AI.
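To make the cost of a storage bottleneck concrete, here is a rough back-of-the-envelope sketch in Python. It estimates what fraction of each training step the GPU spends computing rather than waiting for data. The function name, the per-step numbers, and the simple two-case model (data loading fully overlapped with compute, or not overlapped at all) are illustrative assumptions, not measurements from any specific system.

```python
def gpu_utilization(compute_s, bytes_per_step, storage_bytes_per_s, overlapped=True):
    """Estimate the fraction of a training step the GPU spends computing.

    compute_s           -- GPU compute time per step, in seconds (assumed)
    bytes_per_step      -- data that must be read for one step (assumed)
    storage_bytes_per_s -- sustained read throughput of the storage system
    overlapped          -- True if loading is prefetched in parallel with compute
    """
    load_s = bytes_per_step / storage_bytes_per_s
    if overlapped:
        # With perfect prefetching, the slower of the two stages sets the pace.
        step_s = max(compute_s, load_s)
    else:
        # Without prefetching, the GPU idles while every batch is loaded.
        step_s = compute_s + load_s
    return compute_s / step_s


# Hypothetical step: 0.5 s of compute and 1 GB of input data.
slow = gpu_utilization(0.5, 1e9, 1e9)   # storage delivers 1 GB/s
fast = gpu_utilization(0.5, 1e9, 4e9)   # storage delivers 4 GB/s
print(f"slow storage: {slow:.0%} busy, fast storage: {fast:.0%} busy")
```

In this simplified model, the GPU is busy only half the time once storage takes longer to deliver a batch than the GPU takes to process it, no matter how fast the accelerator is.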

To fully optimize AI/ML projects, businesses must go beyond GPUs and adopt a high-performance, scalable, and flexible storage system. In this article, we will explore how GreenNode helps enterprises overcome storage challenges, eliminate bottlenecks, and accelerate their AI/ML journey.

Data Silos & Legacy Storage - Key Bottlenecks in AI/ML Workflows

AI workloads require fast, seamless access to massive datasets, yet enterprises often struggle with data silos—where information is fragmented across disparate storage systems. This hinders real-time data aggregation, complicates model training, and reduces AI inferencing accuracy. Without a unified data layer, AI models face latency issues and inefficient resource utilization.

Moreover, traditional storage architectures—including monolithic NAS, legacy cloud solutions, and on-premises storage—were not designed to support AI-scale workloads. These systems suffer from low IOPS, limited scalability, and high retrieval latencies, making them unsuitable for multi-node training.

To overcome these challenges, enterprises require a next-generation AI storage infrastructure capable of delivering ultra-low latency, high throughput, and seamless scalability.

Introducing GreenNode – High-Performance AI Storage for Data-Intensive Workloads

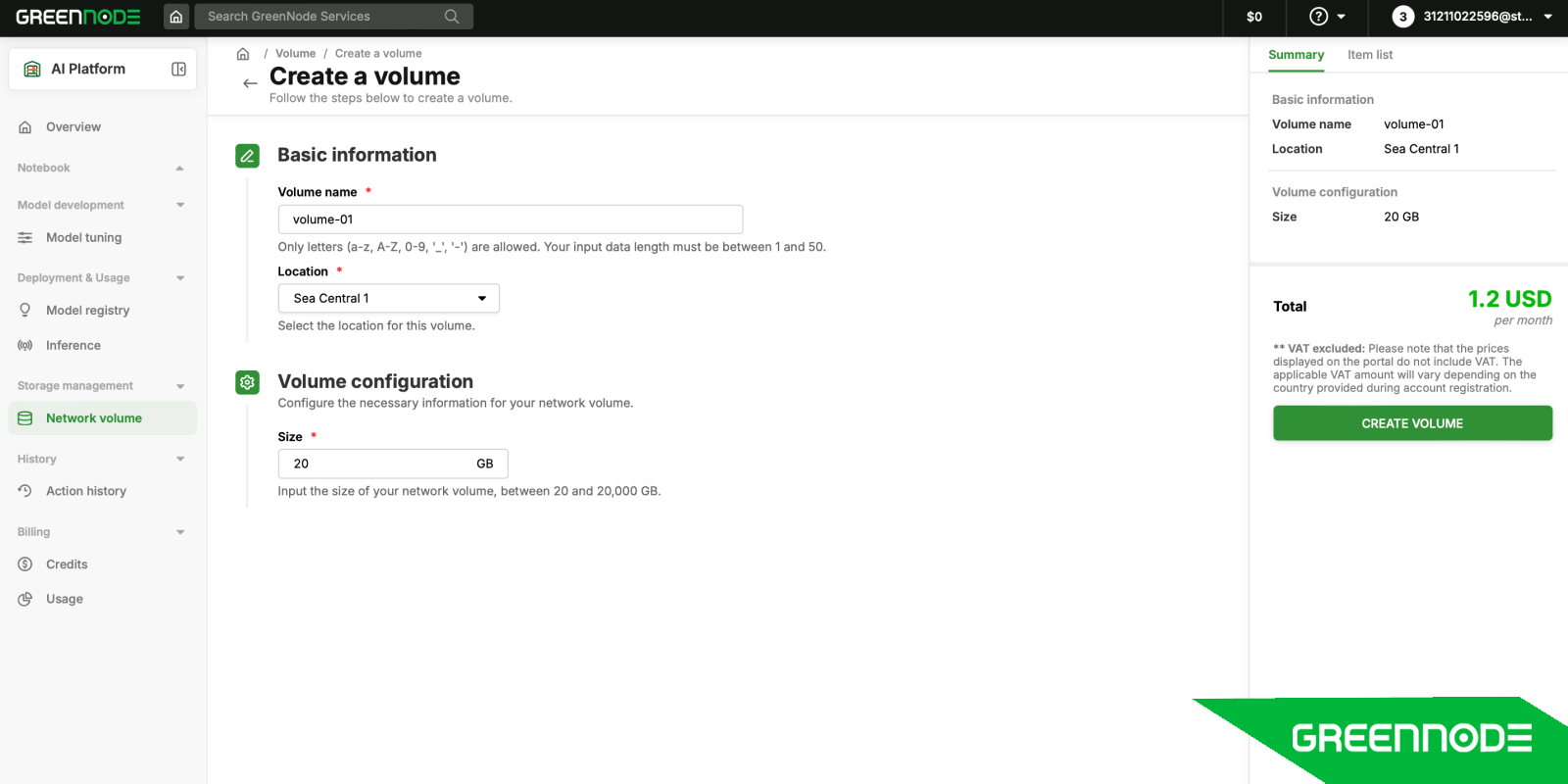

GreenNode’s Network Volume is designed to deliver a unified, high-bandwidth, AI-optimized storage solution, eliminating performance bottlenecks while ensuring low-latency access to massive datasets. We consolidate structured and unstructured data into a single, high-performance storage fabric, facilitating real-time access and optimized data pipelines.

Built on a hyperscale cluster that offers H100 and other GPU options with InfiniBand connectivity, GreenNode ensures seamless data flow and accelerated AI workloads. Our infrastructure is optimized for NVIDIA architecture, enabling parallel data processing at scale. No complex setup, no coding required: just instant, high-speed storage for your AI projects!

Powered by VAST, GreenNode provides a disaggregated, AI-native storage architecture that evolves alongside modern computing demands. This next-generation infrastructure is built to handle AI workloads at any scale, ensuring AI engineers can efficiently process massive datasets with uncompromised speed and reliability.

Eliminate AI Bottlenecks – Optimize AI Training with GreenNode

Breaking Down Data Silos for Unrestricted AI Access

GreenNode enables enterprises to centralize and unify AI data, eliminating data silos and accelerating data ingestion pipelines. By supporting high-speed, multi-protocol access (NFS and S3), GreenNode ensures that AI workloads can efficiently retrieve and process massive datasets without performance degradation. Additionally, optimized read/write speeds for large-scale checkpoints (200–400 GB) enable seamless, nondisruptive AI training cycles.
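To see why write speed matters for checkpoints of this size, the sketch below estimates how long a synchronous checkpoint pauses training and what share of wall-clock time that pause consumes. The throughput figures and the 30-minute checkpoint interval are hypothetical values chosen for illustration, not GreenNode specifications.

```python
def checkpoint_write_seconds(size_gb, throughput_gb_per_s):
    """Time to write one checkpoint at a sustained write throughput."""
    return size_gb / throughput_gb_per_s


def checkpoint_overhead(write_s, interval_s):
    """Fraction of wall-clock time spent paused for synchronous checkpoints.

    Assumes training runs for `interval_s` seconds, then stops for
    `write_s` seconds while the checkpoint is written.
    """
    return write_s / (interval_s + write_s)


# Hypothetical scenario: a 400 GB checkpoint every 30 minutes of training.
for throughput in (2, 40):  # GB/s, illustrative values only
    write_s = checkpoint_write_seconds(400, throughput)
    overhead = checkpoint_overhead(write_s, 30 * 60)
    print(f"{throughput} GB/s: {write_s:.0f} s per checkpoint, {overhead:.1%} of run time")
```

Under these assumptions, the slower system pauses training for 200 seconds per checkpoint, which adds up to about a tenth of the run, while the faster one pauses for 10 seconds.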

Overcoming Legacy Storage Constraints with AI-Native Infrastructure

Training large language models like GPT-3 175B requires sophisticated parallelism strategies to overcome GPU memory constraints and optimize performance. Traditional approaches struggle with scalability bottlenecks, inefficient checkpointing, and high overhead in model restoration. These challenges stem from monolithic compute-storage coupling, leading to slow I/O throughput, data fragmentation, and excessive synchronization overhead.
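A quick calculation shows why checkpoints for a model of this size are so demanding. The sketch below assumes mixed-precision training with the Adam optimizer, where a full checkpoint stores roughly 14 bytes per parameter (2 for FP16 weights, 4 for FP32 master weights, and 4 each for Adam's two moment estimates). That per-parameter figure is a common rule of thumb and an assumption here; actual sizes depend on the training framework and its settings.

```python
def checkpoint_size_gb(n_params, bytes_per_param):
    """Approximate checkpoint size in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


GPT3_PARAMS = 175_000_000_000  # 175B parameters

# FP16 weights only: 2 bytes per parameter.
weights_only = checkpoint_size_gb(GPT3_PARAMS, 2)

# Assumed full training state: FP16 weights (2) + FP32 master weights (4)
# + Adam first and second moments (4 + 4) = 14 bytes per parameter.
full_state = checkpoint_size_gb(GPT3_PARAMS, 14)

print(f"weights only: {weights_only:.0f} GB, full training state: {full_state:.0f} GB")
```

The FP16 weights alone come to about 350 GB, and the full training state under these assumptions is roughly 2.45 TB, all of which must be written and later restored without stalling hundreds of GPUs.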

At GreenNode, we deliver scalable, high-performance AI infrastructure that accelerates LLM training while minimizing operational complexity. With streamlined deployment through the GreenNode Portal, AI practitioners can instantly provision an optimized compute and storage stack purpose-built for large-scale AI workloads.

Leveraging the Disaggregated Shared Everything (DASE) architecture, powered by VAST, we separate compute from storage while ensuring seamless pipeline parallelism, rapid checkpointing, and high-throughput data streaming. Built on Global Efficiency Codes, GreenNode eliminates checkpoint bottlenecks, minimizes restore latency, and enables efficient scaling for multi-GPU LLM workloads.
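On the training side, one general technique that pairs well with fast shared storage is asynchronous checkpointing: the training loop takes an in-memory snapshot and hands the write to a background thread, so compute continues while the data is flushed. The sketch below is a minimal, framework-agnostic illustration using only the Python standard library. It is not GreenNode's or VAST's implementation, and the function names and the use of `pickle` are assumptions made for the example.

```python
import os
import pickle
import tempfile
import threading


def save_checkpoint_async(state, path):
    """Snapshot `state` now, write it to `path` in a background thread.

    Returns the thread so the caller can join() it before the next save.
    """
    # Serialize immediately so later changes to `state` do not leak into the file.
    snapshot = pickle.dumps(state)

    def _write():
        directory = os.path.dirname(os.path.abspath(path))
        # Write to a temporary file first, then rename, so a crash mid-write
        # never leaves a truncated checkpoint at `path`.
        fd, tmp_path = tempfile.mkstemp(dir=directory)
        with os.fdopen(fd, "wb") as f:
            f.write(snapshot)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp_path, path)

    thread = threading.Thread(target=_write)
    thread.start()
    return thread


def load_checkpoint(path):
    """Restore a checkpoint written by save_checkpoint_async."""
    with open(path, "rb") as f:
        return pickle.load(f)
```

In a real training job, `path` would point at the shared storage mount (for example, an NFS-mounted volume), and the snapshot step would typically copy tensors from GPU to host memory before the background write begins.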

Seamless integration with NVIDIA SuperPOD & DGX systems ensures that hundreds of GPUs can be efficiently orchestrated without hitting I/O bottlenecks. By eliminating storage-related constraints, GreenNode enhances GPU utilization rates, shortens AI training cycles, and enables large-scale AI deployments.

Unlock AI/ML Potential with GreenNode Today

GreenNode is more than just an AI-ready storage platform—it is a fully optimized, AI-centric data infrastructure designed to eliminate storage bottlenecks, maximize compute efficiency, and accelerate AI innovation. Built on VAST’s next-generation architecture and integrated with NVIDIA GPU solutions, GreenNode empowers enterprises to streamline AI workflows, optimize training performance, and scale AI operations without constraints.

Don’t let storage bottlenecks throttle your AI workloads. Contact us today to explore how GreenNode can optimize AI pipelines and drive enterprise-scale AI adoption.