If you’re like most technical teams in 2025, you’ve probably been using ChatGPT or Claude to speed up writing, coding, or decision-making. It works, until you start asking:

How much are we really spending? How secure is our data? Do we really need something this big?

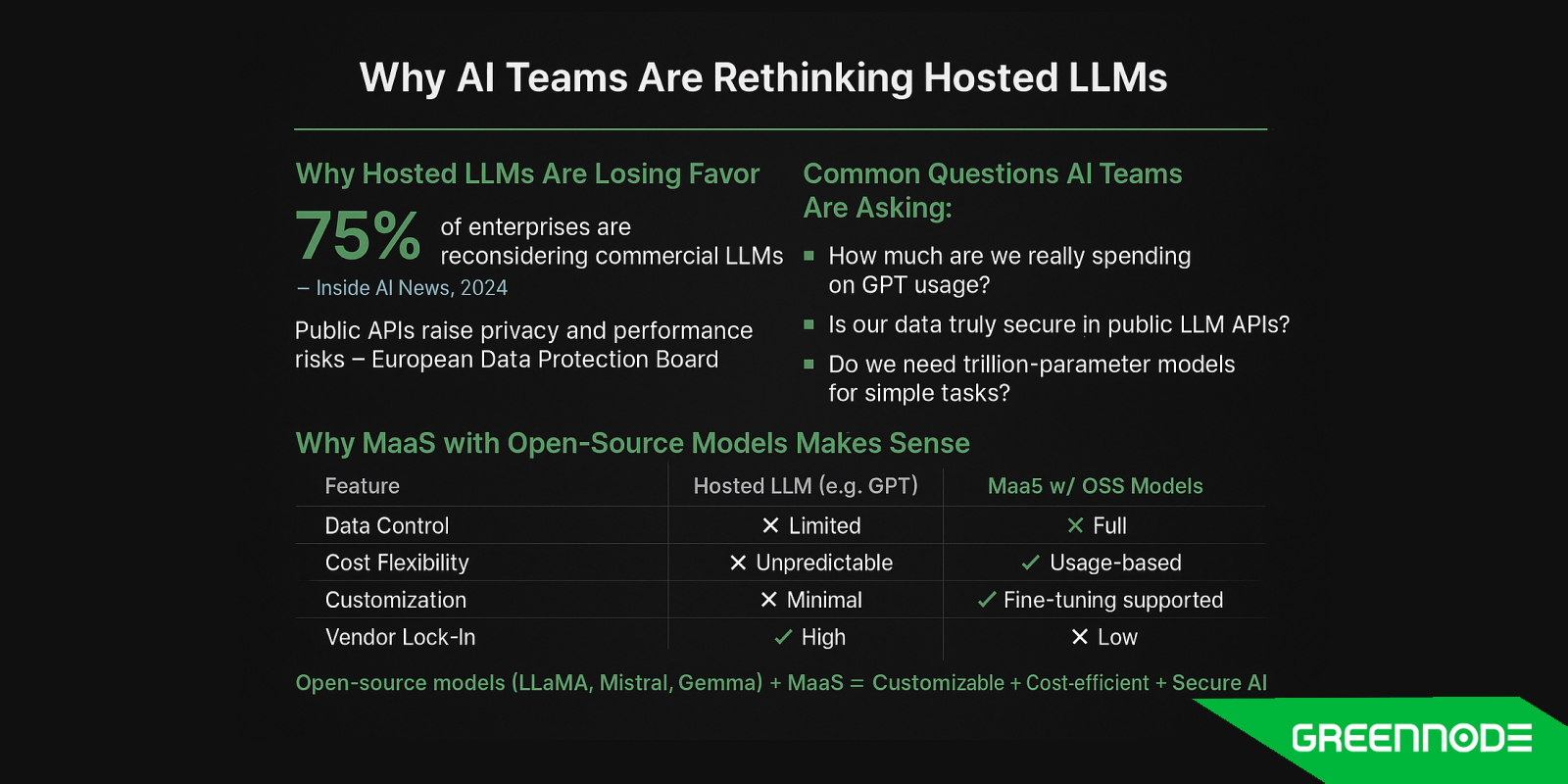

You’re not alone. A 2024 report by Inside AI News found that 75% of enterprises are rethinking commercial LLMs due to concerns over cost, lock-in, and data exposure. The European Data Protection Board also warns that public LLM APIs bring serious risks around privacy and predictability.

So ask yourself:

"Do I really need a 1.7T model to summarize a report? And who else sees that data?"

That’s why many teams are shifting to open-source models like LLaMA, Mistral, and Gemma—hosted through flexible, secure Model-as-a-Service (MaaS) platforms.

In this article, GreenNode walks you through how to evaluate Model-as-a-Service providers and pick the right one, before the next GPT bill surprises you.

Define Your AI Workload Requirements

Before choosing any Model-as-a-Service (MaaS) provider, you’ve got to get brutally clear on what you actually need from the model. Not all workloads are created equal.

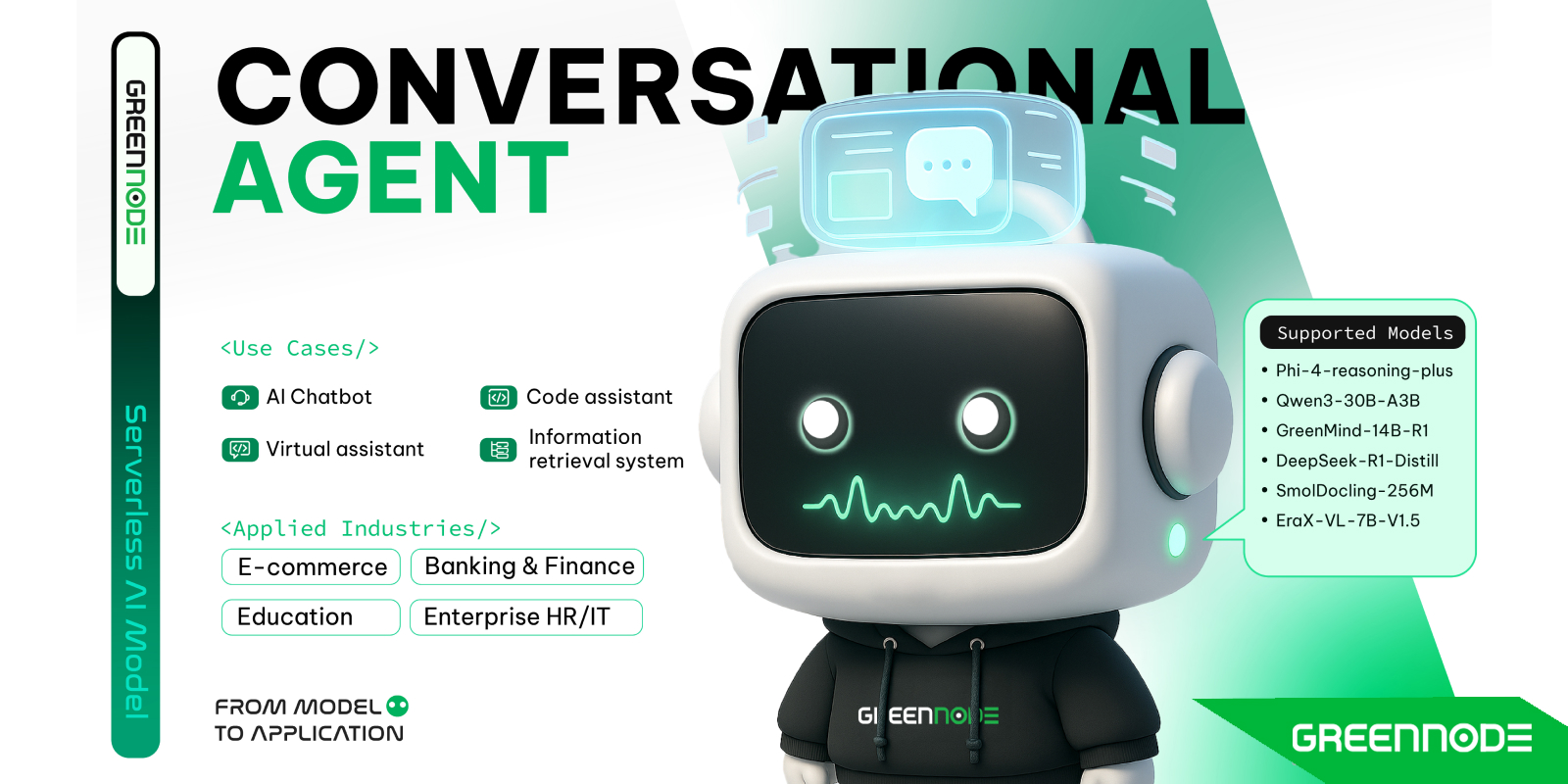

Are you doing text generation, like writing emails or chatbots? Embeddings for RAG? Maybe image generation, speech-to-text, or even fine-tuning custom models for legal or financial docs?

Then comes the nature of your workload:

- Real-time inference (like responding to customers live) needs low latency, ideally under 500 ms.

- Batch processing (like summarizing 10,000 PDFs overnight) can tolerate delays but needs scale.

You also need to consider token throughput (how many tokens per second the model must read and generate), the number of concurrent users, and especially compliance needs. For example, if you’re in banking or healthcare, data residency and privacy requirements are non-negotiable.
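Providers are easier to compare once these requirements become numbers. Below is a minimal, provider-agnostic sketch. It assumes you have already run a small trial and recorded each request’s latency in milliseconds and its output token count. The helper names are hypothetical, and the 500 ms default budget simply reuses the real-time guideline mentioned earlier.

```python
import math
from statistics import median
from typing import Dict, List

def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    # Smallest sample such that at least pct% of samples are <= it.
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize_trial(latencies_ms: List[float], output_tokens: List[int],
                    wall_clock_s: float, budget_ms: float = 500.0) -> Dict[str, object]:
    """Compare a trial run against a latency budget (hypothetical helper)."""
    p95 = percentile(latencies_ms, 95)
    return {
        "p50_ms": median(latencies_ms),
        "p95_ms": p95,
        # Aggregate generation throughput across all requests in the trial.
        "tokens_per_s": sum(output_tokens) / wall_clock_s,
        # Judge against the tail, not the average: live users feel the slow requests.
        "meets_budget": p95 <= budget_ms,
    }

if __name__ == "__main__":
    # Five sample requests from a hypothetical 2-second trial run.
    report = summarize_trial([120, 180, 240, 310, 650], [50, 60, 55, 70, 65], 2.0)
    print(report)
    # prints {'p50_ms': 240, 'p95_ms': 650, 'tokens_per_s': 150.0, 'meets_budget': False}
```

In this sample the median is a comfortable 240 ms. The p95 is 650 ms, though, so the run misses a 500 ms budget even though most requests are fast.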

Take a fintech app, for instance. It might need fast, secure, region-specific model hosting with latency under 100 ms. Proprietary hosted APIs such as OpenAI’s may not cut it here. They give you limited transparency into where and how your data is processed, and limited control over it.

“Your LLM provider should fit your use case, not the other way around.”

The clearer you are here, the better you'll match with the right MaaS solution.

Check Model Coverage and Customization Capabilities

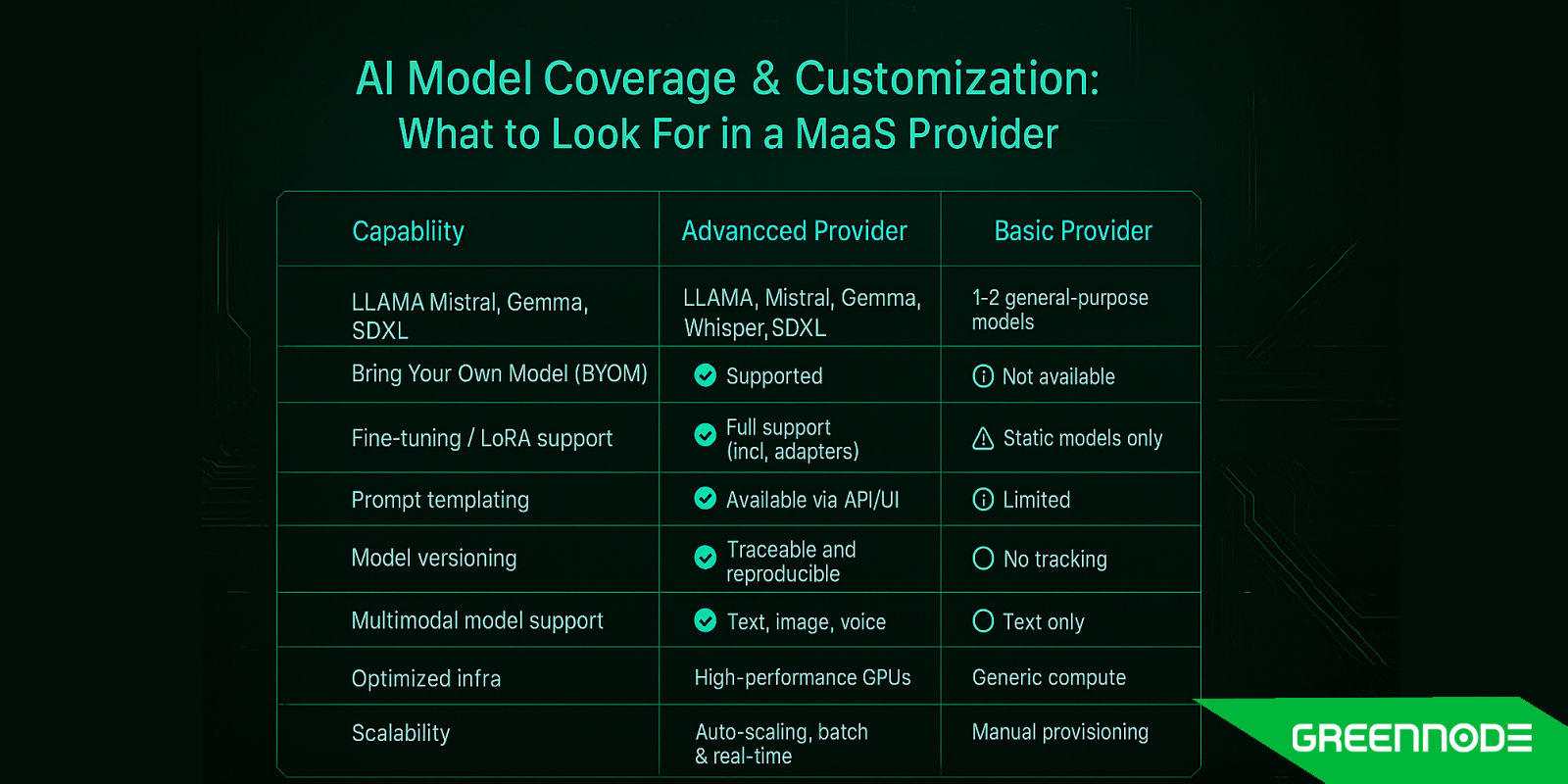

Once you’ve nailed down your workload needs, the next thing to ask is: Can this provider actually support the models I care about?

A strong MaaS provider should offer a broad catalog of open-source models, not just one or two crowd favorites. Look for support for top-tier models like LLaMA, Mistral, Gemma, Whisper (for speech), and SDXL (for image generation). Bonus points if they let you bring your own model—because you never know when you’ll need something niche or proprietary.

Customization is key, especially if you want your AI to match your tone, brand, or domain. Ask if they support LoRA adapters, prompt templating, or full fine-tuning workflows. These features turn generic models into task-specific experts.
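LoRA shows why adapters are so much cheaper than full fine-tuning. In the standard LoRA formulation, the pretrained weight matrix W stays frozen. Only a low-rank update BA is trained, and it is scaled by α/r. The dimensions below (d = k = 4096, rank r = 8) are illustrative and not tied to a specific model.

$$
W' = W + \frac{\alpha}{r}\,BA, \qquad W \in \mathbb{R}^{d \times k},\quad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

$$
\underbrace{d\,k}_{\text{full fine-tuning}} = 4096 \times 4096 = 16{,}777{,}216,
\qquad
\underbrace{r\,(d + k)}_{\text{LoRA}} = 8 \times 8192 = 65{,}536,
\qquad
\frac{r\,(d+k)}{d\,k} = \frac{1}{256} \approx 0.39\%
$$

Each adapter trains and stores only A and B for every weight matrix it adapts. That is why one base model can be paired with many small, task-specific adapters.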

You’ll also want versioning and reproducibility. If you can’t track which version of a model generated which result, things get messy fast—especially in regulated industries.
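You can keep outputs traceable whatever the provider offers natively: just store provenance alongside each result. The sketch below uses a hypothetical record schema (`GenerationRecord`) whose field names are illustrative, not a provider API. It hashes prompts and outputs with SHA-256, so you can later check which prompt produced an output without keeping sensitive text in the log.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any, Dict, Optional

@dataclass
class GenerationRecord:
    """Provenance for one model output (hypothetical schema)."""
    model_id: str              # model name as the provider exposes it
    model_version: str         # pinned version or revision, not "latest"
    adapter_id: Optional[str]  # LoRA adapter used, if any
    params: Dict[str, Any]     # sampling settings such as temperature
    prompt_sha256: str         # hash of the exact prompt text sent
    output_sha256: str         # hash of the returned text
    created_at: str            # UTC timestamp in ISO 8601

def sha256_text(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def make_record(model_id: str, model_version: str, prompt: str, output: str,
                params: Dict[str, Any], adapter_id: Optional[str] = None) -> GenerationRecord:
    return GenerationRecord(
        model_id=model_id,
        model_version=model_version,
        adapter_id=adapter_id,
        params=params,
        prompt_sha256=sha256_text(prompt),
        output_sha256=sha256_text(output),
        created_at=datetime.now(timezone.utc).isoformat(),
    )

def to_json_line(record: GenerationRecord) -> str:
    """Serialize one record as a JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)

if __name__ == "__main__":
    rec = make_record("example-model", "2025-01-15", "Summarize this report.",
                      "A short summary.", {"temperature": 0.0})
    print(to_json_line(rec))
```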

At GreenNode, for example, you get access to a library of 20+ prebuilt AI models. You can also fine-tune or host your own. Everything runs on high-performance GPU infrastructure purpose-built for LLMs and multimodal workloads.

Evaluate Infrastructure Performance and GPU Access

Let’s be real: no matter how capable the model is, its speed and reliability in production depend on the hardware it runs on. One of the first things to check with a MaaS provider is what kind of GPUs they use. Do they offer data-center GPUs with enough memory and bandwidth for LLM inference? How many of them can be allocated to your models? Or are you unknowingly running on consumer-grade GPUs that throttle performance under pressure?

- Auto-scaling is another big one. When your traffic spikes, can the system scale up inference nodes on demand? If not, expect slowdowns or even dropped requests.

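- Geographic coverage and high availability matter if your users are spread across regions. Serving from a nearby region lowers latency, and lower latency means happier customers. Redundant zones keep the service up if one fails. Look for providers with edge routing and availability zones in the regions you serve.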

- Then there’s the choice between shared vs. dedicated compute. Shared is more cost-effective and works well for development or low-traffic workloads. But for production—especially when you need consistent performance or strict SLAs—dedicated compute is often worth the extra cost.
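A rough capacity estimate helps with both the auto-scaling and the shared-versus-dedicated questions. The sketch applies Little’s law: requests in flight equal the arrival rate times the average time each request spends in the system. It assumes you have measured three things yourself: your peak request rate, your average request latency, and how many concurrent requests one replica can serve at acceptable latency. The example numbers are hypothetical.

```python
import math

def replicas_needed(peak_rps: float, avg_latency_s: float,
                    concurrency_per_replica: int, headroom: float = 0.2) -> int:
    """Estimate inference replicas for peak load using Little's law.

    in_flight = arrival rate * average time in system. The headroom factor
    adds spare capacity so bursts above the measured peak don't queue at once.
    """
    if concurrency_per_replica <= 0:
        raise ValueError("concurrency_per_replica must be positive")
    in_flight = peak_rps * avg_latency_s
    required = in_flight * (1 + headroom) / concurrency_per_replica
    return max(1, math.ceil(required))

if __name__ == "__main__":
    # Hypothetical peak: 40 req/s, 1.5 s per request, 16 concurrent requests per replica.
    print(replicas_needed(40, 1.5, 16))  # prints 5
```

Here 60 requests are in flight at peak, and 20% headroom raises that to 72, or 4.5 replicas, which rounds up to 5. Dedicated nodes start to make sense when your steady load keeps several replicas busy around the clock. If even peak load fills only a fraction of one replica, shared or serverless capacity is likely the better fit.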

That said, many teams struggle with the decision between dedicated inference and serverless/shared setups. Here are a few important questions to ask yourself:

- Is your traffic high enough that you need to reserve dedicated GPU nodes just for this workload?

- Are you willing to pay by the hour for GPU resources, instead of by the token, to get that capacity?

- How much extra latency (for example, queueing or cold starts on shared infrastructure) are you willing to accept in exchange for a lower cost?

- What throughput guarantees does the provider offer for shared workloads? And does performance vary depending on the user’s geographic location?

Thinking through these trade-offs will help you choose an infrastructure setup that actually fits your use case—without overpaying or underperforming. This will lead you to the next part of this blog: Money.

Analyze Pricing, Flexibility, and Cost Optimization

Let’s talk money—because model quality doesn’t mean much if the cost structure makes it unsustainable.

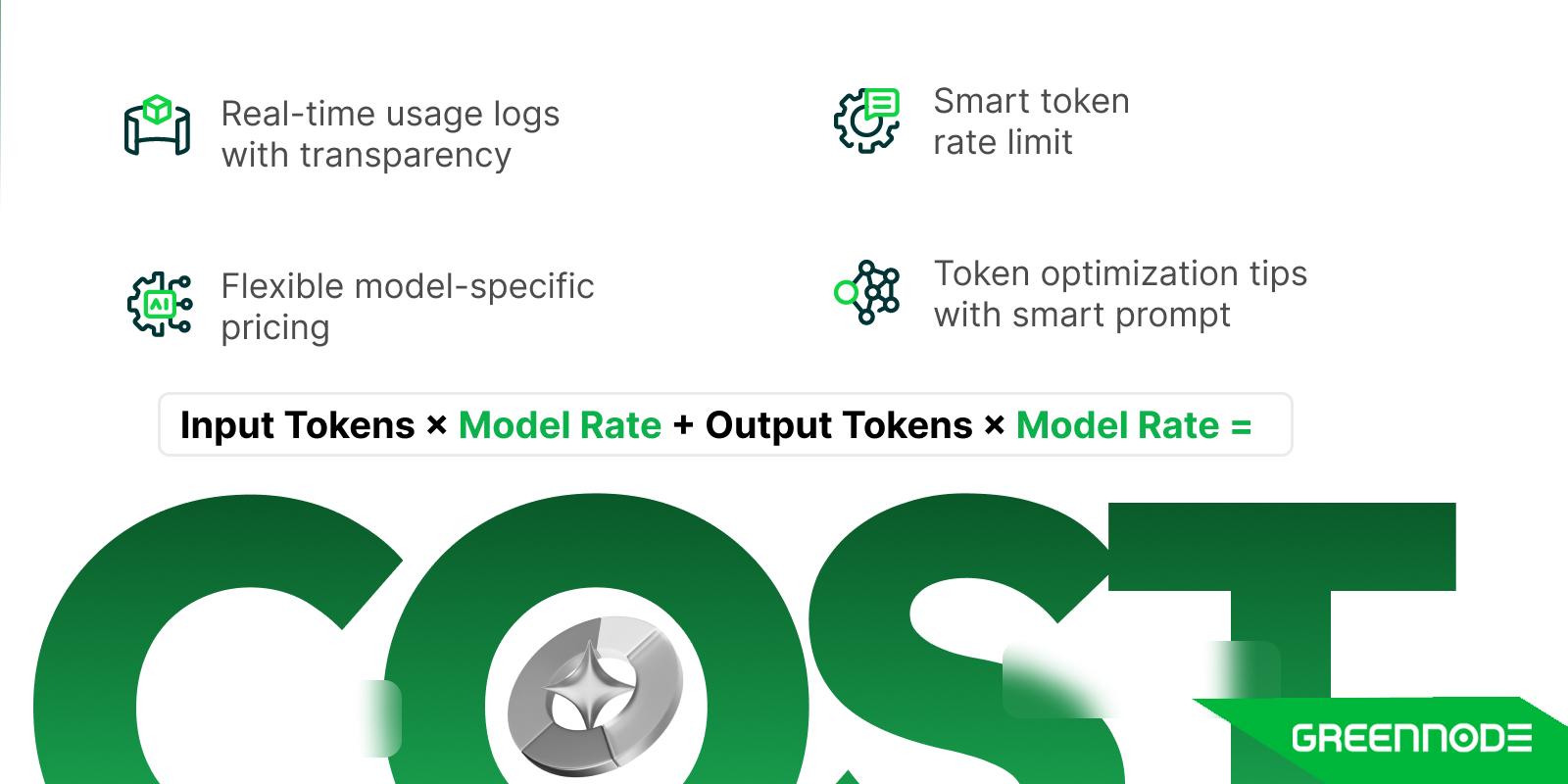

First, check how the provider charges for usage. Some use token-based pricing, typically billed per million input and output tokens. Others charge by the second or hour of GPU time. Both have pros and cons. Token-based pricing is predictable and cheap at light or bursty usage. Time-based pricing can be more cost-efficient at scale, especially for sustained heavy inference or fine-tuning.
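The choice between token-based and time-based pricing comes down to a break-even volume. The sketch below uses a hypothetical per-million-token price, a hypothetical hourly GPU price, and a hypothetical sustained throughput for one dedicated node. None of these are real provider quotes.

```python
HOURS_PER_MONTH = 730  # average month, for an always-on node

def token_cost(tokens: float, price_per_million: float) -> float:
    """Cost of a token volume under per-token pricing."""
    return tokens / 1_000_000 * price_per_million

def break_even_tokens(price_per_million: float, price_per_gpu_hour: float,
                      gpu_hours: float = HOURS_PER_MONTH) -> float:
    """Monthly token volume at which per-token and per-GPU-hour bills are equal."""
    gpu_bill = gpu_hours * price_per_gpu_hour
    return gpu_bill / price_per_million * 1_000_000

def node_capacity_tokens(tokens_per_second: float,
                         gpu_hours: float = HOURS_PER_MONTH) -> float:
    """Upper bound on tokens one node can process if it runs at full throughput."""
    return tokens_per_second * 3600 * gpu_hours

if __name__ == "__main__":
    # Hypothetical prices and throughput, not real quotes from any provider.
    be = break_even_tokens(price_per_million=0.50, price_per_gpu_hour=2.00)
    cap = node_capacity_tokens(tokens_per_second=1_500)
    print(f"break-even: {be:,.0f} tokens/month; one node can serve up to {cap:,.0f}")
```

In this hypothetical, a dedicated node pays off above roughly 2.9 billion tokens a month, which is within what one node could serve. Real bills are messier. Input and output tokens are often priced differently, and a reserved GPU rarely runs at full utilization, so scale the capacity figure by the utilization you actually expect.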

Then look at payment models: is it strictly pay-as-you-go, or can you reserve compute upfront for discounted rates? For many teams, a hybrid setup works best—on-demand for dev and testing, reserved for production workloads.

You’ll also want transparent billing dashboards, real-time usage monitoring, and clear cost breakdowns by project or model. Hidden costs can add up fast, especially if you’re experimenting with multiple modalities like text, image, and speech models, each with different compute demands.
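Your provider’s dashboard may not break costs down the way you need. In that case you can compute the breakdown from raw usage records. The sketch assumes a hypothetical usage export with project, model, and input/output token counts, plus a made-up price table. Substitute your provider’s actual rate card.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Tuple

class UsageRecord(NamedTuple):
    project: str
    model: str
    input_tokens: int
    output_tokens: int

# Hypothetical USD prices per million tokens: (input, output). Not real rates.
PRICES: Dict[str, Tuple[float, float]] = {
    "chat-model": (0.20, 0.60),
    "embedding-model": (0.02, 0.00),
}

def cost_by_project_and_model(records: Iterable[UsageRecord]) -> Dict[Tuple[str, str], float]:
    """Sum spend per (project, model). Unknown models raise KeyError so
    unpriced usage is never silently counted as free."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    for r in records:
        in_price, out_price = PRICES[r.model]
        totals[(r.project, r.model)] += (
            r.input_tokens * in_price + r.output_tokens * out_price
        ) / 1_000_000
    return dict(totals)

if __name__ == "__main__":
    usage = [
        UsageRecord("support-bot", "chat-model", 1_000_000, 500_000),
        UsageRecord("support-bot", "chat-model", 1_000_000, 500_000),
        UsageRecord("search", "embedding-model", 2_000_000, 0),
    ]
    for (project, model), cost in sorted(cost_by_project_and_model(usage).items()):
        print(f"{project:12} {model:16} ${cost:.2f}")
```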

Consider Developer Experience and API Usability

When choosing a Model-as-a-Service provider, the developer experience is just as important as the model itself. Look for a platform with clean, RESTful or gRPC-based APIs, along with SDKs in popular languages like Python, Go, and JavaScript. This ensures quick integration with your existing stack.

It’s also helpful to have multiple ways to interact with the platform—such as a web dashboard, CLI tools, and a fully documented API. Platforms that offer robust documentation, sample code, and error handling guides can drastically reduce onboarding time and integration friction.
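Error-handling guidance matters because production integrations must cope with rate limits and transient failures. The sketch below is a generic retry wrapper with exponential backoff and jitter. It assumes your client raises an exception carrying the HTTP status code. The `APIError` class and the set of retryable codes are illustrative assumptions, not any particular provider’s SDK.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Status codes usually worth retrying: rate limiting and transient server errors.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}

class APIError(Exception):
    """Hypothetical error type carrying the HTTP status of a failed call."""
    def __init__(self, status: int, message: str = "") -> None:
        super().__init__(f"HTTP {status}: {message}")
        self.status = status

def call_with_retries(fn: Callable[[], T], max_attempts: int = 5,
                      base_delay_s: float = 0.5, max_delay_s: float = 8.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(); retry retryable APIErrors with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except APIError as err:
            if err.status not in RETRYABLE_STATUS or attempt == max_attempts:
                raise  # client errors (400, 401, ...) or retries exhausted
            # The delay cap doubles each attempt (0.5 s, 1 s, 2 s, ...) up to max_delay_s;
            # full jitter sleeps a random fraction of it so clients don't retry in lockstep.
            delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")
```

To use it, wrap your SDK or HTTP call in a zero-argument function, such as `call_with_retries(lambda: client_call(payload))`. Here `client_call` is a hypothetical stand-in for your provider’s client. The injectable `sleep` lets you test the helper without real waits. If the provider sends a `Retry-After` header with 429 responses, honoring it is usually better than a computed delay.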

According to Amplience’s vendor checklist, ease of integration and clear documentation are two of the most critical elements for enterprise AI adoption. A platform that prioritizes the developer journey will help you move faster from idea to deployment.

Look for Platform-Level Features and Integrations

Beyond the core model APIs, mature MaaS providers offer a suite of platform-level features that improve visibility, control, and integration.

Look for a real-time monitoring dashboard showing usage metrics like latency, token count, and error rates. Features like prompt versioning, audit logs, and rollback capabilities are essential for debugging and maintaining consistent model behavior—especially in production environments.
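Prompt versioning and rollback are easiest to evaluate once you know what they should guarantee. The sketch below is a hypothetical in-memory `PromptRegistry`, not any platform’s API. Each publish appends a new version, rollback only moves the active pointer, and every change is written to an audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List

@dataclass
class PromptRegistry:
    """In-memory prompt version history with an audit trail (hypothetical)."""
    versions: List[str] = field(default_factory=list)
    active: int = -1  # index into versions; -1 means nothing published yet
    audit_log: List[str] = field(default_factory=list)

    def _audit(self, event: str) -> None:
        ts = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(f"{ts} {event}")

    def publish(self, template: str, author: str) -> int:
        """Append a new version and make it active; returns its index."""
        self.versions.append(template)
        self.active = len(self.versions) - 1
        self._audit(f"{author} published v{self.active}")
        return self.active

    def rollback(self, version: int, author: str) -> None:
        """Reactivate an earlier version without deleting any history."""
        if not 0 <= version < len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.active = version
        self._audit(f"{author} rolled back to v{version}")

    def current(self) -> str:
        if self.active < 0:
            raise LookupError("no prompt published yet")
        return self.versions[self.active]
```

Because rollback never deletes a version, the audit log and the version list together let you reconstruct which prompt was live at any point.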

It’s also worth checking whether the provider integrates with vector databases, embedding pipelines, or retrieval-augmented generation (RAG) workflows. Some platforms go further by supporting MLOps tools, role-based access, and secure gateways for enterprise environments.
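Here is what the RAG integration point involves in practice. Retrieval embeds documents and queries as vectors and returns the documents closest to the query. Those documents are then added to the prompt. The sketch below stands in for a real embedding model and vector database with an in-memory list and tiny hand-written vectors. In practice the vectors would come from an embedding model and live in a vector database.

```python
import math
from typing import List, Sequence, Tuple

Doc = Tuple[str, Sequence[float]]  # (text, embedding vector)

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec: Sequence[float], docs: List[Doc], k: int = 2) -> List[str]:
    """Return the texts of the k documents most similar to the query."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(question: str, context: List[str]) -> str:
    """Ground the model in retrieved passages before asking the question."""
    bullets = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{bullets}\n\nQuestion: {question}"

if __name__ == "__main__":
    # Toy 3-dimensional "embeddings"; real ones come from an embedding model.
    docs = [
        ("Refunds are processed within 5 business days.", [1.0, 0.0, 0.0]),
        ("Office hours are 9:00 to 17:00.", [0.0, 1.0, 0.0]),
        ("Refund requests require a receipt.", [0.9, 0.1, 0.0]),
    ]
    query_vec = [1.0, 0.05, 0.0]  # pretend embedding of "How do refunds work?"
    print(build_prompt("How do refunds work?", top_k(query_vec, docs)))
```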

As Squirro notes, enterprise-grade AI platforms should deliver not just raw inference but also orchestration features that help teams scale their models reliably and securely.

Assess Support, Community, and Ecosystem

Finally, don’t forget to zoom out and look at the bigger picture—the ecosystem around the platform. It’s not just about the tech; it’s about who’s behind it and how well they support you when things get messy (and they will).

Start with the basics: is the documentation clear, and are there real humans you can talk to if you hit a wall? Does the provider offer enterprise support, SLAs, or onboarding help if you're scaling up?

Then peek into their community presence. Are they active on GitHub and Discord? Hosting frequent webinars? Publishing useful updates—not just sales fluff? A lively, engaged ecosystem usually means the product is evolving, and you'll be able to grow with it. Responsive vendors who actually share knowledge are often the ones who stick with you when the hype fades and the real work begins.

Final Verdict

As more teams outgrow proprietary hosted APIs, open-source models running on customizable, transparent AI infrastructure are becoming the smarter long-term path. With the right Model-as-a-Service (MaaS) provider, you gain more control over cost, performance, and data. You also avoid lock-in and scaling headaches.

Choosing a provider that truly fits your team’s needs—technically and operationally—can unlock faster deployment cycles, better ROI, and safer enterprise AI adoption.

If you’re looking for a fast, reliable platform with a wide range of AI model types, don’t overlook GreenNode’s Model-as-a-Service offering. Built on powerful GPU infrastructure, GreenNode offers flexible deployment options, fine-tuning capabilities, and localized support. That makes it an ideal choice for startups, innovation teams, and enterprises ready to scale open-source AI.

Explore GreenNode’s Model-as-a-Service platform, or contact us to get $150 in free credit for your AI workload today!