Deciding between transfer learning and fine-tuning is a key step in building today’s machine learning and large language model (LLM) workflows. With pre-trained models becoming the backbone of everything from computer vision to enterprise-grade AI assistants, teams must decide whether to reuse these models as-is or invest in adapting them more deeply to their domain.

The difference isn’t just academic: it impacts training time, GPU consumption, model accuracy, and ultimately how well solutions perform in real-world environments. This article unpacks the distinctions between transfer learning and fine-tuning, helping you choose the right path to optimize both resources and outcomes in your ML projects.

What is Transfer Learning?

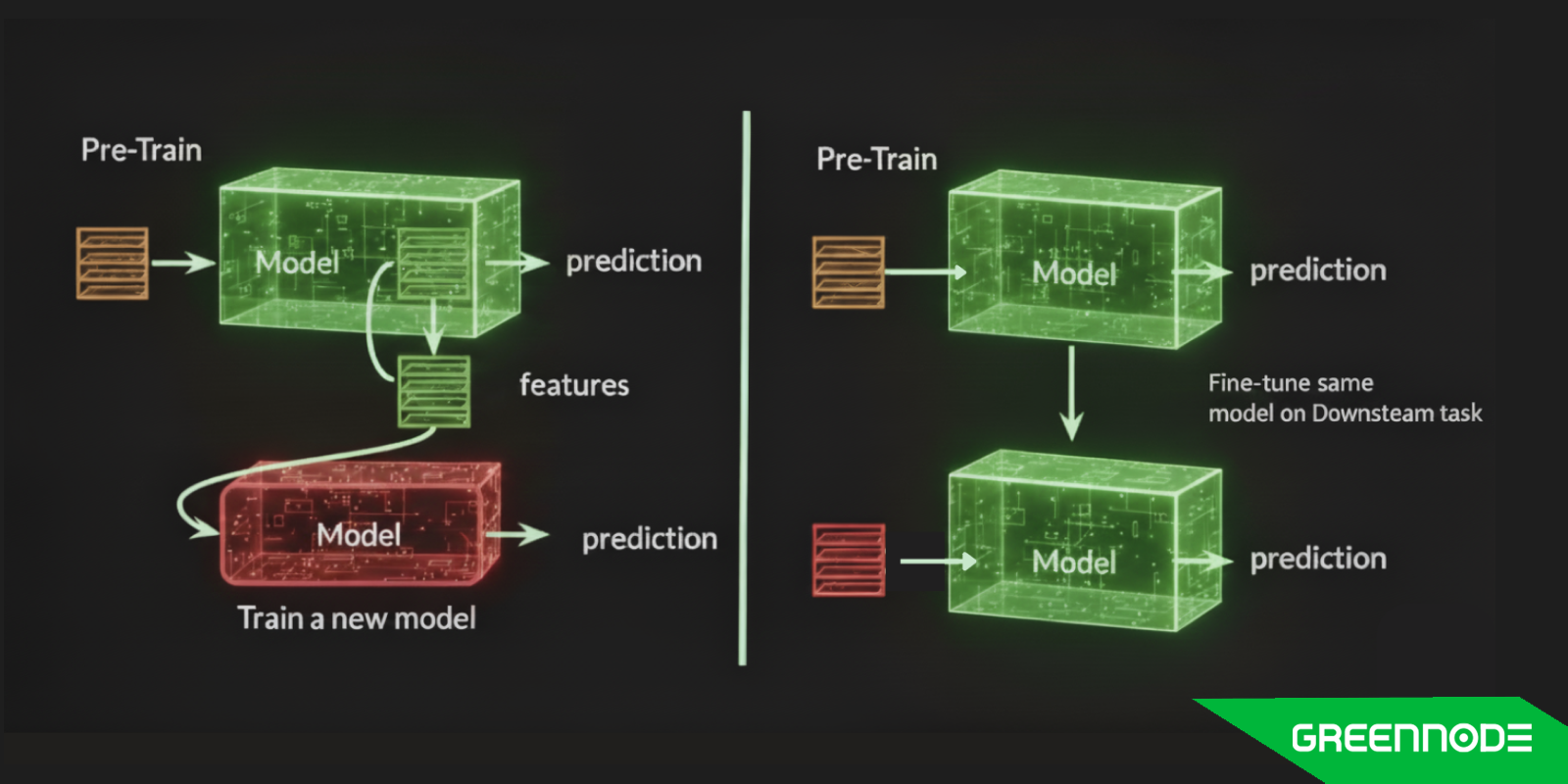

Transfer learning is a technique in machine learning where a model trained on one task is reused as the starting point for another, often related, task. Instead of building a model from scratch, you take advantage of the knowledge captured in a large, pre-trained model such as one trained on ImageNet for vision or BERT for natural language. By keeping most of the original parameters fixed and adapting only the final layers, transfer learning makes it possible to achieve good results with less data, less training time, and lower computational costs.

The core idea is efficiency: rather than relearning general features (edges in images, sentence structures in text), you focus only on the parts specific to your problem. For example, in computer vision, the early layers of a pre-trained model capture universal patterns like shapes or textures, while only the last layers need adjustment to classify medical images or detect defects in manufacturing.

This approach is particularly valuable when data is limited or resources are constrained, making it a widely used method across domains such as NLP, image recognition, and speech processing.
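The pre-trained layers never change in this setup, so their outputs can be computed once and reused. That is a large part of why transfer learning is cheap. The sketch below shows the idea with a small hypothetical stand-in backbone (`backbone`) and random toy data rather than a real pre-trained model. In practice the backbone would be something like an ImageNet ResNet with its classifier removed.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a pre-trained backbone; in practice this would be,
# e.g., an ImageNet ResNet with its final classifier removed.
backbone = nn.Sequential(nn.Linear(32, 64), nn.ReLU(), nn.Linear(64, 16), nn.ReLU())
for param in backbone.parameters():
    param.requires_grad = False  # the backbone stays frozen
backbone.eval()

# Toy dataset: 200 random samples with 2 classes (illustration only)
inputs = torch.randn(200, 32)
labels = (inputs[:, 0] > 0).long()

# The frozen backbone never changes, so its features are computed once and cached.
with torch.no_grad():
    features = backbone(inputs)  # shape: (200, 16)

# Only this small classifier head is trained, on the cached features.
head = nn.Linear(16, 2)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(head.parameters(), lr=0.1, momentum=0.9)

losses = []
for epoch in range(100):
    optimizer.zero_grad()
    loss = criterion(head(features), labels)
    loss.backward()  # gradients flow into the head only
    optimizer.step()
    losses.append(loss.item())

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Each epoch is just a forward and backward pass through one linear layer, no matter how large the real backbone is.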

Here is an example code structure in PyTorch for transfer learning, using a pre-trained ResNet-18 as a frozen feature extractor:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import models

# 1. Load a pre-trained model (e.g., ResNet-18)
# We set 'weights' to load the pre-trained weights from ImageNet
model_ft = models.resnet18(weights='IMAGENET1K_V1')

# 2. Freeze all the parameters (feature extraction layers)
# We iterate over the parameters and set requires_grad to False
for param in model_ft.parameters():
    param.requires_grad = False

# 3. Modify the final fully-connected (FC) layer
# ResNet-18's FC layer input features
num_ftrs = model_ft.fc.in_features

# Replace the FC layer with a new one for your specific number of classes (e.g., 2)
num_classes = 2  # Example: Ants and Bees
model_ft.fc = nn.Linear(num_ftrs, num_classes)

# 4. Define Loss Function and Optimizer
# Only the parameters of the new FC layer have requires_grad=True, so only they will be optimized.
criterion = nn.CrossEntropyLoss()
optimizer_ft = optim.SGD(model_ft.fc.parameters(), lr=0.001, momentum=0.9)

# 5. Training Loop (Simplified - you would replace this with your full training logic)
# (Your dataset and dataloaders would be defined here)
# for epoch in range(num_epochs):
#     for inputs, labels in dataloaders['train']:
#         # ... training steps ...
#         optimizer_ft.zero_grad()
#         outputs = model_ft(inputs)
#         loss = criterion(outputs, labels)
#         loss.backward()
#         optimizer_ft.step()
```

What is Fine-Tuning?

Fine-tuning is the process of taking a pre-trained model and updating its parameters on a new dataset so it can perform well on a specific task. Unlike transfer learning, where most of the original model’s weights remain frozen, fine-tuning allows some or all of the layers to continue learning during training. This makes the model more adaptable, especially when the target task is different from the one it was originally trained on.

At its core, fine-tuning strikes a balance: it leverages the general knowledge captured in a large pre-trained model while adjusting it to fit the nuances of a new domain. For example, a language model like BERT can be fine-tuned for sentiment analysis, question answering, or medical text classification. Similarly, an image model trained on ImageNet can be fine-tuned to detect diseases in X-rays or classify defects in industrial products.

Fine-tuning can be done in different ways:

- Full fine-tuning: all layers are updated, often with a lower learning rate to avoid destroying useful pre-trained features.

- Partial fine-tuning: only the later layers are unfrozen, while the early ones remain fixed.

- Parameter-efficient fine-tuning (PEFT): techniques like LoRA or adapter layers update only a small subset of parameters, reducing compute and memory costs.

Because it adapts the model more deeply, fine-tuning generally requires more computation and a larger dataset than transfer learning. But when applied correctly, it can deliver higher accuracy and domain-specific performance.
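To make the difference in scope concrete, the sketch below compares head-only training (the transfer-learning baseline) with partial and full fine-tuning. It uses a small hypothetical stand-in network and counts how many parameters each strategy would update. The block names `early`, `late`, and `head` and the helper functions are illustrative assumptions, not part of any library. PEFT methods are not covered here.

```python
from collections import OrderedDict

import torch.nn as nn


def build_model(num_classes: int = 2) -> nn.Sequential:
    """Hypothetical stand-in for a pre-trained network with a new classifier head."""
    return nn.Sequential(OrderedDict([
        ("early", nn.Sequential(nn.Linear(128, 128), nn.ReLU())),  # generic features
        ("late", nn.Sequential(nn.Linear(128, 64), nn.ReLU())),    # more task-specific features
        ("head", nn.Linear(64, num_classes)),                      # new, randomly initialized
    ]))


# Which top-level blocks each strategy trains
TRAINABLE_BLOCKS = {
    "head_only": {"head"},                # transfer learning / feature extraction
    "partial": {"late", "head"},          # partial fine-tuning
    "full": {"early", "late", "head"},    # full fine-tuning
}


def configure(model: nn.Sequential, strategy: str) -> nn.Sequential:
    """Freeze or unfreeze each top-level block according to the strategy."""
    trainable = TRAINABLE_BLOCKS[strategy]
    for name, block in model.named_children():
        for param in block.parameters():
            param.requires_grad = name in trainable
    return model


def count_trainable(model: nn.Module) -> int:
    """Number of parameters the optimizer would actually update."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


for strategy in TRAINABLE_BLOCKS:
    model = configure(build_model(), strategy)
    print(f"{strategy}: {count_trainable(model):,} trainable parameters")
```

For this toy model the counts are 130, 8,386, and 24,898. With a real backbone, the gap between head-only training and full fine-tuning is far larger, and that gap drives the difference in compute.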

Here is an example code structure in PyTorch for fine-tuning, building upon the previous feature extraction example:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import models

# 1. Load a pre-trained model (e.g., ResNet-18)
model_ft = models.resnet18(weights='IMAGENET1K_V1')

# 2. Modify the final fully-connected (FC) layer
num_ftrs = model_ft.fc.in_features
num_classes = 2  # Example: Ants and Bees
model_ft.fc = nn.Linear(num_ftrs, num_classes)

# 3. Fine-Tuning - Unfreeze some or all layers
# In fine-tuning, you typically start by training the whole model,
# or unfreeze specific late layers and train them with a small learning rate.

# Option A: Train ALL parameters (full fine-tuning)
# Nothing was frozen after loading, so every parameter is already trainable by default;
# no 'param.requires_grad = True' loop is needed.

# Option B: Selective unfreezing (e.g., unfreeze the last block)
# To try this, uncomment the lines below: first freeze everything,
# then unfreeze the last convolutional block (layer4) and the new FC layer.
# for param in model_ft.parameters():
#     param.requires_grad = False
#
# for param in model_ft.layer4.parameters():
#     param.requires_grad = True
#
# for param in model_ft.fc.parameters():
#     param.requires_grad = True

# 4. Define Loss Function and Optimizer
# Crucially, we now pass *all* the parameters we want to train to the optimizer.
# We often use different learning rates for the unfrozen layers vs. the new layer.

# Collect all trainable parameters (every layer in Option A; layer4 and fc in Option B)
params_to_update = [param for param in model_ft.parameters() if param.requires_grad]

# Set a small learning rate (e.g., 0.0001) for the entire model or for the base layers,
# and a larger one for the new FC layer if you're using parameter groups.
criterion = nn.CrossEntropyLoss()

# Simple fine-tuning (one learning rate for all trainable params):
optimizer_ft = optim.SGD(params_to_update, lr=0.0001, momentum=0.9)  # Note the very small LR (0.0001)

# Advanced fine-tuning (Option B, different learning rates for different groups):
# optimizer_ft = optim.SGD([
#     {'params': model_ft.layer4.parameters(), 'lr': 0.00001},  # Very low LR for the pre-trained block
#     {'params': model_ft.fc.parameters(), 'lr': 0.001}         # Higher LR for the new layer
# ], momentum=0.9)

# 5. Training Loop (Simplified)
# (Your dataset and dataloaders would be defined here)
# The training loop is the same, but more parameters are updated.
# for epoch in range(num_epochs):
#     for inputs, labels in dataloaders['train']:
#         # ... training steps ...
#         optimizer_ft.zero_grad()
#         outputs = model_ft(inputs)
#         loss = criterion(outputs, labels)
#         loss.backward()
#         optimizer_ft.step()
```

Key Differences Between Fine-Tuning and Transfer Learning

Choosing between transfer learning and fine-tuning often comes down to practical trade-offs. Both methods rely on pre-trained models, but they differ in how much of the model is retrained, how much data is needed, and the balance between efficiency and accuracy. The table below highlights the key differences across the most important aspects.

| Aspect | Transfer Learning | Fine-tuning |
|---|---|---|
| Training scope and layer freezing | Most layers remain frozen; typically only the final classifier head is trained. | Some or all layers are unfrozen and updated during training. |
| Data requirements and domain shift | Works well with small datasets when the new task is similar to the pre-training domain. | Requires more data, especially if the new task is very different from the original domain. |
| Compute cost and scalability | Low compute cost and faster training, since fewer parameters are updated. | Higher compute cost and longer training times, as more parameters are optimized. |
| Performance trade-offs and risk of overfitting | More stable and less prone to overfitting but may underperform if the target domain is very different. | Can achieve higher accuracy and domain-specific performance, but there is a greater risk of overfitting if data is limited. |

In short, transfer learning is the go-to option when you need speed, efficiency, and solid performance with limited data, while fine-tuning is the better choice when accuracy and domain adaptation are critical. Understanding these distinctions helps teams make informed decisions about which approach best fits their data, resources, and project goals.
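The compute gap in the table can be estimated with simple arithmetic. The helper below is a rough sketch that counts only parameter-related memory. It assumes every weight is stored, and each trainable weight also needs a gradient plus the optimizer's per-parameter state. It defaults to two state values, as for Adam, which is an assumption since the examples above use SGD with momentum (one value). Activations and framework overhead are ignored, so real usage will be higher. The 100M-parameter model is hypothetical.

```python
def training_memory_bytes(total_params: int, trainable_params: int,
                          optimizer_states: int = 2, bytes_per_value: int = 4) -> int:
    """Rough parameter-related training memory, ignoring activations and overhead.

    Every parameter stores its weight. Each trainable parameter also stores a
    gradient plus `optimizer_states` extra values (2 for Adam's moment
    estimates, 1 for SGD with momentum). Defaults assume fp32 (4 bytes).
    """
    frozen_params = total_params - trainable_params
    values = frozen_params + (2 + optimizer_states) * trainable_params
    return values * bytes_per_value


def to_mib(num_bytes: int) -> float:
    return num_bytes / 2**20


# Hypothetical 100M-parameter model trained in fp32 with Adam
total = 100_000_000
head_only = training_memory_bytes(total, trainable_params=1_000_000)
full = training_memory_bytes(total, trainable_params=total)
print(f"head only: ~{to_mib(head_only):.0f} MiB, full fine-tuning: ~{to_mib(full):.0f} MiB")
```

For this hypothetical model, the estimate is about 393 MiB when training a 1M-parameter head versus about 1,526 MiB for full fine-tuning. Nearly all of the difference comes from gradients and optimizer state that frozen layers do not need.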

When to Use Transfer Learning vs Fine-Tuning

Deciding between transfer learning and fine-tuning comes down to the size of your dataset, the resources you have, and how specific your task is. Transfer learning works best when you need something quick and efficient, while fine-tuning is the better choice when accuracy and domain adaptation matter most. In some cases, a mix of both can give the right balance. The following guide breaks down when each option makes the most sense.

When to Use Transfer Learning

- Small datasets: Ideal when labeled data is limited (e.g., medical imaging, niche text classification).

- Resource constraints: Suitable when training budgets or GPU resources are tight.

- Similar domains: Works well if the target task is close to the pre-training domain (e.g., using ImageNet features for other natural images).

- Rapid prototyping: Useful for quick proof-of-concepts or baseline models.

- Low risk of overfitting: Preferred when you want stable performance without heavy tuning.

When to Use Fine-Tuning

- Large, high-quality datasets: Necessary to adapt models deeply (e.g., training LLMs for legal or scientific text).

- Domain shift: Best when the new task is very different from the original (e.g., adapting general BERT to biomedical NLP).

- High performance required: Used when accuracy and domain-specific generalization are critical.

- Enterprise/production use cases: Common in applications where a generic model underperforms without customization.

- Sufficient compute available: Practical when training time and GPU budget are not a major constraint.


When to Use Hybrid Approaches (Adapters, LoRA, Prompt Tuning)

- Very large models: Useful when full fine-tuning is too costly.

- Need for efficiency: Keeps most parameters frozen while adapting only small subsets.

- Multi-domain adaptation: Flexible for deploying the same backbone across many tasks.

- LLM customization: Popular for adapting foundation models (GPT, LLaMA, etc.) without retraining from scratch.

Future Trends in Transfer Learning & Fine-Tuning

Parameter-Efficient Fine-Tuning (LoRA, Adapters, Prefix Tuning)

As models scale into billions of parameters, retraining the entire network for every new task becomes impractical. This has led to the rise of parameter-efficient fine-tuning (PEFT) methods. Techniques such as LoRA (Low-Rank Adaptation), adapter layers, and prefix tuning update only a small fraction of a model’s parameters while keeping the majority frozen. This approach reduces compute and memory demands, lowers costs, and makes it possible to adapt large language models on commodity hardware without sacrificing much accuracy.
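To show what "updating only a small fraction of parameters" means, here is a minimal LoRA-style sketch in plain PyTorch. The original `nn.Linear` is frozen, and a trainable low-rank update `B @ A` (rank `r`, scaled by `alpha / r`) is added to its output. The class name `LoRALinear` and the default `r` and `alpha` values are illustrative choices. Production code would typically rely on a dedicated library such as Hugging Face PEFT rather than this hand-rolled version.

```python
import torch
import torch.nn as nn


class LoRALinear(nn.Module):
    """Minimal LoRA sketch: y = W x + (alpha / r) * B A x, with W frozen."""

    def __init__(self, base: nn.Linear, r: int = 8, alpha: float = 16.0):
        super().__init__()
        self.base = base
        for param in self.base.parameters():
            param.requires_grad = False  # pre-trained weights stay frozen
        # Low-rank factors: A gets small random values and B starts at zero,
        # so the wrapped layer initially behaves exactly like the original.
        self.lora_A = nn.Parameter(torch.randn(r, base.in_features) * 0.01)
        self.lora_B = nn.Parameter(torch.zeros(base.out_features, r))
        self.scaling = alpha / r

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, in_features) -> (batch, r) -> (batch, out_features)
        update = (x @ self.lora_A.T) @ self.lora_B.T
        return self.base(x) + update * self.scaling


base = nn.Linear(512, 512)
layer = LoRALinear(base, r=8)

trainable = sum(p.numel() for p in layer.parameters() if p.requires_grad)
total = sum(p.numel() for p in layer.parameters())
print(f"trainable: {trainable:,} of {total:,} parameters")
```

For a 512×512 layer with rank 8, only 8,192 of 270,848 parameters are trainable (about 3%). Because `lora_B` starts at zero, training begins from the pre-trained layer's exact behavior.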

Role of Foundation Models & Self-Supervised Learning

The shift toward foundation models trained on vast and diverse datasets is reshaping both transfer learning and fine-tuning strategies. These models, often trained using self-supervised learning, capture general-purpose representations that can be adapted to a wide range of downstream tasks. Instead of training from scratch, organizations now focus on adapting these foundations by choosing between light transfer learning, full fine-tuning, or parameter-efficient approaches depending on their goals. This trend accelerates deployment and democratizes access to advanced AI capabilities.

Multi-Modal Adaptation (Vision + Language Models)

The next frontier is multi-modal adaptation, where models integrate different data types such as text, images, speech, and video. Pre-trained multi-modal systems like CLIP or Flamingo demonstrate how transfer learning and fine-tuning can be extended beyond a single modality.

Fine-tuning multi-modal foundation models opens new possibilities, from automated report generation in healthcare (combining imaging data with clinical notes) to AI assistants that can interpret and respond to both visual and textual queries. As demand for richer, cross-domain applications grows, multi-modal adaptation will become a standard part of the model training toolkit.

FAQs

Is fine-tuning always better than transfer learning?

Not necessarily. Fine-tuning can deliver higher accuracy and domain-specific performance, but it also requires more data, compute, and careful tuning to avoid overfitting. Transfer learning is often more efficient and works well when data is limited or when the target task is similar to the pre-training domain.

How much data do you need for fine-tuning a model?

The amount varies widely depending on the model size and the complexity of the task. For smaller models, a few thousand labeled examples may be enough. Large language models or vision models usually benefit from tens of thousands of samples or more. As a rough rule, the more parameters you update and the further your task is from the pre-training domain, the more data you need to fine-tune effectively without overfitting.

What are parameter-efficient fine-tuning methods like LoRA?

Parameter-efficient fine-tuning (PEFT) techniques such as LoRA (Low-Rank Adaptation), adapters, and prefix tuning update only a small subset of model parameters while keeping the backbone frozen. This reduces training cost and memory usage, making it possible to adapt very large models with modest compute resources.

Can transfer learning and fine-tuning be combined in practice?

Yes. A common workflow is to start with transfer learning, freezing most layers and training only a new classifier head, then selectively unfreeze layers for fine-tuning once you have more data or need higher accuracy. Hybrid approaches like this offer a balance between efficiency and performance.
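That two-stage workflow can be sketched as follows. The sketch uses a small hypothetical stand-in model and random toy data, and the block names, learning rates, and step counts are illustrative assumptions. Stage 1 trains only the new head. Stage 2 unfreezes the last block and gives it a smaller learning rate than the head, mirroring the parameter-group optimizer in the ResNet-18 fine-tuning example.

```python
from collections import OrderedDict

import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a pre-trained model plus a new classifier head
model = nn.Sequential(OrderedDict([
    ("early", nn.Sequential(nn.Linear(20, 32), nn.ReLU())),
    ("late", nn.Sequential(nn.Linear(32, 32), nn.ReLU())),
    ("head", nn.Linear(32, 2)),
]))
inputs = torch.randn(256, 20)               # toy data, illustration only
labels = (inputs.sum(dim=1) > 0).long()
criterion = nn.CrossEntropyLoss()


def set_trainable(module: nn.Module, flag: bool) -> None:
    for param in module.parameters():
        param.requires_grad = flag


def train(optimizer: torch.optim.Optimizer, steps: int) -> float:
    """Full-batch training loop; returns the loss from the last step."""
    for _ in range(steps):
        optimizer.zero_grad()
        loss = criterion(model(inputs), labels)
        loss.backward()
        optimizer.step()
    return loss.item()


# Stage 1 (transfer learning): freeze everything except the new head.
set_trainable(model, False)
set_trainable(model.head, True)
stage1_loss = train(torch.optim.SGD(model.head.parameters(), lr=0.1, momentum=0.9), steps=100)

# Stage 2 (fine-tuning): unfreeze the last block with a smaller learning rate.
set_trainable(model.late, True)
optimizer = torch.optim.SGD([
    {"params": model.late.parameters(), "lr": 0.01},  # pre-trained block: small LR
    {"params": model.head.parameters(), "lr": 0.1},   # new head: larger LR
], momentum=0.9)
stage2_loss = train(optimizer, steps=100)

print(f"stage 1 loss: {stage1_loss:.3f}, stage 2 loss: {stage2_loss:.3f}")
```

The `early` block stays frozen in both stages, so no gradients are ever computed for it. This is where the approach keeps some of transfer learning's efficiency.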