The deeper you dive into AI, the more you realize how limitless this technology is. For an enterprise, using AI is already complex, but developing it is an even bigger challenge. So why does building and owning core AI technology matter? After all, you could simply plug into APIs from OpenAI, Gemini, Qwen, and the like.

The answer is simple: you can’t depend on the tech giants forever.

As one of the companies committed to developing core AI technologies from day one, we know this journey is far from easy. But every step forward lays the foundation for the kind of breakthroughs that will define the future.

GreenMind, an open-source reasoning large language model (LLM) developed by GreenNode, has now become the first Vietnamese LLM integrated into NVIDIA NIM. This milestone not only highlights the R&D capabilities of our engineering team, but also reinforces our long-term commitment to building sovereign AI.


Vietnam Rising on the Global AI Map

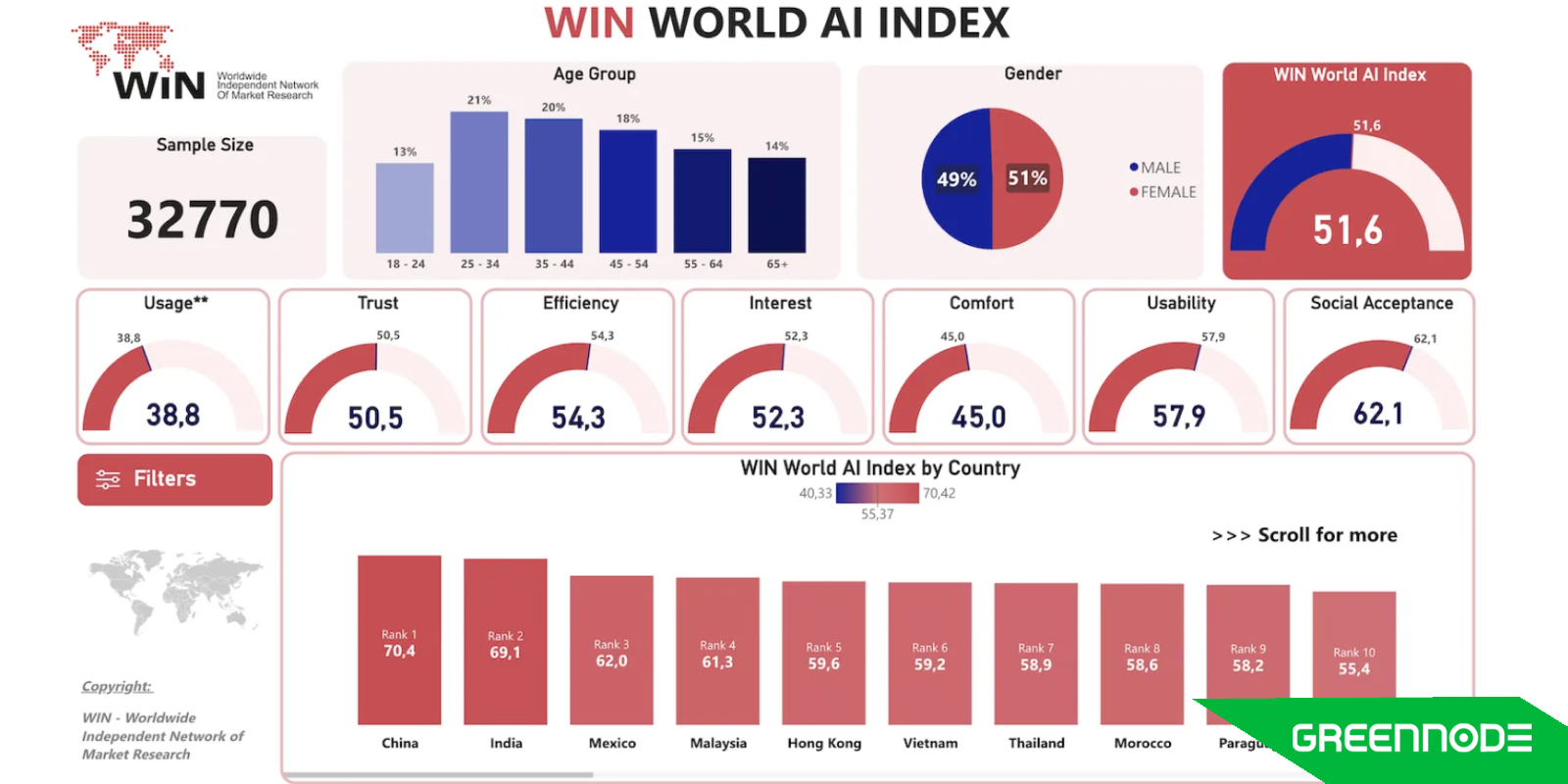

According to the WIN World AI Index 2025, Vietnam already ranks 6th out of 40 countries in AI readiness. Not bad at all: a top-10 global ranking is like qualifying for the AI Champions League.

The government’s national AI strategy has been ambitious from the start: top 5 in ASEAN, top 60 worldwide, five AI brands with regional recognition, and a national high-performance computing center. In other words, Vietnam isn’t just playing catch-up; it wants to be a serious AI contender.

But here’s the catch: until recently, Vietnam still lacked a homegrown large language model. Most existing LLMs are built abroad, and while they’re powerful, they often trip over Vietnamese nuance, from cultural context and legal terminology to everyday syntax. For enterprises, that’s a problem.

At the same time, companies everywhere are rethinking their reliance on closed-source, commercial LLMs. Why? Privacy concerns, unpredictable costs, and the dreaded vendor lock-in. Open-source models are winning attention because they give enterprises what they actually want: control, flexibility, and cost efficiency.

And that’s exactly the gap GreenMind was built to fill.

GreenMind and the Journey to NVIDIA NIM

Using a total of eight NVIDIA H100 Tensor Core GPUs for fine-tuning and inference, GreenNode AI Lab dramatically accelerated model refinement and boosted performance. Unlike conventional LLMs that deliver simple, immediate responses, GreenMind is designed to work through intermediate reasoning steps before generating a final answer, which strengthens its logical reasoning and contextual understanding.

To ground the model in Vietnamese realities, GreenNode curated a high-quality Vietnamese instruction dataset of 55,418 samples spanning culture, law, civic knowledge, and education.

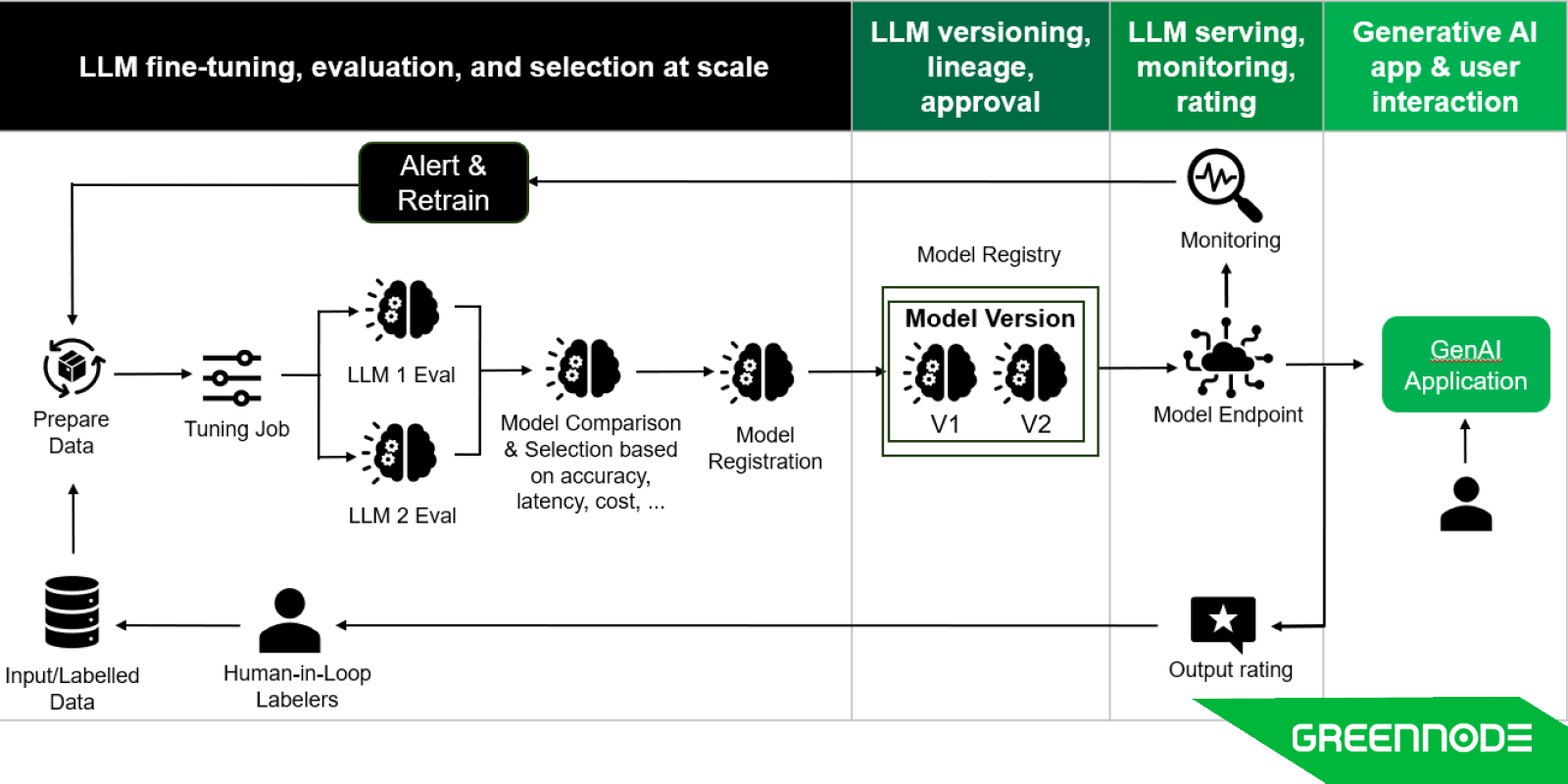

Through a self-improving data loop combined with a human-in-the-loop approach, GreenMind continuously refines its reasoning steps and outcomes. As a result, the model is well suited for enterprise AI assistants, context-aware conversational chatbots, advanced document and information retrieval, and complex Vietnamese reasoning tasks ranging from logic and mathematics to scenario analysis.

Key features:

- Chain-of-Thought (CoT): Enables multi-step logical reasoning.

- Group Relative Policy Optimization (GRPO): Rewards accuracy, reasoning correctness, and language quality with a structured reasoning-output format (<think> … </think> <answer> …).

- 55,418 high-quality Vietnamese samples: Covering law, civic knowledge, culture, and education, each verified by humans or drawn from trusted sources.

- Reasoning depth: Arithmetic, symbolic logic, commonsense inference, analogical and counterfactual reasoning.

This makes GreenMind especially suited for enterprise AI assistants, legal and financial reasoning, and context-aware chatbots.
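The GRPO bullet above describes rewards tied to a structured reasoning-output format. As a rough illustration only, and not GreenNode's actual training code, here is a minimal Python sketch of how rule-based rewards and group-relative advantages are commonly computed in GRPO. The reward weights, the exact-match accuracy check, the closing `</answer>` tag, and all function names are assumptions made for this example; the language-quality reward mentioned above is left out.

```python
import re
import statistics

# Assumed output layout: <think> ... </think> <answer> ... </answer>
# (the closing </answer> tag is an assumption for this sketch).
FORMAT_RE = re.compile(
    r"^\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion follows the think/answer layout, else 0.0."""
    return 1.0 if FORMAT_RE.match(completion) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the extracted answer exactly matches the reference (after trimming)."""
    match = FORMAT_RE.match(completion)
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str,
                 w_format: float = 0.2, w_accuracy: float = 0.8) -> float:
    """Weighted sum of rule-based rewards (hypothetical weights; no language-quality term)."""
    return (w_format * format_reward(completion)
            + w_accuracy * accuracy_reward(completion, reference))


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: normalize each reward by its group's mean and std.

    If every sample in the group scores the same, std is 0 and all advantages are 0.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical group of sampled completions for one prompt whose reference answer is "5".
group = [
    "<think>2 + 3 = 5</think> <answer>5</answer>",  # correct and well formatted
    "<think>2 + 3 = 6</think> <answer>6</answer>",  # well formatted but wrong
    "The answer is 5",                               # ignores the required format
]
rewards = [total_reward(c, "5") for c in group]
advantages = group_relative_advantages(rewards)
print(rewards, advantages)
```

Because each advantage is measured against the other samples drawn for the same prompt, GRPO does not need a separate value (critic) model; these advantages then weight the policy-gradient update for each sampled completion.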

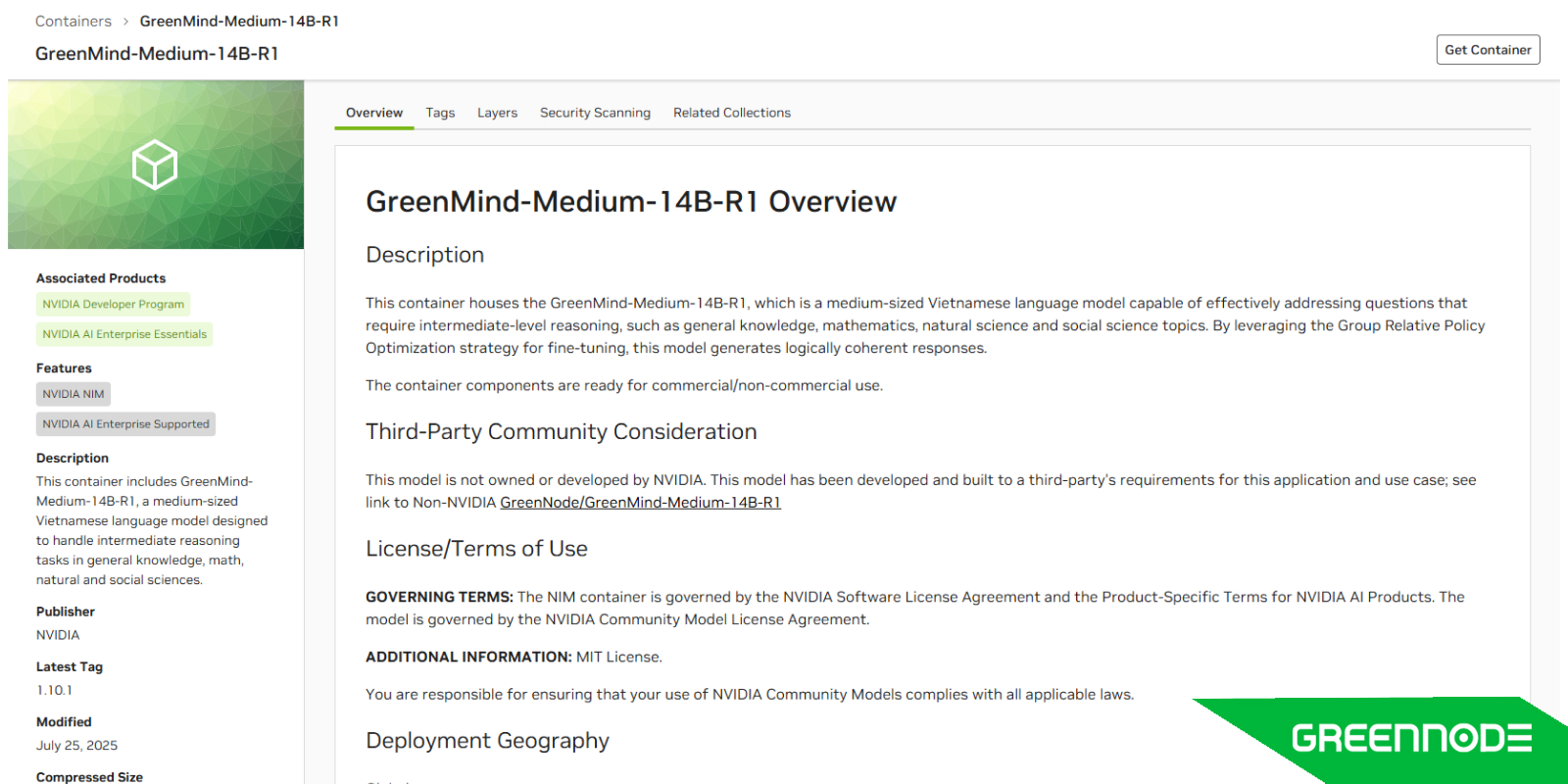

The integration of GreenMind into NVIDIA NIM was a demanding yet rewarding journey. Every model selected for NIM must undergo a rigorous validation process, including strict technical benchmarks and comprehensive documentation of architecture, datasets, fine-tuning methodology, and research.

After six months of relentless refinement following its initial release, GreenMind met the requirements and earned its place on NVIDIA NIM.

“Right from the start, our vision was to bring a Vietnamese LLM on par with global models such as Mistral, Claude, and others. GreenMind’s integration into NVIDIA NIM is not only a validation of our efforts but also a milestone for indigenous AI development in Vietnam. With the talent and expertise available in the country, we believe more groundbreaking ‘Made in Vietnam’ models will soon make their mark on the global stage,” said Võ Trọng Thư, Head of AI Lab at GreenNode.

What made GreenMind successful?

GreenMind may not claim to be the model that changes the entire game, but it has already proven that it can set a new standard for Vietnamese AI research and application. Its leap forward comes not only from its architecture, but also from the data that shaped it and the benchmarks that validated it.

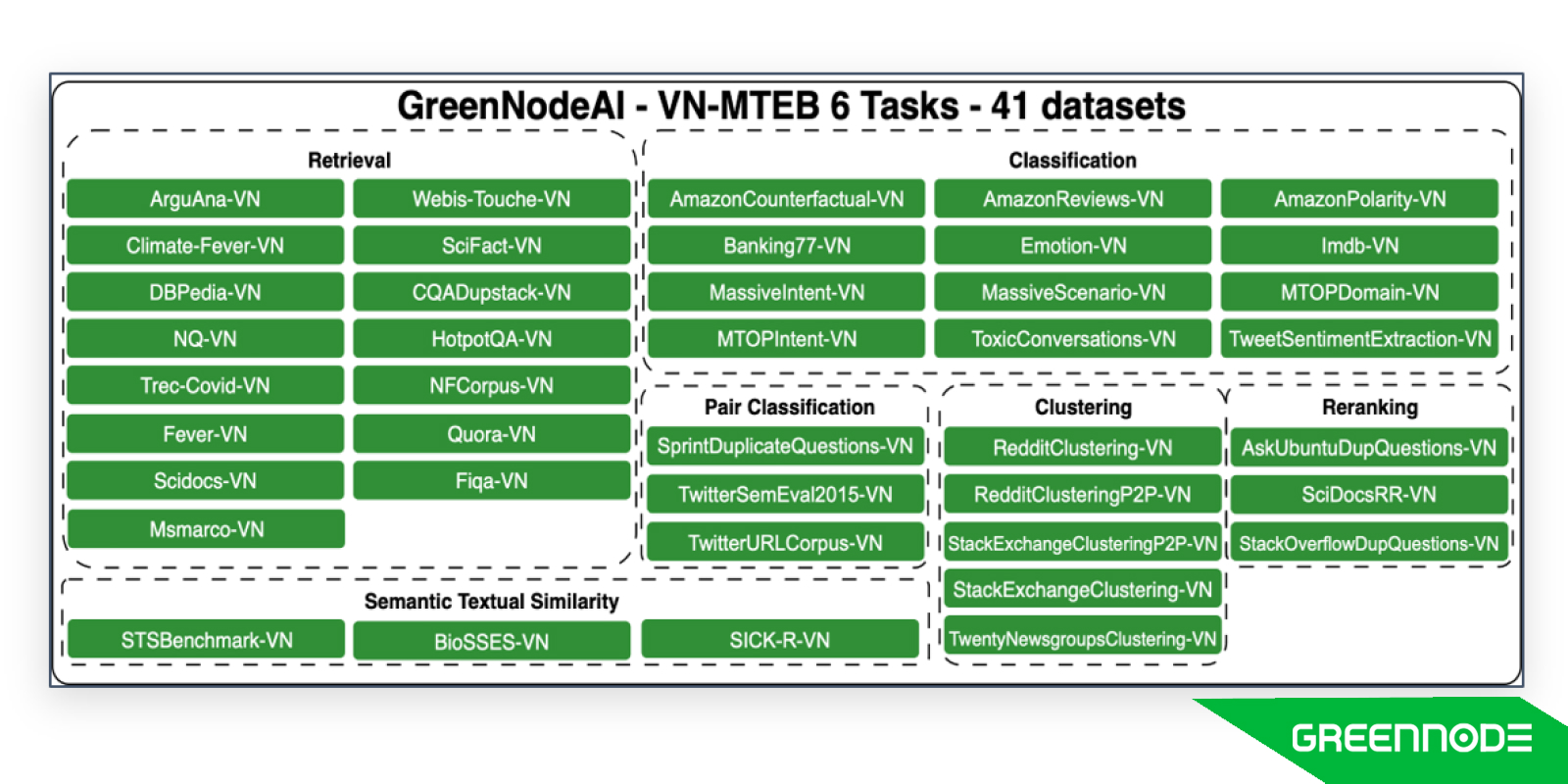

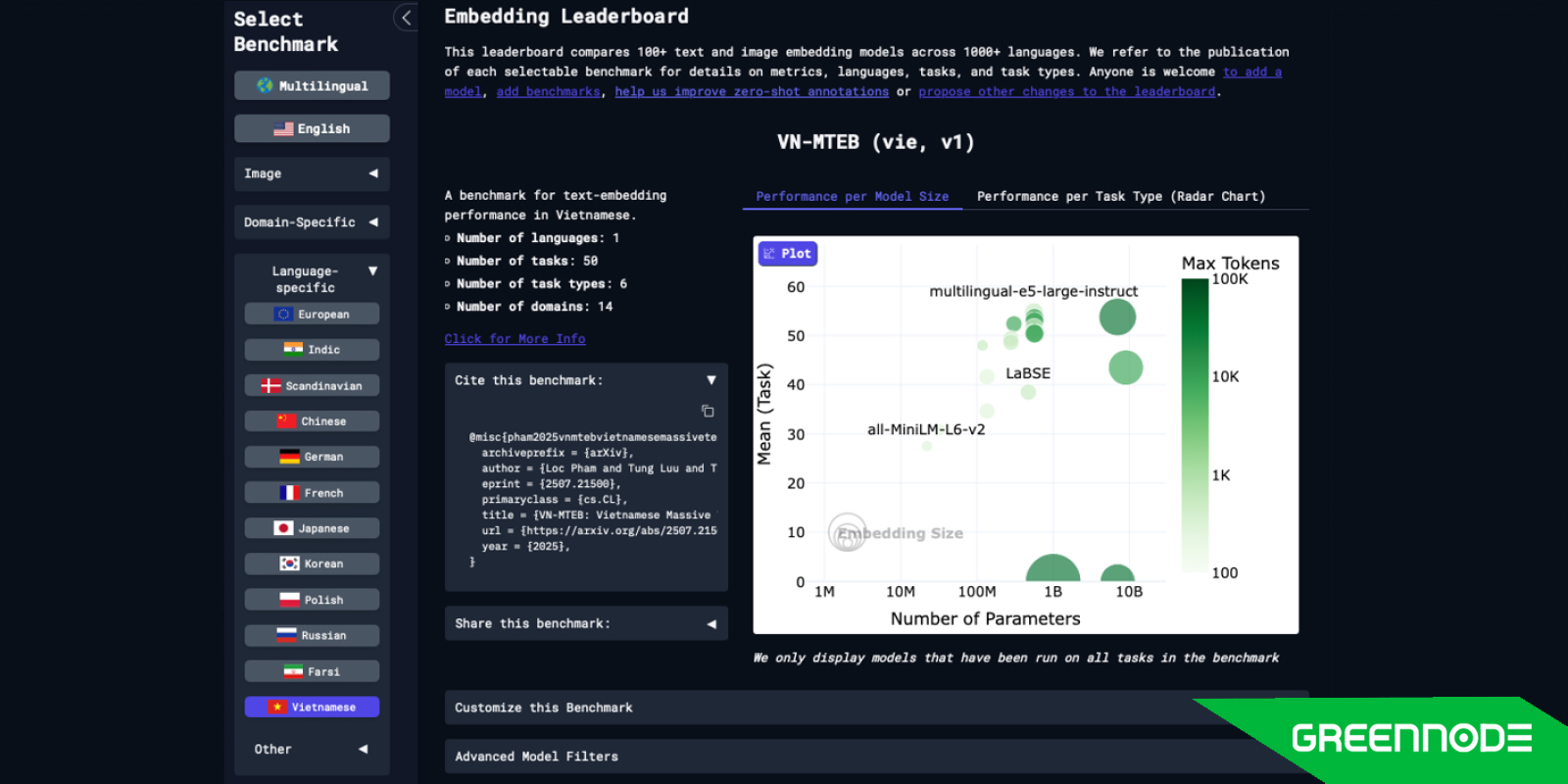

When building the dataset to fine-tune GreenMind, our team didn’t stop at conventional approaches. We extended and adapted the widely used Massive Text Embedding Benchmark (MTEB) into a Vietnamese-specific version (VN-MTEB), laying the foundation for a stronger benchmark ecosystem for Vietnamese datasets and models.

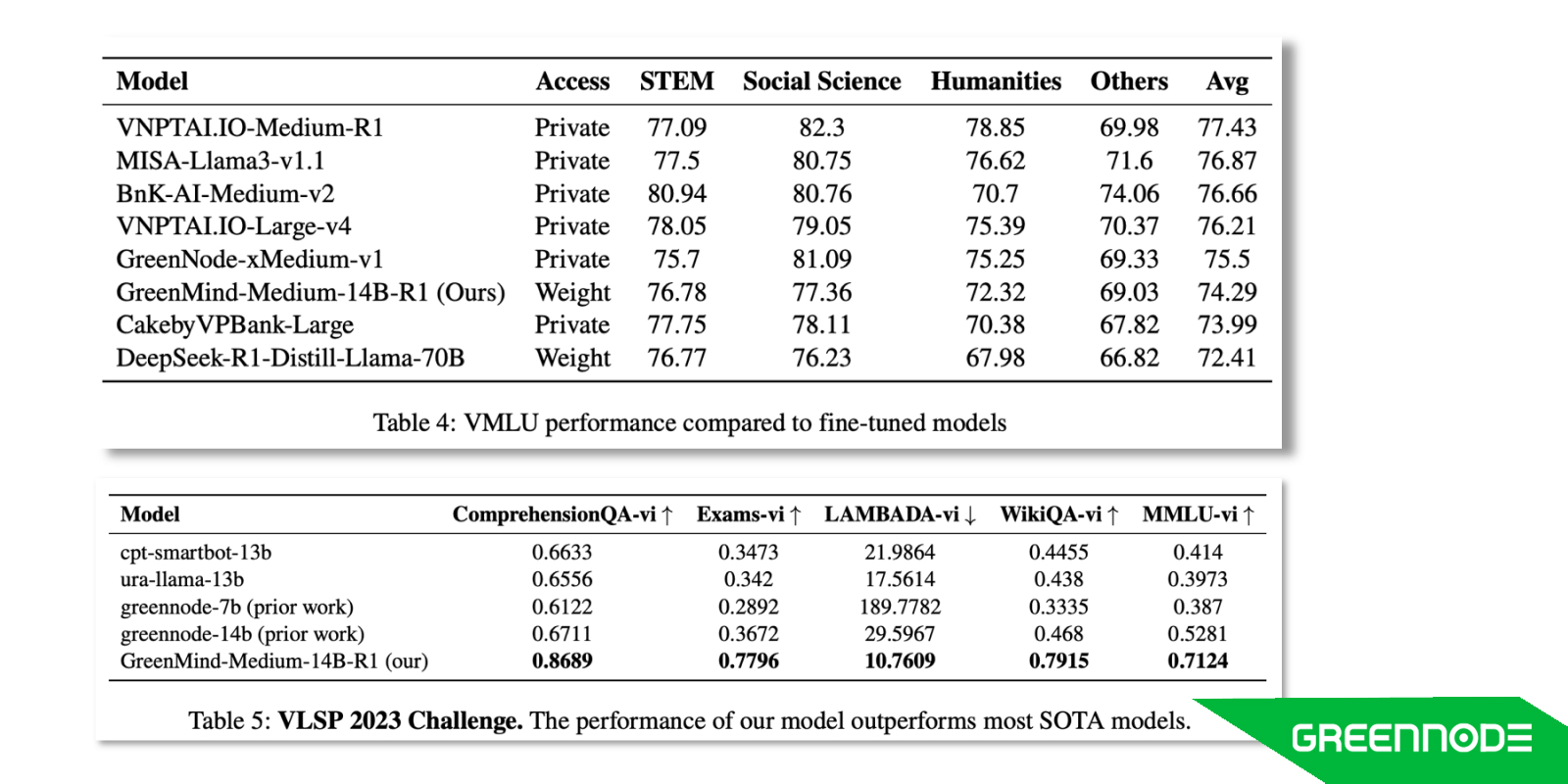

Building on this high-quality dataset, GreenMind has consistently delivered strong performance, securing top results across multiple leaderboards such as VMLU and VLSP. These achievements demonstrate not just technical capability, but also the broader impact of creating a benchmark framework tailored for Vietnamese.

And this is only the beginning. GreenMind is now publicly available, making it easier than ever to experiment, deploy, and innovate with Vietnam’s first reasoning LLM recognized on NVIDIA NIM.

- Try GreenMind now on the NVIDIA NGC Catalog

- Or run it directly on GreenNode Serverless AI

- Or use the SDK: GreenNode NIM

Final thoughts

When we said you can’t depend on the tech giants forever, GreenMind was the answer we had in mind. From curated datasets and reasoning-focused training to proving itself on benchmarks like VN-MTEB, VMLU, and VLSP and earning its place on NVIDIA NIM, it shows what happens when you build core AI instead of borrowing it.

The journey doesn’t stop here. GreenMind’s roadmap points toward lighter, faster versions, multimodal capabilities, and deeper ecosystem integration. But today, it already stands as proof that we can go beyond consuming AI technologies: we can create them, refine them, and deploy them at scale.

The future of AI will belong to those who don’t just use it, but who master the core. Grab your future today with GreenNode.