Open-source AI platforms are reshaping how machine learning and large language models (LLMs) are built, deployed, and scaled. By harnessing the power of community-driven development, these tools offer not only cost efficiency but also unmatched flexibility for researchers, engineers, and enterprises alike. From frameworks like PyTorch and TensorFlow to open LLMs such as LLaMA, Mistral, or GreenMind, the open-source ecosystem is accelerating innovation at a pace proprietary solutions can’t match.

In this article, we’ll explore the most important open-source AI platforms available today, highlight their strengths and trade-offs, and show where the field is headed. Whether you’re a developer choosing a framework, a business leader evaluating adoption, or simply curious about the future of AI, this guide will help you navigate the tools, use cases, comparisons, and trends shaping the next wave of AI development.

What is Open-Source AI?

Open-source AI refers to artificial intelligence models, frameworks, and tools whose source code or training assets are made freely available under open licenses. This means developers, researchers, and organizations can use, study, modify, and redistribute them without the restrictions of proprietary software.

In practice, open-source AI covers:

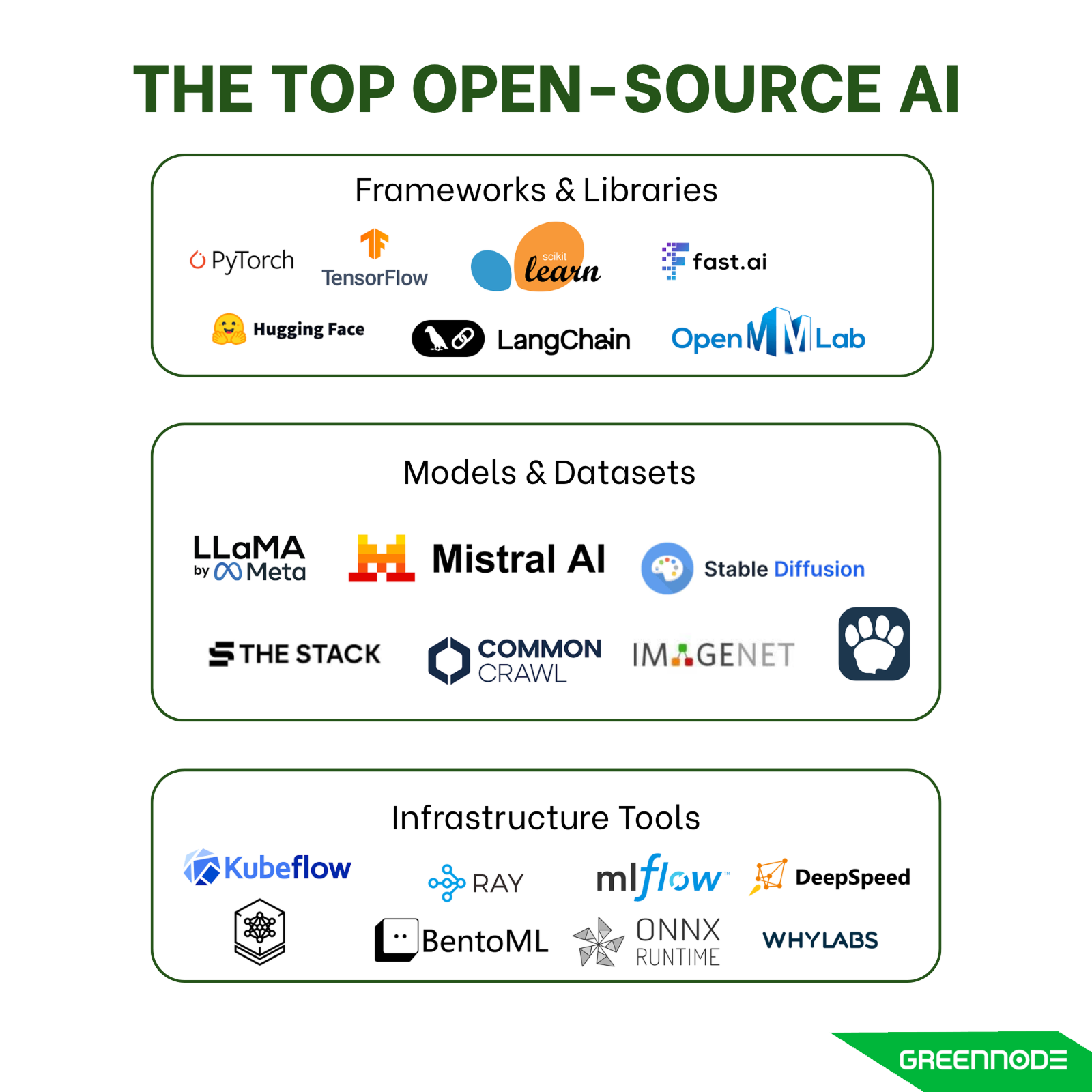

- Frameworks & libraries like PyTorch, TensorFlow, and Scikit-learn, which provide building blocks for machine learning.

- Models & datasets such as LLaMA, Falcon, Stable Diffusion, BLOOM, and GreenMind, where model weights, and in some cases training code and data, are released publicly.

- Infrastructure tools like MLflow, Kubeflow, and Ray, which support training, monitoring, and deployment.

Why Open-Source Matters for AI Development

Open-source has become a backbone of AI progress for several reasons:

- Innovation: Because code and models are shared openly, breakthroughs propagate faster across the community. Generative-AI projects like LangChain and Stable Diffusion rose sharply in popularity, entering GitHub’s top projects by contributor count in 2023.

- Trust: Transparent codebases allow users and auditors to inspect model internals, detect biases, and validate safety. Many companies now adopt open-source components not just for flexibility but to avoid black-box dependency. According to a McKinsey survey, 76% of organizations expect to increase use of open-source AI in coming years.

- Customization: With full access to code and architecture, research teams and businesses can adapt models to domain-specific needs by fine-tuning, pruning, or modifying components in ways proprietary systems often disallow. According to a survey from Anaconda, 58% of organizations already use open-source components in at least half of their AI/ML projects.

Because of these advantages, many of today’s leading AI frameworks and models have emerged from open research and open-source ecosystems.

Categories of Open-Source AI

Open-source AI can be grouped into several broad categories, each serving a different stage of the machine learning and large language model (LLM) workflow.

- Machine Learning Frameworks (PyTorch, TensorFlow, JAX, Scikit-learn): These are the foundational platforms that provide the building blocks for training and evaluating machine learning models. They define how models are structured, optimized, and executed.

- Deep Learning & LLM Libraries (Hugging Face Transformers, OpenMMLab, FastAI): High-level libraries and pre-trained models that simplify deep learning and LLM development. They accelerate innovation by allowing developers to adapt powerful models without starting from scratch.

- Workflow & Deployment Tools (MLflow, Ray, Kubeflow, ONNX): These tools support the end-to-end ML lifecycle: tracking experiments, scaling compute resources, exchanging models between frameworks, and deploying models in production environments reliably and efficiently.

- LLM Orchestration & Agents (LangChain, Haystack, LlamaIndex): A newer class of tools designed to connect LLMs with external data, APIs, and multi-step reasoning. They make it possible to build intelligent AI agents and enterprise-ready applications on top of foundation models.
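To make the orchestration idea concrete, here is a minimal sketch of the retrieve-then-prompt pattern that these tools automate. It uses only the Python standard library. The `retrieve` and `build_prompt` functions, the sample documents, and the word-overlap scoring are illustrative assumptions, not the API of LangChain, Haystack, or LlamaIndex, which typically rely on embedding-based search instead.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    # Keep only documents with at least one shared word, best match first.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [doc for _, doc in ranked[:top_k]]


def build_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Assemble the text that would be sent to an LLM (the model call is omitted)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base, for illustration only.
docs = [
    "MLflow tracks experiments and model versions.",
    "Ray distributes Python workloads across a cluster.",
    "Kubeflow runs ML pipelines on Kubernetes.",
]
question = "Which tool runs pipelines on Kubernetes?"
prompt = build_prompt(question, docs)
print(prompt)
```

Only the Kubeflow sentence shares words with the question, so it is the only context placed in the prompt. A real orchestration library adds the pieces left out here: the model call itself, document loaders, vector indexes, and multi-step tool use.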

When to Use Open-Source AI?

Open-source AI is not always the right fit for every organization, but when used strategically, it can unlock significant advantages in flexibility, cost savings, and innovation.

You should consider using open-source AI when:

- You need transparency and control: Open-source tools allow full visibility into how models and algorithms work, helping teams audit, customize, and align them with internal standards or compliance needs.

- You want to reduce vendor lock-in: By relying on community-driven frameworks instead of proprietary platforms, organizations maintain ownership of their data, models, and infrastructure decisions.

- You’re working with limited budgets: Open-source eliminates expensive licensing fees and enables cost-effective experimentation, which is ideal for startups, research labs, or public institutions.

- You need rapid innovation: Open-source ecosystems evolve quickly through global collaboration. New methods, benchmarks, and architectures are often available months before they appear in commercial tools.

- You want to build sovereign or domain-specific AI: For countries or enterprises developing localized models like GreenMind, Vietnam’s first open-source reasoning LLM integrated into NVIDIA NIM, open-source provides the foundation for sovereignty, adaptability, and long-term sustainability.

However, organizations should be ready to invest in skilled engineering teams, robust governance, and security measures to manage open-source tools effectively.

Top Open-Source AI Platforms in 2025

The open-source AI landscape is evolving fast, and platforms are at the center of that transformation. Unlike individual tools that handle specific steps like training or inference, AI platforms bring the entire machine learning workflow together. From data preparation to deployment and monitoring, these platforms make it easier for teams to collaborate, scale, and innovate. Below are five of the most proven and widely adopted open-source AI platforms in 2025.

Kubeflow

Kubeflow is one of the most complete open-source platforms for managing the full ML lifecycle on Kubernetes. It allows teams to build, train, and deploy models using scalable pipelines and supports integration with TensorFlow, PyTorch, XGBoost, and many others. Its biggest strengths are scalability and production reliability, making it ideal for enterprise-level AI infrastructure. However, Kubeflow’s complexity and Kubernetes dependency can make setup challenging for smaller teams.

Best for: Organizations already using Kubernetes that need robust, end-to-end ML orchestration.

MLflow

MLflow, created by Databricks, focuses on experiment tracking, model versioning, and deployment. It’s simple, lightweight, and integrates easily with other frameworks, making it one of the most widely used MLOps platforms. While MLflow doesn’t cover data processing or distributed training, it excels at model management and reproducibility, two essentials for collaborative AI development.

Best for: Data science teams managing multiple experiments or sharing models across projects.
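The core idea behind experiment tracking can be sketched in a few lines: record the parameters and metrics of every run so that results stay comparable and reproducible. The `RunTracker` class below is a hypothetical, standard-library illustration of that idea and is not MLflow's API; the run names and accuracy values are made-up placeholders, not real results.

```python
import json
import tempfile
from pathlib import Path


class RunTracker:
    """Hypothetical tracker that stores one JSON file per run (not MLflow's API)."""

    def __init__(self, root: Path):
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def log_run(self, name: str, params: dict, metrics: dict) -> Path:
        """Persist the parameters and metrics of a single run."""
        path = self.root / f"{name}.json"
        record = {"name": name, "params": params, "metrics": metrics}
        path.write_text(json.dumps(record, indent=2))
        return path

    def best_run(self, metric: str) -> dict:
        """Return the stored run with the highest value for `metric`."""
        runs = [json.loads(p.read_text()) for p in sorted(self.root.glob("*.json"))]
        return max(runs, key=lambda run: run["metrics"][metric])


# Placeholder values for illustration only.
tracker = RunTracker(Path(tempfile.mkdtemp()) / "runs")
tracker.log_run("lr_0.01", {"learning_rate": 0.01}, {"accuracy": 0.91})
tracker.log_run("lr_0.10", {"learning_rate": 0.10}, {"accuracy": 0.87})
best = tracker.best_run("accuracy")
print(best["name"], best["params"])
```

A dedicated tracking tool builds on this same record-and-compare loop, adding features such as a UI, artifact storage, and a model registry.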

Ray

Ray is an open-source compute platform designed for distributed AI workloads. It includes libraries such as Ray Train, Ray Serve, and RLlib, supporting everything from reinforcement learning to large-scale inference. Ray stands out for its scalability and ease of integration with Hugging Face, PyTorch, and other frameworks. It’s an excellent choice for teams running compute-intensive AI pipelines, though managing clusters can be complex at very large scales.

Best for: Teams training or serving large models that need efficient distributed compute.

Hugging Face Hub and Inference Endpoints

The Hugging Face ecosystem has grown far beyond a library into a broad platform built around open-source models and tools. The Model Hub lets developers share, version, and benchmark models, while Inference Endpoints, a managed hosting service, provide quick deployment for production use. Its active community and integrations with popular frameworks make Hugging Face a cornerstone for open LLM development and collaboration.

Best for: Developers working on NLP, generative AI, or fine-tuning open LLMs for production.

ONNX Runtime and Triton Stack

For teams focused on model interoperability and high-performance inference, the ONNX Runtime and NVIDIA Triton Inference Server combination is a powerful open-source platform. It allows models trained in different frameworks (PyTorch, TensorFlow, JAX) to run efficiently on CPUs, GPUs, or edge devices. While it’s not a full MLOps suite, it’s an essential part of production-grade AI deployment pipelines.

Best for: Companies optimizing model deployment across varied hardware environments.

Pros and Cons of Open-Source AI Platforms

Open-source AI platforms have become the backbone of modern machine learning and LLM development. They give organizations flexibility, control, and transparency that proprietary systems often lack. However, they also introduce technical and operational challenges that teams must be ready to handle.

Pros of Open-Source AI Platforms

- Cost Efficiency: Open-source platforms eliminate licensing fees and allow organizations to experiment without heavy upfront investment. This makes them ideal for startups, research institutions, and enterprises building long-term AI capabilities.

- Transparency and Trust: With access to the source code, teams can audit, debug, and verify how algorithms make decisions. This level of transparency is essential for compliance, explainability, and responsible AI adoption.

- Flexibility and Customization: Unlike closed systems, open-source platforms let developers tailor pipelines, models, and infrastructure to their exact needs. They can integrate multiple tools, modify components, and scale systems freely.

- Community and Collaboration: Large open-source communities, like those around Kubeflow, MLflow, and Hugging Face, constantly release updates, fix bugs, and share best practices. This collective innovation often moves faster than traditional vendor-driven development.

- Ecosystem Integration: Open platforms can connect easily with other frameworks and hardware, from NVIDIA GPUs to Kubernetes clusters. This interoperability makes it easier to build hybrid or multi-cloud AI infrastructure.

Cons of Open-Source AI Platforms

- Complex Setup and Maintenance: Many open-source platforms require strong DevOps or MLOps expertise to deploy and manage. Without the right engineering skills, setup can be time-consuming and error-prone.

- Limited Official Support: Unlike commercial platforms, open-source solutions don’t always provide 24/7 support or guaranteed SLAs. Most assistance comes from community forums or documentation, which can delay problem resolution.

- Scalability Challenges: Some platforms need careful optimization to scale efficiently across large datasets or GPU clusters. Poor configuration can lead to performance bottlenecks or high operational costs.

- Security and Compliance Risks: Because anyone can contribute to open-source projects, vulnerabilities can appear if code isn’t regularly reviewed and patched. Enterprises must establish internal security audits and governance frameworks to mitigate risk.

- Fragmentation of Tools: The open-source ecosystem is vast, with overlapping tools and frameworks. Choosing the right combination and keeping them compatible can be difficult without clear technical leadership.

Future of Open-Source AI

Open-source AI is entering a new phase. What began as a movement driven by academic curiosity and open collaboration is now shaping how enterprises, governments, and developers approach large-scale AI development. The next few years will be defined by three major shifts.

Community-Driven LLM Development

The success of openly released large language models like LLaMA, Falcon, and Mistral shows how open collaboration can rival closed corporate R&D. These projects suggest that leading AI models no longer have to come exclusively from closed labs. Open weights and, where available, transparent training processes allow researchers to test, improve, and adapt models faster than proprietary ecosystems.

This trend is also fueling local and “sovereign” AI initiatives where countries and enterprises want to build models aligned with their language, culture, and data privacy requirements. The result is a growing global network of regional open-source LLMs that collectively strengthen the diversity and resilience of the AI ecosystem.

Rise of Parameter-Efficient Methods for Open LLMs

The next big shift is parameter-efficient fine-tuning (PEFT). Techniques like LoRA (Low-Rank Adaptation), adapters, and prefix tuning make it possible to customize massive models without retraining billions of parameters. This lowers compute costs and makes fine-tuning accessible even to smaller organizations.
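The savings are easy to quantify. LoRA keeps the original weight matrix W frozen and trains two small factors, B and A, so the effective weight becomes W + (alpha / r) · B·A. The sketch below uses plain Python lists to show the update and the parameter count. The 4096 x 4096 layer size, the rank of 8, and the helper names are assumptions chosen for illustration, not values taken from any particular model.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product, sufficient for a tiny demonstration."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, b, a, alpha: float, rank: int):
    """Return W + (alpha / rank) * B @ A; W itself is never modified."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Assumed layer size: a 4096 x 4096 projection adapted with rank 8.
full_params = 4096 * 4096                                   # 16,777,216
lora_params = lora_trainable_params(4096, 4096, rank=8)     # 65,536
print(f"trainable fraction: {lora_params / full_params:.4%}")

# Tiny 2 x 2 example with rank 1.
w = [[1.0, 0.0], [0.0, 1.0]]   # frozen base weight
b = [[1.0], [2.0]]             # 2 x 1 factor
a = [[0.5, 0.0]]               # 1 x 2 factor
adapted = lora_effective_weight(w, b, a, alpha=2.0, rank=1)
print(adapted)
```

Under these assumptions the adapter trains 1/256 of the parameters in the layer, which is why the memory and compute needed for fine-tuning drop so sharply.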

In open-source communities, these methods have become the default way to adapt LLMs, especially when budgets are limited or data privacy prevents sending information to third-party APIs. The combination of open weights and efficient adaptation is accelerating innovation, enabling specialized models for healthcare, finance, education, and local languages.

Open-Source in Enterprise Adoption (Hybrid Solutions)

Enterprises are no longer viewing open-source AI as experimental. Instead, many are adopting hybrid strategies: combining open frameworks with proprietary infrastructure for scalability, security, and compliance.

This approach offers the best of both worlds: the innovation speed and flexibility of open-source, with the reliability and support of enterprise systems. Cloud and infrastructure providers like NVIDIA, Google, and AWS are already integrating open models and frameworks into their ecosystems, bridging the gap between open innovation and production-ready deployment.

In the next few years, enterprises are expected to rely increasingly on open-source foundations for internal LLMs, data pipelines, and sovereign AI projects, while maintaining proprietary layers for governance and performance optimization.

Open-Source AI FAQs

1. What is open-source AI?

Open-source AI refers to frameworks, models, and tools whose code and training resources are freely available for anyone to use, modify, and distribute. It promotes transparency, collaboration, and faster innovation by allowing developers worldwide to build upon shared foundations like PyTorch, TensorFlow, or open LLMs such as GreenMind, LLaMA, and Mistral.

2. Is open-source AI safe to use in production?

Yes, but it depends on how it’s implemented. Open-source tools are generally safe when regularly updated and properly secured. Enterprises should establish internal governance like reviewing licenses, validating model sources, and applying cybersecurity best practices to ensure compliance and reliability.
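One concrete way to validate a model source is to compare the checksum of a downloaded file against the digest published by its maintainers. The sketch below shows that check with Python's standard `hashlib` module. The file name, the stand-in contents, and the `verify_artifact` helper are hypothetical; in practice the expected digest would come from the publisher's release notes or model card.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large weight files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the published one."""
    return sha256_of_file(path) == expected_sha256.lower()


# Stand-in file for the demonstration; a real check would target downloaded weights.
artifact = Path(tempfile.mkdtemp()) / "model.bin"
artifact.write_bytes(b"placeholder weights")
published_digest = hashlib.sha256(b"placeholder weights").hexdigest()

print(verify_artifact(artifact, published_digest))
print(verify_artifact(artifact, "0" * 64))
```

A checksum confirms that the file is the one the publisher released. It does not replace license review or scanning for unsafe serialization formats.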

3. What are the main benefits of open-source AI for businesses?

The biggest advantages are cost savings, flexibility, and independence. Open-source tools eliminate expensive licensing fees, offer full control over data and models, and allow faster experimentation. They also attract top engineering talent, as developers prefer working with transparent and widely supported ecosystems.

4. Can open-source AI compete with proprietary models like GPT-4 or Claude?

Increasingly, yes. While proprietary models still lead in scale and performance, open-source models are catching up fast thanks to community contributions and efficient fine-tuning methods like LoRA and adapters. For many specialized or local use cases, open-source LLMs now deliver comparable results at a fraction of the cost.

5. How do companies make money with open-source AI?

Many organizations build business models around support, managed services, and cloud deployments of open-source tools. Others offer enterprise editions or APIs built on open foundations. Hugging Face, Red Hat, and GreenNode are examples of companies combining open innovation with commercial solutions.