Every successful AI system, from predictive models to generative assistants, begins long before deployment. It starts with a well-designed AI development lifecycle. Yet, many organizations still struggle to move from experimental prototypes to production-ready solutions. In fact, industry reports suggest that over 80% of AI projects fail to scale, often due to poor lifecycle management, fragmented data, or weak governance.

Understanding the AI lifecycle means understanding how ideas evolve into intelligent systems: how data is gathered, models are trained, and results are monitored and refined over time. Managing this lifecycle effectively ensures not only accuracy and performance, but also compliance, transparency, and business value.

In this guide, we’ll break down each stage of the AI development lifecycle, explore how to manage it efficiently, and share proven best practices to power your AI projects. Whether you’re a data scientist, an ML engineer, or an enterprise leader scaling AI initiatives, you’ll walk away with a clearer roadmap for building, deploying, and maintaining AI that lasts.

What is the AI development lifecycle?

The AI development lifecycle is the structured process that guides how artificial intelligence systems are built, deployed, and maintained. Unlike traditional software, AI development is deeply data-driven and iterative, involving continuous cycles of data collection, model training, validation, deployment, and monitoring. Each stage plays a critical role in transforming raw data into actionable intelligence.

Managing the AI lifecycle effectively helps organizations ensure scalability, accuracy, and reliability while maintaining transparency and compliance. In essence, it’s the blueprint that turns AI from a concept into a sustainable, production-ready capability.

Why does the AI development lifecycle matter?

A well-managed AI development lifecycle helps ensure that every stage of AI development, from data preparation to model deployment and monitoring, aligns with business goals and ethical standards. It reduces risks associated with data quality issues, model drift, and compliance gaps while speeding up time to production.

In practice, following an AI lifecycle framework means:

- Improved project success rates: AI initiatives are more likely to deliver measurable ROI when they follow a disciplined process.

- Operational efficiency: Teams can reuse pipelines, tools, and workflows instead of reinventing them for every new model.

- Scalability: Consistent lifecycle management allows models to be deployed across departments or even across regions with minimal friction.

- Transparency and governance: Each stage creates checkpoints for data lineage, version control, and auditability, which are critical for enterprise and regulated industries.

Key Stages of the AI Development Lifecycle

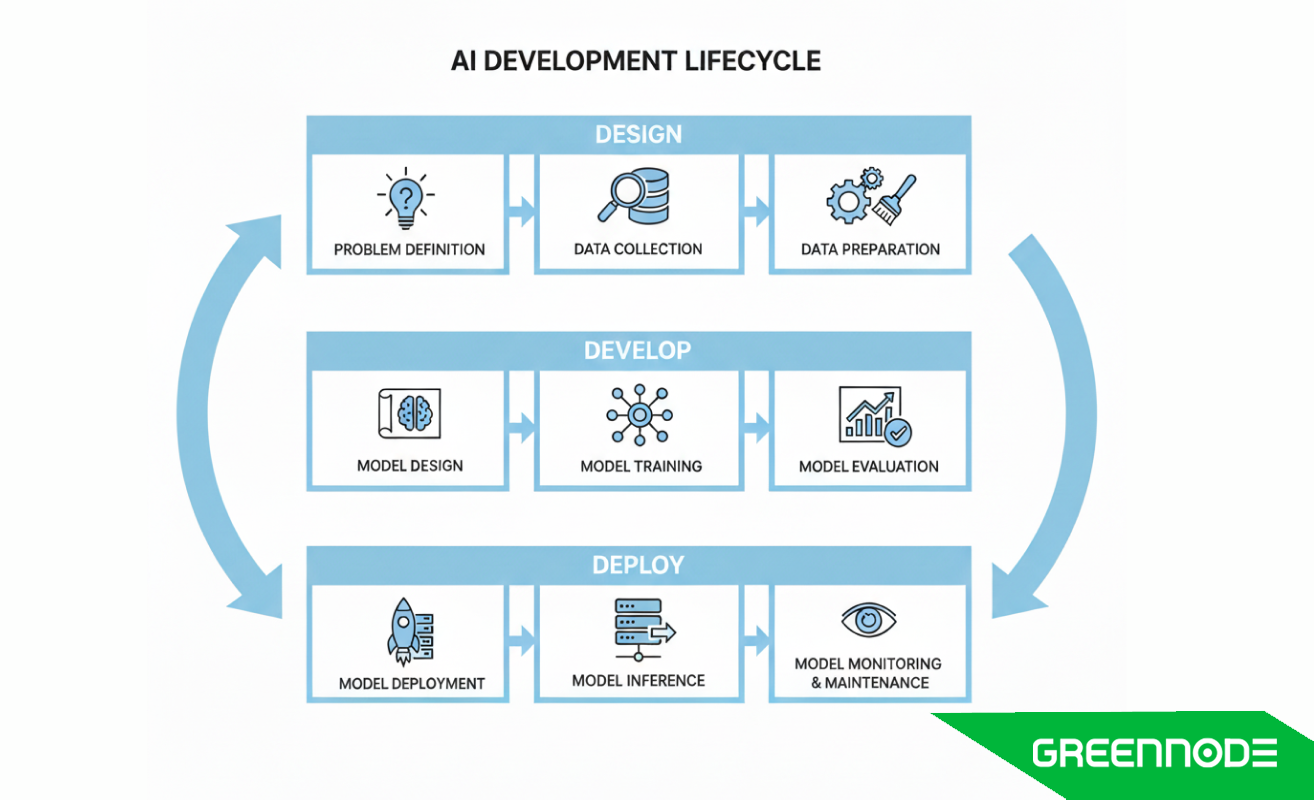

Building an AI system demands structure, testing, and iteration. The work falls into three main stages:

- Design: establishes the foundation for the project

- Develop: focuses on creating and validating the model

- Deploy: handles the release and ongoing management of the solution

The AI development lifecycle provides that structure, guiding teams through every phase of designing, training, deploying, and maintaining intelligent systems. Each stage plays a vital role in ensuring models are not only accurate but also ethical, secure, and scalable. The detailed stages of the AI development lifecycle are as follows:

1. Problem Definition

Every AI project starts with a clear understanding of why it exists. This phase defines the objectives, requirements, and scope of the AI solution. Teams identify what problem is being solved, who benefits from it, and how success will be measured.

This step includes analyzing stakeholder needs, assessing feasibility, and outlining measurable performance metrics or KPIs. Ethical and regulatory requirements are also identified early to reduce the risk of bias and ensure compliance. A well-scoped problem definition sets the direction for everything that follows, aligning business goals with technical execution.

2. Data Collection

Once the problem is defined, the next challenge is gathering relevant data. Data fuels every stage of the AI lifecycle; the model’s accuracy and reliability depend on it.

Teams identify potential data sources such as internal systems, sensors, or open datasets. They then implement data acquisition methods, like APIs or pipelines, to collect and store information securely. Throughout this process, privacy, consent, and data ownership are prioritized.

Strong data governance provides traceability, while techniques such as sampling and labeling help make the data representative and ready for the next phase.
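
One common sampling technique for keeping data representative is stratified sampling: sampling within each label group so the sample preserves the label mix of the full dataset. The stdlib-only sketch below assumes records are dicts with a label field; the function name `stratified_sample` and the toy dataset are illustrative, not part of any specific tool.

```python
import random
from collections import defaultdict


def stratified_sample(records, label_key, fraction, seed=0):
    """Draw roughly `fraction` of the records from each label group (stratum)."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    rng = random.Random(seed)  # fixed seed for a reproducible sample
    groups = defaultdict(list)
    for record in records:
        groups[record[label_key]].append(record)

    sample = []
    for label in sorted(groups):  # sorted for deterministic ordering
        members = groups[label]
        # Keep at least one record per label so rare classes are not lost.
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical imbalanced dataset: 10 "fraud" records and 90 "ok" records.
data = [{"id": i, "label": "fraud" if i < 10 else "ok"} for i in range(100)]
subset = stratified_sample(data, "label", fraction=0.2)
print(sum(r["label"] == "fraud" for r in subset), len(subset))  # 2 20
```

The 10% fraud rate of the full dataset carries over to the 20-record sample, which a plain random sample of that size would not guarantee.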

3. Data Preparation

Raw data rarely fits directly into an AI system. This phase focuses on cleaning, integrating, and transforming data into a usable format. Missing values are filled, outliers are handled, and features are engineered to help the model learn efficiently.

Inconsistent or biased data can lead to inaccurate models, so preparation may also include augmentation, which expands datasets to improve diversity and representation. Scalable data pipelines and versioning systems are typically established here to maintain reproducibility across future iterations.

The goal of this phase is simple: ensure the model learns from accurate, consistent, and relevant information.
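
Two of the cleaning steps described above, filling missing values and handling outliers, can be sketched with the standard library alone. This assumes a single numeric column where missing values are `None`; median imputation and clipping to Tukey's fences (1.5 times the interquartile range beyond the quartiles) are common choices, not the only ones, and the sample readings are hypothetical.

```python
import statistics


def clean_numeric_column(values, k=1.5):
    """Fill missing values (None) with the median, then clip outliers.

    Outliers are values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences);
    they are clipped to the nearest fence rather than dropped.
    """
    observed = [v for v in values if v is not None]
    if len(observed) < 2:
        raise ValueError("need at least two observed values")
    median = statistics.median(observed)
    filled = [median if v is None else v for v in values]

    # With n=4, quantiles() returns the three quartile cut points Q1, Q2, Q3.
    q1, _, q3 = statistics.quantiles(observed, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in filled]


raw = [10, 12, None, 11, 13, 12, 300]  # hypothetical sensor readings
print(clean_numeric_column(raw))  # [10, 12, 12.0, 11, 13, 12, 15.0]
```

In a real pipeline, the median and fences should be computed on the training split only and then reused for validation and production data, so no information leaks from evaluation data into preparation.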


4. Model Design

In this stage, the AI system begins to take shape. Engineers select the most appropriate algorithms and model architectures based on the problem type and data characteristics. Whether it’s a neural network, decision tree, or clustering model, the choice determines how the system learns and performs.

This phase also includes hyperparameter tuning, ensemble modeling, and applying techniques like transfer learning to boost efficiency. Models are designed not just for accuracy, but also for interpretability, transparency, and resilience against adversarial risks. A well-designed model sets the stage for reliable training and evaluation.
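
Hyperparameter tuning is often done by evaluating a grid of candidate settings and keeping the best-scoring one. The sketch below shows exhaustive grid search with `itertools.product`; `validation_score` is a hypothetical toy objective standing in for "train a model with these settings and score it on held-out data", and the hyperparameter names are illustrative.

```python
import itertools


def grid_search(param_grid, score_fn):
    """Evaluate every combination in param_grid; return the best params and score.

    param_grid maps each hyperparameter name to a list of candidate values;
    score_fn takes a dict of hyperparameters and returns a score (higher is better).
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, combo))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def validation_score(params):
    # Hypothetical toy objective that peaks at learning_rate=0.01, max_depth=6.
    return -abs(params["learning_rate"] - 0.01) - 0.001 * abs(params["max_depth"] - 6)


grid = {"learning_rate": [0.001, 0.01, 0.1], "max_depth": [3, 6, 9]}
best, score = grid_search(grid, validation_score)
print(best)  # {'learning_rate': 0.01, 'max_depth': 6}
```

The number of combinations grows multiplicatively with each added hyperparameter, which is why random search or Bayesian optimization is often preferred for larger search spaces.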


5. Model Training

Here, the model starts learning from the prepared data. Through iterative processes, the algorithm identifies patterns and adjusts its parameters to minimize errors. Training continues until the model achieves acceptable performance levels.

During training, developers monitor metrics such as accuracy, precision, recall, and loss to track progress and detect overfitting. Techniques like regularization, dropout, and early stopping are used to balance generalization and performance.
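
Early stopping halts training once the validation loss stops improving, which limits overfitting. The sketch below tracks the best loss seen so far and signals a stop after `patience` epochs without an improvement larger than `min_delta`. The class is illustrative; frameworks such as Keras offer built-in callbacks with similarly named options, and the loss values here are hypothetical.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.epochs_without_improvement = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            # Meaningful improvement: remember it and reset the counter.
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Hypothetical validation losses: improvement stalls after epoch 3.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.51, 0.52, 0.50, 0.49]
stopper = EarlyStopping(patience=3, min_delta=0.01)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        print(f"stopped at epoch {epoch}; best epoch was {stopper.best_epoch}")
        break
```

In practice the weights from `best_epoch` are usually restored (or reloaded from a checkpoint) rather than keeping the weights from the final epoch.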

Large-scale projects may also require distributed training to handle massive datasets efficiently. Saving checkpoints during training allows recovery and continuity in case of interruptions.
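
At its simplest, checkpointing means periodically writing the training state to disk and reloading it on restart. The sketch below stores a hypothetical state dictionary (epoch and weights) as JSON, writing to a temporary file and then calling `os.replace` so an interrupted write does not leave a half-written checkpoint in place. Real frameworks have their own serialization (for example, PyTorch's `torch.save`), and model weights are normally stored in binary formats rather than JSON.

```python
import json
import os
import tempfile


def save_checkpoint(state, path):
    """Write a JSON-serializable training state to `path` via a temp file + replace."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file in the same directory, then swap it into place.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp_path, path)
    finally:
        # After a successful replace the temporary file no longer exists.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)


def load_checkpoint(path, default=None):
    """Return the saved state, or `default` if no checkpoint exists yet."""
    if not os.path.exists(path):
        return default
    with open(path) as f:
        return json.load(f)


# Hypothetical training loop that resumes from the last saved epoch.
ckpt_path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
state = load_checkpoint(ckpt_path, default={"epoch": 0, "weights": [0.0, 0.0]})
for epoch in range(state["epoch"], 3):
    state["weights"] = [w + 0.1 for w in state["weights"]]  # stand-in for a training step
    state["epoch"] = epoch + 1
    save_checkpoint(state, ckpt_path)  # a restart would resume from state["epoch"]
```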

6. Model Evaluation

After training, the model’s true effectiveness is tested. This phase assesses how well it performs on unseen data, using metrics such as F1 score or ROC-AUC for classification tasks and mean squared error for regression tasks.
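
For a binary classifier, precision, recall, and F1 follow directly from counts of true positives, false positives, and false negatives; for regression, mean squared error is the average squared gap between predictions and targets. The stdlib sketch below computes them by hand only to show the definitions; in practice, libraries such as scikit-learn provide `f1_score`, `mean_squared_error`, and `roc_auc_score`. The labels and values are hypothetical.

```python
def binary_classification_metrics(y_true, y_pred):
    """Precision, recall, and F1 for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def mse(y_true, y_pred):
    """Mean squared error between regression targets and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


print(binary_classification_metrics([1, 0, 1, 1, 0, 1], [1, 0, 0, 1, 1, 1]))
# {'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
print(mse([3.0, 5.0, 2.0], [2.5, 5.0, 3.0]))
```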

Beyond accuracy, evaluation also checks for bias, fairness, and robustness. Cross-validation tests the model’s stability across different data splits, while error analysis identifies weaknesses that may require retraining.
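
K-fold cross-validation splits the data into k folds, trains on k - 1 of them, and evaluates on the held-out fold, rotating until every fold has served once as the validation set. The spread of the per-fold scores is what indicates stability. In the sketch below, the data and the baseline "model" (predicting the training-set mean) are hypothetical stand-ins for real training code; scikit-learn's `KFold` and `StratifiedKFold` provide production-ready splitters.

```python
import random
import statistics


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for shuffled k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread any remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def cross_validate(n_samples, train_and_score, k=5):
    """Return the mean and standard deviation of per-fold scores."""
    scores = [train_and_score(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical targets and a baseline "model" that predicts the training-set mean.
targets = [2.0, 3.5, 1.0, 4.0, 2.5, 3.0, 5.0, 1.5, 2.0, 3.5]


def train_and_score(train_idx, val_idx):
    prediction = sum(targets[i] for i in train_idx) / len(train_idx)
    return sum((targets[i] - prediction) ** 2 for i in val_idx) / len(val_idx)  # MSE


mean_mse, std_mse = cross_validate(len(targets), train_and_score, k=5)
print(f"MSE {mean_mse:.3f} +/- {std_mse:.3f} across folds")
```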

If the model doesn’t meet performance or ethical criteria, it cycles back for refinement. This feedback loop is essential for improving trust and reliability before deployment.

7. Model Deployment

Once validated, the model moves into production. Deployment transforms a trained model into a usable system that interacts with live data, whether through APIs, cloud environments, or embedded applications.

Key considerations here include scalability, latency, and integration with existing infrastructure. Models must perform reliably under real-world workloads, and deployment pipelines should include safeguards like versioning, rollback mechanisms, and performance testing.
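
Versioning and rollback are easier to reason about with a concrete structure. The sketch below is a minimal in-memory model registry with hypothetical class and method names: it records registered versions, tracks which one is live, and can revert to the previous deployment. Production systems typically persist this state in a dedicated model registry or deployment tool rather than in process memory.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """Tracks model versions and deployment history (in-memory sketch)."""

    versions: dict = field(default_factory=dict)  # version -> artifact location
    history: list = field(default_factory=list)   # deployment order, newest last

    def register(self, version, artifact):
        if version in self.versions:
            raise ValueError(f"version {version!r} already registered")
        self.versions[version] = artifact

    def deploy(self, version):
        if version not in self.versions:
            raise KeyError(f"unknown version {version!r}")
        self.history.append(version)

    @property
    def live(self):
        """The version currently serving traffic, or None."""
        return self.history[-1] if self.history else None

    def rollback(self):
        """Revert to the previously deployed version and return it."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier deployment to roll back to")
        self.history.pop()
        return self.live


registry = ModelRegistry()
registry.register("v1", "models/churn/v1.bin")  # hypothetical artifact paths
registry.register("v2", "models/churn/v2.bin")
registry.deploy("v1")
registry.deploy("v2")
# Suppose v2 fails its post-deployment performance checks:
print(registry.rollback())  # v1
```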

Comprehensive documentation and knowledge transfer are critical to ensure that operations teams can maintain the model post-launch.

8. Model Monitoring & Maintenance

Once in production, models must be monitored continuously to ensure consistent performance as data and environments change.

Monitoring includes tracking prediction accuracy, detecting data drift, and identifying anomalies that could affect outcomes. Feedback loops are essential for collecting new data and retraining models when needed.
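
One widely used way to quantify data drift for a single feature is the Population Stability Index (PSI). It bins a reference sample (for example, training data) and a production sample, then sums (a - e) * ln(a / e) over the bins, where e and a are the fractions of reference and production values in each bin. The sketch below uses equal-width bins and synthetic Gaussian data; both are assumptions for illustration.

```python
import math
import random


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample (`expected`) and a production sample (`actual`).

    Uses equal-width bins spanning the reference range; production values
    outside that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: a training reference vs. two production windows.
rng = random.Random(42)
reference = [rng.gauss(0, 1) for _ in range(5000)]
stable = [rng.gauss(0, 1) for _ in range(5000)]
shifted = [rng.gauss(0.5, 1) for _ in range(5000)]
print(f"stable:  {population_stability_index(reference, stable):.3f}")
print(f"shifted: {population_stability_index(reference, shifted):.3f}")
```

A commonly cited rule of thumb reads PSI below 0.1 as little change and above 0.25 as a significant shift. Treat those values as starting points to tune per feature, not as fixed standards. Quantile-based bins are a frequent alternative to the equal-width bins used here.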

Regular audits verify compliance, while security monitoring protects against potential threats or misuse. This stage closes the loop of the AI lifecycle, feeding insights back into data collection and design for ongoing improvement.

Managing the AI Lifecycle: Best Practices for Your AI Projects

Managing the AI development lifecycle effectively is what separates experimental projects from scalable, production-grade systems. Without structure, even high-performing models can fail due to poor data quality, model drift, or lack of visibility. Successful organizations approach AI not as isolated models, but as living systems that require continuous management, governance, and iteration.

Here are the key best practices that ensure a reliable and sustainable AI lifecycle:

Align AI Strategy with Business Goals

Every lifecycle begins with purpose. Before building or deploying models, teams must connect technical outcomes to strategic objectives such as improving efficiency, reducing costs, or enhancing customer experience.

This alignment ensures that every phase, from data collection to model monitoring, contributes to measurable business impact rather than remaining a technical experiment.

Establish Clear Data Governance

Data governance is the foundation of lifecycle reliability. Strong policies for data quality, ownership, and security prevent problems with model performance and compliance later in the lifecycle.

A governance framework should include standards for data access, versioning, retention, and documentation. Regular audits and traceability practices help maintain trust and transparency, especially in regulated industries.

Adopt a Modular and Scalable Infrastructure

Scalability is essential for modern AI systems. A modular infrastructure, where data pipelines, training environments, and monitoring systems are designed independently but work cohesively, allows teams to grow efficiently.

Automated workflows and containerized environments support consistent performance across development, testing, and production. This modular approach also enables teams to reuse components, reducing development time and cost.
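
One way to picture the modular approach in code is a pipeline of small, independent stages that share a common interface, so each stage can be tested, replaced, or reused on its own. The stage names and logic below are hypothetical. Real systems usually run stages as separate jobs or containers managed by an orchestrator rather than as in-process functions, but the contract between stages works the same way.

```python
from typing import Callable, List, Tuple

# Every stage has the same interface: it receives a context dict and returns a new one.
Stage = Callable[[dict], dict]


class Pipeline:
    """Runs named, independent stages in order."""

    def __init__(self, stages: List[Tuple[str, Stage]]):
        self.stages = stages

    def run(self, context: dict) -> Tuple[dict, List[str]]:
        completed = []
        for name, stage in self.stages:
            context = stage(context)
            completed.append(name)  # simple record of which stages ran
        return context, completed


# Hypothetical stages; each could be developed, tested, and swapped independently.
def ingest(ctx: dict) -> dict:
    return {**ctx, "rows": [3.0, None, 5.0, 4.0]}  # stand-in for reading a data source


def prepare(ctx: dict) -> dict:
    return {**ctx, "rows": [r for r in ctx["rows"] if r is not None]}


def train(ctx: dict) -> dict:
    # Stand-in "model": the mean of the prepared values.
    return {**ctx, "model": sum(ctx["rows"]) / len(ctx["rows"])}


pipeline = Pipeline([("ingest", ingest), ("prepare", prepare), ("train", train)])
result, steps = pipeline.run({})
print(result["model"], steps)  # 4.0 ['ingest', 'prepare', 'train']
```

Because each stage only depends on the shared context contract, replacing `prepare` with a different cleaning strategy requires no change to `ingest` or `train`.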

Monitor Continuously and Manage Model Drift

AI systems require ongoing monitoring to ensure accuracy and fairness over time. As data changes, models can degrade, a common challenge known as model drift.

To manage this, organizations should implement continuous tracking of performance metrics, retraining schedules, and alert mechanisms for anomalies. A strong monitoring strategy keeps models aligned with evolving real-world conditions, ensuring long-term reliability.
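
Continuous tracking with an alert mechanism can start as simply as a rolling window over recent labeled predictions. The sketch below assumes ground-truth labels eventually arrive for production predictions (not always the case in practice) and flags when rolling accuracy drops below a threshold. The class name, window size, threshold, and simulated stream are all illustrative assumptions.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the last `window` labeled predictions."""

    def __init__(self, window=100, threshold=0.9, min_samples=20):
        self.outcomes = deque(maxlen=window)  # True = correct prediction
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, prediction, label):
        self.outcomes.append(prediction == label)

    @property
    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def should_alert(self):
        # Avoid noisy alerts until the window holds enough observations.
        if len(self.outcomes) < self.min_samples:
            return False
        return self.accuracy < self.threshold


monitor = RollingAccuracyMonitor(window=50, threshold=0.9, min_samples=20)
# Hypothetical stream: the model is right 95% of the time, then degrades to 70%.
for i in range(100):
    correct = (i % 20 != 0) if i < 50 else (i % 10 < 7)
    monitor.record(1 if correct else 0, 1)
    if monitor.should_alert():
        print(f"accuracy {monitor.accuracy:.2f} at event {i}: trigger review or retraining")
        break
# accuracy 0.88 at event 67: trigger review or retraining
```

The alert here would typically feed the retraining schedule or an on-call process rather than retrain automatically on every dip.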

Maintain Traceability and Version Control

Version control is critical for both data and models. By maintaining detailed logs of datasets, algorithms, parameters, and configurations, teams can reproduce results and roll back to previous states if issues arise.
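
A lightweight way to make a training run traceable is to record a content hash of the exact dataset alongside the code version, parameters, and metrics. The sketch below writes such a manifest with `hashlib` and `json`. The field names, file names, and placeholder commit id are hypothetical; dedicated tools (for example, DVC for data versioning or MLflow for experiment tracking) cover this more completely.

```python
import hashlib
import json
import os
import tempfile


def file_sha256(path, chunk_size=1 << 20):
    """SHA-256 of a file's contents, read in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_run_manifest(path, dataset_path, params, metrics, code_version):
    """Record what a training run used so its results can be reproduced or audited."""
    manifest = {
        "dataset": {"path": dataset_path, "sha256": file_sha256(dataset_path)},
        "code_version": code_version,  # e.g. a git commit hash
        "params": params,
        "metrics": metrics,
    }
    with open(path, "w") as f:
        json.dump(manifest, f, indent=2, sort_keys=True)
    return manifest


# Hypothetical run: a tiny dataset file and placeholder parameters/metrics.
workdir = tempfile.mkdtemp()
data_path = os.path.join(workdir, "train.csv")
with open(data_path, "wb") as f:
    f.write(b"x,y\n1,2\n3,4\n")
manifest = write_run_manifest(
    os.path.join(workdir, "run_manifest.json"),
    data_path,
    params={"learning_rate": 0.01, "max_depth": 6},
    metrics={"f1": 0.75},
    code_version="abc1234",  # placeholder, not a real commit
)
print(manifest["dataset"]["sha256"][:12])
```

If the hash recorded for a past run no longer matches the current file, the data has changed since that run, which is exactly what a team needs to know before trying to reproduce or audit it.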

This transparency not only supports accountability but also simplifies collaboration and regulatory compliance, ensuring every decision made throughout the lifecycle can be traced.

Build Feedback Loops for Continuous Improvement

AI development is never truly finished. Continuous feedback loops, in which monitoring insights feed back into data collection and model retraining, create self-improving systems.

This iterative approach helps maintain accuracy, adapt to changing environments, and unlock new business opportunities as data evolves.

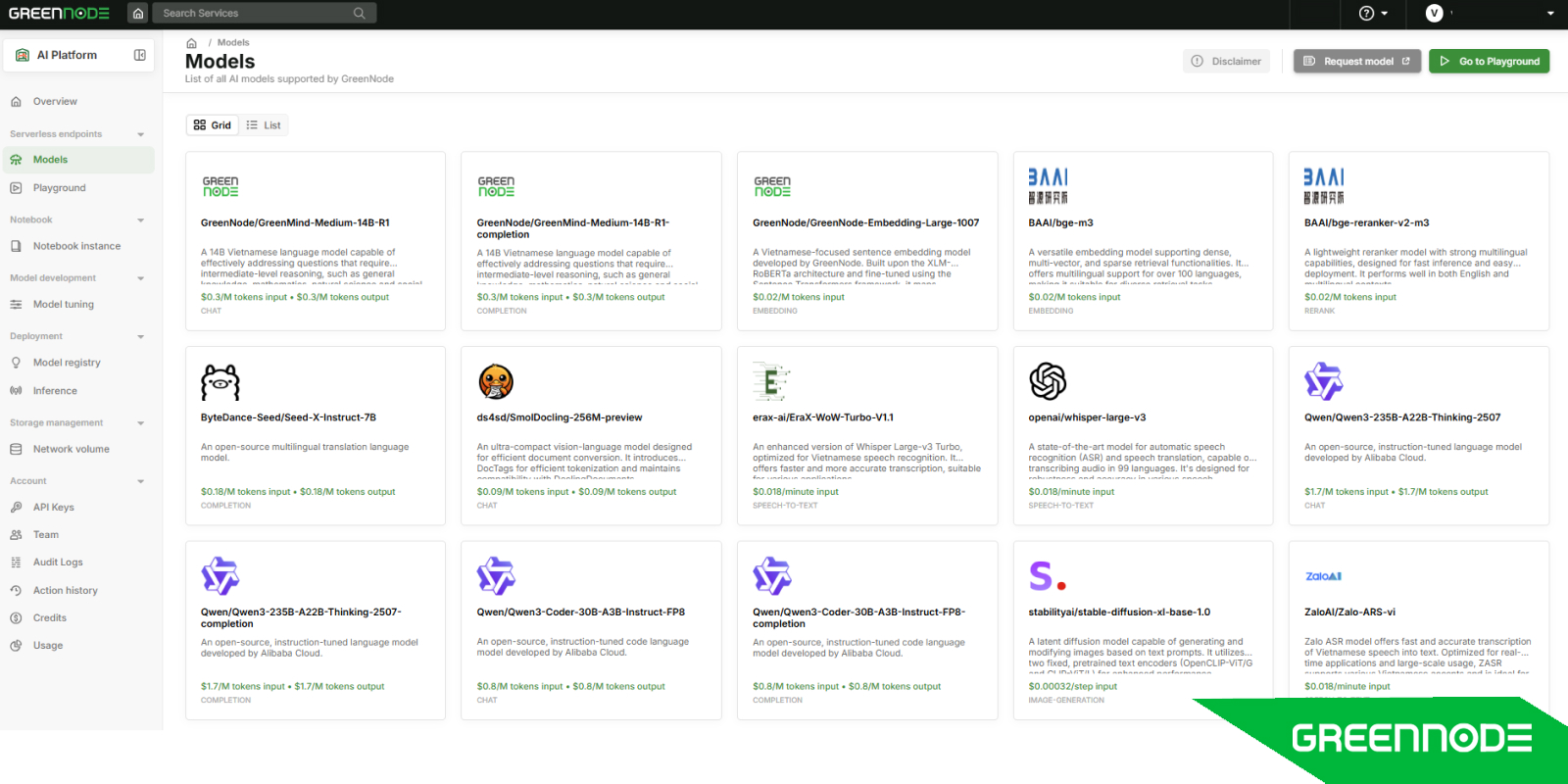

Power Your AI Projects with GreenNode AI Platform

Managing the full AI development lifecycle, from data preparation to deployment and monitoring, often requires multiple tools, complex configurations, and specialized infrastructure. The GreenNode AI Platform simplifies this complexity by providing a unified environment that supports every stage of AI development, helping teams build, train, and scale AI models faster and more reliably.

A Platform Designed for the Complete AI Lifecycle

GreenNode AI Platform brings together everything you need to move from experimentation to production (compute power, model management, orchestration, and monitoring) all in one place.

Accelerate Data-to-Model Pipelines: Seamlessly ingest, prepare, and manage datasets across teams with built-in version control and secure storage. The platform provides an environment optimized for large-scale data operations, enabling faster iteration and cleaner data for model training.

Train Smarter, Not Harder: With access to GPU-powered compute clusters, data scientists can train complex models, from classical machine learning models to large language models, with ease. Distributed training support and resource auto-scaling ensure efficiency, regardless of workload size.

Streamlined Deployment: Once a model is validated, deployment is just a few clicks away. GreenNode enables one-click model serving and API generation, integrating seamlessly with production systems while maintaining versioning and rollback capabilities.

Continuous Monitoring & Optimization: AI performance doesn’t stop at deployment. GreenNode’s integrated monitoring tools help detect model drift, measure performance, and trigger retraining pipelines automatically, ensuring your models stay accurate, compliant, and aligned with real-world data.

Empowering Teams Through Simplicity and Scale

Where traditional AI development environments demand extensive setup, GreenNode offers a self-service platform that accelerates experimentation while maintaining governance and visibility. It’s designed for teams that want to focus on innovation, not just infrastructure. Our AI platform provides:

- Unified access for developers, data scientists, and MLOps teams.

- Consistent environments across training and production.

- Flexible compute scaling to meet growing AI demands.

- Built-in compliance and security for enterprise-grade reliability.

The Bridge Between Experimentation and Production

In modern AI workflows, moving from proof of concept to production is where most projects stall. GreenNode AI Platform bridges this gap by offering the tools, infrastructure, and automation needed to operationalize the AI development lifecycle.

Ready to Power Your AI Lifecycle? Transform your AI ideas into production-ready systems with GreenNode AI Platform, a foundation built for scalability, speed, and simplicity.

FAQs about AI Development Lifecycle

1. What is the AI development lifecycle?

The AI development lifecycle is the end-to-end process of designing, building, deploying, and maintaining artificial intelligence systems. It includes several stages, such as problem definition, data collection, model training, evaluation, deployment, and monitoring, which work together to keep AI systems accurate, scalable, and aligned with business goals.

2. Why is managing the AI lifecycle important?

Effective AI lifecycle management helps teams maintain consistency, compliance, and performance across every stage of development. Without a structured lifecycle, projects often face issues like model drift, poor data quality, and scalability challenges. A managed lifecycle ensures AI systems deliver reliable results and continue improving through monitoring and retraining.

3. What are the common challenges in the AI development lifecycle?

The most common challenges include:

- Data quality issues and lack of labeled data.

- Model drift caused by changing real-world data.

- Integration difficulties with existing systems.

- Limited visibility and governance, especially in large organizations.

- Scalability and cost constraints in production environments.

Overcoming these requires disciplined lifecycle management, clear governance frameworks, and the right infrastructure support.