Artificial intelligence has advanced from experimental research to enterprise-critical systems in only a few years. At the center of this transformation lies AI model training, the process through which algorithms learn patterns from data and evolve into models capable of generating predictions, insights, or language. Without effective training, even the most sophisticated architectures fail to deliver business value.

Today’s models, from computer vision classifiers to large language models (LLMs) with hundreds of billions of parameters, demand specialized techniques, scalable infrastructure, and rigorous evaluation methods. Organizations that understand how to train models efficiently not only shorten development cycles but also reduce costs and improve performance.

In this article, we explore the foundations, techniques, and best practices that drive successful AI model training.

What is AI Model Training?

AI model training is the process of teaching an algorithm to recognize patterns and make predictions by learning from data. During training, the model is exposed to large datasets and adjusts its internal parameters, often millions or billions of weights, to minimize errors through optimization methods such as gradient descent. The goal is to generalize from training data so the model can perform accurately on unseen inputs.
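To make "adjusting parameters to minimize errors" concrete, here is a minimal sketch of plain batch gradient descent. It fits a one-variable linear model y ≈ w·x + b by repeatedly stepping against the gradient of the mean squared error. The toy data, learning rate, and step count are illustrative assumptions. Real models apply the same idea to millions or billions of weights, usually with mini-batches and an adaptive optimizer.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the linear model y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit w and b by repeatedly stepping against the MSE gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of the MSE with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Move each parameter a small step "downhill".
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy labeled data generated from y = 2x + 1 (no noise).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = gradient_descent(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss={mse(w, b, xs, ys):.6f}")
```

The learning rate matters here. If it is too large, the updates overshoot and diverge. If it is too small, training needs many more steps to reach the same loss.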

Why AI Model Training Matters for Model Performance

The quality of training directly determines how well an AI system performs in real-world scenarios. Poorly trained models may overfit (perform well on training data but fail on new data) or underfit (fail to capture the complexity of patterns). High-performing models require not only vast amounts of labeled or unlabeled data but also the right combination of architecture, compute resources, and training strategies.

According to Gartner, enterprises that adopt advanced training practices can achieve up to 30% higher accuracy in production AI systems compared to those relying on basic methods.

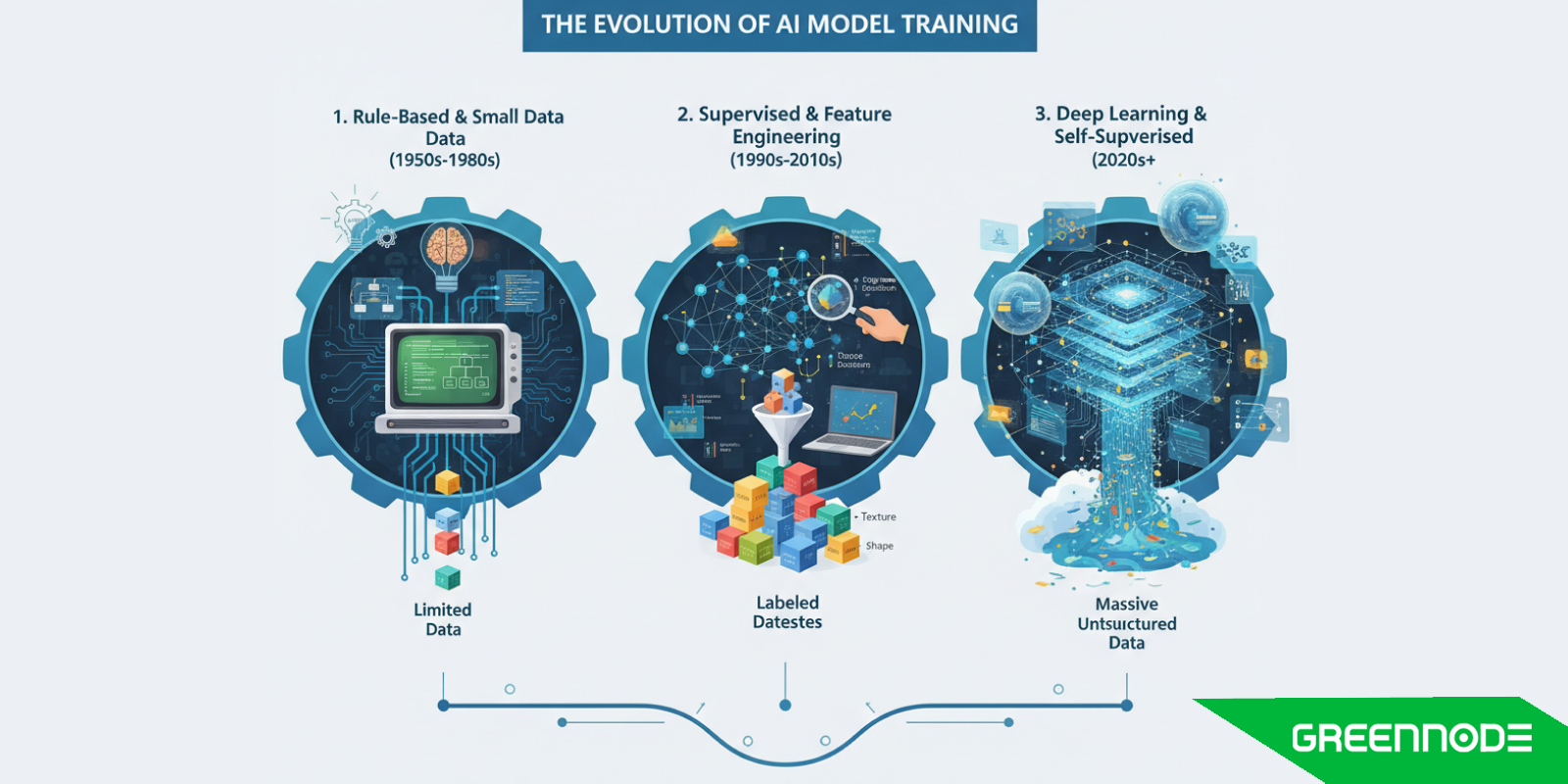

The Evolution of AI Model Training

AI model training has evolved significantly over the past two decades. Early systems relied on small datasets and relatively simple machine learning algorithms, often trained on CPUs.

The rise of deep learning in the 2010s, fueled by breakthroughs in GPU acceleration, enabled training of convolutional and recurrent neural networks at scale. Today, training has expanded to transformer-based architectures and LLMs with hundreds of billions of parameters, requiring distributed GPU clusters, cloud platforms, and sophisticated techniques like transfer learning, parameter-efficient fine-tuning (PEFT), and reinforcement learning from human feedback (RLHF).

This evolution reflects the growing importance of scalable infrastructure and advanced optimization methods in delivering state-of-the-art AI.

Foundations of AI Model Training

What Are the Types of AI Model Training?

Supervised Learning

Supervised learning is the most widely used form of training. The model is provided with labeled datasets where each input has a corresponding output. Through repeated exposure, the model learns to map inputs to outputs, minimizing errors along the way.

Applications include fraud detection, medical image classification, and natural language translation.

Unsupervised Learning

In unsupervised learning, models work with unlabeled data. Instead of being told the correct output, the model identifies patterns, clusters, or relationships within the dataset. Techniques such as clustering and dimensionality reduction are common, with applications in market segmentation, anomaly detection, and recommendation systems.

Unsupervised learning is especially valuable when labeling data is expensive or impractical.
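Clustering is easiest to see with k-means (Lloyd's algorithm), used here as one representative unsupervised technique. No labels are given. The algorithm alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. This is a sketch: the naive initialization (the first k points) and the toy 2-D points are assumptions for illustration. Practical implementations use smarter seeding such as k-means++.

```python
import math


def kmeans(points, k, iterations=20):
    """Lloyd's k-means: alternate assigning points and recomputing centroids."""
    # Naive initialisation: use the first k points as starting centroids.
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Unlabeled toy data: two loose groups of 2-D points.
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (0.5, 1.2), (9.0, 8.2)]
labels, centroids = kmeans(points, k=2)
print(labels, centroids)
```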

Semi-Supervised Learning

Semi-supervised learning blends the strengths of supervised and unsupervised methods. Models are trained on a small amount of labeled data along with a larger pool of unlabeled data. This approach reduces annotation costs while still leveraging structured information.

It has gained momentum in fields like speech recognition and healthcare, where obtaining labeled datasets can be costly or time-consuming.
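Self-training (pseudo-labeling) is one common semi-supervised approach, chosen here for illustration. A model trained on the small labeled set predicts labels for the unlabeled pool. Only its confident predictions are adopted as new labels, and then the model is refit. The sketch below uses a deliberately simple 1-D nearest-centroid classifier. The `min_margin` confidence rule and the toy data are assumptions, not a prescribed method.

```python
def fit_centroids(xs, ys):
    """Nearest-centroid classifier: one mean feature value per class."""
    centroids = {}
    for label in set(ys):
        vals = [x for x, y in zip(xs, ys) if y == label]
        centroids[label] = sum(vals) / len(vals)
    return centroids


def predict_with_margin(centroids, x):
    """Return (label, margin): margin = runner-up distance minus nearest distance."""
    ranked = sorted(centroids, key=lambda lab: abs(x - centroids[lab]))
    best, second = ranked[0], ranked[1]
    return best, abs(x - centroids[second]) - abs(x - centroids[best])


def self_train(labeled_x, labeled_y, unlabeled_x, min_margin=2.0, rounds=3):
    """Pseudo-labeling: adopt confident predictions as labels, then refit."""
    xs, ys = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(rounds):
        centroids = fit_centroids(xs, ys)
        confident, remaining = [], []
        for x in pool:
            label, margin = predict_with_margin(centroids, x)
            (confident if margin >= min_margin else remaining).append((x, label))
        if not confident:
            break  # nothing left that the model is sure about
        for x, label in confident:
            xs.append(x)
            ys.append(label)
        pool = [x for x, _ in remaining]
    return fit_centroids(xs, ys), pool


# Two labeled examples plus a larger unlabeled pool (toy values).
centroids, still_unlabeled = self_train([1.0, 9.0], ["a", "b"], [0.5, 1.5, 2.0, 8.0, 9.5, 5.0])
print(centroids, still_unlabeled)  # the ambiguous 5.0 stays unlabeled
```

The confidence threshold guards against a known risk of self-training: wrong pseudo-labels can reinforce themselves in later rounds.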

Reinforcement Learning

Reinforcement learning (RL) is based on the concept of agents interacting with environments to maximize cumulative rewards. Instead of explicit labels, the model learns through trial and error, guided by reward signals. RL has enabled breakthroughs in robotics, autonomous systems, and strategy games.
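Trial-and-error learning from rewards can be illustrated with tabular Q-learning, one classic RL algorithm chosen here for simplicity. In the sketch below, the environment is an assumed five-state corridor where only reaching the right end pays a reward. The agent learns action values from experience, balancing exploration and exploitation with an epsilon-greedy rule. All hyperparameters are illustrative assumptions.

```python
import random


def q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a corridor: the agent starts in state 0 and earns
    reward 1 for reaching the last state. Actions: 0 = left, 1 = right."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore at random sometimes (or on ties), else exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Target: immediate reward plus discounted best value of the next state.
            future = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


q = q_learning()
policy = ["right" if right > left else "left" for left, right in q[:-1]]
print(policy)  # the learned greedy policy should move right in every state
```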

In AI research, reinforcement learning from human feedback (RLHF) has been instrumental in improving the alignment and performance of large language models.

Key Components in AI Model Training

Training an AI model is not just about feeding data into an algorithm. It requires a combination of high-quality data, carefully designed architectures, optimized training techniques, and powerful infrastructure. Each component plays a decisive role in whether a model performs well in real-world applications.

Data Quality and Availability

Data is the foundation of every AI system. Without diverse and representative datasets, even the most advanced models will fail to generalize. Preprocessing tasks such as normalization, deduplication, and handling missing values directly impact performance. Data augmentation techniques like rotation in images or back-translation in text expand datasets and improve robustness. Landmark datasets like ImageNet for vision and Common Crawl for language models illustrate how data drives progress in AI training.
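The preprocessing tasks named above (deduplication, handling missing values, normalization) can be sketched on a small table of numeric rows. Here missing values are assumed to be encoded as `None`, imputed with the column mean, and every column is min-max scaled to [0, 1]. These are common defaults rather than the only valid choices.

```python
def preprocess(rows):
    """Deduplicate rows, fill missing values (None) with the column mean,
    then min-max normalise every column to [0, 1]."""
    # 1. Deduplicate while preserving the original order.
    seen, unique = set(), []
    for row in rows:
        key = tuple(row)
        if key not in seen:
            seen.add(key)
            unique.append(list(row))

    for c in range(len(unique[0])):
        observed = [r[c] for r in unique if r[c] is not None]
        mean = sum(observed) / len(observed)
        # 2. Impute missing entries with the column mean.
        for r in unique:
            if r[c] is None:
                r[c] = mean
        # 3. Min-max normalisation (a constant column maps to 0.0).
        lo = min(r[c] for r in unique)
        hi = max(r[c] for r in unique)
        span = hi - lo
        for r in unique:
            r[c] = (r[c] - lo) / span if span else 0.0
    return unique


raw = [[1.0, 10.0], [2.0, None], [1.0, 10.0], [3.0, 30.0]]  # toy rows; one duplicate
print(preprocess(raw))  # [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]]
```

In a real pipeline, the means, minimums, and maximums should be computed on the training split only and then reused for validation and test data. Otherwise, information from the evaluation sets leaks into training.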

Model Architecture

The architecture defines how a model processes information and learns patterns. Earlier systems relied on convolutional neural networks (CNNs) for vision tasks or recurrent neural networks (RNNs) for sequential data.

Today, transformers dominate language modeling and are increasingly used in vision, thanks to their scalability and ability to capture long-range dependencies. Models such as GPT, BERT, and LLaMA are built on transformer architectures, and the largest models in this family reach hundreds of billions of parameters. The choice of architecture directly affects compute requirements, scalability, and accuracy.
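The mechanism behind that long-range ability is attention. Every position computes a weighted mix of every other position, so the first and last tokens of a sequence interact in a single step. The sketch below implements single-head scaled dot-product attention, softmax(QKᵀ/√d)V, with NumPy. It omits the learned projections, multiple heads, and masking that real transformer layers add, and the toy sizes are arbitrary.

```python
import numpy as np


def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for a single head, without masking.

    q, k: (seq_len, d_k); v: (seq_len, d_v)
    """
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)                  # similarity of every position pair
    scores -= scores.max(axis=-1, keepdims=True)     # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # each row is a probability distribution
    return weights @ v, weights


rng = np.random.default_rng(0)
x = rng.normal(size=(6, 4))  # 6 tokens with 4-dimensional embeddings (toy sizes)
out, attn = scaled_dot_product_attention(x, x, x)
print(out.shape, attn.shape)  # (6, 4) (6, 6)
```

The attention matrix is seq_len × seq_len. This is also why compute and memory grow quadratically with sequence length.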

Optimization Algorithms

At the heart of training lies optimization, adjusting billions of parameters to minimize error. Traditional stochastic gradient descent (SGD) remains a staple, but modern AI workloads often rely on adaptive methods such as Adam, AdamW, or LAMB for large-scale models.
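To show what separates an adaptive method from plain SGD, here is a minimal sketch of the AdamW update rule. It keeps per-parameter moving averages of the gradient and squared gradient, corrects their startup bias, and applies weight decay directly to the weights ("decoupled") rather than folding it into the gradient. The class is a hypothetical teaching version over plain Python lists, not any framework's API, and the default hyperparameters are common illustrative values.

```python
import math


class AdamW:
    """Minimal AdamW: Adam moment estimates plus decoupled weight decay."""

    def __init__(self, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=0.01):
        self.lr, self.eps, self.wd = lr, eps, weight_decay
        self.b1, self.b2 = betas
        self.m = self.v = None
        self.t = 0

    def step(self, params, grads):
        """Return updated parameters given their current gradients."""
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            # Exponential moving averages of the gradient and squared gradient.
            self.m[i] = self.b1 * self.m[i] + (1 - self.b1) * g
            self.v[i] = self.b2 * self.v[i] + (1 - self.b2) * g * g
            # Bias correction for the zero-initialised averages.
            m_hat = self.m[i] / (1 - self.b1 ** self.t)
            v_hat = self.v[i] / (1 - self.b2 ** self.t)
            # Decoupled weight decay: shrink the weight itself, not the gradient.
            p = p - self.lr * self.wd * p
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


# Usage: minimise f(x) = (x - 3)^2 starting from x = 0.
opt = AdamW(lr=0.1, weight_decay=0.0)
x = [0.0]
for _ in range(1000):
    x = opt.step(x, [2 * (x[0] - 3.0)])  # gradient of (x - 3)^2
print(round(x[0], 3))  # should end up close to 3.0
```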

Learning rate schedules like cosine annealing or warm restarts further refine convergence. For LLMs, optimization strategies can determine whether training takes days or weeks, making this component a critical factor in efficiency and cost.
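A common way to combine a schedule with large-model training is a short linear warmup followed by cosine annealing. The sketch below returns the learning rate for a given step. The peak rate, warmup length, and floor are illustrative assumptions. Warm restarts (SGDR) would periodically reset `progress` to zero, which this version does not do.

```python
import math


def lr_at(step, total_steps, peak_lr=3e-4, warmup_steps=100, min_lr=0.0):
    """Linear warmup to peak_lr, then cosine annealing down to min_lr."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = min((step - warmup_steps) / max(1, total_steps - warmup_steps), 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1 + math.cos(math.pi * progress))


for s in (0, 50, 99, 100, 550, 1000):
    print(s, f"{lr_at(s, total_steps=1000):.2e}")
```

Warmup avoids large, unstable updates while the optimizer's statistics are still noisy. The cosine tail lets the model settle into a minimum with progressively smaller steps.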

Hyperparameters

Hyperparameters are the knobs that control the training process. Batch size, learning rate, number of epochs, and regularization methods such as dropout or weight decay all influence convergence speed and model accuracy. Poorly tuned hyperparameters can lead to underfitting, overfitting, or wasted compute resources.

Evaluation Metrics

Evaluation ensures that models don’t just memorize data but actually perform well on unseen inputs. Metrics vary by task: accuracy, precision, recall, and F1-score for classification; BLEU or ROUGE for natural language processing; and MAP or NDCG for recommendation systems.
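Classification metrics are widely familiar, but ranking metrics such as NDCG are less so. The sketch below computes NDCG@k with the linear-gain formulation (relevance divided by log2(rank + 1)). Some libraries use an exponential gain (2^rel − 1) instead. It also assumes that the ideal ordering is the best reordering of the same list of judged items.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain: each gain is discounted by log2(rank + 1)."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances))


def ndcg_at_k(ranked_relevances, k):
    """DCG of the model's top-k ranking divided by the best achievable DCG."""
    ideal = dcg(sorted(ranked_relevances, reverse=True)[:k])
    return dcg(ranked_relevances[:k]) / ideal if ideal > 0 else 0.0


# Relevance of the items in the order a recommender returned them (toy values).
print(ndcg_at_k([3, 2, 1], k=3))  # 1.0: already perfectly ordered
print(ndcg_at_k([0, 0, 1], k=3))  # 0.5: the only relevant item was ranked last
```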

In the era of LLMs, new benchmarks such as MMLU (Massive Multitask Language Understanding) or VMLU are widely used to evaluate reasoning and knowledge retention. Continuous validation and monitoring are essential for detecting issues like model drift once models are deployed in production.

Infrastructure for AI Model Training

Modern AI training is as much about infrastructure as it is about algorithms. Training a model with millions or billions of parameters requires specialized hardware and distributed systems.

- GPUs: GPUs like the NVIDIA H100 and H200 are the industry standard, offering tensor cores and FP8 precision to accelerate training dramatically.

- TPUs: Google’s TPUs are tailored for large-scale tensor operations and are widely used in training transformer models like BERT and PaLM.

- Distributed Training: Scaling across multiple nodes with frameworks such as PyTorch Distributed, Horovod, or DeepSpeed allows models too large for a single machine to be trained efficiently.

- Cloud Platforms: Hyperscalers and regional cloud providers now offer elastic, on-demand access to AI training infrastructure. Hyperscalers are well-suited for large-scale training of frontier models where global availability, cutting-edge hardware, and near-infinite scalability are required. Regional providers often offer more cost-efficient options, lower-latency access, and data residency compliance, making them attractive for enterprises training domain-specific models or operating in regulated industries.

The cost of training also cannot be ignored. For example, training GPT-3 with 175 billion parameters was estimated at $4.6 million in compute resources. As a result, many organizations prefer fine-tuning pre-trained models rather than training from scratch. This hybrid approach balances cost efficiency with performance while still leveraging cutting-edge infrastructure.
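Cost figures like these usually start from a compute estimate. A widely used rule of thumb for dense transformers is that training takes about 6 × N × D floating-point operations, where N is the parameter count and D the number of training tokens. GPT-3 was trained on roughly 300 billion tokens. The sketch below turns that into GPU-hours and dollars. The sustained throughput per GPU and the hourly price are assumptions chosen for illustration, and the result changes proportionally with them. This is why published estimates for the same model can differ by millions of dollars.

```python
def training_flops(n_params, n_tokens):
    """Rule of thumb for dense transformers: ~6 FLOPs per parameter per token
    (forward plus backward pass)."""
    return 6 * n_params * n_tokens


def gpu_hours(total_flops, sustained_flops_per_gpu):
    """GPU-hours at an assumed sustained (not peak) per-GPU throughput."""
    return total_flops / sustained_flops_per_gpu / 3600


flops = training_flops(175e9, 300e9)   # GPT-3 scale: 175B parameters, ~300B tokens
hours = gpu_hours(flops, 100e12)       # ASSUMPTION: 100 TFLOP/s sustained per GPU
cost = hours * 2.0                     # ASSUMPTION: $2 per GPU-hour
print(f"{flops:.2e} FLOPs, {hours:,.0f} GPU-hours, ~${cost:,.0f}")
```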

The Process of AI Model Training

While every AI project comes with its own unique challenges and requirements, the general process for training models follows a well-defined sequence. These five stages form the foundation of AI model training, ensuring that systems not only learn effectively but also deliver reliable results in production.

Step 1: Prepare the Data

Successful AI training starts with data that is accurate, consistent, and representative of real-world scenarios. Without high-quality data, downstream results are unreliable. Teams must identify and curate the right sources, establish both manual and automated collection pipelines, and implement rigorous cleaning and transformation processes. Tasks such as handling missing values, normalizing features, and augmenting data improve both the robustness and fairness of the model. As the saying goes, “garbage in, garbage out”: preparation determines the ceiling of model performance.
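Preparation also includes setting aside data that the model will never train on, because the validation and testing stages later in this process depend on it. Here is a minimal sketch of a reproducible three-way split, assuming independent examples and 80/10/10 proportions (both illustrative choices).

```python
import random


def train_val_test_split(items, val_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle once with a fixed seed, then carve out disjoint validation and
    test sets so later evaluation uses data the model never trained on."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    n_test = int(len(items) * test_frac)
    n_val = int(len(items) * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 80 10 10
```

Deduplicate before splitting so that copies of the same example cannot land in both training and test data. For time-dependent data, split by time rather than at random.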

Step 2: Select a Training Model

Once the foundation of data is in place, the next step is choosing the right model. This involves defining project objectives, selecting an appropriate architecture (e.g., decision trees, CNNs, transformers), and weighing algorithms against practical constraints such as compute resources, deadlines, and costs. Different models demand varying amounts of data and infrastructure. For instance, training a transformer-based large language model requires distributed GPU clusters, while smaller tabular models can run effectively on CPUs. Strategic model selection ensures the system aligns with both technical goals and business realities.

Step 3: Perform Initial Training

Initial training introduces the model to the data, focusing on establishing baseline performance. Similar to teaching a child to distinguish between a cat and a dog, the model begins with basic pattern recognition before advancing to complex reasoning.

At this stage, simplicity is key: using overly large datasets or unnecessarily complex algorithms risks overwhelming the system and producing unstable results. By starting with controlled parameters, data scientists can identify errors early, monitor learning behavior, and guide the model through steady, measurable improvements.
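A simple way to anchor "baseline performance" is to compare against a trivial predictor first. The sketch below scores a model that always predicts the most common training label. If an initial model cannot beat this number, it has not yet learned anything useful. The labels are toy values.

```python
from collections import Counter


def majority_baseline_accuracy(train_labels, eval_labels):
    """Accuracy of always predicting the most common training label."""
    majority = Counter(train_labels).most_common(1)[0][0]
    correct = sum(1 for y in eval_labels if y == majority)
    return majority, correct / len(eval_labels)


print(majority_baseline_accuracy(["cat", "dog", "dog"], ["dog", "cat", "dog", "dog"]))
# ('dog', 0.75): a classifier scoring 75% on this data is no better than guessing "dog"
```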

Step 4: Validate the Training

Once a model demonstrates consistent results during initial training, it moves into validation. Here, the model is tested against separate datasets designed to expose weaknesses and reveal blind spots. Validation emphasizes not just accuracy but also process integrity, using metrics such as:

- Precision: The percentage of positive predictions that are correct.

- Recall: The percentage of actual positives correctly identified.

- F1 Score: The harmonic mean of precision and recall, which is high only when both are high, offering a balanced view of classification performance.
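The three metrics above follow directly from counts of true positives, false positives, and false negatives. A minimal sketch for binary labels (toy data) follows.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall and F1 computed from raw counts."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# The model flags only two of four true positives, but never raises a false alarm.
print(precision_recall_f1([1, 1, 1, 1, 0, 0], [1, 1, 0, 0, 0, 0]))
```

Here precision is 1.0 and recall is 0.5. F1 is about 0.67 rather than the arithmetic mean of 0.75, because the harmonic mean penalizes the weaker of the two.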

By running multiple validation passes, teams can identify issues like overfitting or underfitting, refine hyperparameters, and determine whether the model is ready for real-world testing.

Step 5: Test the Model

The final stage before deployment involves testing with live or production-like data. This “taking off the training wheels” phase evaluates whether the model delivers accurate and expected results outside curated datasets. Passing the test stage signals readiness for deployment, but it does not end the training journey.

AI models are dynamic. Although most deployed models do not update their weights during inference, the new data they encounter in production can feed later rounds of retraining or fine-tuning, refining performance over time. However, continuous monitoring is essential to guard against drift or bias when models encounter outliers or shifting data distributions. If deficiencies emerge, retraining or fine-tuning ensures the model remains aligned with performance standards.

Advanced Techniques in AI Model Training

As AI models become larger and more complex, traditional training approaches are no longer sufficient. Organizations now rely on advanced techniques to accelerate training, reduce costs, and improve model performance. These methods are especially important when working with large language models (LLMs), multimodal systems, or real-time AI applications.

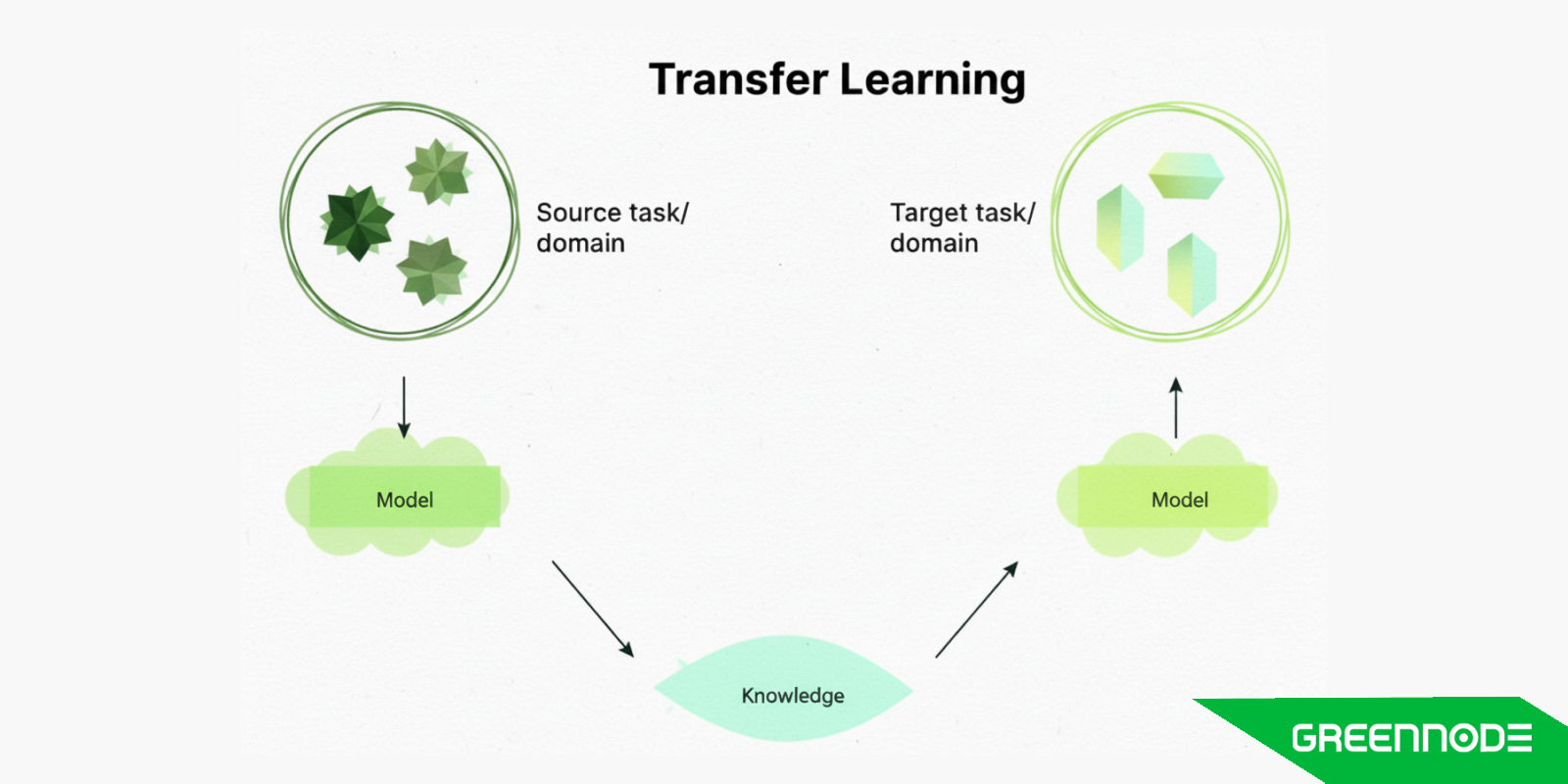

Transfer Learning

Transfer learning leverages pre-trained models as a starting point rather than building from scratch. By adapting an existing model to a new task with domain-specific data, organizations save both time and compute resources. For example, fine-tuning BERT on financial text allows it to perform specialized tasks like sentiment analysis in banking. Studies show transfer learning can reduce training requirements by 50–90% compared to full training.
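The core pattern of transfer learning is to keep the pre-trained network frozen and train only a small new task-specific head on top of its features. The sketch below illustrates this with NumPy. The "pre-trained encoder" is a hypothetical stand-in (a fixed random projection), since loading a real model such as BERT would require a specific framework. The synthetic dataset is a toy assumption.

```python
import numpy as np

rng = np.random.default_rng(0)

# HYPOTHETICAL stand-in for a pre-trained encoder; these weights stay frozen.
W_pretrained = rng.normal(size=(8, 16))


def encode(x):
    """Frozen feature extractor: never updated during fine-tuning."""
    return np.tanh(x @ W_pretrained)


def train_head(x, y, lr=0.1, epochs=500):
    """Train only a new logistic-regression head on the frozen features."""
    feats = encode(x)
    w = np.zeros(feats.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))  # predicted probabilities
        grad = p - y                                 # d(log-loss) / d(logit)
        w -= lr * feats.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b


# Small synthetic "domain-specific" dataset (toy labels).
x = rng.normal(size=(200, 8))
y = (x[:, 0] + x[:, 1] > 0).astype(float)
frozen_before = W_pretrained.copy()
w, b = train_head(x, y)
accuracy = np.mean((encode(x) @ w + b > 0) == (y == 1))
print(f"new head trains {w.size + 1} parameters; training accuracy {accuracy:.2f}")
```

Only 17 numbers are learned here, while the encoder's 128 weights are reused unchanged. The same ratio is what makes transfer learning cheap at much larger scales.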

Fine-Tuning and Parameter-Efficient Tuning

Fine-tuning adjusts all or part of a pre-trained model’s parameters for a new application. Recently, parameter-efficient fine-tuning (PEFT) methods such as LoRA (Low-Rank Adaptation) and prefix tuning have become popular. These approaches keep the original weights frozen and train only a small number of added or selected parameters, making it possible to customize massive LLMs on far more modest hardware than full fine-tuning requires. This has proven critical for startups and research labs with limited resources.
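LoRA's central idea fits in a few lines. The pre-trained weight matrix W stays frozen, and the layer adds a trainable low-rank update, so the output becomes W x + (α/r)·B A x. B starts at zero, so the adapted layer initially behaves exactly like the original. The NumPy sketch below shows only the forward pass and the parameter savings. The training loop is omitted, and the layer size, rank `r`, and scaling `alpha` are illustrative assumptions.

```python
import numpy as np


class LoRALinear:
    """Sketch of a LoRA-adapted linear layer: y = W x + (alpha / r) * B A x.

    W (d_out x d_in) is the frozen pre-trained weight; only the low-rank
    factors A (r x d_in) and B (d_out x r) would be trained.
    """

    def __init__(self, W, r=4, alpha=8, seed=0):
        rng = np.random.default_rng(seed)
        self.W = W                                        # frozen
        d_out, d_in = W.shape
        self.A = rng.normal(scale=0.01, size=(r, d_in))   # trainable
        self.B = np.zeros((d_out, r))                     # trainable, zero-initialised
        self.scale = alpha / r

    def __call__(self, x):
        return self.W @ x + self.scale * (self.B @ (self.A @ x))

    def trainable_params(self):
        return self.A.size + self.B.size


W = np.random.default_rng(1).normal(size=(1024, 1024))  # toy "pre-trained" weight
layer = LoRALinear(W, r=8)
x = np.ones(1024)
print(np.allclose(layer(x), W @ x))       # True: B = 0, so behaviour is unchanged at first
print(layer.trainable_params(), W.size)   # 16384 1048576
```

At rank 8, the adapter trains about 1.6% as many parameters as the full 1024 × 1024 matrix. Optimizer state shrinks by the same ratio, which accounts for much of the memory saving.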

Distributed Training

As models scale into hundreds of billions of parameters, training requires multiple GPUs or nodes. Distributed training techniques include:

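- Data Parallelism: Replicating the full model on every GPU, splitting each batch across them, and averaging the resulting gradients.

- Model Parallelism: Splitting a model's parameters (for example, the weight matrices within a layer) across devices so that no single device has to hold the entire model.

- Pipeline Parallelism: Placing consecutive groups of layers on different devices or nodes and streaming micro-batches through them in sequence.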

Frameworks like DeepSpeed, Megatron-LM, and PyTorch Distributed enable these approaches, making it feasible to train trillion-parameter models.
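Data parallelism works because averaging per-shard gradients reproduces the full-batch gradient when shards are equal in size. The NumPy sketch below simulates this on one machine. Each "worker" computes a gradient on its shard of the batch, and a plain mean stands in for the all-reduce that frameworks such as PyTorch Distributed perform across GPUs. The linear model and random data are toy assumptions.

```python
import numpy as np


def mse_grad(w, x, y):
    """Gradient of mean squared error for a linear model y ≈ x @ w."""
    return 2 * x.T @ (x @ w - y) / len(y)


def data_parallel_grad(w, x, y, n_workers):
    """Simulate data parallelism: every worker holds a full copy of w,
    computes the gradient on its own shard, then the results are averaged."""
    shards = zip(np.array_split(x, n_workers), np.array_split(y, n_workers))
    local_grads = [mse_grad(w, xs, ys) for xs, ys in shards]
    return np.mean(local_grads, axis=0)  # stand-in for an all-reduce (mean)


rng = np.random.default_rng(0)
x, y, w = rng.normal(size=(64, 5)), rng.normal(size=64), rng.normal(size=5)
# With equal-sized shards, the averaged gradient matches the full-batch gradient.
print(np.allclose(data_parallel_grad(w, x, y, n_workers=4), mse_grad(w, x, y)))  # True
```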

Mixed-Precision Training

Mixed-precision combines lower-precision (FP16, BF16, FP8) arithmetic with higher-precision operations. This reduces memory usage and increases throughput without significantly sacrificing accuracy. NVIDIA reports that mixed-precision can cut training time by up to 3x while lowering energy costs, making it a standard practice in enterprise AI pipelines.
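Two standard ingredients make mixed precision safe: loss scaling, and keeping a master copy of the weights in FP32. The NumPy sketch below shows why each is needed. Very small gradients underflow to zero in FP16 unless they are scaled up first. Small weight updates also vanish when added to an FP16 weight. The static scale of 1024 is an illustrative assumption. Frameworks such as PyTorch automate both steps (autocast plus a dynamic gradient scaler).

```python
import numpy as np

# 1. Loss scaling: tiny FP16 gradients underflow to zero.
grad = 1e-8
print(np.float16(grad))                              # 0.0 -> the update would be lost

scale = 1024.0                                       # ASSUMPTION: static loss scale
scaled = np.float16(grad * scale)                    # representable in FP16 (~1.03e-05)
recovered = np.float32(scaled) / np.float32(scale)   # unscale in FP32 before the update
print(recovered)                                     # close to 1e-08

# 2. FP32 master weights: small updates vanish when applied in FP16.
w16 = np.float16(1.0) + np.float16(1e-4)   # FP16 spacing near 1.0 is ~1e-3
w32 = np.float32(1.0) + np.float32(1e-4)
print(w16, w32)                            # 1.0 1.0001
```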

Curriculum Learning

Inspired by human learning, curriculum learning trains models on easier tasks first before gradually introducing harder examples. This structured approach often improves convergence stability and accuracy, particularly in reinforcement learning and complex multimodal models.
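A simple curriculum can be built by sorting examples with a difficulty score and growing the training pool in stages. The sketch below uses word count as a hypothetical difficulty proxy and a cumulative pool, where each stage keeps the easier examples and adds harder ones. Real curricula often score difficulty by model loss or by domain-specific heuristics instead.

```python
def curriculum_stages(examples, difficulty, n_stages=3):
    """Yield the training pool stage by stage, easiest examples first.

    `difficulty` is any scoring function; each stage adds the next slice of
    harder examples to everything seen so far."""
    ordered = sorted(examples, key=difficulty)
    for stage in range(1, n_stages + 1):
        cutoff = round(len(ordered) * stage / n_stages)
        yield ordered[:cutoff]


sentences = ["a cat", "the dog barked loudly at night", "hi", "birds fly south in winter"]
pools = list(curriculum_stages(sentences, difficulty=lambda s: len(s.split())))
for pool in pools:
    print(len(pool), pool[-1])  # pool size and the hardest example included so far
```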

Active Learning

Active learning reduces labeling costs by identifying the most informative data points for annotation. Instead of labeling massive datasets, teams label only what the model struggles with, improving efficiency. This is particularly valuable in domains like healthcare, where labeled data is scarce and expensive.
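"What the model struggles with" is often measured by prediction uncertainty. The sketch below implements uncertainty sampling, one common active-learning strategy. It ranks unlabeled examples by the entropy of the model's predicted class probabilities and sends the most uncertain ones to annotators. The probability values are hypothetical model outputs.

```python
import math


def entropy(probs):
    """Shannon entropy of a predicted class distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(unlabeled_ids, predict_proba, budget):
    """Uncertainty sampling: pick the `budget` examples the model is least sure about."""
    ranked = sorted(unlabeled_ids, key=lambda i: entropy(predict_proba(i)), reverse=True)
    return ranked[:budget]


# HYPOTHETICAL model outputs for five unlabeled scans (probability per class).
fake_probs = {
    "scan1": [0.98, 0.02],
    "scan2": [0.55, 0.45],
    "scan3": [0.90, 0.10],
    "scan4": [0.50, 0.50],
    "scan5": [0.70, 0.30],
}
print(select_for_labeling(list(fake_probs), fake_probs.get, budget=2))  # ['scan4', 'scan2']
```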

Reinforcement Learning with Human Feedback (RLHF)

For aligning large language models, RLHF has become essential. Models generate outputs, human annotators compare or rank them, a reward model is trained on those preferences, and reinforcement learning (commonly PPO) then fine-tunes the model to maximize that learned reward. This technique was central to improving models like ChatGPT, aligning them with user intent and ethical guidelines.
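The reward-model step is typically trained on pairwise comparisons with a Bradley-Terry style loss: −log σ(r_chosen − r_rejected). This loss is small when the model scores the human-preferred response higher and large when it prefers the rejected one. The sketch below shows only that loss, written in a numerically stable form. The reward model itself and the subsequent RL stage are omitted, and the reward values are toy numbers.

```python
import math


def pairwise_preference_loss(r_chosen, r_rejected):
    """-log(sigmoid(r_chosen - r_rejected)), computed stably as softplus(-margin)."""
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(pairwise_preference_loss(2.0, 0.0))  # ~0.127: agrees with the human preference
print(pairwise_preference_loss(0.0, 2.0))  # ~2.127: disagrees, so a large loss
```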

What Are the Challenges in AI Model Training?

Despite rapid progress in AI, training models remains a complex process filled with challenges that impact cost, accuracy, and scalability. Addressing these obstacles is critical for enterprises aiming to move from proof-of-concept to production-ready systems.

Data Quality and Bias

The quality of data defines the ceiling of model performance. No amount of algorithmic sophistication can compensate for incomplete, biased, or low-quality datasets. Bias in data, whether due to underrepresentation of demographic groups, sampling errors, or historical inaccuracies, leads to biased predictions and unfair outcomes.

For example, a study on a widely used U.S. healthcare algorithm, affecting over 200 million patients, revealed that its bias against Black patients reduced the number identified for additional care by more than 50%. Ensuring fairness requires meticulous examination of proxy variables, transparency in algorithmic design, and rigorous sub-group performance testing.
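Sub-group testing means computing the same metric separately for each group instead of only in aggregate. The sketch below computes recall per group: among people who truly needed care, what fraction did the model flag? The data is hypothetical and not taken from the study above. It shows how an overall recall of 4/6 can hide one group at 100% and another at 33%.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups, positive=1):
    """Recall computed separately for each subgroup."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == positive:
            totals[g] += 1
            hits[g] += p == positive  # True counts as 1
    return {g: hits[g] / totals[g] for g in totals}


# HYPOTHETICAL labels, predictions and group memberships.
y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "B", "B", "B", "A", "B"]
print(recall_by_group(y_true, y_pred, groups))  # group A: 1.0, group B: ~0.33
```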

Compute Cost and Energy Efficiency

Training large-scale models is resource-intensive. OpenAI’s GPT-3, with 175 billion parameters, is estimated to have cost millions of dollars in compute time. Beyond cost, the environmental impact is significant: researchers at the University of Massachusetts Amherst estimated that training a single large NLP model, including an extensive neural architecture search, could emit as much carbon as five cars over their lifetimes.

To address this, organizations are adopting strategies such as mixed-precision training, parameter-efficient fine-tuning, and more energy-efficient hardware (e.g., GPUs with FP8 tensor cores). Balancing performance with sustainability is now a key priority.

Overfitting and Underfitting

Overfitting occurs when a model memorizes training data but fails to generalize to new inputs. Underfitting happens when the model is too simplistic to capture underlying patterns. Both scenarios reduce reliability.

Overfitting is common in small datasets with complex models, while underfitting often arises from overly constrained architectures. Regularization techniques (dropout, weight decay), cross-validation, and proper hyperparameter tuning are essential to strike the right balance.
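Dropout is simple enough to show directly. The sketch below implements inverted dropout, the variant most frameworks use. During training, each activation is zeroed with probability `p_drop` and the survivors are scaled by 1/(1 − p_drop), so the expected activation is unchanged. At inference time, inputs pass through untouched. The drop rate and the input size are illustrative.

```python
import numpy as np


def dropout(activations, p_drop, training, rng):
    """Inverted dropout: randomly zero units during training and rescale the rest."""
    if not training or p_drop == 0.0:
        return activations  # inference: no randomness, no rescaling
    keep = rng.random(activations.shape) >= p_drop  # True with probability 1 - p_drop
    return activations * keep / (1.0 - p_drop)


rng = np.random.default_rng(0)
h = np.ones(100_000)
out = dropout(h, p_drop=0.5, training=True, rng=rng)
print(out.mean(), (out == 0).mean())  # mean stays near 1.0; about half the units are zeroed
```

Because the network cannot rely on any single unit always being present, it is pushed toward redundant, more general features. This is the regularizing effect that counters overfitting.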

Transition from Training to Deployment

Training a model is only half the journey; deployment introduces new challenges. Models that perform well in controlled environments may fail under real-world conditions due to distribution shifts, latency requirements, or infrastructure incompatibilities.

Integrating trained models into production requires robust MLOps pipelines, continuous monitoring, and retraining strategies. According to Gartner, 85% of AI projects fail to reach production, highlighting the importance of bridging the gap between research and deployment.

What Are the Best Practices for Training AI Models?

As models grow in scale and complexity, organizations must adopt best practices to ensure efficiency, reliability, and long-term value. These practices not only improve model accuracy but also help manage costs, infrastructure demands, and deployment risks.

Efficient Use of GPUs and Distributed Compute

Modern AI workloads, especially large language models (LLMs), demand significant computational power. Efficient GPU utilization is essential to reduce both time and cost:

- Batching and Mixed-Precision Training: Larger batch sizes keep GPUs fully utilized, while lower-precision formats such as FP16, BF16, or FP8 increase throughput and reduce memory usage with minimal accuracy loss.

- Distributed Training: Scaling training across multiple GPUs or nodes using frameworks like DeepSpeed, Horovod, or PyTorch Distributed is now standard practice.

- Elastic Infrastructure: Leveraging hyperscalers or regional cloud providers ensures that teams can scale up compute for training and scale down when workloads are lighter.

Leveraging Pre-Trained Models and Transfer Learning

Training large models from scratch is often unnecessary and prohibitively expensive. Pre-trained models provide a head start by capturing general features from massive datasets. Fine-tuning these models on domain-specific data significantly reduces compute requirements while improving performance.

- Transfer Learning: Adapt general-purpose models like BERT or ResNet to specialized fields such as legal document analysis or medical imaging.

- Parameter-Efficient Tuning (PEFT): Techniques such as LoRA (Low-Rank Adaptation) or prefix-tuning train only a small set of added or selected parameters while the original weights stay frozen, making fine-tuning feasible on limited hardware.

According to Google Cloud, fine-tuning can cut costs by over 90% compared to training an equivalent model from scratch, making it a vital strategy for both startups and enterprises.

Automating Pipelines with MLOps

Training AI models is not a one-off task; it is part of a lifecycle. MLOps (Machine Learning Operations) applies DevOps principles to AI, automating repetitive processes and ensuring reproducibility.

- Automation: Tools such as Kubeflow, MLflow, and Vertex AI Pipelines help automate data ingestion, training runs, hyperparameter tuning, and model deployment.

- Versioning and Reproducibility: Tracking datasets, models, and training runs ensures experiments can be replicated and audited.

- Continuous Integration/Continuous Deployment (CI/CD): MLOps enables faster iteration by pushing new models into production without disrupting live systems.

This reduces human error and accelerates time-to-market, particularly for enterprises deploying AI at scale.
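Tools such as MLflow handle versioning and run tracking for you. The underlying idea can be sketched with the standard library alone: fingerprint the exact training data with a content hash, then append that hash, the configuration, and the resulting metrics to a log. The record format and function names below are hypothetical and are not any tool's API. The config, data, and metric values are placeholders.

```python
import hashlib
import json
import os
import tempfile
import time


def dataset_fingerprint(records):
    """SHA-256 over a canonical JSON dump of each record, tying a run to its exact data."""
    h = hashlib.sha256()
    for rec in records:
        h.update((json.dumps(rec, sort_keys=True) + "\n").encode("utf-8"))
    return h.hexdigest()


def log_run(path, config, data, metrics):
    """Append one reproducibility record per training run (JSON Lines)."""
    entry = {
        "timestamp": time.time(),
        "data_sha256": dataset_fingerprint(data),
        "config": config,
        "metrics": metrics,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(entry, sort_keys=True) + "\n")
    return entry


# Placeholder config, data and metric values, for illustration only.
run_log = os.path.join(tempfile.mkdtemp(), "runs.jsonl")
entry = log_run(run_log, {"lr": 3e-4, "batch_size": 32},
                [{"text": "example", "label": 1}], {"val_f1": 0.0})
print(entry["data_sha256"][:12])
```

If a later audit finds a different data hash for the same config, the two runs were not trained on the same data. That is exactly the kind of discrepancy that reproducibility tracking exists to catch.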

Monitoring and Retraining for Production Models

Deployment does not end the training journey. Models degrade over time as they encounter new data distributions, user behaviors, or adversarial conditions, a phenomenon known as model drift. Best practices include:

- Monitoring: Continuous tracking of key metrics such as accuracy, latency, and fairness.

- Feedback Loops: Collecting user feedback and real-world results to guide retraining.

- Scheduled Retraining: Periodically refreshing models with new data ensures relevance.

- Adaptive Learning: In critical domains like fraud detection or cybersecurity, real-time retraining pipelines can automatically adapt to new threats.
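Drift monitoring needs a number that can trigger an alert. One common choice, used here as an illustration, is the Population Stability Index (PSI). It compares how a feature's values are distributed across bins in the training data versus in live traffic. The bin edges, the small floor for empty bins, and the widely used rule-of-thumb thresholds in the docstring are conventions rather than fixed standards. The samples are toy values.

```python
import math


def population_stability_index(expected, actual, bins):
    """PSI between a reference (training) sample and a live sample.

    `bins` are ascending edges; values outside them fall into the end bins.
    Rule of thumb: < 0.1 stable, 0.1-0.25 moderate shift, > 0.25 major shift."""
    def proportions(values):
        counts = [0] * (len(bins) + 1)
        for v in values:
            counts[sum(v >= edge for edge in bins)] += 1  # index of v's bin
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_sample = [1, 2, 3, 4, 5, 6, 7, 8]
live_sample = [6, 7, 8, 9, 10, 11, 12, 13]  # the feature has shifted upward
edges = [3, 6, 9]
print(population_stability_index(training_sample, training_sample, edges))  # 0.0
print(population_stability_index(training_sample, live_sample, edges))      # far above 0.25
```

In practice, a PSI alert on an important feature is a signal to investigate and possibly trigger the scheduled or adaptive retraining described above.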

How to Choose the Right AI Model Training Tool

Selecting the right tool or platform for AI model training is one of the most critical decisions in the development pipeline. The choice impacts not only the speed and accuracy of training but also scalability, integration, and long-term cost efficiency. Organizations should evaluate tools and platforms against several key criteria.

Alignment with Workload Type

Different tools are optimized for different workloads:

- Computer Vision: Frameworks such as TensorFlow and PyTorch offer strong support for convolutional neural networks (CNNs) and GPU acceleration.

- Natural Language Processing (NLP): Hugging Face Transformers and OpenNMT excel at training and fine-tuning transformer-based architectures for LLMs and machine translation.

- Reinforcement Learning: Libraries like Ray RLlib, which supports distributed training, and Stable Baselines, which provides reliable implementations of standard algorithms, specialize in reinforcement learning workloads.

Start by matching the tool to the task. A platform that excels in NLP may not be equally efficient for high-resolution image generation or reinforcement learning.

Scalability and Infrastructure Compatibility

The best training tool is one that scales with your needs:

- Single-Node Training: Often sufficient for prototypes or small models.

- Distributed Training Frameworks: Tools such as PyTorch Distributed, Horovod, or DeepSpeed are necessary for models with billions of parameters.

- Cloud-Native Platforms: Hyperscalers and regional cloud providers often integrate training tools into their ecosystems, providing elastic compute resources and managed services.

Compatibility with existing hardware (GPUs, TPUs, or custom accelerators) and cluster managers (Kubernetes, Slurm) should be carefully considered to avoid vendor lock-in.

Ease of Integration with MLOps Pipelines

AI training doesn’t stop at experimentation; it must flow into production. Look for tools that integrate with MLOps frameworks such as Kubeflow, MLflow, or SageMaker. Features like model versioning, experiment tracking, and automated retraining ensure that workflows remain reproducible and auditable.

Cost and Resource Efficiency

Training budgets vary dramatically depending on the model scale. Tools that support mixed-precision training, checkpointing, and parameter-efficient fine-tuning can lower compute costs substantially. Many cloud-native tools also offer pay-as-you-go models, enabling startups to experiment affordably while allowing enterprises to scale with predictable costs.

Security and Compliance Considerations

For enterprises in regulated industries (e.g., healthcare, finance, government), security and compliance cannot be overlooked. The right tool should support features such as role-based access control, data encryption, and compliance certifications (GDPR, HIPAA, or ISO standards).

FAQs about AI Model Training

1. What is AI model training, and why is it important?

AI model training is the process of teaching an algorithm to recognize patterns and make predictions by learning from data. It involves feeding large datasets into a model, adjusting parameters to minimize errors, and evaluating performance against benchmarks. Without proper training, AI models cannot generalize to real-world scenarios, making training the foundation of effective AI systems.

2. How long does it take to train an AI model?

Training time varies widely depending on the size of the model, dataset, and infrastructure. Small machine learning models can be trained in minutes on a laptop, while large language models (LLMs) with billions of parameters can require weeks on thousands of GPUs. Techniques like distributed training, mixed-precision computation, and fine-tuning pre-trained models are often used to reduce training time and cost.

3. Do I need GPUs to train an AI model?

Not always. Small models or simple tasks can run efficiently on CPUs. However, GPUs (and sometimes TPUs or custom accelerators) are critical for deep learning and LLMs because they can handle the parallel computations required for training. For startups or teams without on-premise hardware, cloud providers and regional GPU services offer flexible, on-demand access to powerful infrastructure.

4. What is the difference between training from scratch and fine-tuning?

Training from scratch involves building a model with randomly initialized weights, requiring massive datasets and high compute power.

Fine-tuning starts with a pre-trained model and adapts it to a specific domain or task. This reduces training costs by up to 80–90%, makes it feasible for smaller teams, and often improves accuracy on specialized tasks.