The Spark of a Bold Idea

Every great journey begins with a spark: a moment of inspiration that lights the path forward. In the case of building a Large Language Model (LLM) from scratch, that spark is the idea of creating something revolutionary, something that could reshape how we interact with technology.

It’s an idea that holds immense promise but also demands courage, for the road ahead is long and fraught with challenges.

The early days of this journey are filled with excitement and ambition. The team, though small, is driven by a shared vision to build a cutting-edge LLM that could rival or even surpass the giants in the field.

However, as the initial excitement begins to settle, the gravity of the task at hand becomes clear. Building an LLM from scratch is not just about coding and data—it’s about navigating uncharted waters with limited resources and an unforgiving clock ticking in the background.

“Nothing Is Permanent Except Change”

Running a startup already means dealing with many unknown variables. Taking on the challenge of building an LLM at the same time makes it even more difficult.

That’s why having the right equipment is crucial, and in this case, that means acquiring access to vast amounts of computational power. But for a startup, every decision is a gamble, with limited resources at stake.

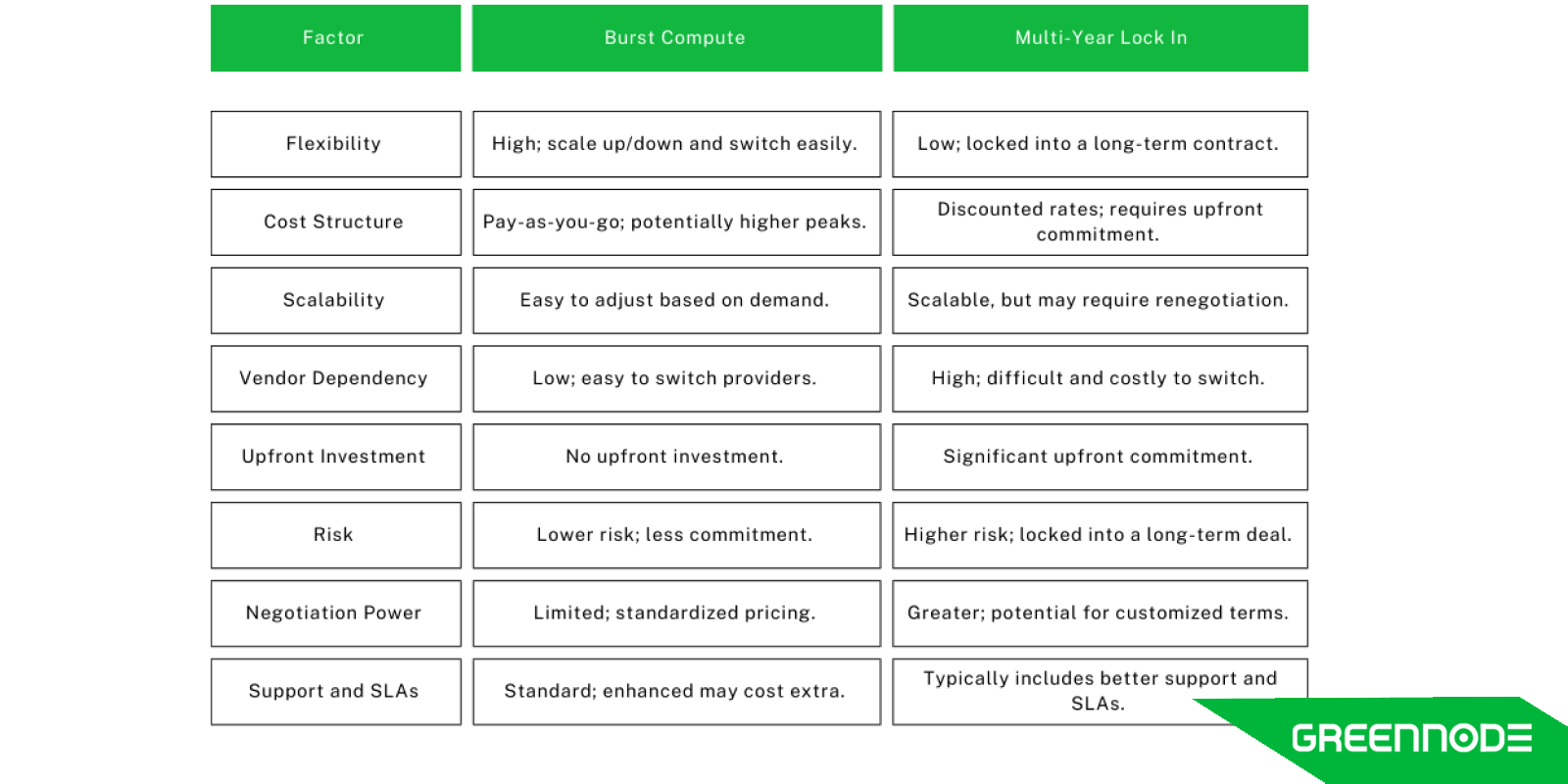

One of the first major crossroads on this journey is choosing between burst compute and a multi-year lock-in with a cloud vendor. Here is how the two options compare:

Burst computing is like renting a high-powered boat for a short, intense stretch of the river. It’s more expensive, but it offers the flexibility to navigate swiftly and change course if needed. This approach is ideal for one-off pretraining models, allowing the startup to scale up, use the resources, and then release them without the burden of a long-term commitment.

On the other hand, a multi-year lock-in feels like purchasing a long-term lease on a boat, promising stability over time. But stability can be deceptive in these fast-moving waters.

Vendors can delay, and the quality of the hardware they provide can vary significantly, turning what seemed like a safe bet into a perilous trap. Being locked into a bad vendor, with subpar cluster performance, can sink the entire operation.
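To make the trade-off between burst compute and a multi-year lock-in more concrete, here is a minimal Python sketch of the underlying arithmetic. Every number in it (hourly rates, GPU count, run length, contract term) is a hypothetical placeholder chosen for illustration, not a vendor quote, and the function names are invented for this example. It also ignores the delays and hardware-quality issues described above, which would push the comparison further toward flexibility.

```python
def burst_cost(gpu_hours_used: float, burst_rate: float) -> float:
    """Burst compute: pay only for the GPU-hours actually used."""
    return gpu_hours_used * burst_rate


def lock_in_cost(num_gpus: int, term_hours: float, reserved_rate: float) -> float:
    """Lock-in: pay for every GPU for the whole term, busy or idle."""
    return num_gpus * term_hours * reserved_rate


def break_even_utilization(burst_rate: float, reserved_rate: float) -> float:
    """Fraction of the term the cluster must be busy before lock-in is cheaper."""
    return reserved_rate / burst_rate


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    num_gpus = 64
    burst_rate = 4.0        # price per GPU-hour, burst
    reserved_rate = 2.0     # price per GPU-hour, committed
    run_hours = 30 * 24     # one 30-day pretraining run
    term_hours = 365 * 24   # one year of a committed contract

    print("burst, one run:", burst_cost(num_gpus * run_hours, burst_rate))
    print("lock-in, one year:", lock_in_cost(num_gpus, term_hours, reserved_rate))
    print("break-even utilization:", break_even_utilization(burst_rate, reserved_rate))
```

With these placeholder rates, the committed cluster only becomes the cheaper option if it is kept busy at least half of the term, which is a hard bar to clear for a one-off pretraining run.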

When weighing your options, it's also worth considering a third one: building your own infrastructure. While this gives you complete control, it can be extremely challenging and costly.

Building your own setup requires significant investment in both time and resources, and you won't have the support that comes with using large cloud providers, making it a much more demanding option overall.

Hardware’s Unpredictability

Training a Large Language Model at a new startup can surface unexpected hardware challenges. These problems can arise from many sources, and they are sometimes difficult to pinpoint or explain.

It’s not about superstition but rather the inherent complexities of managing and optimizing physical technology in a fast-moving, resource-constrained environment. Being prepared for these uncertainties is crucial as you work to develop and scale your LLM.

Startups can find a practical edge by partnering with vendors who have already tackled similar projects. These vendors often have well-developed architectures that might match the startup’s requirements closely. This alignment can significantly reduce the need for extensive customization, saving both time and resources.

Moreover, working with experienced vendors means tapping into their engineering expertise. Their teams are familiar with handling projects like yours and can help you navigate potential challenges more effectively. This experience can be invaluable, providing a smoother path from deployment to operation.

By choosing to collaborate with a vendor that has a proven track record, startups not only gain access to a reliable infrastructure but also benefit from reduced risks associated with hardware and performance variability. It’s a strategic way to leverage existing knowledge and support, making the complex process of model deployment more manageable.

The Size of the Team Is Also a Concern

Having cool hardware is nice, don't get me wrong, but it doesn't run by itself. A lot of hardware requires a lot of people. The team, though small and dedicated, begins to feel the strain of the journey.

In the early days, the startup takes pride in its lean, flat structure, with a small group of highly skilled individuals handling the workload. But as the project progresses, the cracks begin to show.

The workload, like the relentless current of the river, becomes overwhelming. The team members, despite their expertise and dedication, find themselves stretched too thin.

Exhaustion looms as a constant threat, with burnout becoming a real possibility. The startup’s initial pride in its small, nimble team begins to give way to the realization that growth and delegation are necessary for long-term sustainability.

In hindsight, the startup reflects on the importance of strategic hiring. The early focus on individual contributors—people who excel at diving deep into their work—proves to be a double-edged sword.

While individual prowess is admirable, there is only so much one person can do before becoming too tired to continue. A more balanced approach, with a mix of hands-on experts and leaders who can manage and distribute the workload, would have alleviated some of the pressure and allowed the team to navigate the journey more smoothly.

Which Pill to Take?

When embarking on model development, every decision feels crucial. With the infrastructure in place and the right team assembled, the next step is choosing the development approach. For smaller teams, this choice can feel particularly high-stakes, as there's less room for trial and error compared to larger organizations. The pressure to get it right the first time is real.

Imagine this phase as a scene from The Matrix, where you must choose between the red pill and the blue pill. Here, though, there are three options: building a new, dense model, tuning an existing one, or experimenting with an untested architecture. Each option carries its own set of risks and rewards. To make it easier to see the differences, here is a table:

| Approach | Maturity | Investment | Customization | Rewards | Risks |
| --- | --- | --- | --- | --- | --- |
| Choosing a New, Dense Model | Low | High | High | Cutting-edge capabilities, high potential performance | Unproven outcomes, high development and training costs |
| Tuning an Existing Model | High | Moderate | Moderate | Cost-effective, faster deployment, leveraging known performance | Limited improvements, potential performance ceiling |
| Exploring Untested Architecture | Very Low | Very High | Very High | Breakthrough advancements, novel capabilities | High uncertainty, risk of unforeseen challenges, possible wasted resources |

Choosing a new, dense model might offer cutting-edge capabilities and high potential performance, but it comes with significant development and training costs. The risk lies in unproven outcomes and the possibility that the model may not deliver as expected.

Tuning an existing model can be a more cost-effective approach, leveraging prior work and known performance. The rewards include potentially faster deployment and lower costs, but the risk involves limited improvements and the possibility of hitting the model's performance ceiling.

Exploring innovative, untested architecture opens the door to breakthrough advancements and novel capabilities, offering the potential for significant rewards if successful. However, it also comes with high uncertainty and the risk of unforeseen challenges, which could lead to wasted resources if the architecture doesn’t meet expectations.

In this high-stakes environment, risk is a constant companion. To achieve something groundbreaking, you need to be prepared to take calculated risks. Waiting for a flawless solution isn’t an option; every moment of indecision costs valuable resources, and idle time can quickly lead to mounting expenses.

Yet this high-pressure situation can spark creativity and drive innovation. Decisions are guided by data and research, combined with intuition and experience. Balancing careful analysis with instinct, you navigate the limited runway and stay ahead of the competition, making each decision a crucial step toward success.

Final Thoughts

The journey of building a Large Language Model from scratch is a rigorous test of resilience, adaptability, and strategic thinking. It demands not only technical expertise but also the capability to navigate a constantly evolving landscape with limited resources. This journey is marked by a series of challenges that require both skill and the flexibility to adjust as needed.

Lessons Learned

Each challenge faced along the way offers valuable lessons that can guide you toward effective solutions. With this in mind, here are the key lessons that can help you steer your project through the highs and lows of LLM development.

- Embrace Flexibility in Resource Management: Burst computing provides the agility necessary for navigating the unpredictable nature of LLM training. It enables quick adjustments and reduces the risks associated with long-term commitments.

- Prioritize Strategic Team Building: A well-rounded approach to hiring—combining strong individual contributors with effective leaders—can help manage the intense workload and prevent burnout.

- Make Informed, Intuitive Modelling Decisions: In a high-stakes environment, every decision has a significant impact. Balancing informed analysis with intuitive judgment while managing risk is essential for achieving success.

Call to Action

Starting up is tough. Building your own LLM is even tougher. But with GreenNode, you’re not alone in this journey. Our cutting-edge infrastructure and seasoned team of experts are here to guide you through every step, from initial setup to optimizing your models.

Let us handle the complexities, so you can stay focused on innovating and shaping the future of AI.

Start your journey with GreenNode today and turn your vision into reality.