Deploying machine learning models bridges the gap between experimentation and execution. It’s the stage where data science meets engineering, where models leave the comfort of Jupyter notebooks and start serving users at scale. Whether it’s recommending products, detecting fraud, or automating operations, successful deployment ensures that machine learning drives measurable outcomes, not just research milestones.

In this guide, we’ll explain what machine learning models are and what deploying them actually means, walk through the key deployment steps, and explore future trends shaping how models are built and kept reliable and efficient in production.

Machine Learning Model Overview

What are machine learning models?

A machine learning model is a mathematical representation that learns patterns from data to make predictions or decisions without being explicitly programmed. Rather than following fixed rules, it adapts by analyzing examples and discovering relationships between input features and outcomes.

As Microsoft explains, it is “an object that has been trained to recognize certain types of patterns,” while IBM defines it as a system that “learns the patterns of training data and subsequently makes accurate inferences about new data.”

In essence, a machine learning model is the product of training an algorithm on real-world data. Once trained, it can recognize trends, classify information, detect anomalies, and generate predictions automatically.

Machine learning models are now the foundation of modern AI systems. They power everything from voice recognition and recommendation engines to fraud detection and autonomous vehicles. Whether the task is classification (identifying spam emails), regression (forecasting sales), or clustering (grouping similar customers), every model follows the same principle: learn from past data to make informed predictions on future or unseen data.

Machine Learning Model Example

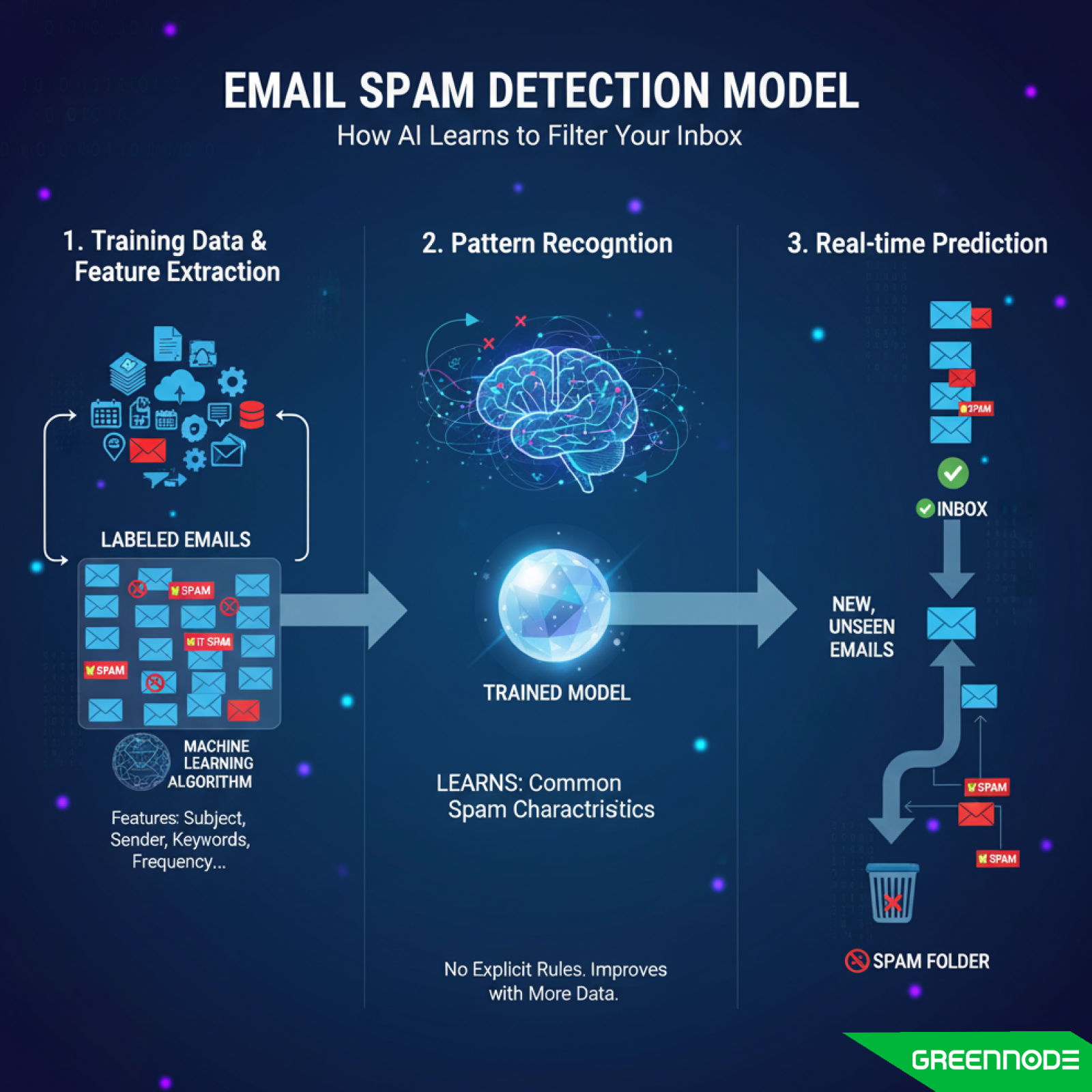

To understand how machine learning models work in practice, consider the case of a spam detection model used by email services. Engineers feed the model thousands of labeled emails, some tagged as “spam,” others as “not spam.” Each email includes features such as subject line, sender address, keywords, and message frequency. The model analyzes these examples to learn what characteristics are common in spam messages.

Once trained, the model can evaluate new, unseen emails and predict whether each one is spam or legitimate by applying the patterns it learned, with no hand-written rules required. As it is retrained on more (and more representative) labeled emails, its predictions generally improve.
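To make the spam example concrete, here is a minimal sketch of a classifier trained on a handful of labeled emails. It uses multinomial Naive Bayes over word counts, one simple technique among many (the text above does not prescribe an algorithm); the toy emails and the function names `train_spam_model` and `predict` are hypothetical.

```python
import math
from collections import Counter

def tokenize(text):
    # Lowercase and split on whitespace; real filters use richer features.
    return text.lower().split()

def train_spam_model(emails):
    """emails: list of (text, label) pairs, label in {"spam", "ham"}."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in emails:
        doc_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return {"word_counts": word_counts, "doc_counts": doc_counts, "vocab": vocab}

def predict(model, text):
    """Return the label with the highest log-probability score."""
    total_docs = sum(model["doc_counts"].values())
    vocab_size = len(model["vocab"])
    scores = {}
    for label in ("spam", "ham"):
        counts = model["word_counts"][label]
        total_words = sum(counts.values())
        # Log prior: how common this class was in the training data.
        score = math.log(model["doc_counts"][label] / total_docs)
        for word in tokenize(text):
            # Add-one (Laplace) smoothing so unseen words don't zero out the score.
            score += math.log((counts[word] + 1) / (total_words + vocab_size))
        scores[label] = score
    return max(scores, key=scores.get)

training_data = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to friday", "ham"),
    ("please review the project report", "ham"),
]
model = train_spam_model(training_data)
print(predict(model, "free prize money"))        # spam
print(predict(model, "project meeting friday"))  # ham
```

Real spam filters use far more data and richer features (sender reputation, headers, links), but the workflow is the same: learn from labeled examples, then score unseen messages.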

Another common example is a product recommendation model in e-commerce. Here, the model learns from historical customer data, such as purchase history, browsing behavior, and product similarities, to predict what a user is most likely to buy next. When you see a “You might also like…” section on a retail website, that’s often a machine learning model at work, personalizing the experience in real time.
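A very simple version of the “You might also like…” idea counts which products appear together in past orders. The sketch below uses item co-occurrence counts, a deliberately basic stand-in for the learned models production recommenders use; the order data and the `recommend` function are hypothetical.

```python
from collections import Counter
from itertools import permutations

def build_cooccurrence(orders):
    """Count how often each ordered pair of products appears in the same order."""
    co_counts = {}
    for order in orders:
        for a, b in permutations(set(order), 2):
            co_counts.setdefault(a, Counter())[b] += 1
    return co_counts

def recommend(co_counts, product, k=2):
    """Return up to k products most often bought together with `product`."""
    # Sort by count (descending), then by name so ties are deterministic.
    ranked = sorted(co_counts.get(product, Counter()).items(),
                    key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:k]]

orders = [
    ["laptop", "mouse", "laptop_bag"],
    ["laptop", "mouse"],
    ["phone", "phone_case"],
    ["laptop", "laptop_bag", "usb_hub"],
]
co_counts = build_cooccurrence(orders)
print(recommend(co_counts, "laptop"))  # ['laptop_bag', 'mouse']
```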

From identifying credit card fraud to optimizing supply chains or supporting medical diagnoses, machine learning models transform data into actionable insights. They don’t just automate tasks; they enable systems to learn, adapt, and improve over time.

Machine Learning Model Types

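There are many ways to categorize machine learning models; a practical grouping uses five broad types, each suited to different data problems and learning objectives. The categories overlap: deep learning and ensemble models, for example, can themselves be trained in supervised, unsupervised, or reinforcement learning settings.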

- Supervised Learning Models

- Unsupervised Learning Models

- Reinforcement Learning Models

- Deep Learning / Neural Networks

- Ensemble & Hybrid Models

Understanding these categories helps determine how to train and apply a model effectively.

Supervised Learning Models

In supervised learning, the model is trained on labeled data, which means each training example includes both the inputs and the correct output. The model learns the mapping between the two, allowing it to predict outcomes for unseen data.

Supervised models are widely used for:

- Regression tasks, where the goal is to predict continuous values (e.g., forecasting sales or temperature).

- Classification tasks, which assign data points to discrete categories (e.g., detecting spam emails or diagnosing medical conditions).

Common algorithms include Linear Regression, Logistic Regression, Decision Trees, Random Forests, and Support Vector Machines (SVMs). Supervised learning remains the backbone of most real-world machine learning systems, partly because labeled data makes it straightforward to measure and validate model performance.
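As a minimal illustration of a supervised regression task, the sketch below fits Linear Regression with a single input feature using the closed-form least-squares formulas, then predicts for an input it has not seen. The advertising-spend and sales figures are made up for illustration.

```python
def fit_linear_regression(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Labeled training data: input feature (ad spend) and correct output (sales).
ad_spend = [1.0, 2.0, 3.0, 4.0]
sales = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_linear_regression(ad_spend, sales)
print(slope, intercept)         # 2.0 1.0
print(slope * 5.0 + intercept)  # 11.0, the prediction for an unseen input
```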

Unsupervised Learning Models

Unsupervised learning deals with unlabeled data: the model must discover hidden patterns or groupings without predefined answers. These models are valuable for exploring relationships in complex or poorly understood datasets.

Typical approaches include:

- Clustering, where data points are grouped by similarity (e.g., segmenting customers by behavior using K-Means or DBSCAN).

- Dimensionality reduction, which simplifies high-dimensional data into key features (e.g., using PCA or t-SNE for visualization).

Unsupervised learning is frequently used in data exploration, anomaly detection, recommendation systems, and feature extraction before supervised training.
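Clustering can be sketched with a bare-bones K-Means (Lloyd’s algorithm) on one-dimensional data, such as monthly spend per customer. No labels are given; the algorithm alternates between assigning points to the nearest centroid and moving each centroid to the mean of its points. The data, starting centroids, and the `kmeans_1d` name are illustrative assumptions.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Lloyd's algorithm on 1-D data: assign points, then move centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        # Assignment step: each point joins its nearest centroid.
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (keep the old centroid if a cluster ends up empty).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical monthly spend per customer; no labels are provided.
spend = [10, 12, 11, 95, 100, 105]
centroids, clusters = kmeans_1d(spend, centroids=[0.0, 50.0])
print(centroids)  # [11.0, 100.0]
print(clusters)   # [[10, 12, 11], [95, 100, 105]]
```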

Reinforcement Learning Models

Reinforcement learning (RL) is based on interaction and feedback. Instead of being told the correct answer, an RL agent learns through trial and error, receiving rewards for good actions and penalties for bad ones. Over time, it learns the strategy that maximizes cumulative reward.

This approach is ideal for sequential decision-making problems, such as robotics, gaming (like AlphaGo), autonomous vehicles, and resource optimization. Algorithms such as Q-Learning, Deep Q-Networks (DQN), and Policy Gradient methods are among the most popular in this field.
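The trial-and-error loop can be illustrated with tabular Q-Learning on a tiny, hypothetical “corridor” environment: the agent starts at position 0 and earns a reward of 1 only when it reaches position 4. The environment, the hyperparameters (`alpha`, `gamma`, `epsilon`), and the episode count are assumptions chosen for illustration.

```python
import random

N_STATES = 5        # positions 0..4; position 4 is the goal
ACTIONS = [-1, +1]  # move left or move right

def step(state, action):
    """Hypothetical environment: reward 1 only when the goal is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward, next_state == N_STATES - 1

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit, sometimes explore; break ties randomly.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Move Q toward reward + discounted value of the best next action.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # best learned action per non-goal position (+1 means move right)
```

With these settings the learned policy should choose +1 (move right) at every non-goal position, since that is the shortest path to the reward; the discount factor `gamma` is what makes shorter paths worth more.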

Deep Learning / Neural Networks

Deep learning is a subset of machine learning inspired by how the human brain processes information. These models use artificial neural networks (ANNs) composed of multiple layers that learn complex, non-linear relationships from massive datasets.

Key architectures include:

- Convolutional Neural Networks (CNNs) – for image and vision tasks.

- Recurrent Neural Networks (RNNs) and LSTMs – for sequential data such as speech and text.

- Transformers – for natural language processing and large language models (LLMs) that power generative AI.

Deep learning has enabled breakthroughs in computer vision, speech recognition, translation, and generative modeling, but it typically requires substantial computational resources and large volumes of data.
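To see why stacked non-linear layers matter, consider XOR, a function that no single linear model can represent. The sketch below hand-sets the weights of a tiny two-layer network with ReLU activations that computes it. In real deep learning such weights are learned from data by backpropagation, and frameworks such as PyTorch or TensorFlow provide the layers; the function names here are illustrative.

```python
def relu(x):
    return max(0.0, x)

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: weighted sums plus bias, then an optional activation."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

def xor_network(x1, x2):
    # Hidden layer: two ReLU units (weights set by hand, not learned).
    hidden = dense([x1, x2], weights=[[1.0, 1.0], [1.0, 1.0]],
                   biases=[0.0, -1.0], activation=relu)
    # Output layer: a linear combination of the hidden units.
    return dense(hidden, weights=[[1.0, -2.0]], biases=[0.0])[0]

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))  # outputs 0.0, 1.0, 1.0, 0.0
```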

Ensemble & Hybrid Models

Ensemble learning combines multiple machine learning models to improve predictive accuracy and robustness. Instead of relying on a single algorithm, ensemble methods aggregate outputs from several models to reduce bias and variance.

Popular ensemble techniques include:

- Bagging (e.g., Random Forests) – trains models independently on bootstrap samples of the data and averages (or votes on) their results.

- Boosting (e.g., XGBoost, AdaBoost) – sequentially trains models to correct previous errors.

- Stacking – trains a meta-learner that combines the predictions of different base models into a final prediction.
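The bagging idea can be sketched with decision stumps (one-threshold classifiers), each trained on its own bootstrap sample and combined by majority vote. The stump learner, the toy data, and the function names are illustrative assumptions, not how any particular library implements Random Forests.

```python
import random
from collections import Counter

def train_stump(samples):
    """Choose the threshold t that best fits 'predict 1 if x >= t, else 0'."""
    # Candidate thresholds: every observed x, plus infinity ("always predict 0").
    candidates = [x for x, _ in samples] + [float("inf")]
    best_threshold, best_errors = None, float("inf")
    for t in candidates:
        errors = sum((x >= t) != bool(label) for x, label in samples)
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold

def train_bagged_stumps(data, n_models=5, seed=42):
    """Bagging: train each stump on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    return [train_stump([rng.choice(data) for _ in data]) for _ in range(n_models)]

def predict_bagged(thresholds, x):
    """Aggregate the independently trained stumps by majority vote."""
    votes = Counter(int(x >= t) for t in thresholds)
    return votes.most_common(1)[0][0]

data = [(1.0, 0), (2.0, 0), (3.0, 0), (6.0, 1), (7.0, 1), (8.0, 1)]
stumps = train_bagged_stumps(data)
print([predict_bagged(stumps, x) for x in (0.5, 10.0)])
```

Because each stump sees a slightly different resample, individual thresholds vary; the vote smooths out that variance, which is the point of bagging.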

Hybrid models extend this idea further by mixing classical machine learning with deep learning architectures, or by combining supervised and unsupervised components, to achieve better generalization and interpretability.

Each machine learning model type serves a different purpose: supervised models learn from known examples, unsupervised models uncover structure, reinforcement models adapt through feedback, deep learning captures complex patterns, and ensembles blend strengths for higher accuracy.

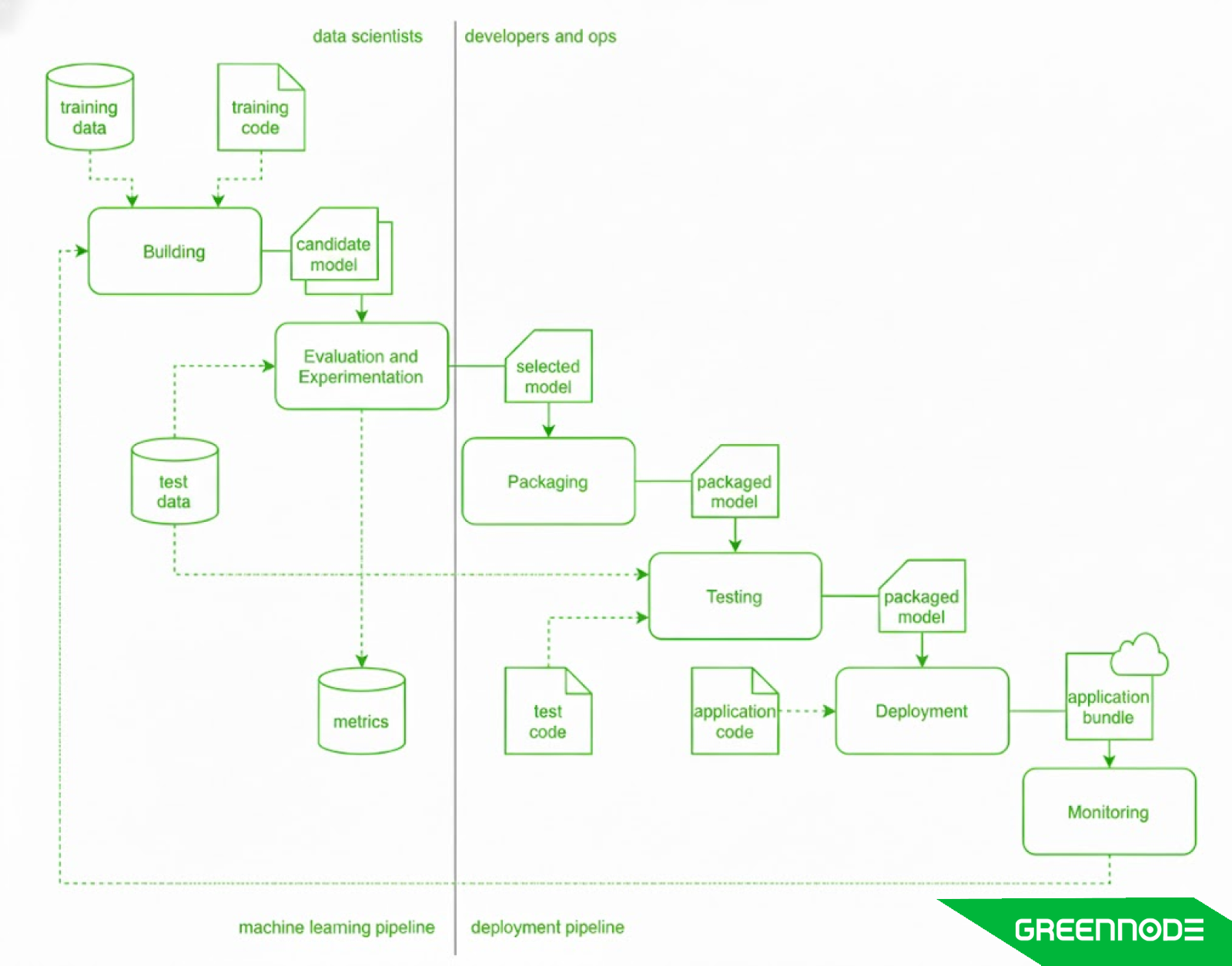

How to Deploy Machine Learning Models

Once a machine learning model has been trained and validated, the next step is deployment, which is the process of integrating it into a production environment so it can generate predictions in real-world applications. Deployment bridges the gap between development and business impact, turning a model from a research experiment into a usable solution that supports decisions, automates tasks, or powers intelligent products.

How models are deployed varies with infrastructure, use case, and performance requirements, but the fundamental steps are similar across most organizations. Here are the key steps of machine learning model deployment:

- Prepare and Package the Model

- Choose a Deployment Strategy

- Integrate With an Application or API

- Monitor Model Performance in Production

- Automate with MLOps Pipelines

- Ensure Governance, Security, and Compliance

Prepare and Package the Model

Before deployment, the model must be properly packaged for reuse. This includes saving the trained model in a consistent format, such as ONNX, TensorFlow SavedModel, or pickle for Python-based models, along with everything needed to run it: library dependencies, configuration, and preprocessing code.

Many teams use experiment-tracking and versioning tools such as MLflow, DVC, or Weights & Biases to track experiments, manage model versions, and ensure reproducibility. Containerization tools like Docker package the model together with its runtime environment so it runs consistently across servers and cloud environments.
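For a Python model, a minimal packaging step might serialize the trained object together with metadata such as a version string and a checksum, so the serving side can verify it loaded the expected artifact. This sketch uses only the standard library (`pickle`, `json`, `hashlib`); the file layout and the `model_version` field are assumptions, and in practice tools like MLflow manage this bookkeeping. Unpickling can execute arbitrary code, so only load artifacts from trusted sources.

```python
import hashlib
import json
import pickle
import tempfile
from pathlib import Path

def save_model(model, directory, version):
    """Write the model plus a small metadata file (hypothetical layout)."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    payload = pickle.dumps(model)
    (directory / "model.pkl").write_bytes(payload)
    metadata = {
        "model_version": version,
        "sha256": hashlib.sha256(payload).hexdigest(),
    }
    (directory / "metadata.json").write_text(json.dumps(metadata, indent=2))
    return metadata

def load_model(directory):
    """Load the model only if its checksum matches the recorded one."""
    directory = Path(directory)
    payload = (directory / "model.pkl").read_bytes()
    metadata = json.loads((directory / "metadata.json").read_text())
    if hashlib.sha256(payload).hexdigest() != metadata["sha256"]:
        raise ValueError("model artifact does not match recorded checksum")
    # Only unpickle trusted files: pickle can run arbitrary code on load.
    return pickle.loads(payload), metadata

# A toy "model": the coefficients of a fitted linear regression.
trained = {"slope": 2.0, "intercept": 1.0}
with tempfile.TemporaryDirectory() as tmp:
    saved_meta = save_model(trained, tmp, version="1.0.0")
    restored, meta = load_model(tmp)
print(restored, meta["model_version"])
```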

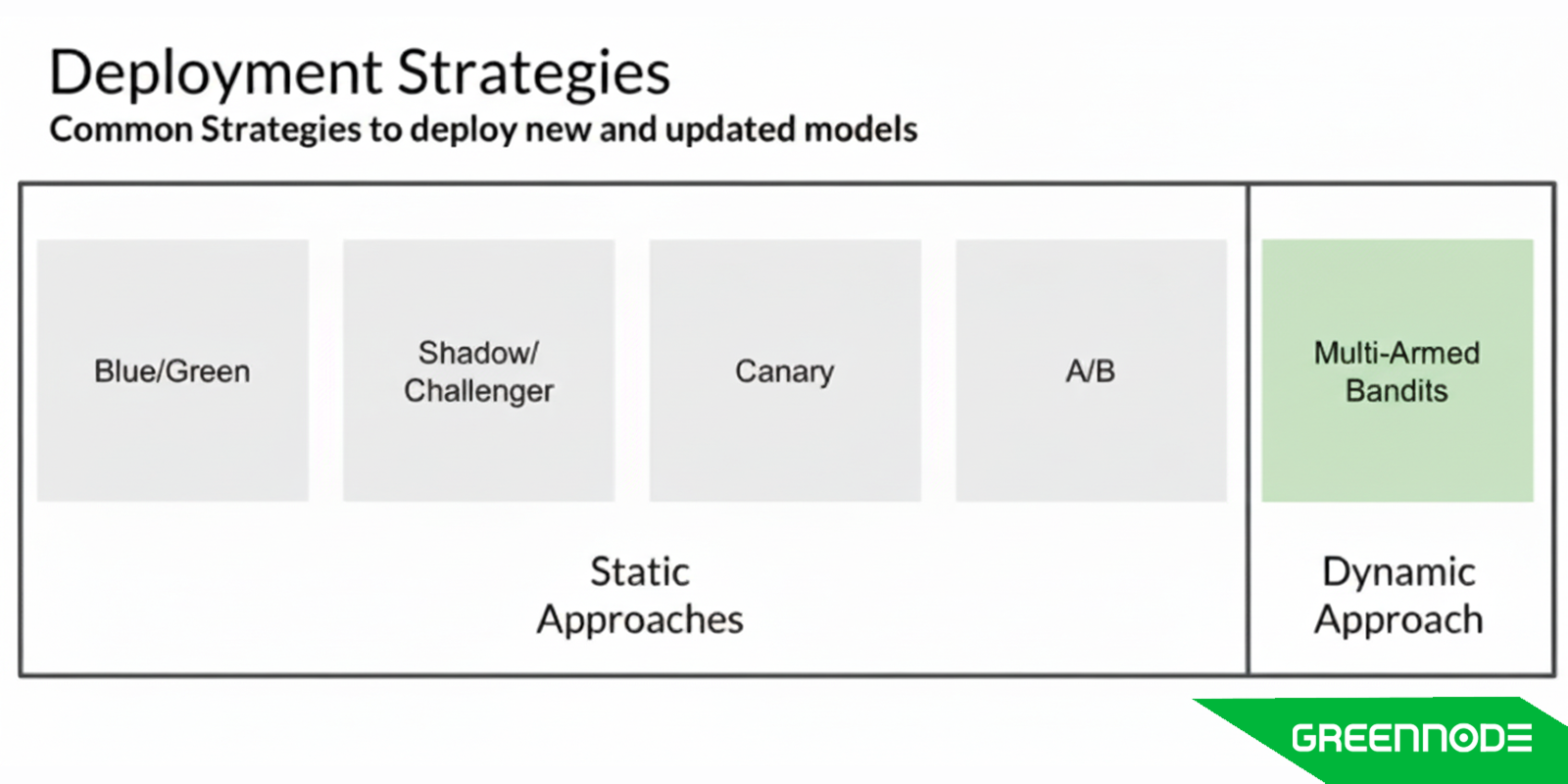

Choose a Deployment Strategy

The deployment approach depends on how predictions need to be served. There are three main strategies:

- Batch inference: The model processes large volumes of data at scheduled intervals. Common in analytics or reporting systems (e.g., weekly churn prediction).

- Online (real-time) inference: The model serves predictions on demand via APIs or web services, an approach suited to fraud detection, recommendation systems, and chatbots.

- Edge deployment: The model runs locally on devices such as smartphones, IoT sensors, or embedded systems, reducing latency and bandwidth usage.

Selecting the right approach depends on data velocity, latency requirements, and cost constraints.

Integrate With an Application or API

After packaging, the model needs to be connected to the application that will use its predictions. Typically, this is done through an API (Application Programming Interface). Model servers such as TensorFlow Serving and TorchServe, or web frameworks such as FastAPI, can expose models as REST endpoints.

This integration enables the application to send input data (such as a user’s profile or transaction details) and receive predictions (like fraud probability or product recommendations) in real time. For large-scale systems, this API layer often sits behind a load balancer or gateway to ensure scalability and fault tolerance.
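The request/response pattern can be sketched with Python’s standard-library `http.server`, standing in for frameworks like FastAPI or TorchServe. The `/predict` route, the JSON field names, and the toy logistic scoring weights are hypothetical; the prediction logic lives in a plain function so it can be tested without starting a server.

```python
import json
import math
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical parameters for a toy fraud scorer (logistic regression).
WEIGHTS = {"amount": 0.01, "is_foreign": 1.5}
BIAS = -3.0

def handle_predict(body):
    """Parse a JSON request body and return (HTTP status, response dict)."""
    try:
        features = json.loads(body)
        z = BIAS + sum(w * float(features[name]) for name, w in WEIGHTS.items())
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected JSON with numeric 'amount' and 'is_foreign'"}
    z = max(min(z, 50.0), -50.0)  # clamp so math.exp stays in range
    return 200, {"fraud_probability": 1.0 / (1.0 + math.exp(-z))}

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def run_server(host="127.0.0.1", port=8000):
    """Blocking server; call this from your own entry point."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

After calling `run_server()`, a request such as `curl -X POST localhost:8000/predict -d '{"amount": 250, "is_foreign": 1}'` should return a JSON body containing `fraud_probability`. In production, this process would sit behind the load balancer or gateway described above.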

Monitor Model Performance in Production

Models can degrade over time as data patterns shift, a phenomenon known as model drift. To maintain accuracy and reliability, organizations must continuously monitor key metrics such as prediction accuracy, latency, and resource utilization.

Tools like Evidently AI, WhyLabs, and Prometheus can track model health and alert teams when performance declines. Regular retraining pipelines, often orchestrated with Kubeflow, Airflow, or Prefect, keep models aligned with current data trends.
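One common way to quantify drift in a single input feature is the Population Stability Index (PSI), which compares the binned distribution seen at training time with the distribution seen in production: PSI = Σ (actualᵢ − expectedᵢ) · ln(actualᵢ / expectedᵢ). The bin edges, the small `eps` for empty bins, and the 0.2 alert threshold below are illustrative conventions rather than fixed standards; monitoring tools typically offer more complete drift reports.

```python
import math
from bisect import bisect_right

def bin_fractions(values, edges):
    """Fraction of values in each bin defined by sorted inner edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]

def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI = sum((a - e) * ln(a / e)) over bins; eps avoids log(0)."""
    e_frac = bin_fractions(expected, edges)
    a_frac = bin_fractions(actual, edges)
    return sum((a - e) * math.log((a + eps) / (e + eps))
               for e, a in zip(e_frac, a_frac))

# Hypothetical transaction amounts at training time vs. in production.
training_amounts = [10, 20, 30, 40, 50, 60, 70, 80]
production_amounts = [60, 70, 80, 90, 100, 110, 120, 130]
edges = [25, 50, 75]  # bins: below 25, [25, 50), [50, 75), 75 and above
psi = population_stability_index(training_amounts, production_amounts, edges)
if psi > 0.2:  # a commonly used rule-of-thumb threshold
    print(f"Drift alert: PSI={psi:.2f}")
```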

Automate with MLOps Pipelines

To scale deployment efficiently, many organizations adopt MLOps (Machine Learning Operations), a discipline that combines DevOps practices with machine learning workflows. MLOps enables automation across the entire lifecycle: data preprocessing, model training, validation, deployment, and monitoring.
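At its core, an automated pipeline chains these stages and refuses to promote a model that fails validation. The sketch below expresses that idea as plain Python functions; the stage names, the 0.9 accuracy gate, the toy threshold “model”, and the in-memory `registry` dict are hypothetical stand-ins for steps an orchestrator such as Kubeflow or Airflow would run separately.

```python
def preprocess(raw_rows):
    # Drop rows with missing values (a stand-in for real cleaning steps).
    return [row for row in raw_rows if None not in row]

def train(rows):
    # Toy "model": predict label 1 when the feature exceeds the training mean.
    threshold = sum(x for x, _ in rows) / len(rows)
    return {"threshold": threshold}

def evaluate(model, rows):
    correct = sum((x > model["threshold"]) == bool(y) for x, y in rows)
    return correct / len(rows)

def run_pipeline(train_rows, validation_rows, registry, min_accuracy=0.9):
    """Preprocess -> train -> validate -> deploy only if the gate passes."""
    model = train(preprocess(train_rows))
    accuracy = evaluate(model, preprocess(validation_rows))
    if accuracy >= min_accuracy:
        registry["production"] = model  # "deploy" by promoting the model
        return True, accuracy
    return False, accuracy

registry = {}
train_rows = [(1.0, 0), (2.0, 0), (None, 1), (8.0, 1), (9.0, 1)]
validation_rows = [(1.5, 0), (8.5, 1)]
print(run_pipeline(train_rows, validation_rows, registry))  # (True, 1.0)
```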

Ensure Governance, Security, and Compliance

As machine learning models become central to business operations, governance and compliance are critical.

Deployed models should adhere to organizational policies and regulatory frameworks like GDPR, HIPAA, or Vietnam’s PDPD.

Best practices include:

- Enforcing role-based access control (RBAC) and audit logs.

- Securing data pipelines with encryption in transit and at rest.

- Implementing bias detection and explainability tools to ensure ethical AI decisions.

Proper governance not only protects sensitive data but also builds trust in AI-driven outcomes.
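Role-based access control and audit logging can be illustrated together: every request is checked against a role’s permissions and recorded, whether it is allowed or denied. The roles, permission names, and log format here are hypothetical; production systems typically delegate this to an identity provider and a centralized, append-only logging service.

```python
import json
import time

# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"predict"},
    "ml_engineer": {"predict", "deploy_model"},
}
AUDIT_LOG = []  # in practice, an external append-only store

def authorize(user, role, action):
    """Check RBAC permissions and record the decision in the audit log."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append(json.dumps({
        "timestamp": time.time(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    }))
    return allowed

print(authorize("alice", "analyst", "predict"))       # True
print(authorize("alice", "analyst", "deploy_model"))  # False
print(len(AUDIT_LOG))                                 # 2
```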

Deploying machine learning models is the stage where innovation meets impact. It involves packaging, serving, monitoring, and scaling models so they deliver consistent results in real-world conditions. Whether you deploy via APIs, batch pipelines, or edge devices, success depends on reliability, automation, and continuous monitoring.

Future Trends in Machine Learning Models

The evolution of machine learning models continues to accelerate as new techniques, tools, and architectures push the boundaries of what AI systems can achieve. In 2025 and beyond, the focus is shifting from building isolated models toward developing scalable, adaptive, and responsible AI systems that learn continuously and operate across diverse environments. Below are some of the most significant trends shaping the next generation of machine learning.

Foundation and Pre-Trained Models

Large pre-trained models, often referred to as foundation models, are redefining the landscape of machine learning. Instead of training from scratch, developers increasingly fine-tune massive pre-trained models for specific domains or languages. This approach can greatly reduce training costs and deliver strong performance with relatively little labeled data.

Organizations are increasingly adopting these foundation models as the backbone of their AI systems, using them for everything from document summarization to multi-modal reasoning. Expect to see greater specialization of such models across industries such as finance, healthcare, education, and cybersecurity, where domain adaptation becomes a key differentiator.

AutoML and Low-Code Model Development

The rise of Automated Machine Learning (AutoML) and low-code/no-code tools is democratizing access to model creation. By automating feature engineering, hyperparameter tuning, and model selection, AutoML reduces development time and expands AI adoption across small and mid-sized organizations. As a result, machine learning is becoming less about coding expertise and more about problem-solving, making data-driven innovation accessible to more business functions.
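Hyperparameter tuning, one of the steps AutoML tools automate, can be sketched as a simple grid search: train with each candidate setting, score it on held-out validation data, and keep the best. Here the model is a one-dimensional k-nearest-neighbors classifier and the hyperparameter is `k`; the data are made up, and real AutoML systems also search over model types and features, often with smarter strategies than an exhaustive grid.

```python
from collections import Counter

def knn_predict(train, x, k):
    """Majority label among the k training points closest to x (1-D feature)."""
    neighbors = sorted(train, key=lambda item: abs(item[0] - x))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]

def grid_search_k(train, validation, candidate_ks):
    """Score each k on validation data; return the best k and all scores."""
    scores = {}
    for k in candidate_ks:
        correct = sum(knn_predict(train, x, k) == y for x, y in validation)
        scores[k] = correct / len(validation)
    best_k = max(scores, key=scores.get)  # the first k wins ties
    return best_k, scores

# Toy data: (2.6, 1) is a noisy point that misleads k=1.
train = [(1.0, 0), (2.0, 0), (3.0, 0), (2.6, 1), (6.0, 1), (7.0, 1), (8.0, 1)]
validation = [(2.5, 0), (7.5, 1)]
best_k, scores = grid_search_k(train, validation, candidate_ks=[1, 3, 5])
print(best_k, scores)  # 3 {1: 0.5, 3: 1.0, 5: 1.0}
```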

Edge and Federated Learning

As connected devices proliferate, more machine learning models are being deployed at the edge: directly on smartphones, sensors, and IoT devices. Edge AI enables real-time inference with minimal latency, powering applications such as autonomous vehicles, industrial monitoring, and healthcare diagnostics.

In parallel, federated learning allows models to train collaboratively across distributed data sources without centralizing sensitive information. This enhances privacy and compliance while enabling organizations to leverage decentralized datasets for smarter, more secure AI.

Together, these trends make machine learning faster, more efficient, and privacy-aware, a necessity in data-regulated environments.
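The aggregation step at the heart of Federated Averaging (FedAvg), one widely used federated learning algorithm, fits in a few lines: each client trains locally and shares only its updated parameters, and the server averages them, weighted by how many training examples each client holds. The parameter values and client names below are made up, and the local training step is omitted.

```python
def federated_average(client_updates):
    """client_updates: list of (parameters, num_examples) from each client.

    Returns the example-weighted average of the parameter vectors. Raw
    training data never leaves the clients; only these parameters do.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(params[i] * n for params, n in client_updates) / total
        for i in range(dim)
    ]

# Hypothetical parameters after one round of local training at three sites.
updates = [
    ([1.0, 2.0], 100),  # hospital A
    ([3.0, 4.0], 300),  # hospital B
    ([2.0, 0.0], 100),  # hospital C
]
print(federated_average(updates))  # [2.4, 2.8]
```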

Explainable and Responsible AI

As machine learning drives critical business and social decisions, explainability and ethics are no longer optional. Future models will need to be interpretable, allowing stakeholders to understand why a model made a prediction.

Techniques such as SHAP, LIME, and integrated gradients are becoming standard tools for model transparency. Simultaneously, organizations are integrating fairness audits, bias detection, and governance frameworks into their ML pipelines to meet compliance standards and build public trust.
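SHAP and LIME ship as their own libraries; as a dependency-free illustration of the same goal, the sketch below computes permutation feature importance, a different and simpler model-agnostic technique: shuffle one feature at a time and measure how much accuracy drops. The toy credit model, the data, and the function names are hypothetical.

```python
import random

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(model, rows, labels, n_features, n_repeats=10, seed=0):
    """Average accuracy drop when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def income_model(row):
    # Hypothetical model: approve (1) when income > 50; feature 1 is ignored.
    return int(row[0] > 50)

rows = [(20, 1), (30, 9), (40, 3), (60, 7), (70, 2), (80, 5)]
labels = [0, 0, 0, 1, 1, 1]
print(permutation_importance(income_model, rows, labels, n_features=2))
```

Because `income_model` never reads feature 1, its importance comes out as exactly 0.0, while shuffling income lowers accuracy and yields a positive score.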

Responsible AI will be a competitive advantage, as customers and regulators demand more transparency in automated decision-making.

Multi-Modal and Continual Learning Models

Traditional models are often trained for a single task or data type, but the next wave of machine learning models is designed to learn from multiple modalities, such as text, images, video, and audio, simultaneously. Multi-modal architectures such as CLIP or Gemini enable richer contextual understanding and more flexible problem-solving.

At the same time, continual learning (or lifelong learning) aims to help models learn new information without forgetting previous knowledge. If successful, this would make future AI systems more adaptive and resilient, able to build on what they know over the long term in a way closer to human learning.

FAQs About Machine Learning Models

1. What defines a machine learning model?

A machine learning model is a mathematical system that learns patterns from data and uses those patterns to make predictions or decisions without explicit programming. Once trained on historical data, the model can generalize to new, unseen data: identifying trends, classifying information, or forecasting outcomes.

2. How many types of machine learning models are there?

Machine learning models are generally divided into five main categories:

- Supervised learning models – trained with labeled data for prediction or classification tasks.

- Unsupervised learning models – uncover patterns or clusters in unlabeled data.

- Reinforcement learning models – learn through trial and reward to optimize decisions.

- Deep learning models – use neural networks to handle complex, high-dimensional data.

- Ensemble or hybrid models – combine multiple models to improve accuracy and robustness.

Each type serves different goals depending on the data, problem complexity, and desired outcomes.

3. What deployment method is best for real-time inference?

For real-time inference, the best approach is usually online or streaming deployment, where the machine learning model is hosted as an API or microservice that responds to each new input as it arrives. Tools such as TensorFlow Serving, TorchServe, FastAPI, or Amazon SageMaker endpoints enable low-latency predictions.

In contrast, batch deployment is better suited for periodic, large-volume processing where real-time responses aren’t required.

4. How can I measure if my model is performing well?

Model performance is measured using evaluation metrics tailored to the task:

- For classification, use accuracy, precision, recall, and F1 score.

- For regression, track mean absolute error (MAE), mean squared error (MSE), or R² score.

- For ranking or recommendation systems, measure AUC-ROC or precision@k.
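Several of these metrics reduce to short formulas. The sketch below computes the classification metrics, the regression metrics, and precision@k in plain Python, using made-up labels and predictions; libraries such as scikit-learn provide tested implementations for real projects.

```python
def classification_metrics(y_true, y_pred, positive=1):
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def regression_metrics(y_true, y_pred):
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    r2 = 1 - sum(e * e for e in errors) / ss_tot  # R² = 1 - SS_res / SS_tot
    return {"mae": mae, "mse": mse, "r2": r2}

def precision_at_k(recommended, relevant, k):
    """Share of the top-k recommended items the user actually found relevant."""
    return sum(item in relevant for item in recommended[:k]) / k

print(classification_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))
print(regression_metrics([3.0, 5.0, 7.0], [2.0, 5.0, 8.0]))
print(precision_at_k(["a", "b", "c"], {"a", "c"}, k=2))  # 0.5
```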

Beyond metrics, continuous monitoring in production is vital: checking for model drift, latency, and data quality helps ensure the model remains reliable over time.