NVIDIA's H100 GPUs, heralding a groundbreaking era in artificial intelligence (AI) with unparalleled speed and capabilities, are readily accessible to customers across multiple platforms.

The H100 serves as the evolutionary successor to NVIDIA's A100 GPUs, which have played a pivotal role in advancing the development of modern large language models. According to NVIDIA, the H100 offers AI training speeds up to nine times faster and boasts an incredible thirtyfold improvement in inference performance when compared to the A100.

This new GPU comes equipped with an integrated Transformer Engine, a pivotal component in the advancement of generative AI models like GPT-3. Additionally, it incorporates DPX instructions, which accelerate dynamic programming algorithms.

All the major OEMs now have H100 server solutions for accelerating the training of large language models, and all the leading cloud providers have been actively introducing their H100 instances. In this blog, we will dive deeper into the NVIDIA® H100 GPU and explore how it can help accelerate AI across the cloud.

Why AI Workloads Need Faster Compute

AI workloads, especially those involving large language models (LLMs) and generative systems, are growing in size, complexity, and real-time demand. As these models scale into hundreds of billions of parameters and increasingly power interactive applications, the compute requirements escalate accordingly. Hyperscale training tasks now demand hardware that can handle massive parallelism, high memory bandwidth, and low latency, capabilities that are beyond traditional systems.

This rising demand has driven GPU acceleration to the forefront of AI infrastructure strategy. Cloud providers are rapidly adopting accelerators like NVIDIA’s H100 to serve generative AI and LLM training workloads at scale. For instance, NVIDIA officially markets the H100 as delivering “industry-leading conversational AI, speeding up large language models (LLMs) by 30×” over prior generations. Moreover, in large-scale training benchmarks run on 512 H100 GPUs, per-GPU throughput has reached 904 TFLOPS, and performance has improved by 27% year over year.

Because of that, the H100 currently occupies a strong position as the workhorse GPU for AI across the cloud. Leading cloud and AI firms have integrated H100s into their offerings to meet the explosive compute needs of generative platforms and advanced model training pipelines.

What Makes the NVIDIA H100 GPU Unique?

The NVIDIA H100 is not just another GPU; it represents a generational leap in accelerated computing. Built on the Hopper architecture, it is engineered to meet the demands of today’s largest AI workloads, from large language models (LLMs) to exascale high-performance computing (HPC).

Hopper Architecture Overview

At the core of the H100 lies NVIDIA’s Hopper architecture, designed specifically for AI and HPC workloads. Hopper introduces features such as Transformer Engine technology, enabling mixed FP8 and FP16 precision that accelerates training and inference for transformer-based models. Compared to the previous Ampere generation, Hopper delivers significant efficiency gains, making it the de facto choice for generative AI, LLM training, and scientific simulations at scale.
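
To make the FP8 idea more concrete, here is a minimal pure-Python sketch of scaled rounding to E4M3, one of the FP8 encodings (4 exponent bits, 3 mantissa bits, largest value 448). It is not the Transformer Engine API: the helper names `round_to_e4m3` and `fp8_roundtrip` and the simple per-tensor scaling rule are assumptions made for illustration, and the real hardware and library handle scaling factors internally.

```python
import math

E4M3_MAX = 448.0          # largest finite E4M3 value
E4M3_MIN_EXP = -6         # exponent of the smallest normal E4M3 value
E4M3_MANTISSA_BITS = 3

def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E4M3_MAX)              # saturate instead of overflowing
    _, e = math.frexp(mag)                   # mag = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)           # clamp into the subnormal range
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS) # spacing of representable values
    return sign * round(mag / step) * step

def fp8_roundtrip(values):
    """Scale values so the largest magnitude maps to 448, round, then rescale.

    Hypothetical per-tensor scaling, shown only to illustrate the idea.
    """
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return list(values)
    scale = E4M3_MAX / amax
    return [round_to_e4m3(v * scale) / scale for v in values]

if __name__ == "__main__":
    original = [0.5, -2.0, 0.01]
    for before, after in zip(original, fp8_roundtrip(original)):
        print(f"{before:+.5f} -> {after:+.5f}")
```

The largest values survive the round trip exactly, while small values pick up a rounding error of a few percent. That trade-off is why FP8 is typically paired with higher-precision formats such as FP16 for the parts of a model that are more sensitive to error.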

CUDA and Tensor Cores for Deep Learning

The H100 includes over 14,000 CUDA cores and 4th-generation Tensor Cores optimized for deep learning. These Tensor Cores enable specialized matrix operations critical for neural networks, offering massive parallelism for both dense training and real-time inference. Benchmarks show that the H100 can deliver up to 30x speedups on LLM training compared to CPUs, enabling enterprises to cut development time from months to days.

High Bandwidth Memory (HBM3) – 3.35 TB/s

Memory bandwidth is often a bottleneck in training and inference. The H100 integrates 80 GB of HBM3 memory with 3.35 TB/s bandwidth, one of the highest in the industry at launch. This enables faster data transfer between memory and processing units, allowing for training on larger datasets and supporting batch sizes that were previously impractical. The high throughput is especially critical for workloads like climate modeling, DNA sequencing, and LLM fine-tuning.
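
As a rough back-of-the-envelope illustration of what 3.35 TB/s means in practice, the sketch below estimates the minimum time to read a block of data from HBM3 once. The 80 GB and 3.35 TB/s figures come from the text above; the 70 GB working set is a hypothetical value chosen only for the example, and the calculation ignores compute time, caching, and achievable (as opposed to peak) bandwidth.

```python
HBM3_BANDWIDTH_BYTES_PER_S = 3.35e12   # peak bandwidth quoted above
HBM3_CAPACITY_BYTES = 80e9             # memory capacity quoted above

def min_read_time_s(num_bytes, bandwidth=HBM3_BANDWIDTH_BYTES_PER_S):
    """Lower bound on the time to stream num_bytes from memory once."""
    return num_bytes / bandwidth

def max_passes_per_second(num_bytes, bandwidth=HBM3_BANDWIDTH_BYTES_PER_S):
    """Upper bound on how many full passes over num_bytes fit in one second."""
    return bandwidth / num_bytes

# Time to sweep the entire 80 GB of memory once, in milliseconds.
full_sweep_ms = min_read_time_s(HBM3_CAPACITY_BYTES) * 1e3

# Hypothetical 70 GB working set (for example, model weights) read once per step.
HYPOTHETICAL_WORKING_SET_BYTES = 70e9
passes = max_passes_per_second(HYPOTHETICAL_WORKING_SET_BYTES)

if __name__ == "__main__":
    print(f"Full 80 GB sweep: at least {full_sweep_ms:.1f} ms")
    print(f"70 GB working set: at most {passes:.1f} passes per second")
```

Under these assumptions a full sweep of memory takes roughly 24 ms, which is why workloads that must touch every byte on each step are bounded by bandwidth rather than by raw compute.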

Security and Scalability Features

Beyond raw performance, the NVIDIA H100 GPU incorporates enterprise-grade features designed for secure and scalable deployments:

Confidential Computing: Support for trusted execution environments (TEEs) ensures that sensitive data remains protected during processing, a critical requirement in healthcare and finance.

Multi-Instance GPU (MIG): The H100 can be partitioned into multiple secure GPU instances, allowing organizations to run multiple workloads simultaneously with strong isolation.

NVLink and NVSwitch: These technologies provide high-bandwidth interconnects, enabling efficient scaling across multiple GPUs within a server or across large GPU clusters.

These features make the H100 uniquely capable of handling everything from isolated AI inference tasks to distributed training at supercomputing scale, all while meeting enterprise requirements for security and compliance.


AI Workloads Accelerated by H100 GPUs

Advancements in GPU-Accelerated Computing

Harness unparalleled performance, scalability, and security across a spectrum of workloads utilizing the NVIDIA® H100 GPU. By employing the NVIDIA NVLink® Switch System, it becomes possible to interconnect as many as 256 H100 GPUs, significantly boosting the acceleration of exascale workloads.

Additionally, this GPU boasts a dedicated Transformer Engine designed to tackle trillion-parameter language models. These groundbreaking technological advancements of the H100 can catapult the processing speed of large language models (LLMs) to an astounding 30 times that of the previous generation, setting new standards for conversational AI.

Boosting Large Language Model (LLM) Inference Speed

The PCIe-based H100 NVL with NVLink bridge, tailored for large language models (LLMs) of up to 175 billion parameters, takes advantage of the Transformer Engine, NVLink, and an ample 188GB HBM3 memory. This configuration not only ensures peak performance but also facilitates seamless scalability within any data center, effectively introducing LLMs into the mainstream.
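
A quick sizing sketch shows why memory capacity and numeric precision matter for a model of this size. The 175-billion-parameter count and the 188GB figure come from the paragraph above; the bytes-per-parameter table and the helper names are assumptions for illustration, and the estimate covers weights only (no KV cache, activations, or framework overhead).

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}  # storage size per weight
H100_NVL_MEMORY_GB = 188                            # capacity quoted above

def weight_footprint_gb(num_params, precision):
    """Memory needed to hold the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

def weights_fit(num_params, precision, capacity_gb=H100_NVL_MEMORY_GB):
    """True if the weights alone fit within the given memory capacity."""
    return weight_footprint_gb(num_params, precision) <= capacity_gb

if __name__ == "__main__":
    params = 175_000_000_000
    for precision in ("fp32", "fp16", "fp8"):
        size = weight_footprint_gb(params, precision)
        verdict = "fits" if weights_fit(params, precision) else "does not fit"
        print(f"{precision}: {size:.0f} GB, {verdict} in {H100_NVL_MEMORY_GB} GB")
```

Under these simplified assumptions, the FP16 weights of a 175B-parameter model need about 350 GB, while the FP8 weights need about 175 GB and fit within 188GB. Real deployments also need headroom for the KV cache and activations.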

Servers equipped with H100 NVL GPUs deliver an exceptional 12-fold boost in GPT-175B model performance compared to NVIDIA DGX™ A100 systems, all the while maintaining low latency in power-restricted data center environments.

Secure Workload Acceleration from Enterprise to Exascale

Transformational AI Training

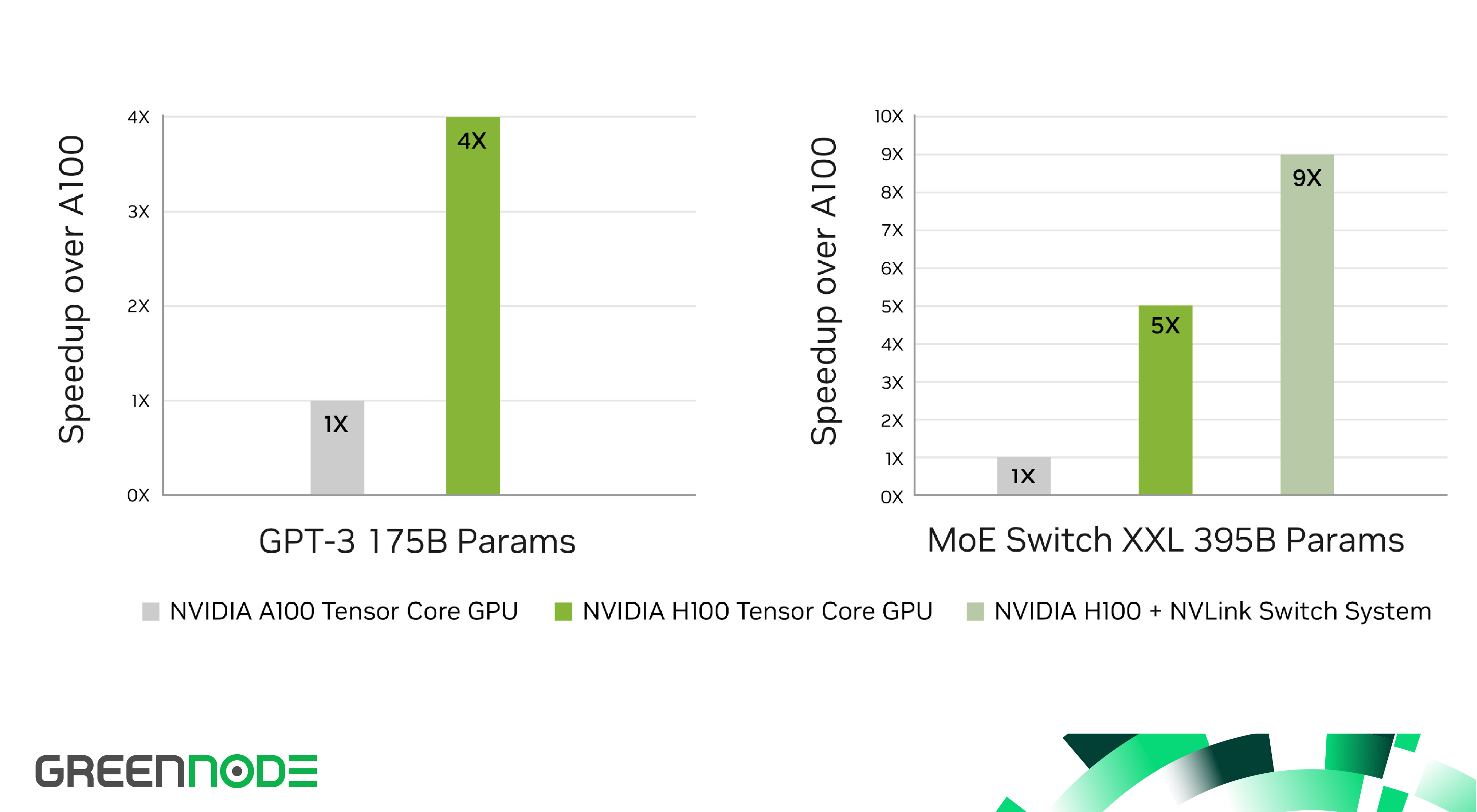

The H100 boasts fourth-generation Tensor Cores and a Transformer Engine with FP8 precision, enabling training speeds up to 4 times faster than the previous generation for GPT-3 (175B) models. This speed boost is achieved through the combined power of fourth-generation NVLink, offering a GPU-to-GPU interconnect speed of 900 gigabytes per second (GB/s), NDR Quantum-2 InfiniBand networking that accelerates inter-GPU communication across nodes, PCIe Gen5, and NVIDIA Magnum IO™ software, providing efficient scalability from small enterprise setups to extensive, unified GPU clusters.

Scaling up H100 GPU deployment in data centers yields exceptional performance, democratizing access to the next generation of exascale high-performance computing (HPC) and trillion-parameter AI for researchers across the board.

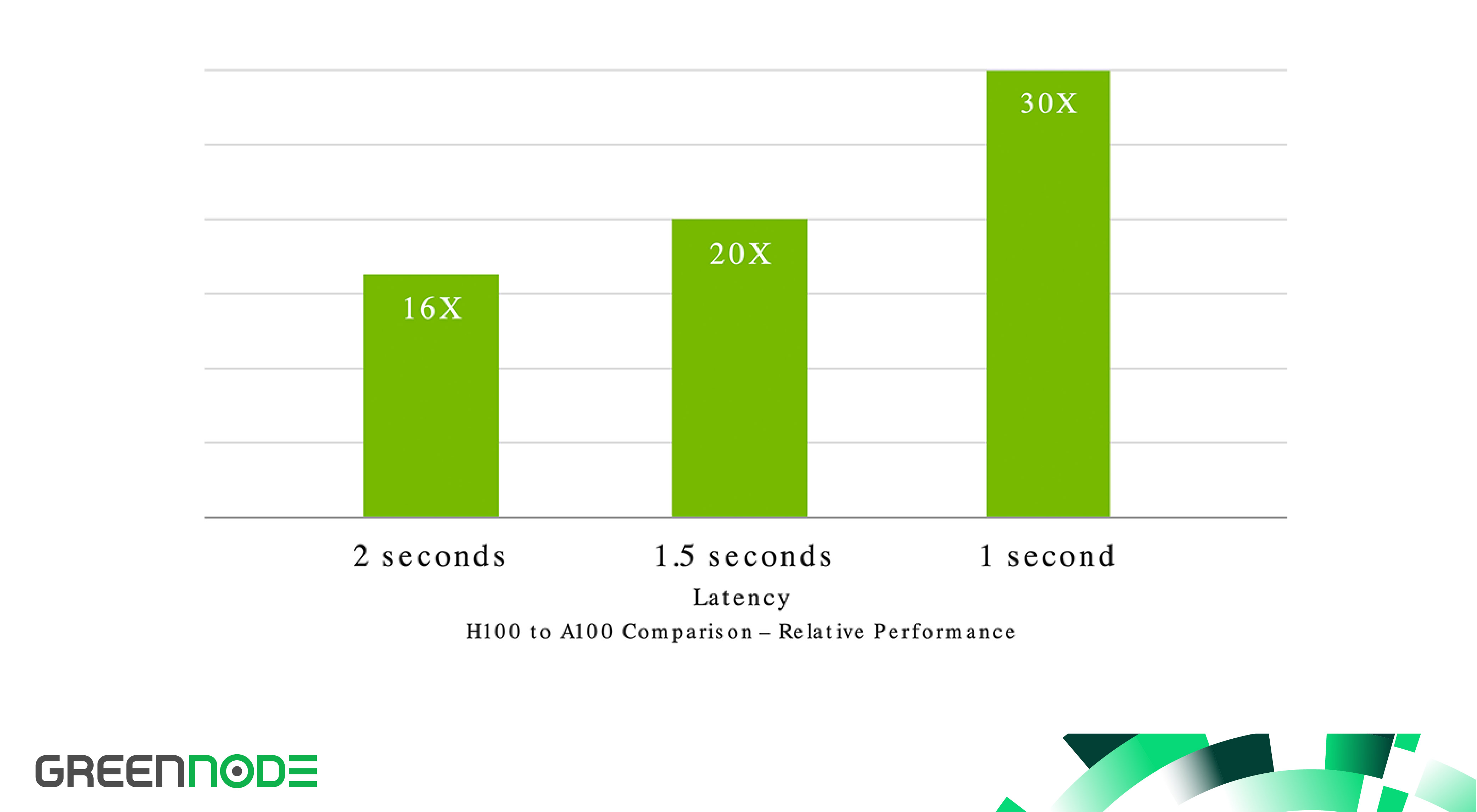

Real-Time Deep Learning Inference

AI addresses a diverse range of business challenges, employing a wide variety of neural networks. A superior AI inference accelerator should not only provide top-tier performance but also the flexibility to expedite these networks.

The NVIDIA H100 GPU builds upon NVIDIA's dominant position in the inference market with numerous innovations, boosting inference speeds by up to 30X while keeping latency low. The fourth-generation Tensor Cores speed up all precisions, spanning FP64, TF32, FP32, FP16, INT8, and now FP8, reducing memory utilization and boosting performance, all while preserving the accuracy required for Large Language Models (LLMs).

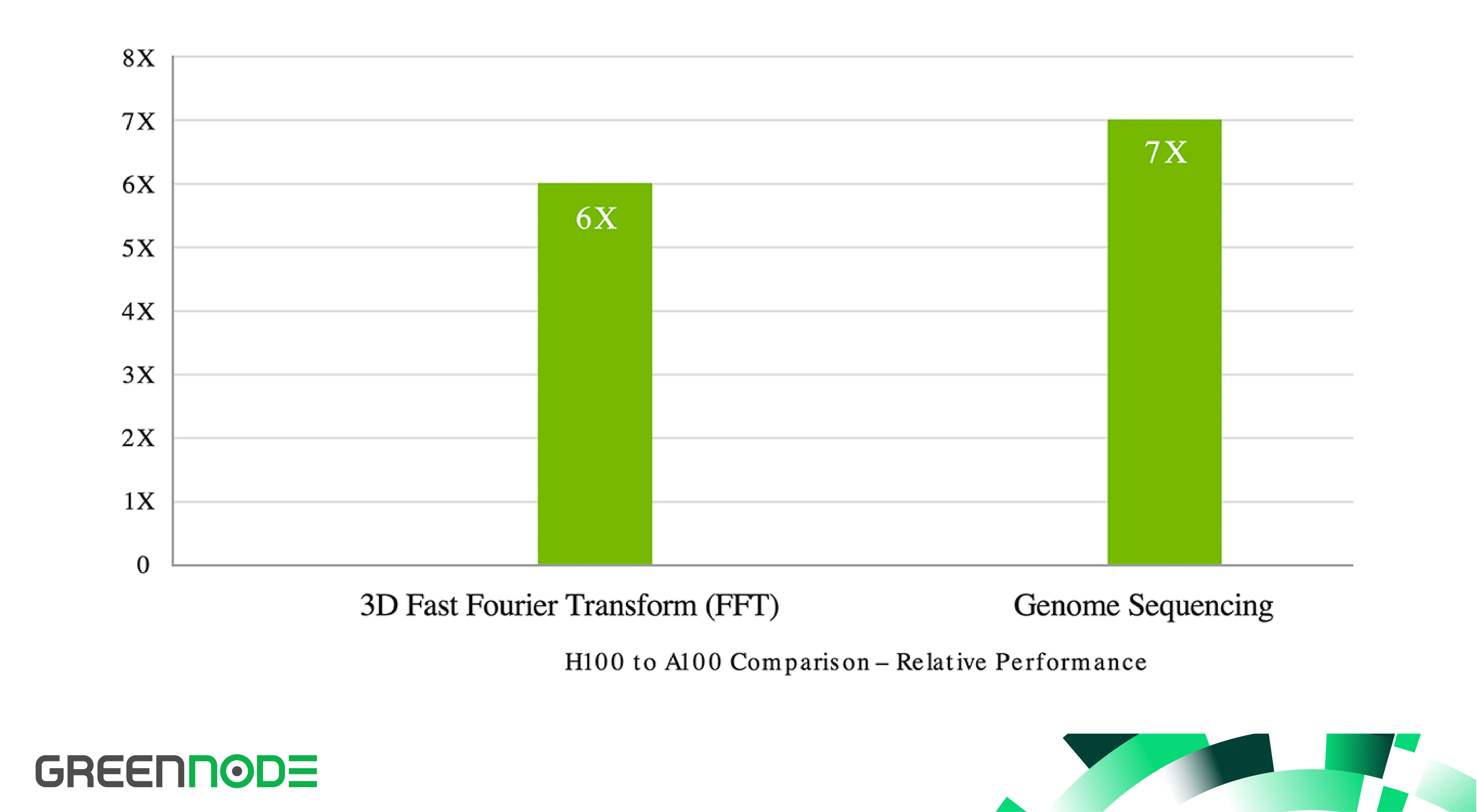

Exascale High-Performance Computing

The NVIDIA data center platform consistently outpaces Moore's law in delivering enhanced performance. The revolutionary AI capabilities of the H100 further amplify the fusion of High-Performance Computing (HPC) and AI, expediting the time to discovery for scientists and researchers tackling some of the world's most pressing challenges.

The NVIDIA H100 GPU achieves a threefold increase in floating-point operations per second (FLOPS) for double-precision Tensor Cores, resulting in an impressive 60 teraflops of FP64 computing power for HPC. AI-integrated HPC applications can also take advantage of H100's TF32 precision, achieving a staggering one petaflop throughput for single-precision matrix-multiply operations without any need for code modifications.

Additionally, the H100 introduces new DPX instructions that yield a 7-fold performance improvement over the A100 and provide a remarkable 40-fold speed boost over CPUs for dynamic programming algorithms such as Smith-Waterman, used in DNA sequence alignment, and protein alignment for predicting protein structures.
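
For readers unfamiliar with Smith-Waterman, the following is a minimal CPU-only Python sketch of its scoring recurrence. It does not use DPX or any GPU feature; it only shows the add-then-max pattern in the inner loop that this class of algorithm relies on. The scoring values (`match=2`, `mismatch=-1`, `gap=-2`) and the function name are illustrative assumptions.

```python
def smith_waterman_score(a, b, match=2, mismatch=-1, gap=-2):
    """Return the best local alignment score of sequences a and b.

    Uses a linear gap penalty and keeps only two rows of the DP table.
    """
    prev = [0] * (len(b) + 1)   # scores for the previous row of the table
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0]              # column 0 is always 0 for local alignment
        for j in range(1, len(b) + 1):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            cell = max(
                0,                   # start a new local alignment here
                prev[j - 1] + sub,   # align a[i-1] with b[j-1]
                prev[j] + gap,       # gap in b
                curr[j - 1] + gap,   # gap in a
            )
            curr.append(cell)
            best = max(best, cell)
        prev = curr
    return best

if __name__ == "__main__":
    print(smith_waterman_score("TTACGTTT", "GGACGTGG"))
```

Every cell depends on three neighbours and a handful of additions followed by a maximum, and a full alignment fills a table with one cell per pair of positions. That repetitive structure is what makes hardware support for dynamic programming attractive for genomics workloads.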

Accelerated Data Analytics

Data analytics often consumes a significant portion of the time dedicated to AI application development. Large datasets distributed across numerous servers can strain scale-out solutions reliant on commodity CPU-only servers due to their limited scalability in terms of computing performance.

In contrast, accelerated servers equipped with the H100 deliver robust computational capabilities, with more than 3 terabytes per second (TB/s) of memory bandwidth per GPU and scalability through NVLink and NVSwitch™. This empowers them to efficiently handle data analytics, even when dealing with extensive datasets. When coupled with NVIDIA Quantum-2 InfiniBand, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, the NVIDIA data center platform stands out as an exceptional solution for accelerating these substantial workloads, offering high levels of performance and efficiency.

Enterprise-Ready Utilization

IT managers aim to optimize the utilization of compute resources within the data centers, both at peak and average levels. To achieve this, they often employ dynamic reconfiguration of computing resources to align them with the specific workloads in operation.

The NVIDIA H100 GPU, featuring second-generation Multi-Instance GPU (MIG) technology, maximizes GPU utilization by securely dividing it into as many as 7 distinct instances. With support for confidential computing, the H100 enables secure, end-to-end, multi-tenant utilization, making it particularly well-suited for cloud service provider (CSP) environments.

With NVIDIA H100 GPU and MIG, infrastructure managers can establish a standardized framework for their GPU-accelerated infrastructure, all while retaining the flexibility to allocate GPU resources with finer granularity. This allows them to securely provide developers with precisely the right amount of accelerated computing power and optimize the utilization of all available GPU resources.

Integrated Confidential Computing

Conventional confidential computing solutions are predominantly CPU-based, posing limitations for compute-intensive workloads such as AI and HPC. NVIDIA Confidential Computing is a built-in security feature of the NVIDIA Hopper™ architecture, making the H100 the world's first accelerator to offer confidential computing capabilities. This advancement empowers users to safeguard the confidentiality and integrity of their data and applications while harnessing the acceleration provided by H100 GPUs.

NVIDIA Confidential Computing establishes a hardware-based Trusted Execution Environment (TEE) that effectively secures and isolates the entire workload executed on a single H100 GPU, multiple H100 GPUs within a node, or individual Multi-Instance GPU (MIG) instances. GPU-accelerated applications can run without modification within this TEE, eliminating the need for partitioning. This integration enables users to combine the potent capabilities of NVIDIA's software for AI and HPC with the security provided by the hardware root of trust inherent in NVIDIA Confidential Computing.

Exceptional Performance for Large-Scale AI and HPC

The Hopper Tensor Core GPU will be the driving force behind the NVIDIA Grace Hopper CPU+GPU architecture, a purpose-built solution tailored for terabyte-scale accelerated computing. This architecture promises to deliver a remarkable 10-fold increase in performance for large-model AI and HPC workloads.

The NVIDIA Grace CPU capitalizes on the adaptability of the Arm® architecture, creating a CPU and server architecture expressly designed for accelerated computing right from its inception. Linking the Hopper GPU with the Grace CPU is achieved through NVIDIA's exceptionally swift chip-to-chip interconnect, boasting a bandwidth of 900GB/s, a substantial 7-fold improvement over PCIe Gen5. This pioneering design is poised to provide up to 30 times more aggregate system memory bandwidth to the GPU compared to current top-tier servers, all while delivering up to 10 times higher performance for applications that process terabytes of data.
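
The "7-fold" figure can be sanity-checked with simple arithmetic. In the sketch below, the 900GB/s value comes from the text above, while the 128 GB/s figure for a PCIe Gen5 x16 link (counting both directions) is an assumption based on the commonly quoted number, and the 1 TB dataset is hypothetical. Peak link rates are used throughout, so real transfers will be slower.

```python
NVLINK_C2C_GB_PER_S = 900       # chip-to-chip bandwidth quoted above
PCIE_GEN5_X16_GB_PER_S = 128    # assumption: commonly quoted bidirectional x16 figure

def transfer_seconds(gigabytes, gb_per_s):
    """Idealized time to move a payload at the peak link rate."""
    return gigabytes / gb_per_s

speedup = NVLINK_C2C_GB_PER_S / PCIE_GEN5_X16_GB_PER_S

DATASET_GB = 1000               # hypothetical 1 TB working set
c2c_time_s = transfer_seconds(DATASET_GB, NVLINK_C2C_GB_PER_S)
pcie_time_s = transfer_seconds(DATASET_GB, PCIE_GEN5_X16_GB_PER_S)

if __name__ == "__main__":
    print(f"Bandwidth ratio: {speedup:.2f}x")
    print(f"1 TB over chip-to-chip link: {c2c_time_s:.2f} s")
    print(f"1 TB over PCIe Gen5 x16:     {pcie_time_s:.2f} s")
```

With these inputs the ratio comes out at about 7, consistent with the claim, and moving a terabyte drops from roughly 8 seconds to just over 1 second at peak rates.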

What's on the horizon after the NVIDIA H100 GPU?

GPUs find utility in both model training and inference processes.

Training represents the initial phase - it's about instructing a neural network model on how to execute a task, respond to queries, or create images. Inference, on the other hand, involves the real-world application of these models in practical scenarios.

In order to facilitate the broader adoption of inference capabilities, NVIDIA has introduced its new L4 GPU. The L4 serves as a versatile accelerator for efficient video, AI, and graphics processing.

The L4 is a streamlined, single-slot GPU that can be integrated into almost any server, effectively transforming both servers and data centers into AI-powered hubs. According to NVIDIA, it delivers up to 120 times the AI-powered video performance of a conventional CPU server while using 99% less energy.

GPU servers for AI/ML provided by GreenNode

At GreenNode, we pride ourselves on being the one-stop solution for all your enterprise AI needs, spanning from infrastructure to a robust platform. Our commitment is to bridge the gap between enterprises and mainstream AI workloads by leveraging the performance of NVIDIA hardware. Explore our NVIDIA H100 GPU offerings to find what fits your business's needs.

FAQs about NVIDIA H100 GPU

1. What makes the NVIDIA H100 GPU different from other GPUs?

The NVIDIA® H100 Tensor Core GPU, powered by the Hopper architecture, is engineered for next-generation AI and high-performance computing (HPC). It introduces the Transformer Engine, which accelerates large language models (LLMs) by up to 30x compared with previous generations like the A100. With FP8 precision, fourth-generation NVLink, and enhanced Tensor Cores, the H100 delivers speed and scalability for deep learning, inference, and data analytics.

2. How does the H100 accelerate AI workloads in the cloud?

The H100 GPU excels at cloud-based AI workloads by supporting massive parallel processing and efficient multi-GPU communication through NVLink and NVSwitch. This allows distributed training of large AI models across multiple GPUs and nodes. When deployed on a GPU cloud platform like GreenNode, it gives enterprises instant access to high-performance compute without physical infrastructure — ideal for training, fine-tuning, and inferencing large models.

3. What types of AI workloads benefit most from the H100 GPU?

The NVIDIA H100 is optimized for a wide range of compute-intensive AI and ML workloads, including:

- Training large language models (LLMs) such as GPT, LLaMA, and Falcon.

- Generative AI applications, including image, video, and text generation.

- Deep learning and computer vision tasks requiring large datasets.

- Scientific simulations and HPC applications in finance, energy, and healthcare.

Its combination of compute density and efficiency makes it the leading choice for scaling enterprise AI workloads in the cloud.

4. How does the H100 improve performance for LLMs and generative AI?

The Transformer Engine in the H100 architecture automatically switches between FP8 and FP16 precision, balancing speed and accuracy during model training and inference.

This allows LLMs and generative AI models to train faster and deliver results with higher efficiency. Each GPU’s 80GB of HBM3 memory, combined with multi-GPU and multi-node scaling, lets organizations shard even trillion-parameter models across a cluster and reduce memory bottlenecks, a critical advantage for large-scale generative AI pipelines.

5. What are the benefits of running NVIDIA H100 GPUs on GreenNode Cloud?

By running the NVIDIA H100 GPU on GreenNode’s high-performance cloud platform, teams can access scalable GPU clusters purpose-built for distributed AI training.

GreenNode provides:

- Preconfigured Slurm-managed clusters for seamless scaling.

- Low-latency networking for multi-node LLM workloads.

- Real-time GPU monitoring and cost optimization tools.

- Flexible provisioning — from single-node fine-tuning to full multi-GPU AI infrastructure.

With GreenNode, enterprises gain on-demand access to the world’s fastest AI hardware without CapEx — ideal for research labs, startups, and global AI teams.