Training large AI models is a daunting task. Long runtimes, underutilized GPUs, and unpredictable infrastructure costs create major roadblocks for businesses scaling AI workloads. Without efficient orchestration, teams struggle with slow iterations, wasted compute power, and operational headaches.

Starting from our own customers’ pain points in Slurm management, we address these challenges with intelligent workload distribution and optimized resource management. Our Managed Slurm Cluster enables multi-GPU, multi-node training, so that compute cycles are spent on training rather than sitting idle. No extra dollar gets wasted.

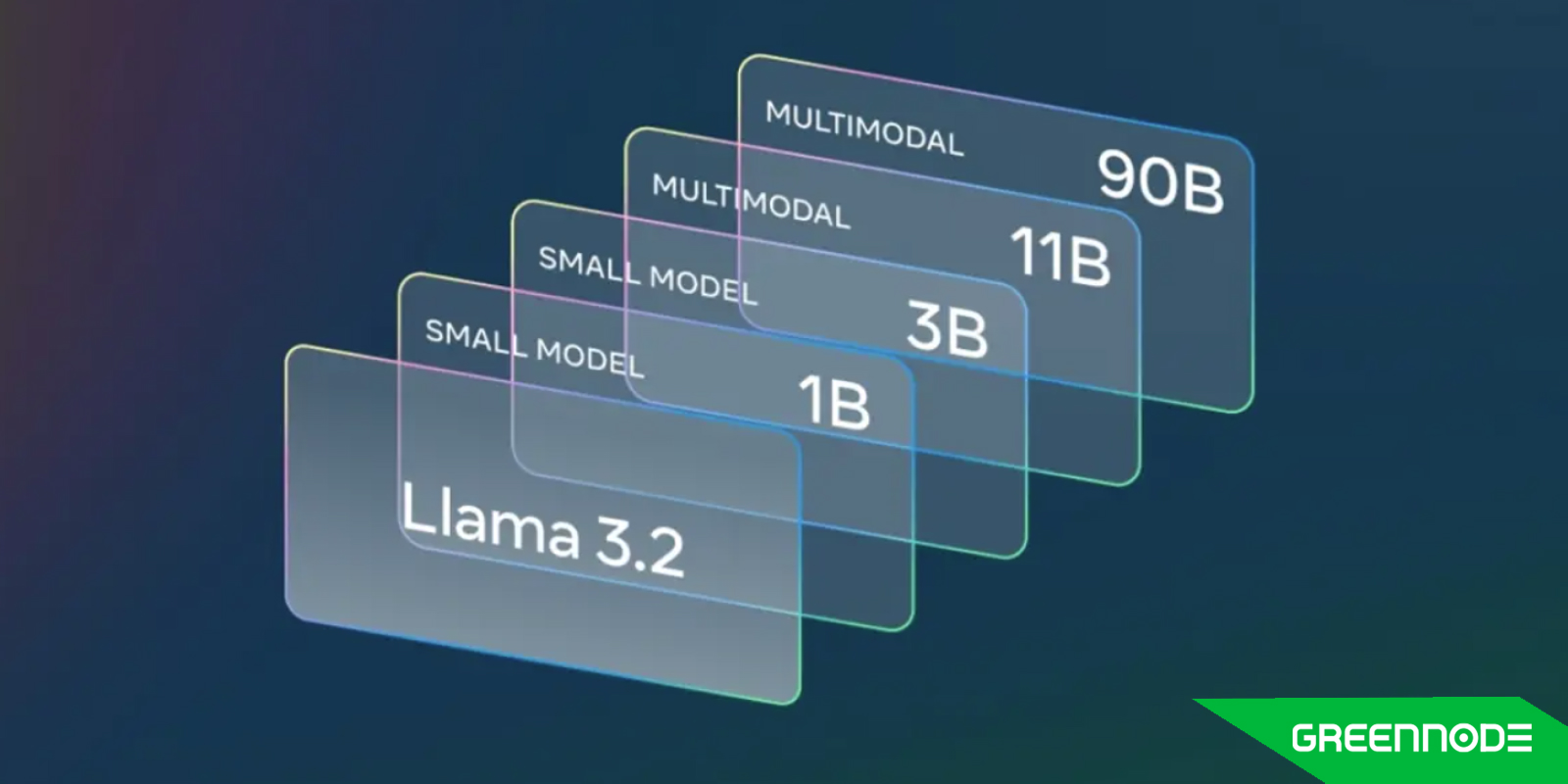

Take, for example, a recent use case where a customer fine-tuned LLaMA-3.2-1B using the Alpaca dataset on GreenNode’s AI platform. By leveraging Slurm’s resource scheduling, they:

- Cut training time by half by distributing jobs across multiple GPUs.

- Eliminated idle compute costs through dynamic scheduling.

- Ensured operational stability with automated recovery and monitoring tools.

Boosting AI Training Efficiency with GreenNode’s Pre-configured SLURM Cluster for Distributed AI Workloads

GreenNode’s Managed SLURM Cluster is purpose-built to simplify and accelerate distributed AI training—delivering a plug-and-play experience for even the most complex workflows. Traditional setups often require days of manual configuration and offer limited scalability, especially for large language models (LLMs) and multi-node training. In contrast, GreenNode’s solution is optimized from the ground up to support seamless distributed training—helping teams go from setup to training in hours, not days.

Whether you're fine-tuning a 1B+ parameter model or running a multi-GPU deep learning pipeline, our SLURM-based orchestration enables enterprise-scale training, intelligently distributing compute jobs across nodes and GPUs to maximize throughput. Customers have reported up to 2x faster training times thanks to this optimized job scheduling.
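To make a figure such as "2x faster" concrete, speedup is usually computed as the baseline wall-clock time divided by the distributed wall-clock time, and parallel efficiency as that speedup divided by the number of GPUs. The sketch below shows the arithmetic only. The timings are hypothetical placeholders, not measurements from GreenNode or its customers.

```python
def speedup(baseline_hours: float, distributed_hours: float) -> float:
    """Ratio of baseline wall-clock time to distributed wall-clock time."""
    return baseline_hours / distributed_hours


def parallel_efficiency(
    baseline_hours: float, distributed_hours: float, num_gpus: int
) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfect scaling)."""
    return speedup(baseline_hours, distributed_hours) / num_gpus


# Hypothetical placeholder timings, for illustration only.
baseline_hours = 8.0      # same job on one GPU
distributed_hours = 4.0   # same job on four GPUs
num_gpus = 4

run_speedup = speedup(baseline_hours, distributed_hours)
run_efficiency = parallel_efficiency(baseline_hours, distributed_hours, num_gpus)
print(f"speedup: {run_speedup:.1f}x, efficiency: {run_efficiency:.0%}")
```

Tracking both numbers is useful because a run can finish sooner while still leaving much of the added hardware underused.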

Every GPU cycle counts. That’s why our cost optimization features ensure that you only pay for what you use—no wasted idle time, no inefficient resource allocation. Smart queuing and real-time load balancing mean that compute resources are always working at peak utilization, translating directly into faster iteration and lower infrastructure costs.

And with live monitoring dashboards built into the platform, you’ll have real-time visibility into your system’s performance. From GPU memory usage to job status and system health, GreenNode gives you full transparency and control without the need for additional monitoring tools or DevOps overhead.

How to Set Up Distributed Training on GreenNode’s Managed SLURM Cluster

Before diving into training your AI models, it’s essential to ensure your SLURM Cluster is fully configured and ready for action.

Set up SLURM Cluster for AI Training and Inference

One of the biggest barriers to distributed AI training is the setup—especially when dealing with multi-GPU, multi-node environments. That’s where GreenNode steps in. With our Managed SLURM Cluster, getting started is not only fast but also painless. Everything is pre-configured to help you hit the ground running, whether you're training a large language model or running deep learning experiments at scale.

For a complete technical walkthrough, check out our in-depth guide here: Distributed Training: LLaMA-Factory on Managed Slurm.

Here is a brief step-by-step guide:

Provision GPU Instances on GreenNode for Distributed Training

Deploy high-performance GreenNode GPU instances that match your LLaMA fine-tuning requirements (memory, compute). These instances are preconfigured to integrate with the managed Slurm cluster, allowing you to start without deep infrastructure setup.

Access the Cluster via SSH Head Node

Log in securely to the head node using SSH. Once connected, activate the environment that includes Python, CUDA, and other dependencies necessary for LLM training. This head node will orchestrate job scheduling and coordination across the cluster.

Install LLaMA-Factory and Supporting Libraries

Clone the LLaMA-Factory repository and install the libraries it requires (e.g. PyTorch and transformers), along with anything needed to launch jobs through Slurm. Installing the same environment for every node ensures the cluster runs consistent code versions.
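One way to confirm that nodes agree on versions is a small check script run on each of them. The following is a minimal sketch using only the Python standard library. The package list is an assumption (in particular, `llamafactory` is assumed to be the installed distribution name for LLaMA-Factory), so adjust it to whatever your environment actually installs.

```python
import shutil
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Assumed package names; edit to match your environment.
PACKAGES = ["torch", "transformers", "llamafactory"]

report = installed_versions(PACKAGES)
# True only if the Slurm submission command is available on this machine.
sbatch_available = shutil.which("sbatch") is not None

for name, version in report.items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
print(f"sbatch on PATH: {sbatch_available}")
```

Comparing the printed output across the head node and the compute nodes gives a quick signal of version drift before a job is submitted.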

Prepare the YAML Training Configuration

Define a YAML configuration file that specifies the base model (e.g. LLaMA-3.2-1B), training hyperparameters, data paths, and the Alpaca fine-tuning dataset. This file controls how the distributed job executes across GPUs and nodes.
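As an illustration of what such a file can contain, the sketch below builds a flat configuration in Python and renders it as YAML text. The key names follow the style of LLaMA-Factory's published example configs as best we recall them, and every value (model ID, dataset name, hyperparameters, output path) is an illustrative assumption rather than the configuration used in the customer run. Verify the keys against the LLaMA-Factory version you install.

```python
def to_yaml(config: dict) -> str:
    """Render a flat dict of scalars as YAML 'key: value' lines."""
    lines = []
    for key, value in config.items():
        if isinstance(value, bool):
            rendered = "true" if value else "false"  # YAML booleans are lowercase
        else:
            rendered = str(value)
        lines.append(f"{key}: {rendered}")
    return "\n".join(lines) + "\n"


# Illustrative values only; all of them are assumptions.
config = {
    "model_name_or_path": "meta-llama/Llama-3.2-1B",
    "stage": "sft",
    "do_train": True,
    "finetuning_type": "lora",
    "dataset": "alpaca_en_demo",
    "template": "llama3",
    "cutoff_len": 1024,
    "output_dir": "saves/llama3.2-1b/lora/sft",
    "per_device_train_batch_size": 2,
    "gradient_accumulation_steps": 4,
    "learning_rate": 0.0001,
    "num_train_epochs": 3.0,
    "bf16": True,
}

yaml_text = to_yaml(config)
print(yaml_text)
```

The rendered text can then be saved to a file (for example with `pathlib.Path.write_text`) and passed to the training command. A full YAML library is the better choice once the configuration contains nested values or strings with special characters.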

Submit Distributed Training Jobs Using Slurm Scripts

Use Slurm’s sbatch or other job submission commands to dispatch your training workload. The scripts manage resource allocation and parallel execution across multiple GPUs and nodes via Slurm scheduling.
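A batch script for this step might look like the one rendered below. This is a sketch under several assumptions: the job name and config filename are hypothetical, GPUs are assumed to be requested through `--gres=gpu:N` (clusters can be configured differently), and the `FORCE_TORCHRUN`, `NNODES`, `NODE_RANK`, `MASTER_ADDR`, and `MASTER_PORT` variables reflect our understanding of how `llamafactory-cli` launches multi-node runs, which may vary between LLaMA-Factory versions.

```python
def render_sbatch(
    job_name: str,
    nodes: int,
    gpus_per_node: int,
    config_path: str,
    port: int = 29500,
) -> str:
    """Return the text of a Slurm batch script for a multi-node training job."""
    return f"""#!/bin/bash
#SBATCH --job-name={job_name}
#SBATCH --nodes={nodes}
#SBATCH --ntasks-per-node=1
#SBATCH --gres=gpu:{gpus_per_node}
#SBATCH --output=%x-%j.out

# The first hostname in the allocation acts as the rendezvous host.
export MASTER_ADDR=$(scontrol show hostnames "$SLURM_JOB_NODELIST" | head -n 1)
export MASTER_PORT={port}
export NNODES=$SLURM_NNODES
export FORCE_TORCHRUN=1

# One launcher task per node; SLURM_NODEID gives each node its rank.
srun bash -c 'NODE_RANK=$SLURM_NODEID llamafactory-cli train {config_path}'
"""


# Hypothetical job name and config filename, for illustration only.
script = render_sbatch(
    job_name="llama3-alpaca-sft",
    nodes=2,
    gpus_per_node=8,
    config_path="llama3_alpaca_sft.yaml",
)
print(script)
```

Saving the output as, say, `train.sbatch` and running `sbatch train.sbatch` on the head node queues the job. Generating the script from a function keeps node and GPU counts in one place when you rerun at a different scale.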

Monitor GPU Utilization and Job Metrics

Use GreenNode’s built-in monitoring dashboard to track GPU usage, job status, throughput, and performance metrics. This visibility helps you detect bottlenecks and optimize resource allocation.
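If you also want a quick check from the command line, the output of `nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits` can be parsed in a few lines. The sketch below is independent of GreenNode's dashboard and does not use any GreenNode API. The sample text and the 50% threshold are made-up values for illustration.

```python
from dataclasses import dataclass


@dataclass
class GpuSample:
    index: int
    utilization_pct: int
    memory_used_mib: int
    memory_total_mib: int


def parse_gpu_csv(text: str) -> list:
    """Parse 'index, util, mem_used, mem_total' lines into GpuSample objects."""
    samples = []
    for line in text.strip().splitlines():
        index, util, used, total = (int(field.strip()) for field in line.split(","))
        samples.append(GpuSample(index, util, used, total))
    return samples


def underutilized(samples, threshold_pct: int = 50) -> list:
    """Return the indices of GPUs whose utilization is below the threshold."""
    return [s.index for s in samples if s.utilization_pct < threshold_pct]


# Made-up sample in the CSV shape described above, not real measurements.
SAMPLE = """0, 97, 70123, 81559
1, 12, 1024, 81559"""

samples = parse_gpu_csv(SAMPLE)
idle = underutilized(samples)
print(f"GPUs below threshold: {idle}")
```

A GPU that stays below the threshold during training often points to a data-loading or communication bottleneck rather than a scheduling problem.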

From small-scale experiments to full-blown LLM training, GreenNode’s SLURM Cluster is designed with one goal in mind: making AI infrastructure more efficient and accessible. Whether you're working on a single-node task or scaling across dozens of GPUs, our platform ensures your workloads run faster, smarter, and more cost-effectively—without the DevOps overhead.

Final Thoughts

Distributed AI training doesn’t have to be complex or cost-prohibitive. With GreenNode’s Managed SLURM Cluster, you gain access to a production-ready environment designed specifically for large-scale AI workloads—without the DevOps hassle. From pre-configured GPU clusters to real-time monitoring and cost-efficient resource usage, our platform helps you move faster and train smarter.

Whether you're experimenting with new model architectures, fine-tuning LLMs, or deploying inference pipelines, GreenNode provides the robust infrastructure you need to succeed.

Explore more about our platform:

- Transparent, public pricing — know exactly what you’re paying for.

- Share your use case — and we’ll get back to you within 15 minutes, wherever you are.

- Browse our AI product portfolio — including the latest NVIDIA H200 GPUs.

Let GreenNode be your engine for scalable, efficient AI innovation.