By this point in the series, you and your team have done the hard work — planning, designing, sourcing hardware, and defining architecture. The blueprint is ready. Now comes the part that separates theory from reality: installation, integration, and day-to-day operations.

This is where things get real.

In this final post, we’ll walk you through the less glamorous but most critical phase of running a production-grade AI cluster: keeping it stable, efficient, and highly available, with an SLA of up to 99.5%.

Testing 128 H100 Servers and Unlocking SR-IOV Performance for Optimized Deployment

In most large-scale infrastructure projects, there’s a clear handoff between teams: architects design the system, build teams deploy it, and ops teams take over maintenance. In our case, however, the same core team handled everything — from architecture to deployment and testing.

With the deployment phase in motion, we began testing on both single-node and multi-node setups. This phase was critical, not only for catching hardware issues but also for validating the cluster’s ability to scale and recover.

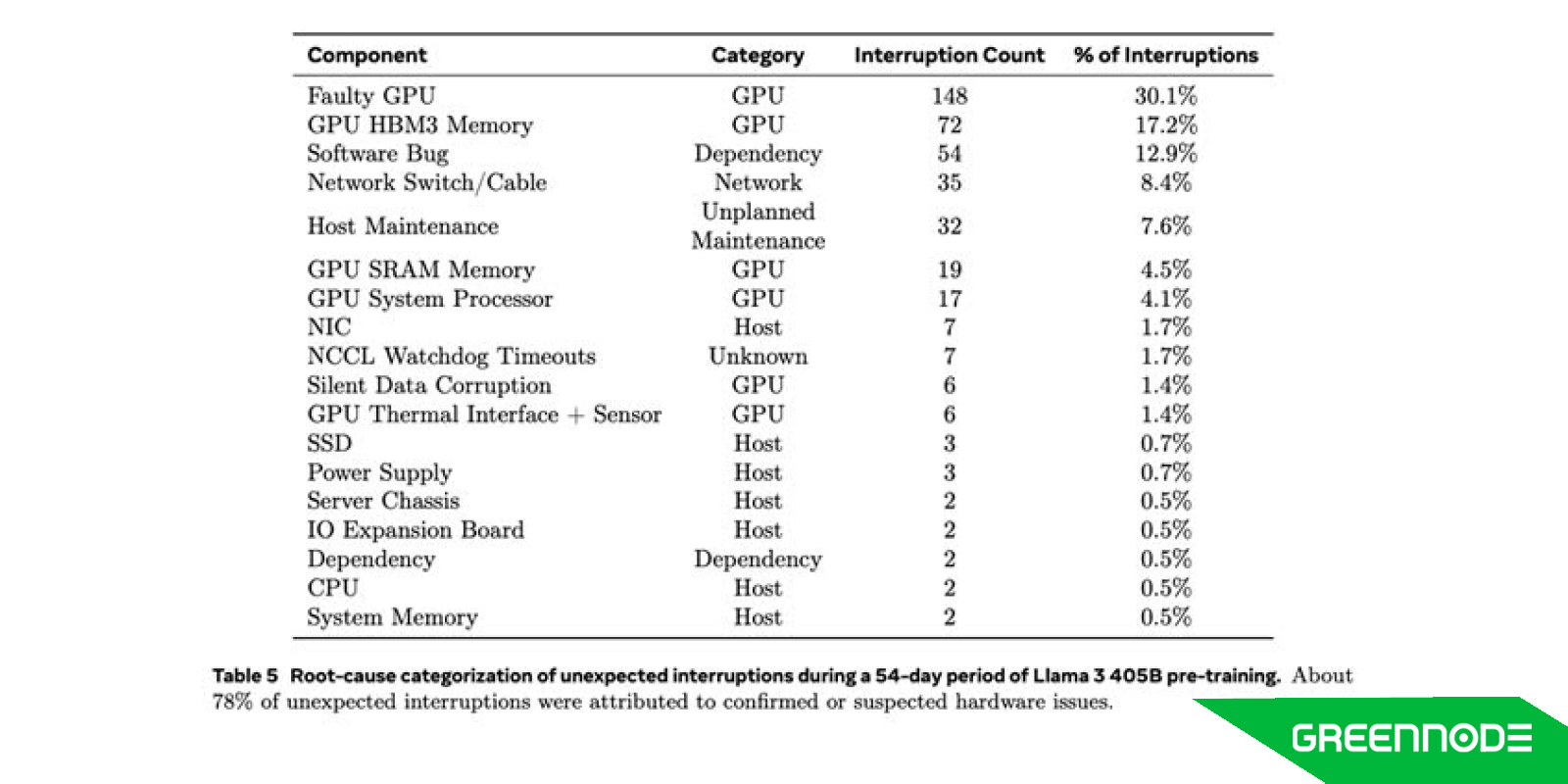

One of the most valuable outcomes of our initial testing was identifying hardware failures early. We saw a 7% failure rate on our H100 servers (9 out of 128), which, while painful, compared favorably with what others have reported, such as KSC’s 12% failure rate on H800 servers.
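As a back-of-the-envelope check, the failure rate is simply failed servers divided by total servers (9 / 128 ≈ 7.0%). The sketch below also applies a hypothetical spare-sizing rule (`spares_needed`, with an illustrative `coverage` multiplier) to show how an observed rate could feed spare-inventory planning. It is an illustration under that assumption, not a documented planning rule.

```python
import math

def failure_rate(failed: int, total: int) -> float:
    """Fraction of servers that failed during acceptance testing."""
    return failed / total

def spares_needed(total: int, rate: float, coverage: float = 1.0) -> int:
    """Hypothetical sizing rule: hold enough spares to cover `coverage` times
    the failures expected at `rate`, rounded up to whole servers."""
    return math.ceil(total * rate * coverage)

ours = failure_rate(9, 128)
print(f"observed failure rate: {ours:.1%}")  # observed failure rate: 7.0%
print(spares_needed(128, ours))              # 9
print(spares_needed(128, 0.12))              # 16 at a 12% rate
```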

Each failure required coordinated efforts with Gigabyte and NVIDIA for RMA processing, and also pushed us to review and improve our spare part strategy to minimize downtime.

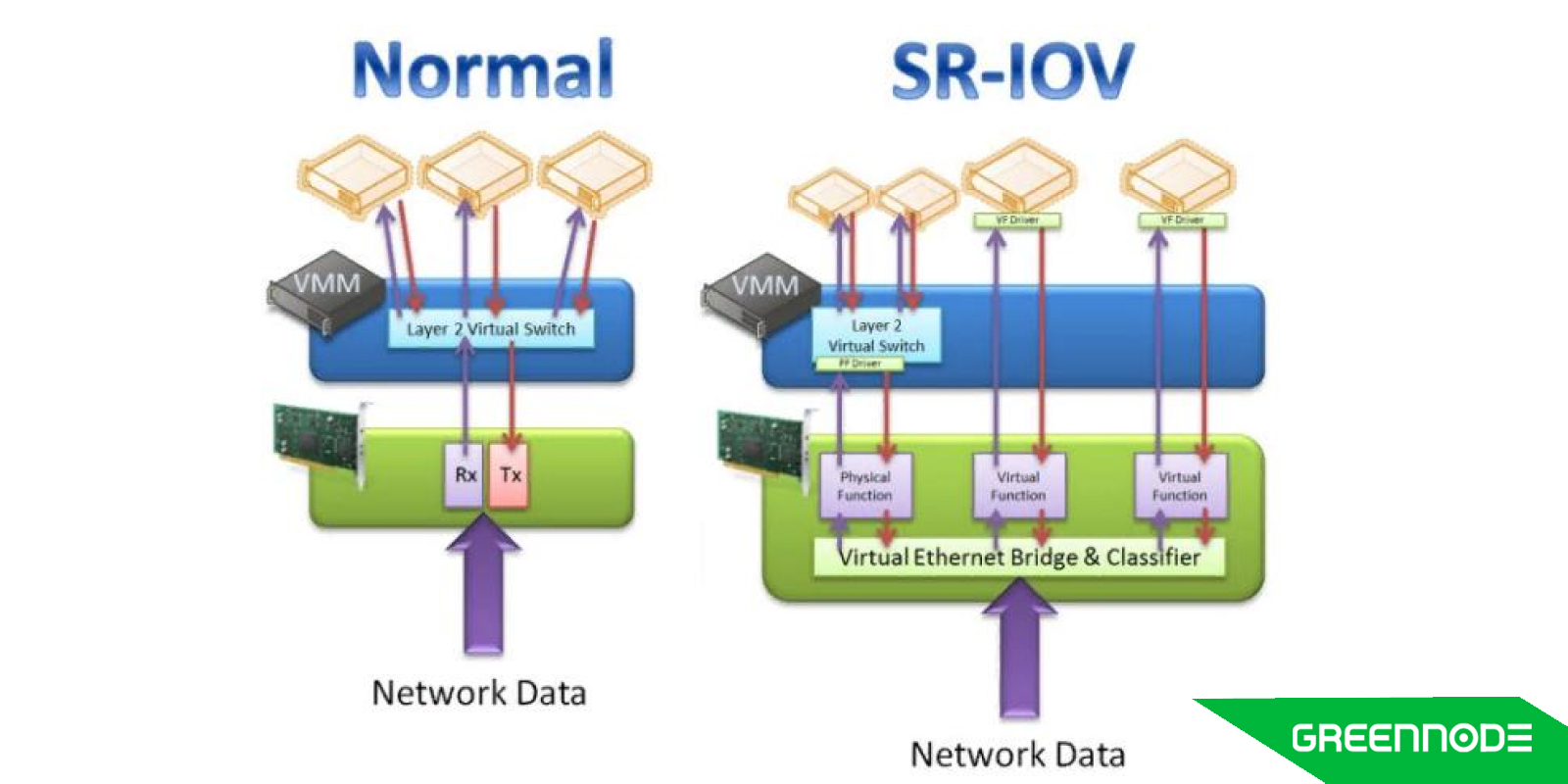

We also faced performance challenges on the virtualization side, especially during PoC benchmarking with high-throughput workloads. To meet demanding bandwidth requirements, such as delivering 100 Gbps per VM virtual interface, we implemented SR-IOV (Single Root I/O Virtualization).

While SR-IOV isn’t a new technology, it has become essential in our setup. It lets a single physical device, such as a network card or NVMe drive, present multiple virtual functions that are assigned directly to virtual machines, so I/O bypasses the hypervisor’s software data path and reaches near-native performance. This proved especially valuable in cloud environments, where efficiency and cost per watt matter.
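On Linux, SR-IOV virtual functions (VFs) are typically created through the kernel's sysfs interface: `sriov_totalvfs` reports how many VFs a device supports, and writing a count to `sriov_numvfs` creates them. A minimal sketch, assuming an SR-IOV-capable NIC whose firmware and driver already have SR-IOV enabled. The interface name is hypothetical, and attaching the resulting VFs to VMs (for example through the hypervisor's PCI passthrough) is not shown.

```python
from pathlib import Path

def set_num_vfs(iface: str, num_vfs: int,
                sysfs_net: Path = Path("/sys/class/net")) -> int:
    """Enable `num_vfs` SR-IOV virtual functions on a physical NIC via sysfs.

    On a real host this needs root and an SR-IOV-capable device and driver;
    `sysfs_net` can be pointed at a fake directory tree for testing."""
    dev = sysfs_net / iface / "device"
    total = int((dev / "sriov_totalvfs").read_text().strip())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{iface} supports at most {total} VFs, got {num_vfs}")
    numvfs = dev / "sriov_numvfs"
    current = int(numvfs.read_text().strip())
    if current == num_vfs:
        return current
    # The kernel refuses to change a non-zero VF count directly,
    # so disable the existing VFs before setting the new count.
    if current != 0 and num_vfs != 0:
        numvfs.write_text("0")
    numvfs.write_text(str(num_vfs))
    return num_vfs

# Example (hypothetical interface name, run as root):
# set_num_vfs("ens1f0", 8)
```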

Our testing with partners validated SR-IOV’s impact, and we now see it as a key enabler for future projects requiring high-performance virtualization, not only for AI training but also for scalable inference workloads.

Operations and Failures as Necessary Parts of the Process

Running an AI cluster is fundamentally different from managing traditional infrastructure. Unlike legacy systems, where downtime in one component can trigger wide-scale service impact, a GPU cluster is built to tolerate individual node or GPU failures. Our cluster design ensures that AI jobs continue running, even when isolated nodes go down.
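One common way to keep jobs running through isolated node failures is to drain the failed node from the scheduler's pool, backfill from spare capacity, and resume from the latest checkpoint. The sketch below models only the node-pool part; `Cluster`, `mark_failed`, and `allocate` are hypothetical names for illustration, not our production scheduler.

```python
from dataclasses import dataclass, field

@dataclass
class Cluster:
    """Hypothetical model of a node pool with a spare reserve."""
    healthy: set[str]
    spares: list[str] = field(default_factory=list)

    def mark_failed(self, node: str) -> None:
        """Drain a failed node and backfill one spare, if any remain."""
        if node in self.healthy:
            self.healthy.remove(node)
            if self.spares:
                self.healthy.add(self.spares.pop(0))

def allocate(cluster: Cluster, nodes_needed: int) -> list[str]:
    """Place a (restarted) job only on nodes currently marked healthy."""
    if len(cluster.healthy) < nodes_needed:
        raise RuntimeError(
            f"need {nodes_needed} nodes, only {len(cluster.healthy)} healthy")
    return sorted(cluster.healthy)[:nodes_needed]

pool = Cluster(healthy={"node01", "node02", "node03"}, spares=["spare01"])
pool.mark_failed("node02")
print(allocate(pool, 3))  # ['node01', 'node03', 'spare01']
```

The spare pool is what turns a node failure into a brief restart rather than a capacity shortfall; once spares run out, `allocate` fails loudly instead of silently shrinking the job.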

Even so, committing to an SLA in this environment is no easy feat. With over 100 GPUs running at near-full capacity for extended periods, the risk of failure rises sharply, especially when systems are pushed to their physical limits. Setting the SLA was therefore challenging, and to maintain our SLA targets, we had to:

- Build and actively manage a spare part inventory

- Establish escalation pathways with Gigabyte and NVIDIA for RMA support

- Proactively monitor power, thermal, and airflow metrics at the rack level

- Prepare for long-term degradation and unpredictable failure modes
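At its core, rack-level monitoring compares readings against thresholds. A minimal sketch, assuming power and inlet-temperature readings have already been collected (for example from PDUs or BMCs); the rack names, 40 kW power budget, 27 C inlet limit, and 90% warning ratio are hypothetical values, not our production settings.

```python
from dataclasses import dataclass

@dataclass
class RackReading:
    rack: str
    power_kw: float       # measured rack power draw
    inlet_temp_c: float   # cold-aisle inlet temperature

def check_rack(r: RackReading, power_budget_kw: float, max_inlet_c: float,
               warn_ratio: float = 0.9) -> list[str]:
    """Return alert messages for one rack; thresholds are hypothetical."""
    alerts = []
    if r.power_kw >= power_budget_kw:
        alerts.append(f"{r.rack}: power {r.power_kw:.1f} kW at or over "
                      f"budget {power_budget_kw:.1f} kW")
    elif r.power_kw >= warn_ratio * power_budget_kw:
        alerts.append(f"{r.rack}: power {r.power_kw:.1f} kW above "
                      f"{warn_ratio:.0%} of budget")
    if r.inlet_temp_c > max_inlet_c:
        alerts.append(f"{r.rack}: inlet {r.inlet_temp_c:.1f} C exceeds "
                      f"{max_inlet_c:.1f} C")
    return alerts

for reading in [RackReading("R01", 37.0, 24.0), RackReading("R02", 41.5, 29.0)]:
    for alert in check_rack(reading, power_budget_kw=40.0, max_inlet_c=27.0):
        print(alert)
```

The early-warning tier matters more than the hard limit: it flags racks drifting toward their budget before a sustained training run pushes them over.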

This wasn’t a theoretical exercise — it was day-to-day firefighting, planning, and iteration. In the end, we successfully delivered our AI infrastructure to all customers with a committed SLA of up to 99.5% — a milestone made possible by the dedication and hands-on expertise of the engineers who personally designed, deployed, and now operate this cluster.
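For a sense of scale, a 99.5% availability target leaves a small downtime budget. A quick calculation, assuming availability is measured over a 30-day month or a 365-day year (the actual measurement window depends on the contract):

```python
def downtime_budget_hours(sla: float, period_hours: float) -> float:
    """Maximum downtime an availability SLA allows over a period."""
    return (1.0 - sla) * period_hours

print(f"{downtime_budget_hours(0.995, 30 * 24):.1f} h per 30-day month")  # 3.6 h per 30-day month
print(f"{downtime_budget_hours(0.995, 365 * 24):.1f} h per year")         # 43.8 h per year
```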

Hard Lessons Behind the Wins

Successfully delivering an AI cluster for one of our most strategic customers was more than just a technical achievement — it was a stress test of our people, processes, and decision-making under pressure. As we worked with multiple stakeholders to piece together this complex infrastructure, we quickly realized that there was no complete picture handed to us. Instead, we were left with a collection of puzzle pieces — some well-defined, others missing entirely — and it was up to us to fill in the gaps.

NVIDIA

While we didn’t purchase products directly from them — our transactions were through OEM vendors like Gigabyte — their ecosystem still governed much of the architecture, support, and validation process. And throughout the deployment, from software to hardware, we found ourselves needing support not just from the OEM but also from NVIDIA’s core team and NVIDIA Professional Services (NVPS).

- Their design is built around using fully validated, end-to-end solutions — including compute, storage, networking, and software — ideally within the NVIDIA ecosystem. But for practical and financial reasons, we opted to build a custom stack, integrating third-party vendors outside their comprehensive (and costly) packages.

- Their documentation was often out of date. Because we were deploying very new hardware, the available software, firmware, and documentation lagged behind the level of detail we needed, and their support resources were stretched thin across the region.

- The professional services team they assigned operated in different time zones, with a checklist-driven approach and little flexibility around project timelines. We had to push hard — and often take on work that wasn’t originally ours — just to keep things on track.

Gigabyte

When it came to hardware, we evaluated multiple server vendors before making our final decision.

The selection was based on several technical criteria, including:

1. PCIe Topology & Interconnect Efficiency

A key bottleneck in large-scale distributed training environments lies in the PCIe topology between GPUs and CPUs. We observed that some vendors, particularly in OEM configurations, presented sub-optimal PCIe lane mapping, which degraded NCCL (NVIDIA Collective Communications Library) performance. Efficient GPU-to-GPU communication is critical, and we prioritized vendors that offered validated, high-bandwidth interconnect designs.
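One way to spot this kind of lane-mapping problem is to check how close each GPU sits to a network adapter. `nvidia-smi topo -m` reports the path between devices using codes such as PIX (at most one PCIe bridge), PXB (multiple PCIe bridges), PHB (through a PCIe host bridge, typically the CPU), NODE (between host bridges within a NUMA node), and SYS (across the inter-socket interconnect). The sketch assumes the GPU-to-NIC entries from that matrix have already been transcribed into a dictionary; the device names and the `PXB` acceptance threshold are hypothetical.

```python
# PCIe path codes from the `nvidia-smi topo -m` legend, ranked from the
# closest connection (PIX) to the farthest (SYS).
LINK_RANK = {"PIX": 0, "PXB": 1, "PHB": 2, "NODE": 3, "SYS": 4}

def flag_poor_nic_affinity(gpu_to_nic: dict[str, dict[str, str]],
                           worst_ok: str = "PXB") -> list[str]:
    """Return GPUs whose nearest NIC is farther away than `worst_ok`."""
    limit = LINK_RANK[worst_ok]
    flagged = []
    for gpu, links in sorted(gpu_to_nic.items()):
        nearest = min(LINK_RANK[code] for code in links.values())
        if nearest > limit:
            flagged.append(gpu)
    return flagged

# Hypothetical, hand-transcribed GPU-to-NIC entries from `nvidia-smi topo -m`.
topo = {
    "GPU0": {"NIC0": "PIX", "NIC1": "SYS"},
    "GPU1": {"NIC0": "SYS", "NIC1": "NODE"},
}
print(flag_poor_nic_affinity(topo))  # ['GPU1']
```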

2. Firmware and Software Maturity

We assessed each vendor’s ability to deliver mature BIOS, BMC, and firmware support that integrates well with GPU orchestration frameworks. Stability in low-level system firmware is essential for:

- Reliable GPU enumeration

- SR-IOV and NVMe-oF enablement

- Integration with management platforms (Redfish, IPMI)

Vendors with regularly updated firmware releases and good documentation were rated more favorably.
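Firmware maturity is easier to track when installed versions are checked against an agreed baseline. Redfish typically exposes installed firmware under `/redfish/v1/UpdateService/FirmwareInventory`, where each member is a SoftwareInventory resource with `Name` and `Version` properties. The sketch assumes those member resources have already been fetched as JSON; the component names, version strings, and the naive version parser are hypothetical, because vendors format versions differently.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Naive parser: keep the digits of each dot- or dash-separated field.
    Vendor version formats vary, so treat this as an assumption."""
    parts = []
    for piece in version.replace("-", ".").split("."):
        digits = "".join(c for c in piece if c.isdigit())
        parts.append(int(digits) if digits else 0)
    return tuple(parts)

def below_baseline(inventory: list[dict],
                   baseline: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (name, installed, minimum) for components older than the baseline.

    `inventory` holds Redfish SoftwareInventory resources already fetched
    from the BMC's FirmwareInventory collection."""
    outdated = []
    for item in inventory:
        name, installed = item.get("Name"), item.get("Version")
        minimum = baseline.get(name)
        if minimum and installed and parse_version(installed) < parse_version(minimum):
            outdated.append((name, installed, minimum))
    return outdated

# Hypothetical inventory entries and baseline versions.
inventory = [
    {"Id": "BMC", "Name": "BMC", "Version": "12.60.30"},
    {"Id": "BIOS", "Name": "BIOS", "Version": "R11"},
]
baseline = {"BMC": "12.61.17", "BIOS": "R10"}
print(below_baseline(inventory, baseline))  # [('BMC', '12.60.30', '12.61.17')]
```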

STT Bangkok Data Center

Our infrastructure was deployed at STT Bangkok 1, and overall, the experience was positive. The onboarding process was clear and efficient, with all requests managed through their customer portal. Their team showed flexibility and responsiveness, often going the extra mile to accommodate special requirements and exceptions.

STT BKK1 offers strong operational support and connects clients with reliable local partners or resellers for network provisioning. This simplified the overall setup and helped streamline the physical deployment phase.

Their facility operations team demonstrated solid expertise in data center management, ensuring that environmental controls, power planning, and physical access were handled smoothly — all key elements for a successful HPC cluster launch.

Tech people at their best

Throughout the project, one of the most important internal takeaways was the value of full ownership. Our team took on responsibilities that often extended beyond initial scopes — from architecture to deployment and on-the-ground troubleshooting. This mindset was essential to move fast and adapt in the face of ambiguity.

We also learned that assumptions can be costly. Relying on prior knowledge without validating it in new contexts led to delays and unnecessary troubleshooting. Every configuration, vendor, and integration point required verification, especially when working at this scale.

Cultural differences also played a major role. Teams from Thailand, Israel, the UAE, and Europe had distinct working styles, communication habits, and holiday schedules. Respecting those differences while staying firm on timelines helped build trust and long-term alignment. Time zone challenges required extra planning and flexibility, especially when urgent coordination was needed.

Ultimately, this project reminded us that building infrastructure at scale isn't just a technical challenge — it’s a people challenge. Trust, clarity, and adaptability are what make complex collaborations work.

Last But Not Least

Looking back, there were clear opportunities where we could have saved time and cost — by formalizing change management earlier, validating assumptions more rigorously, and tightening the feedback loop between business, engineering, and procurement. But in exchange, we gained something more valuable: firsthand experience, hard-earned clarity, and the ability to move faster and smarter in future deployments.

What we built wasn’t just an AI cluster — it was a resilient foundation of technical depth, shared ownership, and execution under pressure. And that’s exactly what we’ve embedded into the solutions we now offer at GreenNode.

If you're building your own AI infrastructure and feeling the weight of complexity, you're not alone — we've been there. With GreenNode’s AI platform, you get production-grade GPU clusters and high-availability infrastructure, built by engineers who’ve done it end to end. We’ve handled the hard parts so you can focus on training and deploying your models, faster and with confidence. Explore more at AI Platform - One-stop AI platform for AI model training and deployment