In Part 1 of this series, we shared our background and walked you through the essential building blocks to get started with setting up an HPC/AI cluster. If you haven’t read it yet, you can check it out [here].

In this second part, we’ll break down the core architectural components of an AI cluster — including node, rack, and cluster-level design — and how an in-house team like ours managed to bring everything together and make it actually work. Be prepared for some hard-earned lessons and real-world failures along the way. Many of the tips in this post are based on our subjective experience, and the recommendations shared are for reference, not a one-size-fits-all blueprint.

But before we dive into the messy (and rewarding) part of execution, let’s start with a clear look at the foundational architecture you need to understand to build your own AI cluster.

The Essential Anatomy of An AI Cluster

AI workloads come in many forms, from simple model training to complex hyperparameter searches and large-scale distributed training. Each has different requirements, and your infrastructure must reflect that. For high-performance use cases like ours, we had no choice but to go with a large-scale distributed training architecture. This approach is essential when training massive models across multiple nodes and GPUs, where both performance and scalability matter.

To support this architecture effectively, there are a few non-negotiable infrastructure realities:

- Distributed training demands low-latency, high-bandwidth server-to-server communication

- Inferencing clusters must be highly available and resilient to outages — far beyond what traditional server setups offer
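To get a feel for why server-to-server bandwidth matters so much, here is a rough back-of-the-envelope sketch. It assumes gradients are synchronized with a ring all-reduce, in which each GPU sends about 2(N-1)/N times the gradient payload per synchronization. The model size, gradient precision, and link speeds are hypothetical values chosen for illustration, not measurements from our cluster, and the estimate ignores latency, protocol overhead, and any overlap with compute.

```python
def ring_allreduce_bytes_per_gpu(payload_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends during one ring all-reduce of `payload_bytes`."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    # Reduce-scatter and all-gather each move (N - 1) / N of the payload.
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


def transfer_seconds(bytes_sent: float, link_gbit_per_s: float) -> float:
    """Lower bound on transfer time, ignoring latency and protocol overhead."""
    return bytes_sent * 8 / (link_gbit_per_s * 1e9)


if __name__ == "__main__":
    # Hypothetical example: 7e9 parameters with 2-byte gradients.
    grad_bytes = 7e9 * 2
    sent = ring_allreduce_bytes_per_gpu(grad_bytes, num_gpus=1024)
    for gbps in (25, 400):  # illustrative link speeds, not measurements
        seconds = transfer_seconds(sent, gbps)
        print(f"{gbps} Gb/s link: {seconds:.2f} s per gradient sync")
```

Because the per-GPU traffic stays close to twice the payload no matter how many GPUs participate, the link speed between servers largely sets the floor for every synchronization step.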

Before you can get an AI cluster up and running, there are three essential levels of design you’ll need to carefully plan and execute. Each level builds on the previous one, and mistakes at any layer can lead to performance bottlenecks, system instability, or long-term scalability issues.

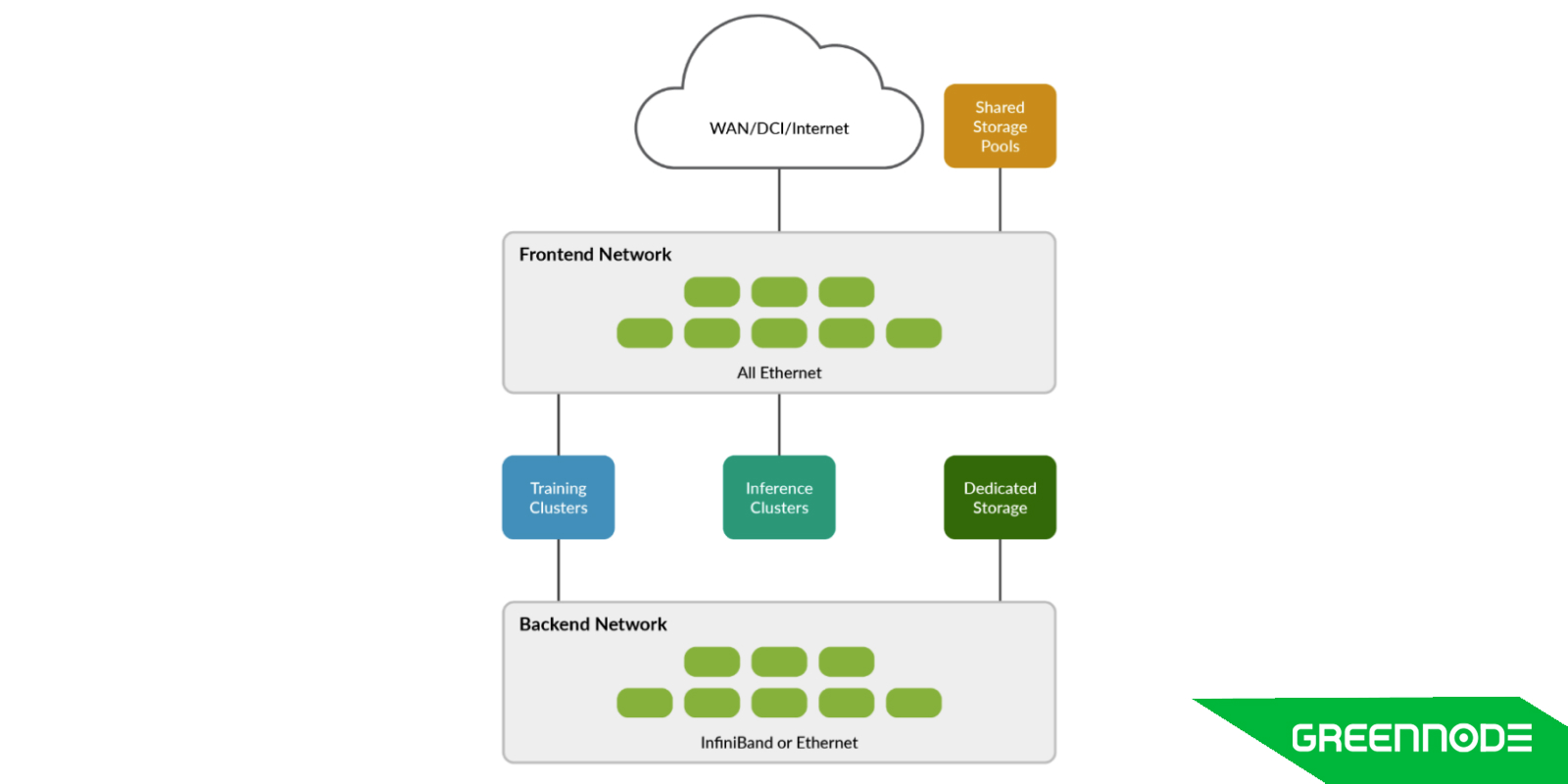

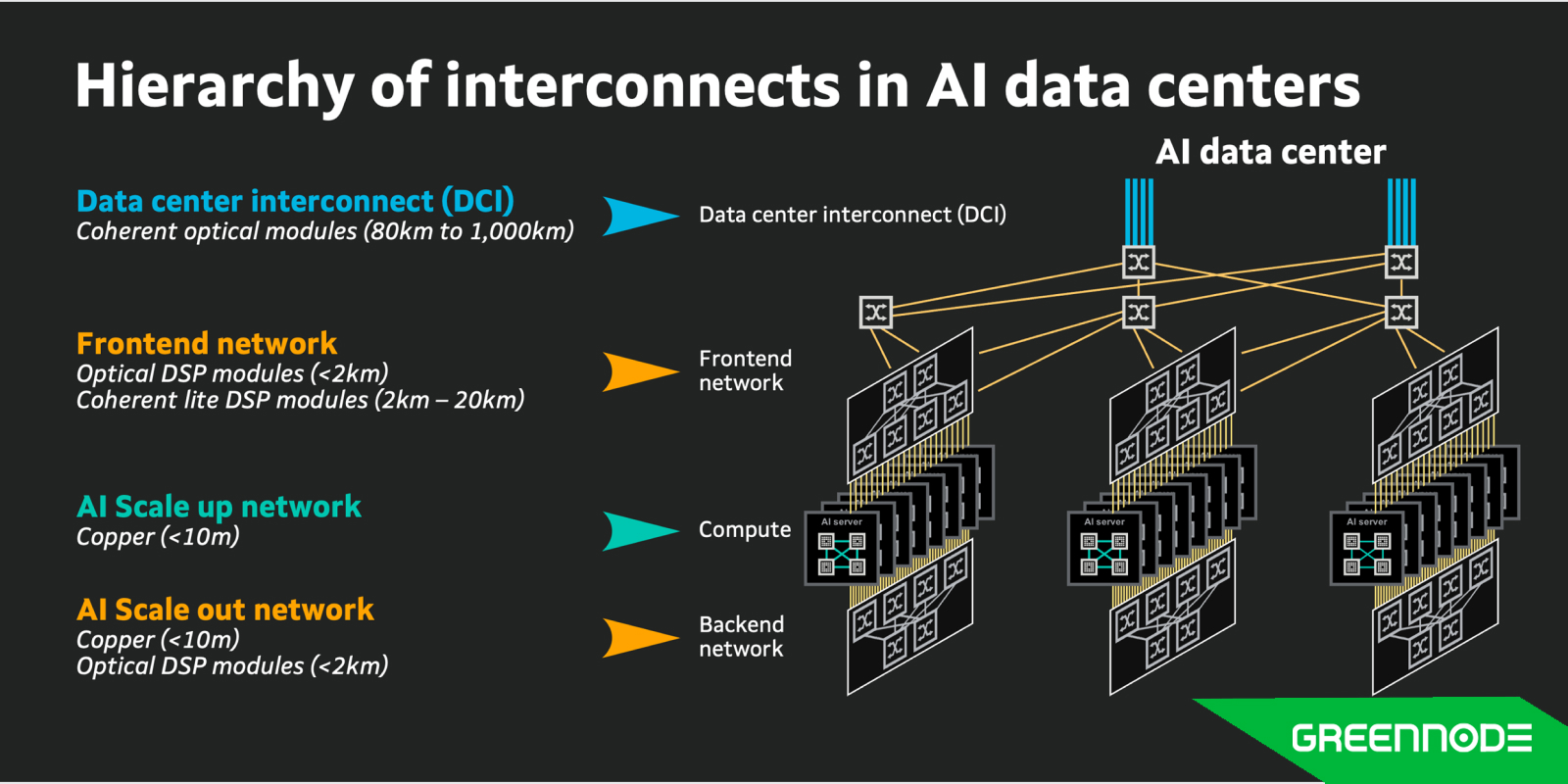

- Cluster-Level Design: the top-level architecture of the entire deployment. At this level, you define the strategic foundation: the data center (physical rack space, power, and cooling capacity) and the network topology and fabric.

- Rack-Level Design: the physical and logical arrangement within each rack, covering node positions, cabling, and port assignments. In our case, we had to build all the topology and mapping manually, from the rack layout to the final cabling design.

- Node-Level Design: selecting the right combination of GPUs, CPUs, memory, and NVMe storage per node based on your AI workload.
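The three levels constrain each other, and a quick capacity calculation makes that concrete. The sketch below is only an illustration: the 1,024-GPU total matches the deployment described later in this post, but the 8 GPUs per node, the 10 kW per node, and the 40 kW per rack are assumed placeholder figures, and `plan_racks` is a hypothetical helper rather than a tool we used.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RackPlan:
    nodes: int
    nodes_per_rack: int
    racks: int
    total_power_kw: float


def plan_racks(total_gpus: int, gpus_per_node: int,
               node_power_kw: float, rack_power_kw: float) -> RackPlan:
    """Estimate node and rack counts from GPU count and power budgets."""
    # Node level: how many servers are needed to host all GPUs.
    nodes = math.ceil(total_gpus / gpus_per_node)
    # Rack level: the power budget limits how many nodes fit in one rack.
    nodes_per_rack = int(rack_power_kw // node_power_kw)
    if nodes_per_rack < 1:
        raise ValueError("rack power budget is below a single node's draw")
    # Cluster level: rack count and total compute power for the data center.
    racks = math.ceil(nodes / nodes_per_rack)
    return RackPlan(nodes, nodes_per_rack, racks, nodes * node_power_kw)


if __name__ == "__main__":
    # All power figures are assumptions for illustration only.
    print(plan_racks(total_gpus=1024, gpus_per_node=8,
                     node_power_kw=10.0, rack_power_kw=40.0))
```

Changing any single input, such as the per-rack power the facility can deliver, ripples through to the rack count and therefore to floor space, cabling lengths, and switch placement.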

The 5 Core Components of AI Cluster Architecture

Once the three levels of cluster design are planned (cluster, rack, and node), the next step is to break down your system into five key architectural components. These are the foundational building blocks that determine how well your cluster performs — and how maintainable it will be long-term.

- Data Center: Refers to the electrical and physical infrastructure — including power distribution, cooling systems, and rack layout — that hosts and supports the entire cluster.

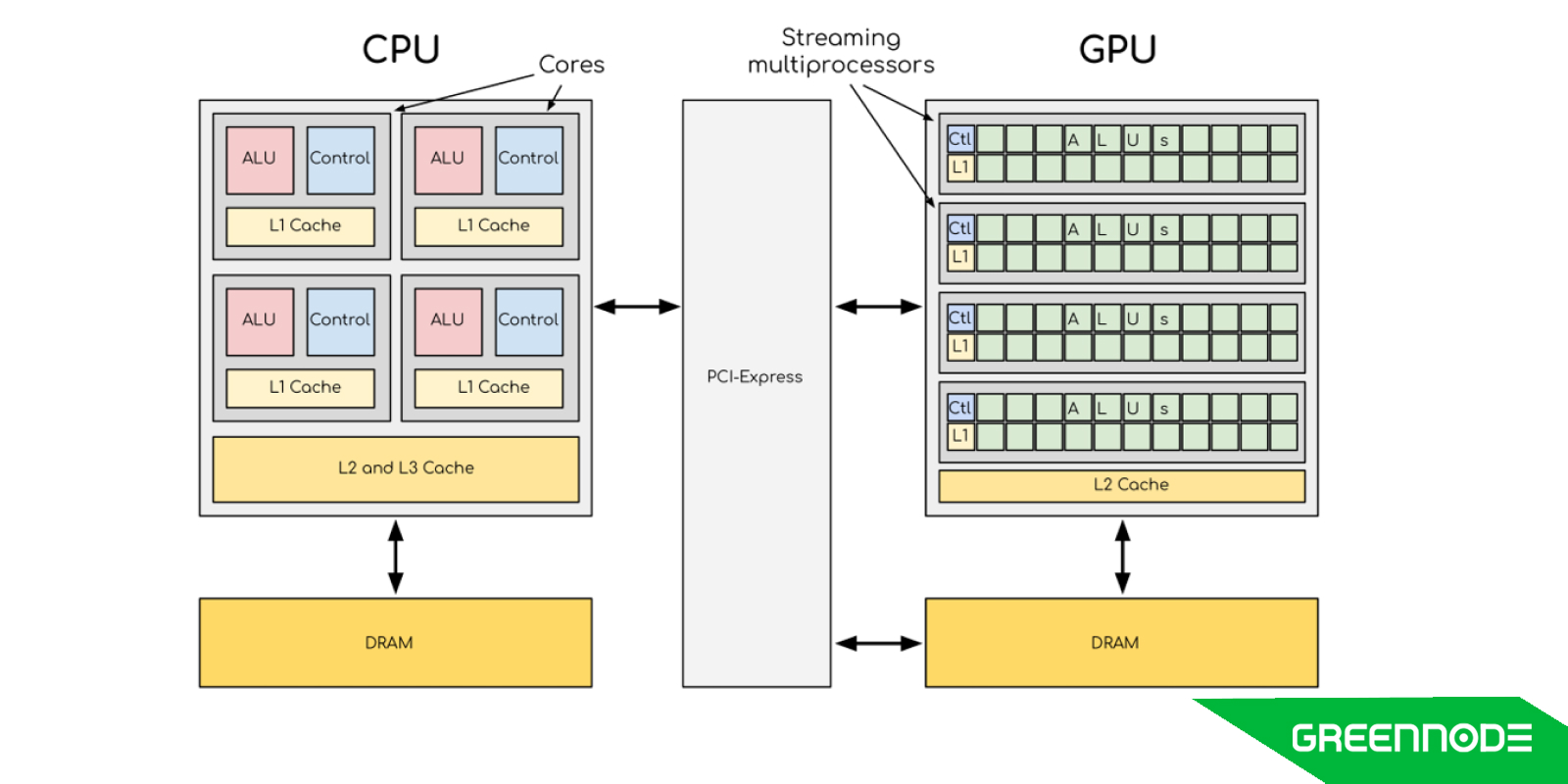

- Compute: A combination of GPU and CPU resources, along with supporting hardware, to process AI workloads. These are typically built on platforms from NVIDIA, AMD, or Intel and delivered by OEM vendors such as Asus, Gigabyte, Dell, and HPE.

- Networking: Includes multiple dedicated networks for compute operations, data storage, in-band management, and out-of-band management to ensure performance and reliability.

- Storage: Used to store and retrieve data efficiently for high-performance AI tasks, ensuring fast access to large datasets during training and inference.

- Software: Encompasses cluster orchestration, job scheduling, resource allocation, and container orchestration to manage workloads and ensure scalable, efficient operation.

NVIDIA Cloud Partner

At GreenNode, we chose to build our AI cluster based on the NVIDIA Cloud Partner (NCP) reference architecture, a blueprint for building high-performance, scalable, and secure data centers that can handle generative AI and large language models (LLMs). In practice, NVIDIA sells selected partners a single package of mutually compatible components. This package includes:

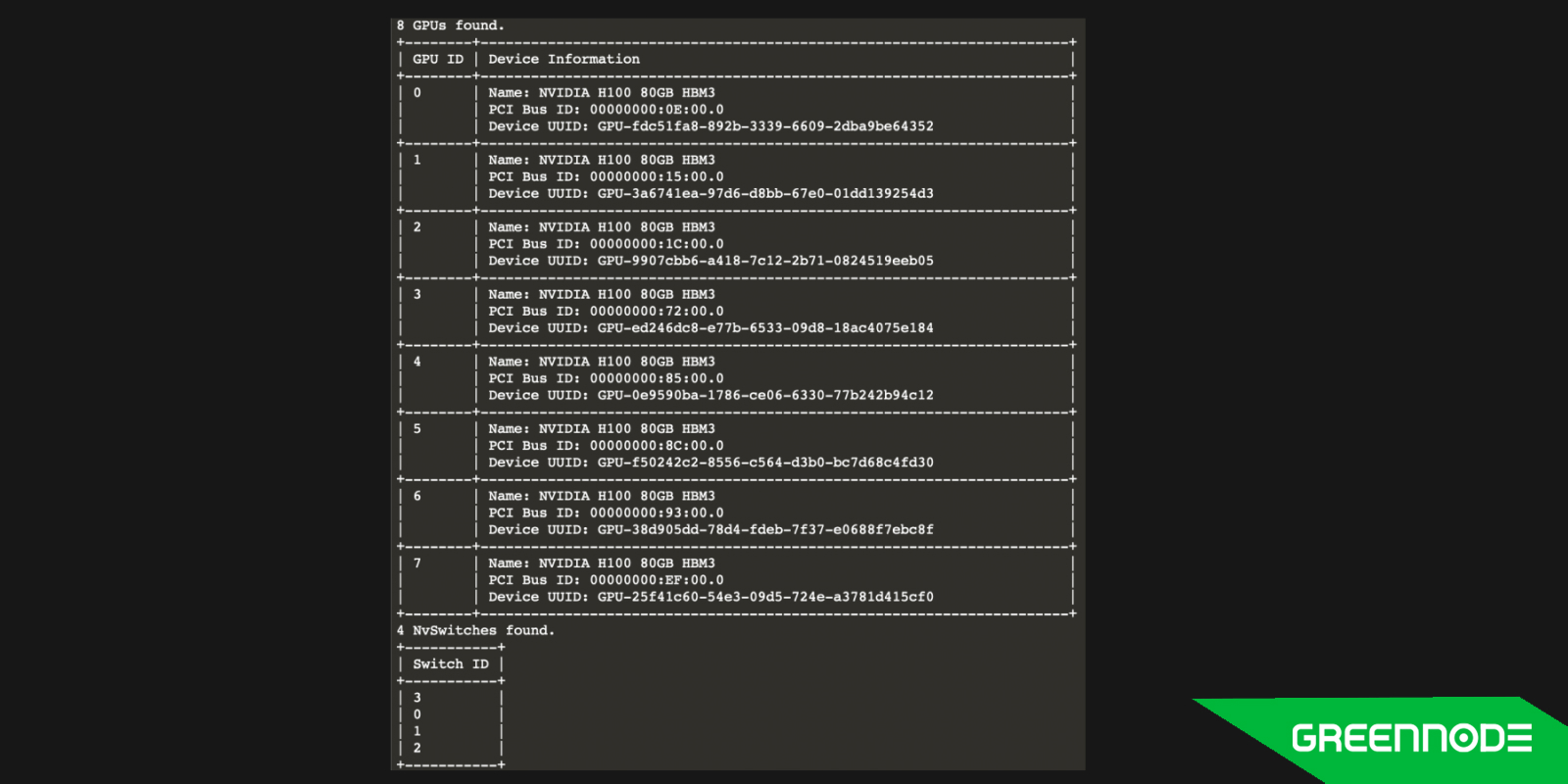

- GPUs come with servers from OEM vendors, which are validated by NVIDIA. We utilized 1024 NVIDIA H100 Tensor Core GPUs, integrated into server hardware provided by Gigabyte, a validated OEM partner under the NCP program.

- For networking, we combine two fabrics: NVIDIA Cumulus Ethernet for out-of-band management and Mellanox InfiniBand for in-band compute communication, which provides low-latency, high-bandwidth GPU-to-GPU performance across nodes.

- While NCP typically includes Base Command Manager (BCM) and NVIDIA AI Enterprise software, we brought along our existing provisioning stack and opted to run the cluster with a hybrid orchestration approach: Slurm for job scheduling and resource management, and Kubernetes for container orchestration and flexible workload deployment.
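To illustrate the Slurm half of such a hybrid setup, the sketch below renders a minimal batch script for a multi-node GPU job. It is not one of our job templates: `render_sbatch` is a hypothetical helper, `train.py` is a placeholder command, and the node and GPU counts are example values. The `#SBATCH` directives themselves (`--job-name`, `--nodes`, `--ntasks-per-node`, `--gres`) are standard Slurm options, although the GPU resource name depends on how a given cluster is configured.

```python
def render_sbatch(job_name: str, nodes: int, gpus_per_node: int, command: str) -> str:
    """Render a minimal Slurm batch script for a multi-node GPU job."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        # One task per GPU is a common convention, assumed here.
        f"#SBATCH --ntasks-per-node={gpus_per_node}",
        f"#SBATCH --gres=gpu:{gpus_per_node}",
        "",
        # srun launches the command once per allocated task.
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder job: 4 nodes with 8 GPUs each running a hypothetical script.
    print(render_sbatch("llm-pretrain", nodes=4, gpus_per_node=8,
                        command="python train.py"))
```

The resulting text would be submitted with `sbatch`, while containerized services and more elastic workloads would go through Kubernetes instead.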

Although NVIDIA offers Excelero’s NVMesh or DDN as part of the package, we chose the VAST Data platform for our storage layer. It provides the performance, scale, and simplicity needed to support high-throughput AI training workloads, and it leaves more room for scaling in the future.

On top of that, we deployed our entire rack at STT Data Center in Bangkok, Thailand — a reliable colocation facility known for its responsive support and operational transparency.

To bring all these components together — compute, storage, networking, and orchestration — you need a cohesive, high-performance communication backbone. This is where the concept of the AI Interconnect comes in. AI interconnect provides a framework to ensure efficient data movement and communication between the components comprising the AI cloud.

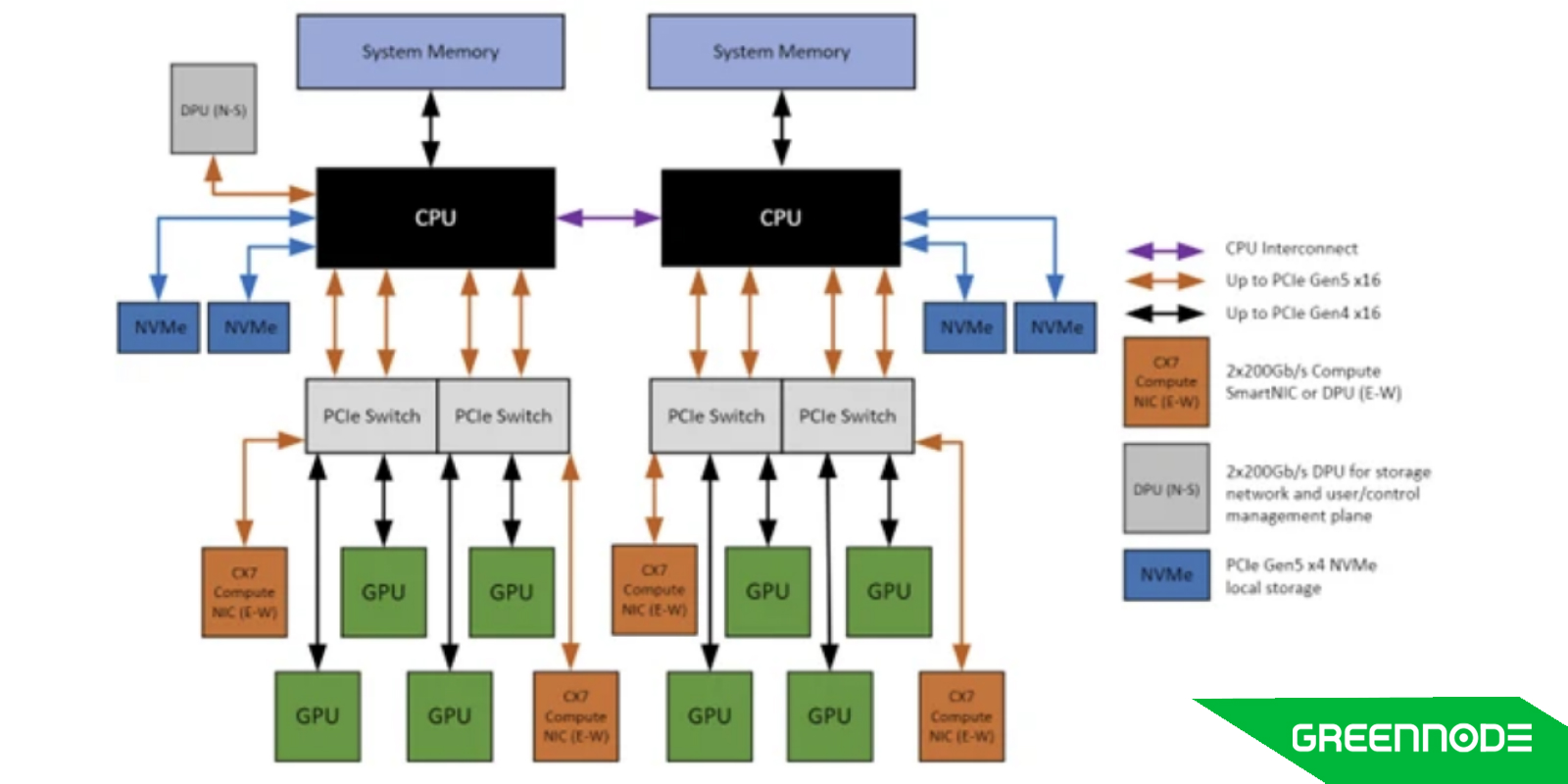

At GreenNode, our hardware interconnect architecture is designed to optimize for both speed and reliability, using the following key technologies:

- NVLink/PCIe Switch: Enables ultra-fast GPU-to-GPU communication, both within a single node and across multiple nodes, allowing large models to train efficiently without bottlenecks.

- InfiniBand (IB): A high-speed, lossless, low-latency networking protocol used for RDMA (Remote Direct Memory Access) — ideal for distributed training and minimizing communication overhead between servers.

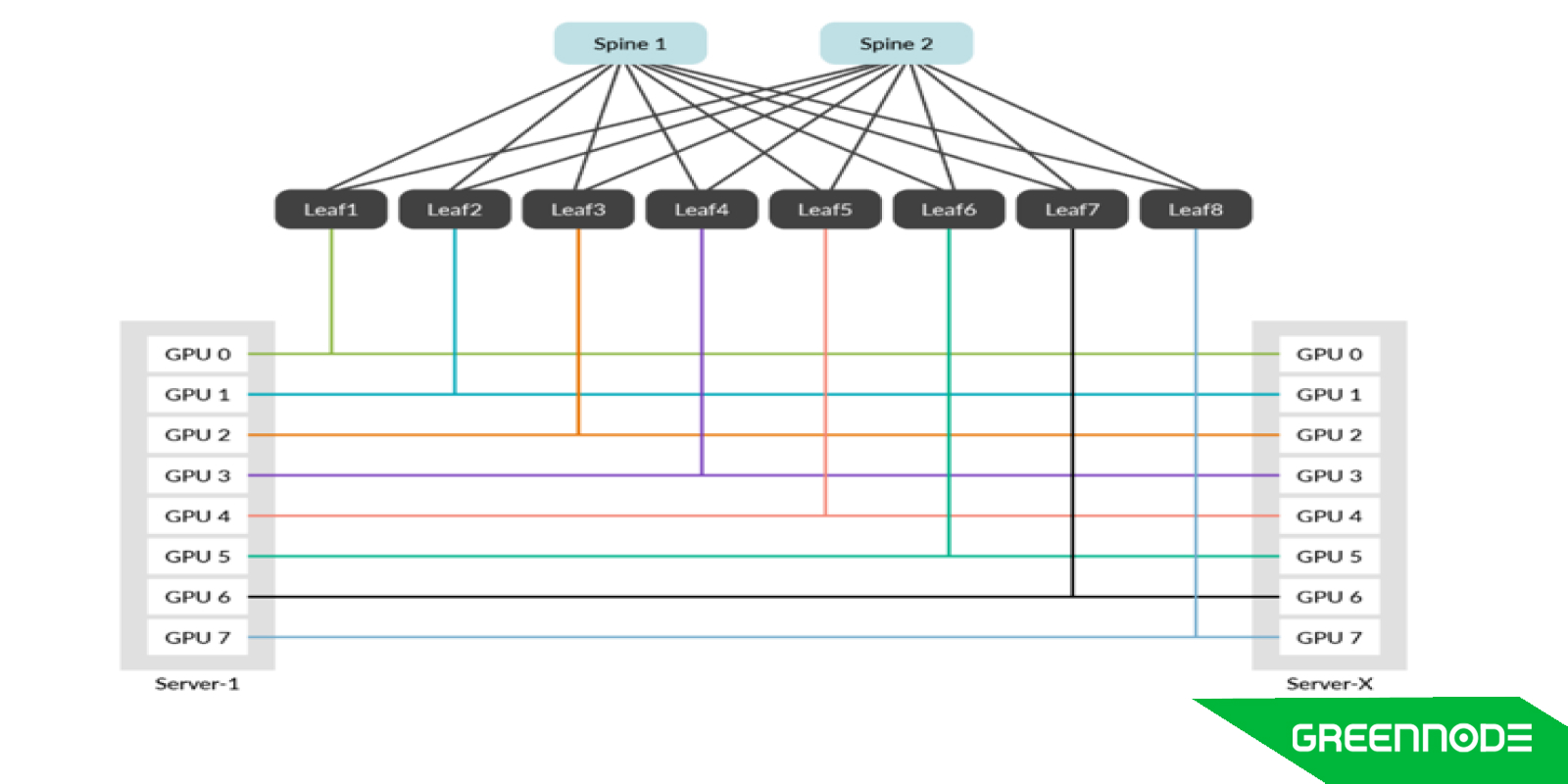

- RAIL Topology: Involves deploying multiple, parallel network paths to provide both redundancy and performance, ensuring the system remains operational and scalable even under load or failure conditions.

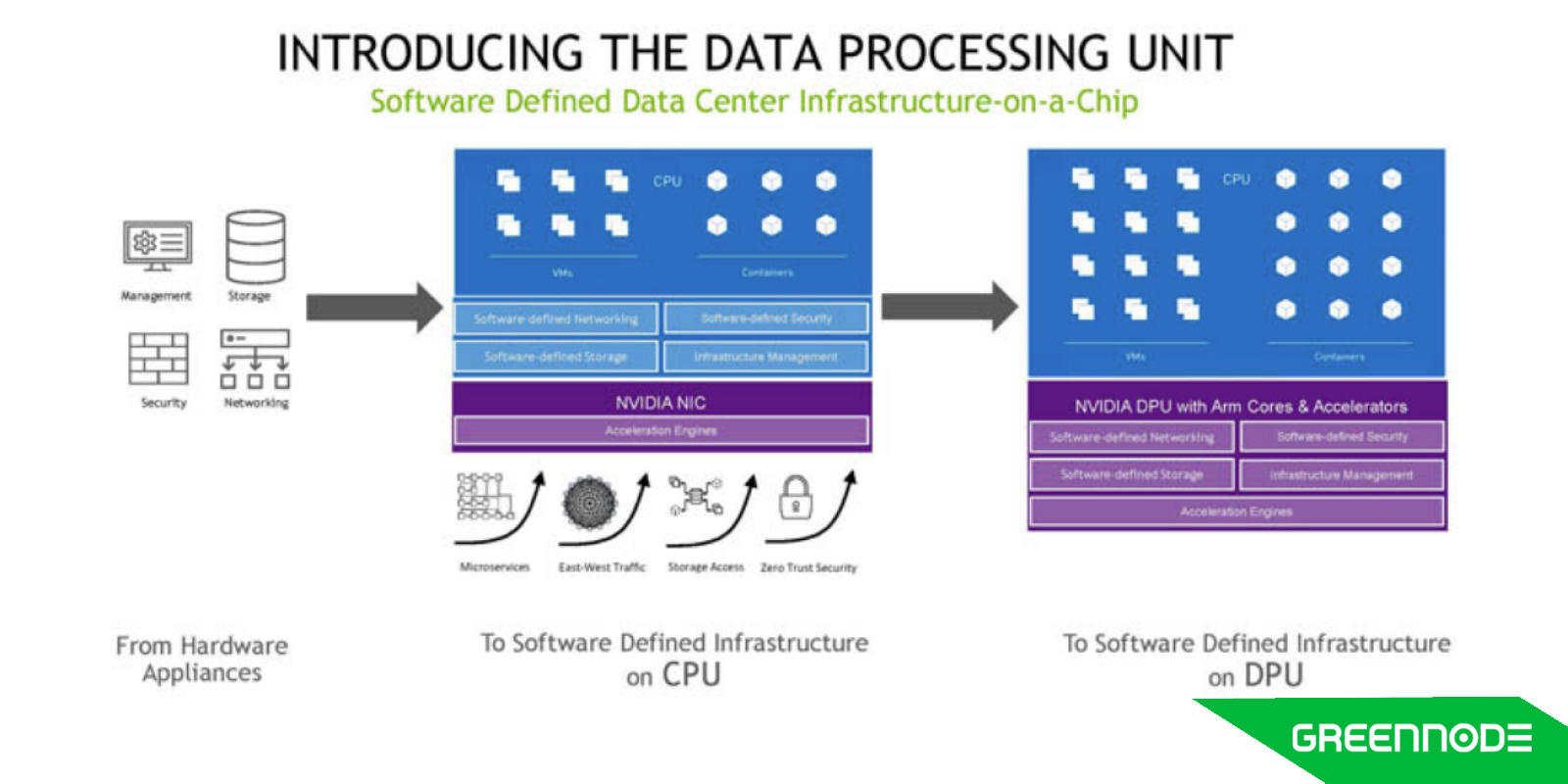

- DPU (Data Processing Unit): Essentially a smart NIC, the DPU offloads various tasks from the CPU, including encryption, key management, NVMe-over-Fabrics (NVMe-oF), compression, and more — freeing up host resources while enhancing security and I/O performance.
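The rail idea is easier to see as a cabling map. The sketch below assumes the commonly described rail-optimized layout, in which NIC k of every node is cabled to the same leaf switch (rail k). The function name and the node and NIC counts are hypothetical, and this is not our actual cabling plan.

```python
def rail_cabling(num_nodes: int, nics_per_node: int) -> dict[int, list[tuple[int, int]]]:
    """Map each rail (leaf switch index) to the (node, nic) ports cabled to it."""
    rails: dict[int, list[tuple[int, int]]] = {
        rail: [] for rail in range(nics_per_node)
    }
    for node in range(num_nodes):
        for nic in range(nics_per_node):
            # Assumption: NIC k on every node belongs to rail k.
            rails[nic].append((node, nic))
    return rails


if __name__ == "__main__":
    # Hypothetical example: 4 nodes with 8 compute NICs each.
    for rail, ports in rail_cabling(num_nodes=4, nics_per_node=8).items():
        print(f"rail {rail}: {ports}")
```

Under this assumption, traffic between same-indexed NICs on different nodes stays on one leaf switch, and losing a single rail removes one of several parallel paths rather than cutting a node off entirely.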

Factors to Consider When Putting Things Together

Setting up an AI cluster is not just a matter of choosing powerful GPUs and installing the right software. It involves balancing technical decisions, delivery timelines, and business constraints — all while dealing with a constant lack of clarity.

One of our biggest challenges was the lack of an official reference architecture, especially around network topology, including PCIe and NCCL design. What we received were mostly draft slides, BOM lists, and high-level guidance. That meant we had to build the entire topology ourselves, from data center layout to rack mapping and cabling, without a clear standard to follow.

At the same time, procurement lead time quickly became a critical bottleneck. Components were delivered in multiple phases, and even a minor delay from one vendor could hold up the whole deployment. We had to track deliveries daily, follow up weekly, and keep pushing for updates just to stay on schedule. As of mid-June 2024, some networking parts still hadn't arrived.

These delays directly impacted financial modeling. With a large investment at stake, every change had to be justified and revalidated. Our architecture was revised multiple times based on cost projections, vendor availability, and support requirements, like deciding whether to keep NVIDIA Ethernet gear for NCP compliance despite high cost and long delivery times.

The urgency of this project added another layer of complexity. We were under pressure to deliver a fully operational AI cluster within just five months — a deadline driven by contractual commitments to our customers. Failing to meet it wouldn’t just result in cost overruns — it could mean losing the deal entirely.

Under such tight time and financial constraints, we quickly realized that stepping up internally and building the architecture ourselves was the only viable path forward. Relying solely on vendor-provided structures, documentation, or so-called “validated” reference designs would likely have doubled our deployment time, or worse, due to:

- Limited human resources

- Geographic constraints

- The immaturity of vendor reference architectures

In that context, leveraging our in-house capabilities and designing around practical realities — rather than waiting for perfect templates — became a strategic necessity.

What We’ve Learned So Far

We hope this post has given you a glimpse into the massive workload that lies ahead when building your own AI cluster — arguably just as painful as the monthly cloud bills you’ve probably endured. But here’s the good news: some of us have already carved a path forward, and the game is no longer exclusive to the tech giants. With the right structure in place, you can build smarter — and yes, save a significant amount for your business.

In the next part, we’ll face a hard truth: GPUs are inherently unstable. And when things break (and they will), that instability could burn a hole in your wallet. To deal with this, we’ll dive into frameworks, troubleshooting guides, and real-world workarounds that could save you time and money. Read the next part HERE.

P.S. If this whole journey feels overwhelming, remember you can always explore GreenNode AI Platform — a one-click solution for your end-to-end model training and inferencing needs.