Choosing the right embedding model can make or break your Retrieval-Augmented Generation (RAG) system. The embedding model is the bridge between your data and the large language model, and it determines whether your system retrieves the right information or delivers irrelevant noise. With dozens of open-source and commercial options now available, finding the “best” embedding model is no longer straightforward.

In 2025, models like E5, BGE, and Mistral Embed are challenging established players such as OpenAI’s text-embedding models and Cohere Embed, offering competitive retrieval accuracy and lower latency, and in the case of E5 and BGE, open weights. At the same time, RAG has matured from a research prototype to a production-ready architecture, powering everything from internal knowledge assistants to domain-specific copilots.

This guide breaks down how to choose the right embedding model for your RAG pipeline, comparing performance, scalability, and use cases based on real benchmarks and community insights. Whether you’re optimizing cost, accuracy, or enterprise-grade reliability, you’ll find practical takeaways to help you build a faster, smarter retrieval layer for your AI systems.

Understanding the Core Concept

What is a Retrieval-Augmented Generation (RAG) System

Retrieval-Augmented Generation, or RAG, is an architecture designed to improve how large language models (LLMs) answer queries by combining retrieval of external knowledge with generative capabilities. In a pure LLM setup, the model relies solely on the information encoded in its parameters from training, which may become outdated or incomplete. RAG addresses this limitation by letting the model fetch relevant passages from a curated document store or database at inference time, then use those retrieved texts as additional context when generating its response.

In practice, a RAG system often proceeds in steps:

- Query embedding & retrieval: The user’s input is converted into a vector and matched against a database of document embeddings to fetch the top relevant passages.

- Augmentation of prompt/context: The retrieved passages are appended (or integrated) into the LLM’s input context (prompt).

- Generation / answer synthesis: The LLM then produces an answer using both its internal knowledge and the newly retrieved external facts.

- Optional citation or grounding: Some RAG systems also return references or citations to the documents used, improving traceability.
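The steps above can be sketched end to end in a few lines. This is a toy illustration only: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, the documents are invented, and the final LLM call is left out, so the sketch stops at the augmented prompt.

```python
import math
import re
from collections import Counter

def toy_embed(text):
    """Hypothetical stand-in for an embedding model: sparse word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, index, k=2):
    """Step 1: embed the query and return the k most similar passages."""
    q = toy_embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item["vector"]), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    """Steps 2 and 4: add retrieved text to the prompt, numbered for citation."""
    context = "\n".join(
        f"[{i + 1}] {p['text']} (source: {p['source']})"
        for i, p in enumerate(passages)
    )
    return (
        "Answer using only the context below and cite sources by number.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )

# Invented example documents, embedded once at indexing time.
documents = [
    {"source": "hr.md", "text": "Employees accrue 20 days of paid vacation per year."},
    {"source": "it.md", "text": "Reset your VPN password from the self-service portal."},
    {"source": "finance.md", "text": "Expense reports are due by the fifth day of each month."},
]
index = [{**doc, "vector": toy_embed(doc["text"])} for doc in documents]

question = "How many vacation days do employees get per year?"
top = retrieve(question, index, k=2)
prompt = build_prompt(question, top)  # Step 3 would send this prompt to the LLM.
print(prompt)
```

In a real pipeline, `toy_embed` would be replaced by the embedding model you are evaluating, and the sorted list by a vector database query.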

The key benefit of RAG is that it grounds generative AI in up-to-date, domain-specific data, without retraining the entire model. This makes RAG especially valuable for use cases where factual accuracy, domain relevance, and source accountability matter (e.g. enterprise knowledge assistants, specialized QA, legal or medical support).


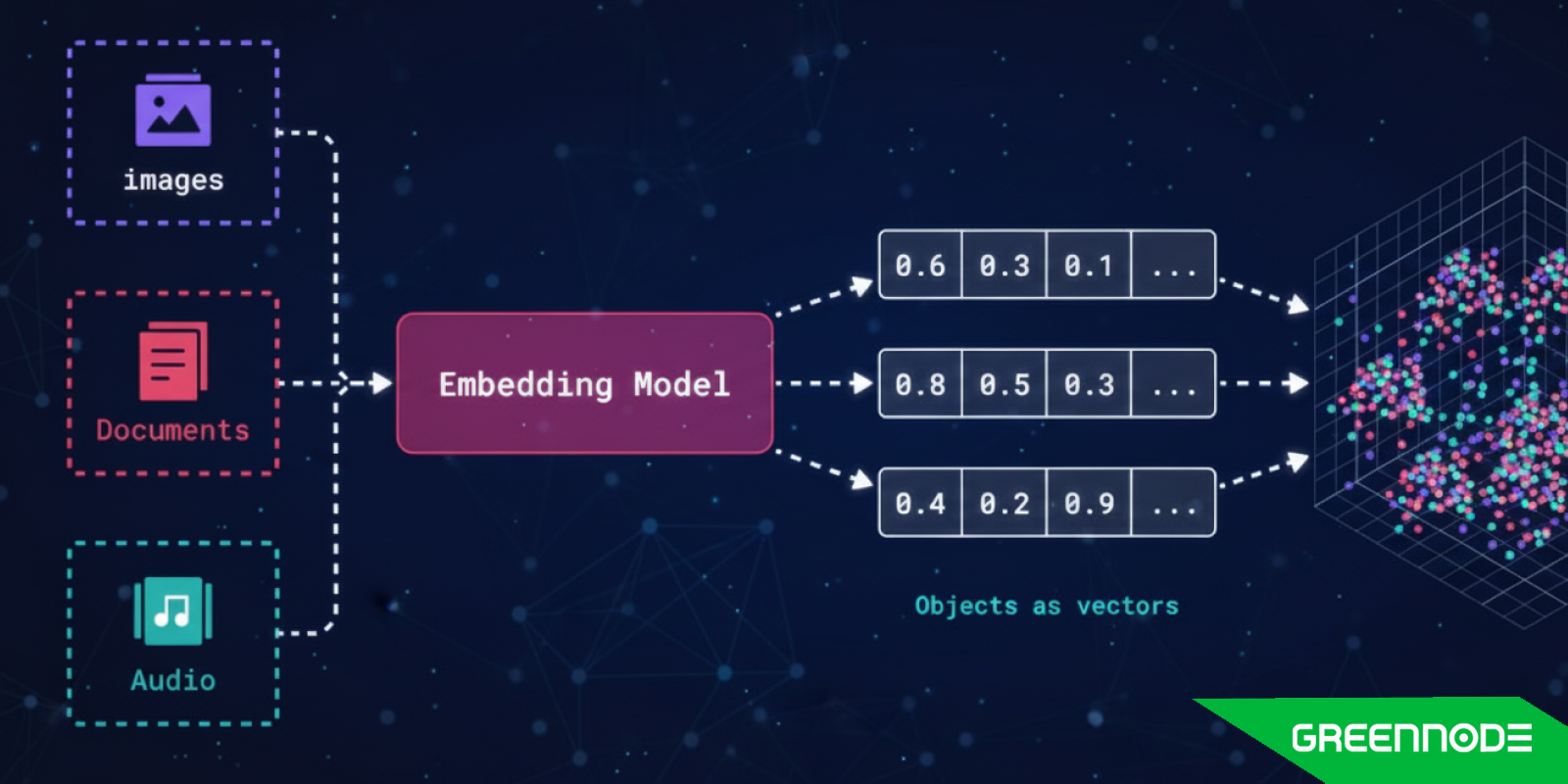

What is an Embedding Model

An embedding model is a machine learning component that transforms raw inputs (such as text, images, or audio) into fixed-length numerical vectors, commonly referred to as embeddings. These embeddings capture semantic or contextual meaning in a continuous vector space, such that inputs with similar meaning end up close together according to a similarity metric (e.g. cosine similarity or dot product).
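To make the two similarity metrics concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are made up for illustration; real embeddings have hundreds or thousands of dimensions.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Dot product divided by the product of the vector lengths."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

# Invented toy vectors: two related concepts and one unrelated one.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]

print(cosine_similarity(cat, kitten) > cosine_similarity(cat, invoice))

# On unit-length vectors the two metrics coincide, which is why many
# pipelines normalize embeddings once and then use the cheaper dot product.
print(abs(dot(l2_normalize(cat), l2_normalize(kitten)) - cosine_similarity(cat, kitten)) < 1e-9)
```

Cosine similarity ignores vector length, while the raw dot product does not, so check which metric a given model was trained with before configuring your vector index.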

In the context of RAG, embedding models are critical because:

- They convert both queries and documents into the same vector space so that similarity comparisons become feasible.

- They enable semantic search, not just keyword matching, so the system can find relevant passages even when exact words differ.

- The quality of embeddings directly influences retrieval accuracy: better embeddings help fetch more relevant passages, which in turn leads to more accurate generation.

- Embedding models differ by dimensionality, training data, architecture, latency, and domain specialization, all of which must be balanced when choosing one for RAG.

A simple analogy: if documents and queries were points on a map, the embedding model draws that map so that “nearby” means “semantically related.” The downstream retrieval step uses that map to pick the most relevant sources to feed into the language model.

What Role Do Embedding Models Play in RAG?

Embedding models are the connective tissue of any Retrieval-Augmented Generation (RAG) system. They transform both the user query and the documents in your knowledge base into vector representations, dense numerical arrays that capture semantic meaning rather than just surface-level keywords. This transformation allows the system to compare and retrieve information based on conceptual similarity, not simple string matching.

From Query to Vector Space

When a user submits a question, the embedding model converts it into a fixed-length vector. This vector lives in a multi-dimensional space where proximity represents related meaning. For instance, the phrases “AI model training” and “machine learning optimization” will produce embeddings that are very close to each other, even though they share few exact words.

This process allows RAG systems to understand intent rather than syntax, and it’s crucial when dealing with unstructured or diverse language inputs.

Retrieving the Right Context

Every document (or chunk of text) in the knowledge base has already been converted into its own embedding. The system uses vector similarity search via tools like Pinecone, Weaviate, or FAISS to compare the query embedding against all document embeddings and retrieve the closest matches.

In effect, the embedding model decides what “relevance” means. A higher-quality embedding model will produce tighter semantic clusters, improving recall and precision in retrieval. Poor embeddings, on the other hand, can lead to irrelevant or redundant context, hurting the accuracy of the final LLM response.

Feeding Context to the LLM

The retrieved passages are then appended to the prompt given to the large language model (LLM). Because the quality of retrieved text determines the grounding of the generated output, embedding models indirectly influence how factual and context-aware the model’s answers are.

For example:

- A robust embedding model can distinguish between “Apple the company” and “apple the fruit.”

- A weak embedding model might retrieve recipes when the user was asking about stock performance.

Efficiency and Scalability

Embedding models also determine how efficiently RAG systems operate at scale. The dimensionality of embeddings (e.g., 384, 768, 1024 dimensions) affects both memory requirements and vector database performance. High-dimensional embeddings often deliver better accuracy, but they come with higher compute and storage costs.

Modern models like E5, BGE, or Cohere Embed v3 are optimized to balance quality and latency, allowing faster indexing, cheaper retrieval, and better throughput for real-time RAG applications.

Continuous Adaptation and Domain Fit

Finally, embedding models can be fine-tuned or domain-adapted to improve retrieval in specialized areas such as legal, medical, or technical documentation. This customization is one of the biggest advantages of using open-source embeddings (like E5 or BGE) over closed commercial APIs, since teams can retrain on their proprietary data to reflect their organization’s specific vocabulary and style.


Criteria to Judge the Best Embedding Models for RAG

Not all embedding models are created equal. In a Retrieval-Augmented Generation (RAG) system, the right embedding model can dramatically improve retrieval accuracy, reduce latency, and lower operational costs. Evaluating which model fits best depends on a few key criteria that balance quality, speed, cost, and domain fit.

Retrieval Accuracy and Semantic Quality

The primary measure of any embedding model is how well it represents meaning. Good embeddings should bring semantically similar texts close together, even if they use different phrasing.

- Metrics to track: Recall@k, Precision@k, nDCG (normalized discounted cumulative gain).

- Benchmarks: The MTEB (Massive Text Embedding Benchmark) and BEIR suites are standard references for evaluating embedding models on diverse retrieval and classification tasks.

- Example: Models like E5-Large and BGE-M3 score well on these benchmarks and generally outperform older baselines like SBERT in multilingual or domain-general tasks. Leaderboard positions change often, so check current results before deciding.
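The three metrics listed above are simple to compute on your own labeled queries. The sketch below uses the standard definitions; the document IDs and relevance grades are invented for illustration.

```python
import math

def precision_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the top-k results that are relevant."""
    return sum(1 for d in ranked_ids[:k] if d in relevant_ids) / k

def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of all relevant documents that appear in the top k."""
    if not relevant_ids:
        return 0.0
    return sum(1 for d in ranked_ids[:k] if d in relevant_ids) / len(relevant_ids)

def ndcg_at_k(ranked_ids, relevance, k):
    """nDCG with graded gains; `relevance` maps doc id -> gain (missing = 0)."""
    dcg = sum(
        relevance.get(d, 0) / math.log2(rank + 2)
        for rank, d in enumerate(ranked_ids[:k])
    )
    ideal_gains = sorted(relevance.values(), reverse=True)[:k]
    idcg = sum(g / math.log2(rank + 2) for rank, g in enumerate(ideal_gains))
    return dcg / idcg if idcg else 0.0

# Invented example: what the retriever returned vs. what a human labeled.
ranked = ["d3", "d1", "d7", "d2", "d9"]
relevant = {"d1", "d2", "d4"}
graded = {"d1": 3, "d2": 2, "d4": 1}

print(f"Precision@3: {precision_at_k(ranked, relevant, 3):.2f}")
print(f"Recall@5:    {recall_at_k(ranked, relevant, 5):.2f}")
print(f"nDCG@5:      {ndcg_at_k(ranked, graded, 5):.2f}")
```

Running the same queries through two candidate models and comparing these numbers on your own corpus is usually more informative than public leaderboard scores alone.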

High retrieval accuracy ensures your RAG system retrieves relevant, diverse, and factual context for the language model, the foundation for reliable responses.

Latency and Throughput

Embedding models vary widely in inference speed. Larger models tend to produce more accurate embeddings, but they also require more compute and time to process queries.

- Why it matters: In production-grade RAG pipelines (e.g., chatbots or search assistants), latency directly affects user experience.

- Rule of thumb: Models that can embed a query within roughly 50–100 ms are suitable for real-time retrieval.

- Optimization tip: Frameworks like TensorRT, ONNX Runtime, or vLLM can help accelerate embedding generation with little or no quality loss.
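A quick way to check a model against the latency budget above is to time it on a sample of real queries and look at percentiles rather than the average. In this sketch, `fake_embed` is a hypothetical placeholder; swap in a call to the model or API you are testing.

```python
import math
import statistics
import time

def measure_latency_ms(embed_fn, queries, warmup=3):
    """Time embed_fn per query and report p50, p95, and max in milliseconds."""
    for q in queries[:warmup]:
        embed_fn(q)  # warm-up calls are not measured
    samples = []
    for q in queries:
        start = time.perf_counter()
        embed_fn(q)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)  # nearest-rank p95
    return {
        "p50": statistics.median(samples),
        "p95": samples[p95_index],
        "max": samples[-1],
    }

def fake_embed(text):
    """Hypothetical stand-in; replace with your real embedding call."""
    return [float(len(token)) for token in text.split()]

report = measure_latency_ms(fake_embed, ["what is retrieval augmented generation"] * 200)
within_budget = report["p95"] <= 100.0  # upper end of the rule of thumb above
print(report, within_budget)
```

Measuring p95 matters because a model that is fast on average can still feel slow if one query in twenty stalls.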

Vector Dimensionality and Storage Cost

Each embedding model outputs vectors of a certain dimensionality (e.g., 384, 768, 1024, 1536 dimensions).

- Higher-dimensional vectors capture richer semantics but increase storage size and search complexity in vector databases.

- For large-scale RAG systems with millions of documents, this cost can grow quickly.

Example:

- OpenAI’s text-embedding-3-large (3072 dims) offers excellent accuracy but higher storage overhead.

- BGE-base (768 dims) or E5-small (384 dims) deliver good trade-offs for enterprise-scale deployments.

Your choice should align with your infrastructure’s capacity and your precision requirements.
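The storage side of this trade-off is simple arithmetic. The sketch below assumes each vector is stored as uncompressed 32-bit floats and counts only the raw vectors, so index structures, metadata, and replication would add to these figures.

```python
def index_size_gib(num_vectors, dims, bytes_per_value=4):
    """Raw vector storage in GiB, assuming float32 values by default."""
    return num_vectors * dims * bytes_per_value / 2**30

for dims in (384, 768, 3072):
    size = index_size_gib(1_000_000, dims)
    print(f"{dims:>4} dims: {size:.2f} GiB per million vectors")
# Prints:
#  384 dims: 1.43 GiB per million vectors
#  768 dims: 2.86 GiB per million vectors
# 3072 dims: 11.44 GiB per million vectors
```

Going from 384 to 3,072 dimensions multiplies raw storage by eight, which is why dimensionality deserves a place in the decision alongside accuracy.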

Domain Fit and Adaptability

Some embedding models are trained on general web data, while others are optimized for specific domains (e.g., code, medical text, legal documents).

- General-purpose models (like E5 or OpenAI’s text-embedding-3 series) perform well across varied topics.

- Domain-specific models (like BioBERT or Legal-BERT) excel in specialized vocabulary and context.

- Fine-tuning or adapter-based training (LoRA, PEFT) allows you to align embeddings with your proprietary corpus, boosting relevance.

If your RAG system targets a focused knowledge domain, adapting the embedding model will yield more consistent retrieval quality.

Cost, Licensing, and Deployment Constraints

Finally, practical considerations often dictate what’s feasible.

- Open-weight models (e.g., BGE, E5) are flexible for on-premise or sovereign AI setups.

- Proprietary APIs (like OpenAI or Cohere) offer plug-and-play simplicity but come with per-request fees and data compliance limitations.

- Deployment options: Self-hosted models can be served with SentenceTransformers or NVIDIA NIM for optimized inference, and wired into a pipeline with orchestration frameworks like LangChain.

When building RAG systems for enterprise or government use, it’s essential to evaluate not just technical performance but also data governance and compliance requirements.

Best Embedding Models for RAG in 2025

The landscape of embedding models has evolved rapidly over the past year. As RAG systems move from research experiments to production-grade applications, developers and enterprises now have a growing set of both commercial and open-source embedding models to choose from. Each model offers its own trade-offs between accuracy, latency, cost, and domain adaptability.

Below are the best embedding models for RAG to consider in 2025, based on benchmark results (MTEB, BEIR), community adoption, and enterprise reliability.

E5 Family (Microsoft Research / Hugging Face)

Type: Open-source (English & multilingual)

Best for: General-purpose RAG, multilingual search, question answering

E5 models, particularly E5-Large-V2 and E5-Mistral, have become a common default for open-source RAG pipelines. They use contrastive pretraining on web-scale datasets, aligning queries and passages directly for retrieval tasks.
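Contrastive training of this kind can be illustrated with an InfoNCE-style loss over in-batch negatives. This is a generic sketch of the idea, not E5’s actual training code: the temperature value and the toy vectors are assumptions chosen for readability.

```python
import math

def info_nce_loss(query_vecs, passage_vecs, temperature=0.05):
    """Mean contrastive loss where passage_vecs[i] is the positive for
    query_vecs[i] and every other passage in the batch is a negative."""
    losses = []
    for i, q in enumerate(query_vecs):
        # Similarity of this query to every passage, scaled by temperature.
        logits = [sum(a * b for a, b in zip(q, p)) / temperature for p in passage_vecs]
        # Numerically stable log-sum-exp over the batch.
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
        # Cross-entropy with the matching passage as the correct class.
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)

# Invented unit vectors: each query points the same way as its own passage.
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned_passages = [[1.0, 0.0], [0.0, 1.0]]
swapped_passages = [[0.0, 1.0], [1.0, 0.0]]

print(info_nce_loss(queries, aligned_passages))  # close to zero
print(info_nce_loss(queries, swapped_passages))  # large
```

Minimizing this loss pulls each query toward its paired passage and pushes it away from the others, which is what makes the resulting vectors useful for retrieval.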

Why it stands out:

- High performance on MTEB and BEIR leaderboards

- Multilingual capability (via multilingual-e5-base)

- Available in multiple sizes (small → large), suitable for both research and enterprise deployment

Use case: A company building a multilingual internal search or chatbot can use intfloat/multilingual-e5-large for strong cross-lingual retrieval.
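One practical detail when using E5 checkpoints: they expect a `query: ` prefix on search queries and a `passage: ` prefix on documents, and skipping the prefixes tends to hurt retrieval quality. The sketch below assumes the `sentence-transformers` package is installed and that the model weights can be downloaded; the helper names are illustrative.

```python
def format_e5_inputs(queries, passages):
    """Add the role prefixes that E5 models are trained with."""
    return [f"query: {q}" for q in queries], [f"passage: {p}" for p in passages]

def embed_with_e5(queries, passages, model_name="intfloat/multilingual-e5-large"):
    """Return a (num_queries x num_passages) matrix of cosine similarities."""
    # Imported lazily so format_e5_inputs works without the dependency.
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_name)
    q_texts, p_texts = format_e5_inputs(queries, passages)
    # Normalized embeddings make the dot product equal to cosine similarity.
    q_vecs = model.encode(q_texts, normalize_embeddings=True)
    p_vecs = model.encode(p_texts, normalize_embeddings=True)
    return q_vecs @ p_vecs.T

q_texts, p_texts = format_e5_inputs(
    ["how do I reset my VPN password"],
    ["VPN-Passwort im Self-Service-Portal zurücksetzen."],
)
print(q_texts, p_texts)
```

Calling `embed_with_e5` downloads the model on first use, so it is left out of the example run above.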

BGE (BAAI General Embedding)

Type: Open-source (Beijing Academy of Artificial Intelligence)

Best for: Scalable RAG pipelines, multilingual and fine-tuned applications

The BGE family (e.g., bge-base-en-v1.5, bge-m3) offers competitive retrieval accuracy and flexible architectures. These models have strong multilingual support and are optimized for fine-tuning using domain-specific data.

Why it stands out:

- Performs consistently across languages and domains

- Efficient for GPU and CPU inference

- Excellent candidate for fine-tuning or adapter integration

Use case: Enterprise RAG in finance or law, where precision in multilingual documents matters.

Mistral Embed / Mixtral-based Embeddings

Type: Hosted API (Mistral AI); open-weight Mistral-based embedders such as E5-Mistral are available separately

Best for: Lightweight, high-speed embedding generation

Following the success of Mistral’s language models, the Mistral Embed models bring high throughput and solid semantic representation for retrieval tasks. Their architecture balances accuracy and latency, making them ideal for real-time RAG applications.

Why it stands out:

- Lower latency compared to larger open models

- Tuned for semantic search and re-ranking tasks

- Efficient for deployment on smaller GPU nodes

Use case: AI copilots or real-time customer support systems requiring rapid query embedding and response generation.

OpenAI Text-Embedding 3 Series

Type: Proprietary API

Best for: Production-ready RAG with stable infrastructure

The text-embedding-3-small and text-embedding-3-large models provide state-of-the-art performance for English and multilingual retrieval. Despite being closed-source, their reliability, scalability, and consistent latency make them one of the most popular choices in production systems.

Why it stands out:

- Up to 3,072-dimensional embeddings with high semantic fidelity (text-embedding-3-large; text-embedding-3-small outputs 1,536 dimensions)

- Stable API, easy integration with vector databases (Pinecone, Qdrant, Weaviate)

- Strong performance on MTEB and internal enterprise benchmarks

Use case: Enterprises that prioritize ease of use, uptime, and managed scaling over on-premise control.

Cohere Embed v3

Type: Proprietary API

Best for: Enterprise RAG on fully managed infrastructure

Cohere’s Embed v3 models are tuned for semantic search and clustering, enabling accurate retrieval from knowledge bases or PDFs. They’re also API-accessible, making them suitable for developers who prefer fully managed infrastructure.

Why it stands out:

- English and multilingual variants with a 512-token input limit, which pairs well with chunked knowledge bases

- Offers domain-specific fine-tuning APIs

- Optimized for semantic search and hybrid RAG applications

Use case: Retrieval pipelines for knowledge-heavy domains like research, education, or technical documentation.

Other Emerging and Specialized Models

Several specialized embedding models are gaining attention:

- NV-Embed / NV-Retriever (NVIDIA): Optimized for enterprise RAG and hardware acceleration via NIM microservices.

- GTE-large / GritLM: Emerging open alternatives with competitive MTEB performance.

- LaBSE / Universal Sentence Encoder: Proven legacy models for multilingual RAG and translation search.

These models are valuable for specific infrastructure needs or when integrating with GPU-optimized serving environments.

How to Choose the Right Embedding Model for Your RAG System

Selecting the right embedding model is less about picking the “most powerful” option and more about finding one that fits your use case, infrastructure, and data. The best model for a chatbot might not suit a research assistant or document retrieval system. Below are four practical lenses for making the right choice.

Use Case–Based Decision

Different RAG applications rely on embeddings in distinct ways:

Question Answering (QA): Choose models optimized for semantic precision and context understanding, such as E5-Large or BGE-M3. They handle short queries well and retrieve precise supporting passages.

Document Retrieval / Knowledge Search: For systems scanning thousands of long documents, prioritize high recall and check each model’s maximum input length, since it determines how you chunk. Cohere Embed v3 or OpenAI text-embedding-3-large are strong candidates.

Summarization Pipelines: Use embeddings with good semantic clustering ability to group related passages before generating summaries. BGE-base or Mistral Embed perform well for clustering and context grouping.

Conversational / Chat Assistants: Look for low-latency models suited for real-time query handling. Mistral Embed or E5-Small balance accuracy and speed for production chat interfaces.

Tip: If you plan to build multi-function RAG apps (e.g., QA + summarization), benchmark embeddings across all target workflows before committing.

Budget & Cost vs. Performance Trade-Off

Embedding costs can scale quickly, especially when processing millions of documents or handling continuous user queries. You’ll need to weigh accuracy against cost:

- Open-source models (like E5, BGE, or Mistral Embed) offer excellent value for teams that can manage hosting and inference themselves.

- Commercial APIs (like OpenAI or Cohere) simplify integration but charge per token, which can add up in data-heavy systems.
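A back-of-the-envelope estimate helps make the API side of this comparison concrete. The price below is a hypothetical placeholder, not any provider’s actual rate, and the corpus and query sizes are invented; substitute your own numbers and the provider’s current pricing.

```python
def embedding_api_cost(num_texts, avg_tokens_per_text, price_per_million_tokens):
    """Estimated cost of embedding num_texts texts through a per-token API."""
    total_tokens = num_texts * avg_tokens_per_text
    return total_tokens / 1_000_000 * price_per_million_tokens

ASSUMED_PRICE = 0.10  # hypothetical USD per million tokens

# One-time cost to index 5 million chunks of about 400 tokens each.
one_time_indexing = embedding_api_cost(5_000_000, 400, ASSUMED_PRICE)

# Recurring cost for 3 million queries a month at about 30 tokens each.
monthly_queries = embedding_api_cost(3_000_000, 30, ASSUMED_PRICE)

print(f"Indexing: ${one_time_indexing:,.2f}")
print(f"Queries:  ${monthly_queries:,.2f} per month")
```

Under these assumptions indexing dominates the bill, and it is paid again every time you switch models, because the whole corpus has to be re-embedded.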

If you expect high query volumes or need to index large datasets, consider:

- Smaller vector sizes (e.g., 384–768 dimensions) to reduce storage and compute cost.

- Batch encoding and asynchronous pipelines to optimize throughput.
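Batching and bounded concurrency can be combined in a small asynchronous helper. In this sketch, `fake_embed_batch` is a hypothetical stand-in for an async call to your embedding service, and the batch size and concurrency limit are illustrative defaults rather than recommendations.

```python
import asyncio

def batched(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

async def embed_corpus_async(texts, embed_batch_fn, batch_size=64, max_concurrency=4):
    """Embed texts in batches, with at most max_concurrency requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def run(batch):
        async with semaphore:
            return await embed_batch_fn(batch)

    # gather returns results in submission order, so vectors stay aligned with texts.
    results = await asyncio.gather(*(run(b) for b in batched(texts, batch_size)))
    return [vec for batch_vecs in results for vec in batch_vecs]

async def fake_embed_batch(batch):
    """Hypothetical stand-in for a network call to an embedding service."""
    await asyncio.sleep(0)
    return [[float(len(text))] for text in batch]

corpus = [f"doc {i}" for i in range(150)]
vectors = asyncio.run(embed_corpus_async(corpus, fake_embed_batch))
print(len(vectors))
```

The semaphore keeps the pipeline from overwhelming a rate-limited API or a single GPU server while still overlapping request latency.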

Rule of thumb: Start with open models for experimentation, then upgrade to managed APIs once cost and scale are predictable.

Infrastructure Constraints

Your available infrastructure heavily influences which model you can deploy efficiently.

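GPU Memory: API-hosted models such as OpenAI’s text-embedding-3-large need no local GPU at all. Among self-hosted models, 7B-parameter embedders like E5-Mistral need substantial VRAM (about 14 GB for the weights alone in half precision), while E5-Large, BGE-Base, and E5-Small each have well under a billion parameters and fit on modest GPUs or even CPUs.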

Vector Databases: The choice of vector DB (Pinecone, Weaviate, Chroma, Qdrant) dictates how embedding size and indexing scale affect latency.

- Lower-dimension vectors = faster search, smaller index size.

- Higher-dimension vectors = richer semantics, but slower search and higher memory use.

Deployment Environment: If you run on cloud infrastructure like AWS or GCP, managed services with auto-scaling (e.g., Pinecone or Vertex AI Matching Engine) simplify scaling. For sovereign AI or on-prem setups, consider self-hosted solutions with FAISS or Milvus for more control.

Domain Adaptation & Fine-Tuning Strategy

Out-of-the-box embedding models are trained largely on general web data. They work well for broad tasks but are often weak on domain-specific jargon. Fine-tuning helps align embeddings with your internal corpus.

Domain-Specific Fine-Tuning: Use frameworks like SentenceTransformers or Hugging Face PEFT to fine-tune on question–answer or document pairs from your domain (legal, medical, financial, etc.).

Parameter-Efficient Techniques: Apply LoRA or adapter tuning to improve domain adaptation without retraining the full model.

Continual Learning: Refresh embeddings periodically if your data changes often (e.g., regulatory or scientific updates).
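Periodic refreshes do not have to re-embed everything. One common approach, sketched here with hypothetical document IDs and an in-memory hash store, is to fingerprint each document and re-embed only what changed.

```python
import hashlib

def content_hash(text):
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def plan_refresh(current_docs, stored_hashes):
    """Compare current documents with the hashes saved at last embedding time.

    current_docs: doc id -> current text
    stored_hashes: doc id -> hash recorded when the doc was last embedded
    Returns (ids to embed or re-embed, ids to delete from the index).
    """
    to_embed = [
        doc_id for doc_id, text in current_docs.items()
        if stored_hashes.get(doc_id) != content_hash(text)
    ]
    to_delete = [doc_id for doc_id in stored_hashes if doc_id not in current_docs]
    return to_embed, to_delete

# Invented example state from a previous indexing run.
stored = {
    "policy-1": content_hash("Retention period is 5 years."),
    "policy-2": content_hash("Audits happen annually."),
    "policy-3": content_hash("Obsolete guidance."),
}
current = {
    "policy-1": "Retention period is 7 years.",  # edited
    "policy-2": "Audits happen annually.",       # unchanged
    "policy-4": "New disclosure rules apply.",   # new
}

to_embed, to_delete = plan_refresh(current, stored)
print(to_embed, to_delete)
```

This shortcut only applies while the model stays the same. After fine-tuning or switching models, vectors from the old and new model are not comparable, so the full corpus needs re-embedding.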

Example: A healthcare company fine-tuning BGE-base on internal research abstracts saw a 15–20% increase in retrieval accuracy for clinical queries.

To sum up, when choosing an embedding model for your RAG system:

- Match model scale to your use case and infrastructure.

- Balance accuracy against latency and cost.

- Fine-tune for domain relevance whenever possible.

There’s no single “best” embedding model, only the one best aligned with your retrieval goals, data domain, and operational limits.

Explore GreenMind Embedding: Turning Text Into Meaningful Intelligence

Modern AI systems don’t just need to read text—they need to understand it. That’s where GreenMind Embedding (GreenNode-Embedding-Large-1007) comes in.

Developed by GreenNode AI, GreenMind Embedding is a large-scale text embedding model built to convert text into high-dimensional numerical vectors that preserve deep semantic meaning. Instead of relying on surface-level keyword matching, the model captures context, intent, and relationships between pieces of text, making it a critical building block for intelligent search and retrieval systems.

Built for Quality, Not Just Speed

The Large architecture of GreenNode-Embedding-Large-1007 is optimized for embedding quality and semantic accuracy. Compared to smaller or compressed embedding models, it produces denser and more expressive vectors—resulting in noticeably better performance in similarity search, clustering, and classification tasks.

The 1007 release represents a stable, production-ready version, tuned for real-world workloads where consistency and reliability matter as much as raw model capability.

Where GreenMind Embedding Excels

GreenMind Embedding is designed for teams building AI products that rely on understanding text at scale, including:

- Semantic search that retrieves results based on meaning, not keywords

- Advanced information retrieval and ranking for knowledge bases and RAG pipelines

- Clustering and classification of large text datasets

- Recommendation systems driven by semantic similarity

- Applications requiring a nuanced understanding of text similarity and context

A Core Building Block for Production AI

From enterprise search to agent memory and retrieval layers, GreenMind Embedding provides a reliable semantic foundation for modern AI systems. By encoding language into rich, meaningful embeddings, it enables downstream models and applications to reason over text with far greater precision—bridging the gap between raw language and real intelligence.

Try GreenMind Embedding Today

Ready to see the difference high-quality embeddings make? Log in to the GreenNode platform to explore GreenMind Embedding, run your own text, and evaluate real-world performance in minutes.