Imagine you are an ordinary person with a huge document collection made up of texts, images, tables, figures, and more. You want to use it for your research or study by finding the exact piece of information you need.

In the traditional approach, information is written on paper, bound into books, and stored on a bookshelf. To find a topic, you go to a section you created, such as math, literature, or geography, and then narrow down to a sub-topic such as statistics or linear algebra. Finally, you must locate the exact chapter or page that holds your information.

Today, we store information in digital form. To find something, we type a query into a search engine (Google Search, Bing, etc.), and the engine shows us the matching results.

But how does it work, you may ask? Because this blog focuses on document retrieval, we will explain the process through the lens of text search.

Understanding the Building Blocks of Document Retrieval

Before delving into embedding models, let's familiarize ourselves with the fundamental concepts of text-based information retrieval. Imagine your document collection as a vast library filled with books on various topics. This collection is referred to as a corpus. When you search for information, the question or phrase you enter is called a query. For instance, if you're studying machine learning, your query might be "What is a neural network?"

Upon receiving a query, a search system processes it by analyzing your question, locating relevant information, evaluating the results, and presenting the most pertinent ones. There are three common approaches to this search process: full-text search, semantic search, and vector search.


Full-Text Search

Full-text search involves matching a text query with documents stored in a database, excelling at providing results even when the match is partial. This makes it a flexible tool for creating user-friendly search interfaces where exact matches aren't always necessary.

How It Works:

Full-text search matches the words in a query against the words in each document, so it captures the literal (lexical) content of the query rather than its meaning. While it works well for specific terms, it struggles with vague or context-dependent queries.
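To make lexical matching concrete, here is a minimal Python sketch of keyword search over an inverted index. It assumes simple lowercase tokenization and scores each document by the number of distinct query terms it shares; production full-text engines use richer scoring such as BM25, plus stemming and fuzzy matching. The documents and function names are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/digits; real engines also stem and drop stop words.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    # Inverted index: each term points to the ids of the documents that contain it.
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def keyword_search(query: str, index: dict[str, set[str]]) -> list[tuple[str, int]]:
    # Score each document by how many distinct query terms it contains.
    scores: dict[str, int] = defaultdict(int)
    for term in set(tokenize(query)):
        for doc_id in index.get(term, ()):
            scores[doc_id] += 1
    # Highest overlap first; ties broken by document id for a stable order.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


docs = {
    "d1": "Return of the Jedi is the third Star Wars film.",
    "d2": "A neural network is a model made of layers of neurons.",
    "d3": "Winter coats and scarves keep you warm.",
}
index = build_index(docs)
print(keyword_search("What is a neural network?", index))        # [('d2', 4), ('d1', 1)]
print(keyword_search("folks fighting with lightsabers", index))  # []
```

The second query returns nothing because no document shares a word with it, which is the vague-query limitation described in this section.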

Key Strengths:

- Corrects typos – e.g., return of the jedi

- Handles precise queries – e.g., exact product names

- Supports incomplete queries – e.g., return of the j
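Typo and prefix tolerance usually come from string-level matching rather than from any understanding of meaning. The sketch below assumes a small hypothetical list of titles, uses Python's standard `difflib` module for approximate matching, and uses a plain prefix check for incomplete queries. The misspelling "retrun" is our own example, and the 0.8 similarity cutoff is an arbitrary choice.

```python
import difflib

titles = ["return of the jedi", "the empire strikes back", "a new hope"]


def fuzzy_title_search(query: str, titles: list[str], cutoff: float = 0.8) -> list[str]:
    q = query.lower().strip()
    # Prefix matching handles incomplete queries such as "return of the j".
    prefix_hits = [t for t in titles if t.startswith(q)]
    if prefix_hits:
        return prefix_hits
    # Otherwise fall back to approximate string matching to tolerate typos.
    return difflib.get_close_matches(q, titles, n=3, cutoff=cutoff)


print(fuzzy_title_search("return of the j", titles))     # ['return of the jedi']
print(fuzzy_title_search("retrun of the jedi", titles))  # ['return of the jedi']
```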

Key Limitations:

- Cannot handle vague queries – e.g., folks fighting with lightsabers

- Lacks contextual understanding – e.g., winter clothes

In essence, full-text search is effective for retrieving results where exact or partial keyword matches are important, but it falls short when user queries require understanding beyond the keywords.

Semantic Search

Semantic search offers a more advanced approach by focusing on intent rather than keywords alone. Powered by technologies like Natural Language Processing (NLP) and Machine Learning (ML), semantic search is designed to grasp the deeper meaning behind a query, thus generating more relevant, contextually accurate results.

How It Works:

Instead of just matching words, semantic search deciphers the relationships between words, concepts, and entities. By leveraging NLP and knowledge graphs, it can identify not just what the user is asking, but the underlying intent and context of their query. This leads to more nuanced and personalized results.
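One simplified way to picture how a knowledge graph helps is query expansion: concepts mentioned in the query are linked to related terms, and documents that match those terms are retrieved as well. The sketch below assumes a tiny, hand-built, hypothetical concept graph and uses a substring check in place of real entity linking; production semantic search systems are far more sophisticated.

```python
import re

# Hypothetical, hand-built concept graph: each concept links to related terms.
CONCEPT_GRAPH: dict[str, set[str]] = {
    "winter clothes": {"coat", "scarf", "gloves", "sweater"},
    "lightsaber": {"jedi", "sith", "star wars"},
}


def expand_query(query: str, graph: dict[str, set[str]]) -> set[str]:
    """Return the query's own words plus terms linked to any concept it mentions."""
    q = query.lower()
    terms = set(re.findall(r"[a-z]+", q))
    for concept, related in graph.items():
        if concept in q:  # simple substring check stands in for entity linking
            terms |= related
    return terms


def concept_search(query: str, docs: dict[str, str], graph: dict[str, set[str]]) -> list[str]:
    terms = expand_query(query, graph)
    hits = []
    for doc_id, text in docs.items():
        lowered = text.lower()
        # Whole-word (or whole-phrase) match, so "coat" does not match "coating".
        if any(re.search(rf"\b{re.escape(t)}\b", lowered) for t in terms):
            hits.append(doc_id)
    return hits


docs = {
    "d1": "A warm wool coat for cold days.",
    "d2": "Lightweight running shorts.",
}
print(concept_search("winter clothes", docs, CONCEPT_GRAPH))  # ['d1']
```

With an empty graph the same query finds nothing, since neither "winter" nor "clothes" appears in either document.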

Key Strengths:

- Understands language nuances – providing contextually accurate results

- Personalizes search – learns user preferences and adapts over time

- Improves relevance – delivers results that are conceptually aligned with user intent

Semantic search represents a leap forward from traditional keyword-based search, offering a far more intuitive experience. It goes beyond identifying isolated terms to uncovering the relationships between words and their meanings, paving the way for more personalized, context-aware searches.

Vector Search

Vector search is an AI-powered method for semantic retrieval that goes beyond text matching to find documents that are semantically similar to a query. Instead of comparing words directly, it transforms both queries and documents into vector representations, mathematical structures that encode meaning. Because these vectors are produced by embedding models, which are often built on the same transformer architecture as Large Language Models (LLMs), vector search can handle vague or complex queries.

How It Works:

Vector search converts text, images, or other inputs into multi-dimensional vectors. These vectors capture the essence of the data, allowing for semantic comparisons rather than word-for-word matching. This capability makes vector search a powerful tool for ambiguous queries and multi-modal searches, such as image-based searches.
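At its core, vector search ranks documents by how similar their vectors are to the query vector. The sketch below uses cosine similarity over hand-made three-dimensional vectors; these numbers are invented for illustration, whereas a real embedding model would produce vectors with hundreds of dimensions. The brute-force loop compares the query with every document, which is also why vector search can be computationally expensive at scale.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # cos(a, b) = (a . b) / (|a| |b|); 1.0 means same direction, 0.0 means orthogonal.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def vector_search(query_vec, doc_vecs, k=2):
    # Brute force: compare the query against every document vector, keep the top k.
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Invented 3-d vectors; the axes loosely mean (sci-fi, clothing, cooking).
doc_vecs = {
    "star_wars_1977": [0.90, 0.00, 0.10],
    "wool_coat": [0.00, 0.95, 0.05],
    "soup_recipe": [0.05, 0.10, 0.90],
}
# Pretend embedding of the query "first released Star Wars movie".
query_vec = [0.85, 0.05, 0.00]
print(vector_search(query_vec, doc_vecs, k=1))
```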

Key Strengths:

- Handles vague queries – e.g., first released Star Wars movie

- Understands context – e.g., winter clothes

- Suggests similar documents – enhances content discovery

Key Limitations:

- Struggles with precise queries – lacks the pinpoint accuracy of keyword-based searches

- Requires more computational resources – due to the complex nature of vector comparisons

Vector search is ideal for queries that require deeper semantic understanding or when users provide vague or multi-modal inputs. However, it may struggle when precise, exact matches are needed, and it demands more processing power compared to full-text search.

Why use vector search?

Vector search excels in situations where queries are vague or contextual. This is why it's widely used in Retrieval Augmented Generation (RAG) systems, where understanding the user’s intent is essential. Beyond text, vector search also shines in applications such as image search or multi-modal searches, making it a versatile and scalable solution for modern search challenges.

In the next part of this blog, we will dive deeper into embeddings and how they work behind the scenes to convert text, images, and other data into vectors that drive efficient and effective vector search.

Decoding Embedding Models: How They Work and Why They Matter

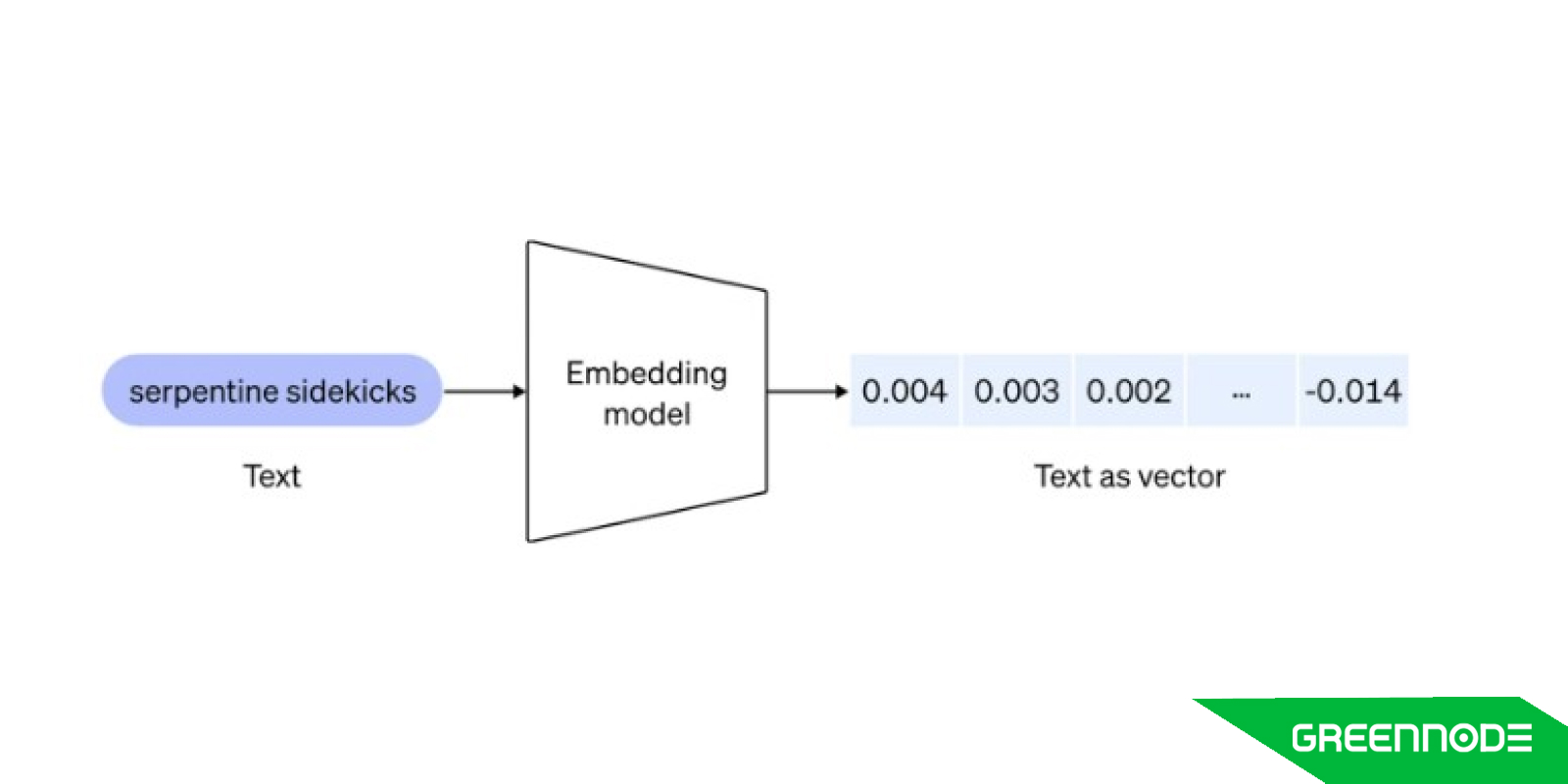

Embeddings are numerical representations of words (or longer pieces of text) that capture their semantic and syntactic meaning. Embedding models are neural networks designed to generate these representations for inputs such as text, which makes them invaluable in Natural Language Processing (NLP) tasks.

Here's how embedding models function in practice:

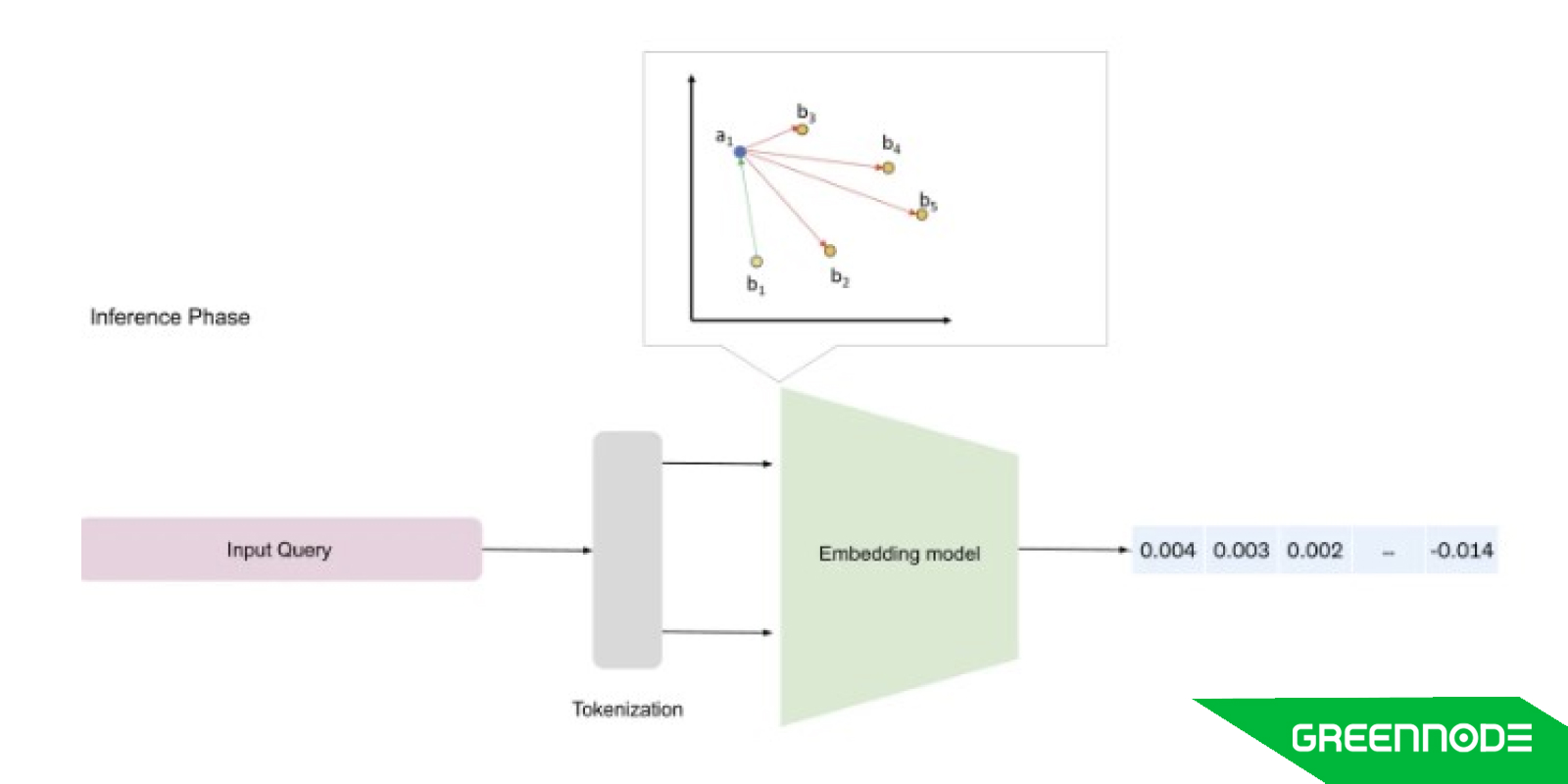

Tokenization: The input data (like a sentence or document) is broken down into smaller units, such as words or subwords, to make processing easier. Each token is then mapped to a unique integer index, which the model uses during both training and inference.

Example: “hello, how are you?” → Tokenization → [2, 41, 531, 12, 52]
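A minimal word-level tokenizer can illustrate the mapping from text to integer indices. This sketch assumes a vocabulary built from a two-sentence hypothetical corpus and a reserved `[UNK]` id for unseen words. Real embedding models typically use subword tokenizers such as WordPiece or BPE, so the ids here will not match the example above.

```python
import re


def split_tokens(text: str) -> list[str]:
    # Words and individual punctuation marks become separate tokens.
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(corpus: list[str]) -> dict[str, int]:
    # Index 0 is reserved for tokens never seen when the vocabulary was built.
    vocab = {"[UNK]": 0}
    for text in corpus:
        for token in split_tokens(text):
            vocab.setdefault(token, len(vocab))
    return vocab


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    return [vocab.get(token, vocab["[UNK]"]) for token in split_tokens(text)]


vocab = build_vocab(["hello, how are you?", "how is the weather?"])
print(encode("hello, how are you?", vocab))  # [1, 2, 3, 4, 5, 6]
print(encode("how are they?", vocab))        # [3, 4, 0, 6]
```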

- Embedding Initialization: The model initializes an embedding matrix, where each row represents a vector for a token in the vocabulary. These vectors start with random values and are refined as the model learns.

- Training/Fine-Tuning: During training, the model adjusts the embedding vectors to minimize the distance between similar words (e.g., "cat" and "kitten") while maximizing the distance between dissimilar ones. The model learns from observing how words appear together in sentences, creating meaningful relationships between tokens based on their context.

- Inference: After training, when the model receives an input, it retrieves the corresponding embedding vectors for each token. These vectors serve as a rich, compact representation of the data, capturing the underlying semantic information for use in downstream tasks like sentiment analysis, translation, or search.
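The initialization, training, and inference steps can be sketched with NumPy on a three-word vocabulary. This is a toy under stated assumptions: the vocabulary, the 8-dimensional size, and the single training triplet are hypothetical; the loss is a simple margin-based contrastive objective chosen for illustration; and inference uses mean pooling, one common way to turn token vectors into a single vector. Real models train on large corpora and apply transformer layers between the lookup and the pooling.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = {"cat": 0, "kitten": 1, "car": 2}
dim = 8

# Embedding initialization: one small random row per token in the vocabulary.
E = rng.normal(scale=0.1, size=(len(vocab), dim))


def sq_dist(a: np.ndarray, b: np.ndarray) -> float:
    diff = a - b
    return float(diff @ diff)


def train_step(E, anchor, positive, negative, margin=1.0, lr=0.1):
    """One gradient step on ||a - p||^2 + max(0, margin - ||a - n||^2)."""
    a, p, n = E[anchor].copy(), E[positive].copy(), E[negative].copy()
    grad = np.zeros_like(E)
    # Pull the similar pair together.
    grad[anchor] += 2 * (a - p)
    grad[positive] -= 2 * (a - p)
    # Push the dissimilar pair apart, but only while it is inside the margin.
    if margin - sq_dist(a, n) > 0:
        grad[anchor] -= 2 * (a - n)
        grad[negative] += 2 * (a - n)
    return E - lr * grad


# Training: repeatedly show that "cat" is similar to "kitten" but not to "car".
for _ in range(100):
    E = train_step(E, vocab["cat"], vocab["kitten"], vocab["car"])


def embed(tokens: list[str]) -> np.ndarray:
    # Inference: look up each token's row, then mean-pool into one fixed-size vector.
    rows = E[[vocab[t] for t in tokens]]
    return rows.mean(axis=0)


print(sq_dist(E[vocab["cat"]], E[vocab["kitten"]]))
print(sq_dist(E[vocab["cat"]], E[vocab["car"]]))
print(embed(["cat", "kitten"]).shape)  # (8,)
```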

By transforming raw data into these dense, meaningful vectors, embedding models play a critical role in making sense of language, powering everything from search engines to chatbots with their ability to understand context and relationships between words.


Embedding Models: The Foundation of Modern Vector Search Systems

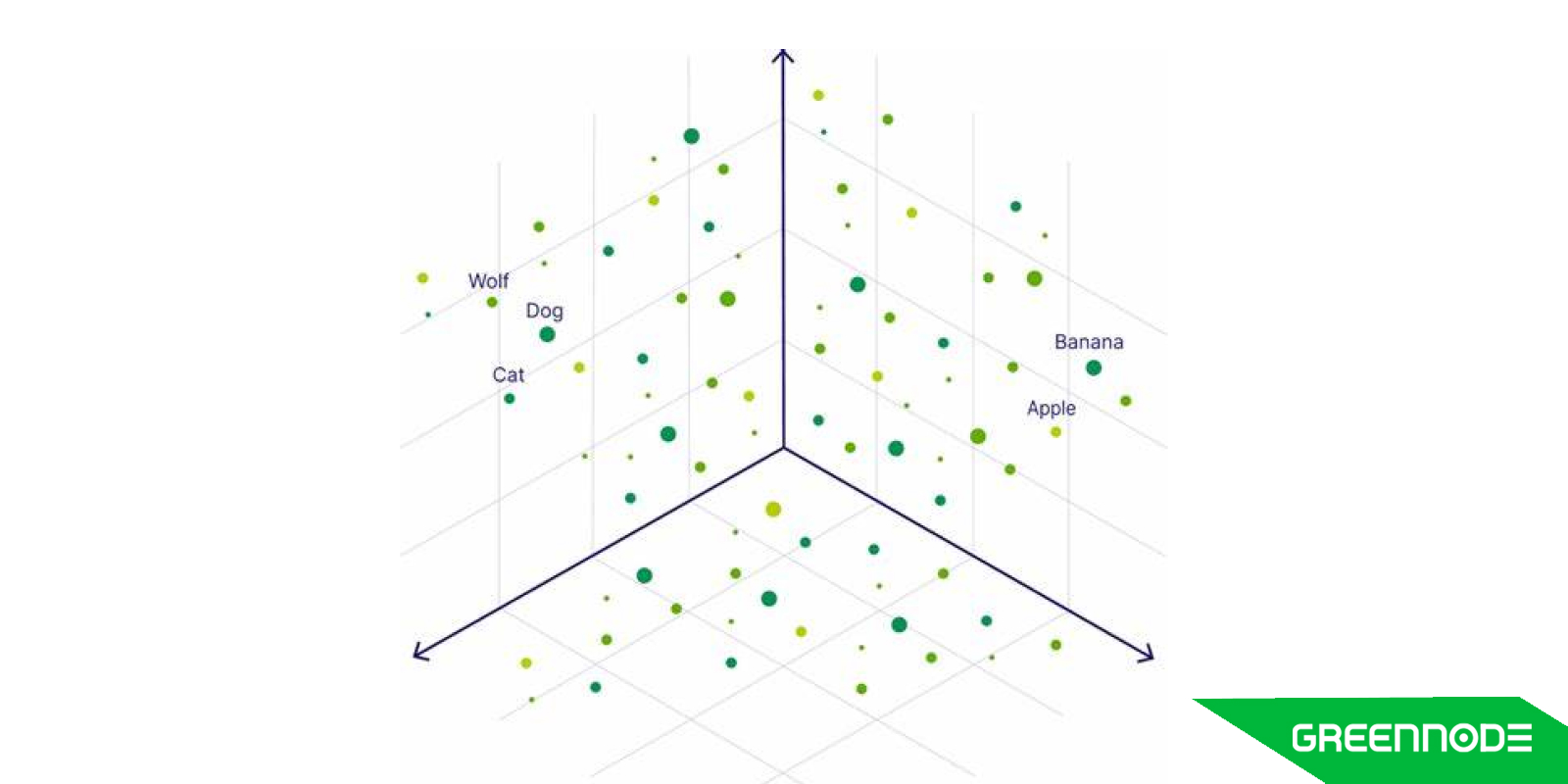

In a vector search system, embeddings are high-dimensional vectors, long sequences of continuous values, that live in what is called an embedding space. These vectors capture semantic meaning, placing similar words close to each other in the embedding space. Put more simply, the embedding layer acts like a dictionary or lookup table that maps each word or token to its vector.

For instance, “tea”, “coffee”, and “cookie” will sit closer to one another than “tea” and “car” do. Representing textual knowledge this way helps capture its semantic and syntactic meaning.
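The lookup-table view and the notion of closeness can be shown directly. In the sketch below the three-dimensional vectors are invented for illustration, not taken from any real model, and closeness is measured with Euclidean distance via the standard `math.dist`; cosine similarity is an equally common choice.

```python
import math

# Invented 3-d vectors standing in for learned embeddings.
EMBEDDINGS: dict[str, list[float]] = {
    "tea": [0.90, 0.80, 0.10],
    "coffee": [0.85, 0.75, 0.20],
    "cookie": [0.70, 0.90, 0.15],
    "car": [0.05, 0.10, 0.95],
}


def nearest(word: str, table: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    query = table[word]  # the "lookup table" step: word -> vector
    # Euclidean distance to every other word; smaller means more similar.
    others = [(w, math.dist(query, v)) for w, v in table.items() if w != word]
    return sorted(others, key=lambda wd: wd[1])[:k]


print([w for w, _ in nearest("tea", EMBEDDINGS)])  # ['coffee', 'cookie']
```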

Higher-dimensional embeddings can capture more complex relationships and offer richer representations of the data. However, they generally require larger training datasets and more computing resources, and they carry a higher risk of overfitting.

In natural language processing (NLP), embeddings play an important role in many tasks, such as:

- Text classification: assigning texts to categories based on their content.

- Sentiment analysis: determining the sentiment, such as the emotional tone, of a given text.

- Information retrieval: retrieving relevant documents or information from a large collection based on user queries.

In this blog, we will focus on their application in Retrieval-Augmented Generation (RAG) systems.

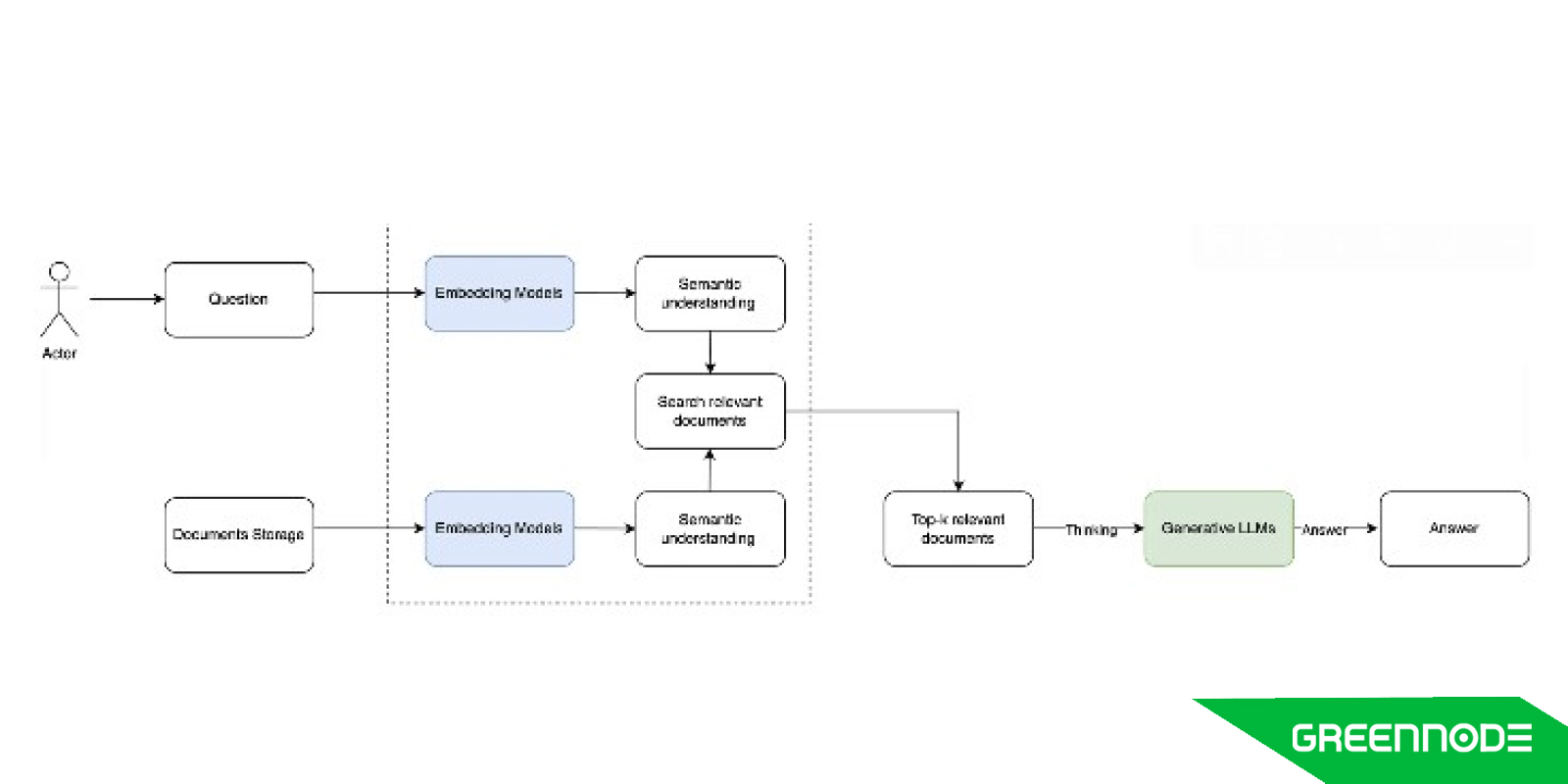

Leveraging Embedding Models for Enhanced RAG Systems

In Retrieval-Augmented Generation (RAG), information retrieval plays a crucial role in enhancing the performance of language models by integrating external knowledge during the text generation process. Here's a breakdown of how embeddings contribute to information retrieval in RAG:

Embedding generation: In a Retrieval-Augmented Generation (RAG) system, embeddings are crucial for transforming input queries and document passages into dense vector representations. These vectors capture semantic relationships between texts, enabling the retrieval of contextually relevant information.

Pre-trained embedding models, trained on large datasets, provide a strong foundation for general-purpose applications. However, they may fall short in domain-specific tasks that require specialized knowledge. To address this, fine-tuning is applied.

Fine-tuning adjusts pre-trained models with domain-specific data, refining the embeddings to better capture the nuances of the target domain. This enhances both the accuracy and adaptability of the RAG system, ensuring more relevant, contextually aligned information retrieval in specialized applications.

- Vector search: Once the embeddings are generated, a similarity search is performed using techniques like cosine similarity or distance-based metrics. The retrieved documents are the ones with embeddings that are most similar to the query's embedding.

- Knowledge retrieval: The retrieved documents provide external knowledge to the generative model, allowing it to produce more accurate, fact-based, and contextually rich responses during generation.

- Scalability: Combined with an approximate nearest neighbor (ANN) index, embedding-based retrieval lets RAG handle large-scale datasets efficiently by quickly narrowing the search space around the query embedding, without comparing the query against every document.

- Handling diverse queries: Embeddings allow the system to handle diverse and complex queries more effectively than traditional keyword-based retrieval, as the model captures deeper semantic meaning, even when exact keywords are not present.
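The scalability point depends on an index that avoids comparing the query with every document. One widely used family is the inverted file (IVF) index, available in libraries such as FAISS: documents are grouped into clusters, and a query only scans the clusters whose centroids are closest to it. The NumPy sketch below is a simplified illustration under assumptions (random vectors, 10 clusters, a few k-means iterations, one probed cluster). Because it skips the other clusters, it can occasionally miss a true nearest neighbor, which is the usual speed-for-recall trade-off of approximate search.

```python
import numpy as np


def squared_distances(x: np.ndarray, centroids: np.ndarray) -> np.ndarray:
    # Shape (len(x), len(centroids)): squared Euclidean distance of each row to each centroid.
    return ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)


def build_ivf(doc_vecs: np.ndarray, n_lists: int, n_iter: int = 10, seed: int = 0):
    """Cluster documents with a few rounds of k-means, then bucket them by cluster."""
    rng = np.random.default_rng(seed)
    centroids = doc_vecs[rng.choice(len(doc_vecs), n_lists, replace=False)].copy()
    for _ in range(n_iter):
        assign = squared_distances(doc_vecs, centroids).argmin(axis=1)
        for c in range(n_lists):
            members = doc_vecs[assign == c]
            if len(members):  # leave empty clusters where they are
                centroids[c] = members.mean(axis=0)
    assign = squared_distances(doc_vecs, centroids).argmin(axis=1)
    lists = [np.flatnonzero(assign == c) for c in range(n_lists)]
    return centroids, lists


def ivf_search(query, doc_vecs, centroids, lists, k=3, n_probe=1):
    """Search only the n_probe clusters whose centroids are closest to the query."""
    probe = squared_distances(query[None, :], centroids)[0].argsort()[:n_probe]
    candidates = np.concatenate([lists[c] for c in probe])
    dists = ((doc_vecs[candidates] - query) ** 2).sum(axis=1)
    return candidates[dists.argsort()[:k]], len(candidates)


rng = np.random.default_rng(1)
doc_vecs = rng.normal(size=(1000, 16))
centroids, lists = build_ivf(doc_vecs, n_lists=10)
ids, scanned = ivf_search(doc_vecs[42], doc_vecs, centroids, lists)
print(ids[0], scanned)  # document 42 ranks first; only one cluster was scanned
```

Raising `n_probe` scans more clusters, which improves recall at the cost of speed.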

In essence, embeddings are foundational for retrieving the most relevant external information in RAG systems, enriching the generated outputs with precise and contextually appropriate knowledge.
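Putting these steps together, the retrieval half of a RAG pipeline embeds the document chunks and the query, picks the most similar chunks, and inserts them into the generator's prompt. In this sketch `toy_embed` is a hypothetical stand-in (a hashed bag of words, so it captures word overlap rather than meaning), the prompt template is our own, and the call to the generative model is left out.

```python
import hashlib
import math
import re

DIM = 256  # hypothetical embedding size for the toy encoder


def toy_embed(text: str) -> list[float]:
    """Stand-in for a real embedding model: a hashed bag of words, L2-normalized.

    It only captures word overlap, not meaning; replace it with a real model in practice.
    """
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Vectors are unit length, so the dot product equals cosine similarity.
    q = toy_embed(query)
    scored = [(sum(a * b for a, b in zip(q, toy_embed(c))), c) for c in chunks]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [c for _, c in scored[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    # Knowledge retrieval: the retrieved chunks are placed in the generator's prompt.
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\nAnswer:"
    )


chunks = [
    "A neural network is a model built from layers of connected neurons.",
    "Return of the Jedi was released in 1983.",
    "Wool coats keep you warm in winter.",
]
query = "What is a neural network?"
prompt = build_prompt(query, retrieve(query, chunks, k=1))
print(prompt)  # this string would then be sent to a generative model (not shown)
```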

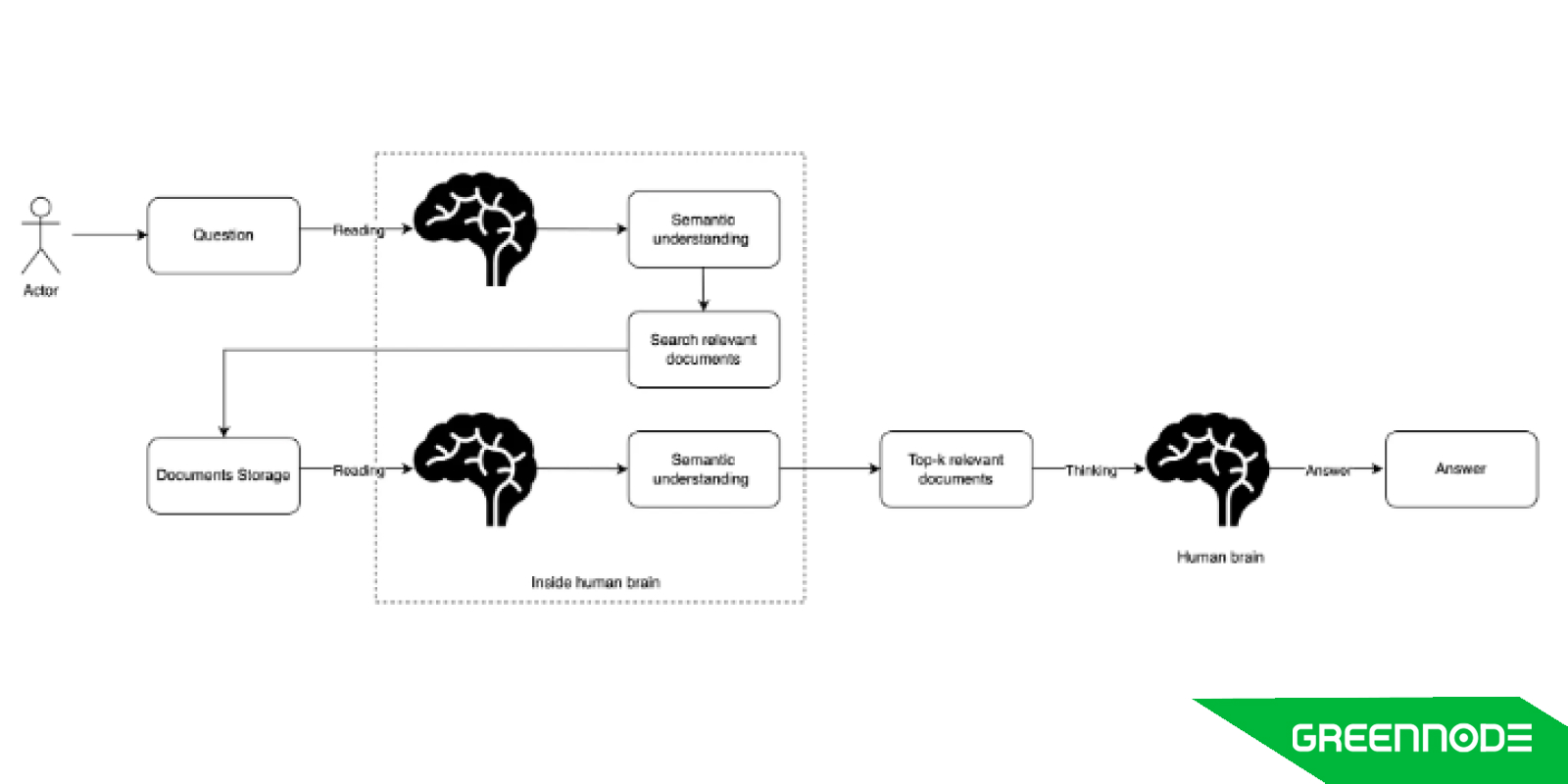

Image 5: How a human answers a question vs. a RAG chatbot system

Final Verdict

In summary, embedding models serve as a pivotal component in modern Retrieval-Augmented Generation (RAG) systems, bridging the gap between raw data and meaningful insights. By converting input queries and document passages into dense vector representations, embeddings enable the retrieval of contextually relevant information, enhancing the overall performance of language models.

The transition from traditional search methods to vector search, driven by embeddings, allows for a deeper understanding of semantic relationships and user intent. While pre-trained models provide a robust foundation for general applications, fine-tuning on domain-specific data significantly boosts accuracy and adaptability, ensuring that the RAG system can effectively handle specialized tasks.

Ultimately, the power of embeddings lies in their ability to capture the nuances of language, making them indispensable for a wide range of applications, from text classification to sentiment analysis. As RAG systems continue to evolve, leveraging embedding models will remain essential for delivering precise, contextually rich responses, transforming how we interact with information and paving the way for more intuitive and effective search experiences.