Shipping AI models to production shouldn’t feel like launching a rocket. But for most AI/ML teams, GPU model serving still involves wrestling with CUDA versions, dependency hell, and Kubernetes nightmares—just to get low-latency inference working.

To handle this tech bottleneck, NVIDIA NIM (NVIDIA Inference Microservices) offers a streamlined solution: containerized, production-ready inference endpoints optimized for NVIDIA GPUs. Each NIM package includes pre-tuned models, APIs, and all runtime dependencies, and is optimized to serve models like DeepSeek, LLaMA, Mistral, and SDXL at high throughput, ready to plug into your app via REST or gRPC.

Now with NIM on the GreenNode AI Platform, deploying AI inference is finally what it should’ve been all along: instant, scalable, and painless. No infra puzzles, no idle GPU costs — just pure low-latency AI deployment in minutes.

Let’s dive in!

Common Challenges for AI Teams in Model Inference

Deploying AI models into production is far more complex than simply running a piece of code. While training models has become more accessible with open-source models and available checkpoints, the inference phase (predicting outputs from a trained model) remains one of the most challenging and resource-intensive steps in the AI development process. Several factors explain why:

- Complex Infrastructure Setup and Time-Consuming Configuration: Setting up the infrastructure for inference requires integrating various components like Triton Inference Server, TorchServe, vLLM, HuggingFace Text Generation Inference, or manually building with CUDA. Configuring the runtime environment, resolving library conflicts, and managing model versioning often take days to set up and perfect. This complexity becomes a significant barrier for AI teams, especially when trying to ensure a smooth, scalable deployment process.

- High DevOps/MLOps Skills Requirement: For inference to run smoothly and meet real-world demands, AI teams need to address numerous infrastructure-related challenges, including autoscaling, GPU-aware job scheduling, batching, and ensuring high availability. These tasks require in-depth knowledge of DevOps/MLOps practices, which aren't always readily available within AI teams.

- High Hardware Costs and Inefficient Resource Utilization: For models that experience fluctuating request loads (e.g., peak traffic during the day and low traffic at night), GPUs may remain idle during low-traffic hours but still incur costs. Inefficient resource allocation can lead to unnecessary hardware expenses, contributing to higher operational costs for organizations.

- Challenges in Serving Large or Distributed Models: Serving large language models (LLMs) like DeepSeek or LLaMA often requires distributing the model across multiple GPUs (tensor parallelism), orchestrating those GPUs, and applying optimization techniques such as KV-cache management, paged attention, and continuous batching. These configurations can be difficult to set up without specialized libraries such as vLLM or SGLang. Learn more about GreenNode's support for the vLLM framework here: Maximizing AI Throughput with GreenNode AI Platform Latest Releases.

- Lack of Dynamic Scaling Capabilities: Many current inference systems lack the ability to dynamically share GPU resources across smaller workloads, meaning each small task requires its own dedicated physical GPU. This results in significant resource wastage and higher costs when running multiple models or services concurrently.
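To make the large-model challenge above more concrete, here is a rough sketch of how KV-cache memory grows with context length and batch size, and how tensor parallelism splits it across GPUs. The model shape used here is hypothetical and chosen only for illustration; real serving stacks such as vLLM manage this memory in pages and add their own overhead.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size, bytes_per_value=2):
    """Estimate KV-cache size: one key and one value vector per layer, KV head, and token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


def per_gpu_bytes(total_bytes, num_kv_heads, tensor_parallel_size):
    """Split the cache evenly across GPUs, assuming KV heads divide evenly among them."""
    if num_kv_heads % tensor_parallel_size != 0:
        raise ValueError("KV heads must divide evenly across tensor-parallel GPUs")
    return total_bytes // tensor_parallel_size


# Hypothetical model shape (not taken from any specific model card), FP16 values
total = kv_cache_bytes(num_layers=32, num_kv_heads=8, head_dim=128, seq_len=4096, batch_size=16)
print(f"total KV cache: {total / 2**30:.1f} GiB")  # 8.0 GiB
print(f"per GPU with 4-way tensor parallelism: {per_gpu_bytes(total, 8, 4) / 2**30:.1f} GiB")  # 2.0 GiB
```

Even at this modest size, the cache alone takes several gigabytes on top of the model weights, which is why batching and memory management usually have to be tuned together.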

These challenges not only delay the time-to-market for AI solutions but also add significant costs, making it difficult to scale AI systems efficiently.

NIM Integration: A Pre-Configured Framework for Seamless Inferencing

NVIDIA Inference Microservices (NIM) is a powerful and simplified solution developed by NVIDIA to accelerate AI model deployment into production environments. Instead of spending weeks setting up complex infrastructure stacks, NIM offers a fully pre-configured, containerized framework that helps AI practitioners and engineers move from model development to inference in just minutes.

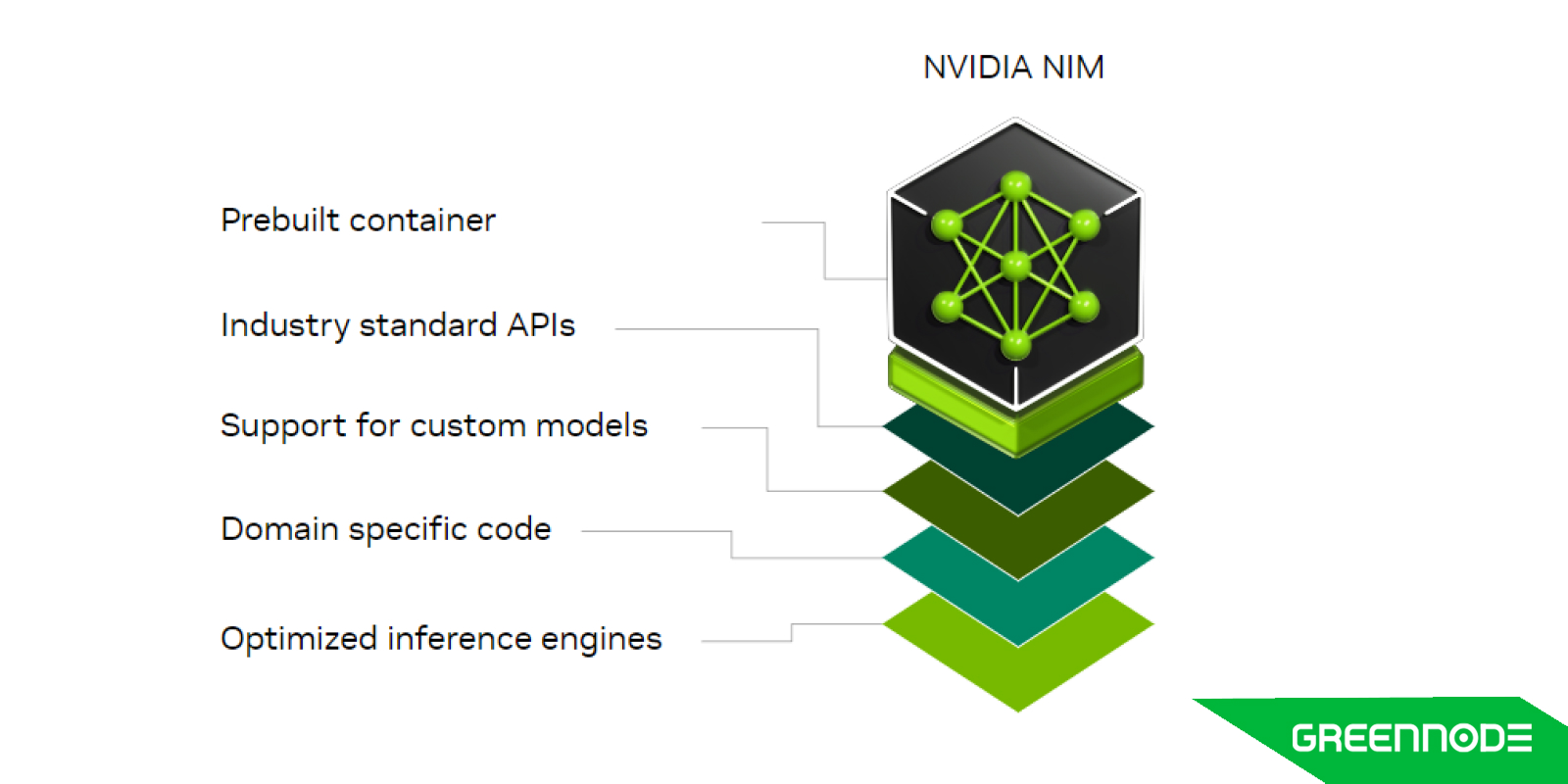

A typical NIM package includes four core components:

- First, it ships with pre-optimized AI models that are already tuned for production.

- Second, it bundles the essential runtime stack (such as TensorRT-LLM, CUDA, and the Triton Inference Server), already integrated and ready to run.

- Third, NIM exposes models via clean REST, gRPC, and OpenAI-compatible APIs, making integration into existing applications or pipelines seamless.

- Finally, NIM runs as containerized microservices, making it scalable and easy to orchestrate in cloud-native environments.

Compared to traditional inference setups that require manual installation, dependency management, and DevOps overhead, NIM drastically reduces friction.
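Because the APIs are OpenAI-compatible, calling a NIM endpoint can look much like calling any other chat completion service. The sketch below builds such a request with Python's standard library. The base URL, access token, and model name are placeholders, and the Bearer-token header is an assumption about how a token-protected endpoint would be called; check your own deployment for the actual values.

```python
import json
import urllib.request


def build_chat_request(base_url, token, model, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder endpoint, token, and model name for illustration only
req = build_chat_request("https://inference.example.com", "YOUR_ACCESS_TOKEN", "example-model", "Hello")
print(req.get_method(), req.full_url)
```

To actually send it, pass `req` to `urllib.request.urlopen` and read the reply text from `choices[0].message.content` in the JSON response.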

Accelerate Your AI Production with NIM Integration on GreenNode AI Platform

GreenNode AI Platform takes NVIDIA NIM to the next level by integrating it directly into our pre-configured GPU infrastructure, turning complex AI model deployment into a seamless plug-and-play experience. With NIM libraries and model frameworks natively supported on the GreenNode AI Platform, customers no longer need to worry about infrastructure setup or dependency management. After entering a valid NIM license, users can immediately start serving AI models through an access token–based endpoint, ready for inference in minutes.

This tight integration means that AI teams, even without dedicated DevOps support, can confidently deploy and scale models in production. GreenNode handles all the infrastructure under the hood—runtime orchestration, GPU optimization, and load balancing—while providing full monitoring and logging dashboards for observability and performance tracking. It’s built for engineers who want results fast, not configuration headaches.

| Criteria | On-Prem Deployment | Public Cloud (AWS, GCP, Azure) | NIM on Hyperscaler (NVIDIA) | GreenNode AI Platform |
|---|---|---|---|---|
| Setup Time | Weeks to months | Several days to weeks | Hours to days | Minutes – ready-to-run with NIM license |
| DevOps Complexity | High – requires infra orchestration, CI/CD, monitoring | Medium – manual runtime setup, scaling configurations | Medium – some prebuilt support, but still infra-heavy | Low – fully managed, no DevOps required |
| Infrastructure Cost | High upfront (CAPEX), ongoing maintenance (OPEX) | High GPU hourly cost + egress + storage fees | Software licensing + GPU cost + potential lock-in | Transparent, usage-based GPU pricing |
| Runtime Optimization | Manual tuning – CUDA, TensorRT, Triton setup required | Manual or semi-auto (depends on provider/tooling) | Optimized, but not always tunable by user | Pre-optimized for A100/H100 GPUs |
| Scalability | Difficult – requires load balancers, cluster managers | Scalable, but needs proper setup and tuning | Auto-scaling supported, with licensing dependencies | Auto-scaling supported, with licensing dependencies |
| Security & Enterprise Readiness | Fully customizable, but needs compliance setup | Varies by provider, requires additional setup | Generally strong, depending on region | Built-in security controls and audit logging |
| Monitoring & Observability | Must be built or integrated manually | Available via cloud-native tools (extra config) | Limited by platform visibility | Built-in dashboards and full metrics/log tracing |
| Model Access & Integration | API/manual integration | API-based, but needs setup | Integrated APIs, limited | Token-based access, plug-and-play with NIM license |
| Ideal For | Enterprises with in-house infra teams | Teams with cloud experience and flexible budgets | Enterprises seeking end-to-end NVIDIA stack | AI teams/startups looking for speed, simplicity & scale |

With GreenNode, you get an optimized, GPU-native environment where NIM runs out-of-the-box, with no hidden fees, minimal setup, and transparent usage-based billing. It's the fastest, most cost-effective way to bring NIM-powered AI applications into real-world production—without compromising on speed, performance, or scalability.

Top 5 Use Cases for NIM Integration on GreenNode AI Platform

Deploying AI inference pipelines often involves a tangle of frameworks, container builds, and infrastructure orchestration. But with NVIDIA NIM pre-integrated on the GreenNode AI Platform, the most common and impactful use cases are now faster to build, easier to scale, and significantly more cost-efficient. Below are five powerful use cases where GreenNode’s NIM integration unlocks speed, performance, and simplicity — all without requiring deep DevOps involvement.

Conversational AI and LLM-Powered Chatbots

One of the most common uses of NIM is in serving large language models like LLaMA 2 and Mistral for interactive chat interfaces, customer support bots, and virtual assistants. Traditionally, deploying LLMs meant managing CUDA, batching logic, and latency tuning across multiple GPUs. With GreenNode, these models come pre-packaged via NIM, fully optimized for GPU inference with built-in streaming support and token metering.

- Latency: 500ms – 1.5s (per prompt, depending on token count)

- Cost: ~$0.003 – $0.01 per request (based on usage-based billing on H100)
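Streaming is what makes a chatbot feel responsive, since text can be shown as it is generated rather than after the full reply is ready. As a rough sketch, assuming the endpoint streams in the OpenAI-style server-sent events format (`data: {...}` lines ending with `data: [DONE]`), a client could reassemble the reply like this. The sample lines are hand-written for illustration, not captured from a real endpoint.

```python
import json


def iter_stream_text(lines):
    """Yield text fragments from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and anything that is not a data event
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


# Hand-written sample events (illustrative only)
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    '',
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    'data: [DONE]',
]
print("".join(iter_stream_text(sample)))  # prints "Hello"
```

In a real client, `lines` would be the decoded lines of the HTTP response body, and each fragment would be pushed to the chat UI as soon as it arrives.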

Retrieval-Augmented Generation (RAG) Pipelines

For enterprises building knowledge bots or custom semantic search systems, RAG pipelines combine embedding models with LLMs to deliver accurate, context-rich responses.

On GreenNode, you can easily integrate NIM’s embedding models (such as BGE or E5) with external vector databases and route the retrieved content through NIM-powered LLM endpoints like DeepSeek. Everything runs in a GPU-native environment, eliminating latency caused by cross-platform communication or model cold starts. Learn more about GreenNode RAG system: Fine-tuning RAG Performance with Advanced Document Retrieval System.

- Latency: 1–2s (embedding + vector search + generation)

- Cost: ~$0.004 – $0.012 per query
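The retrieve-then-generate flow described above can be sketched in a few lines. In this illustration, toy 3-dimensional vectors stand in for real embedding-model output, and an in-memory list stands in for the vector database; the function names are hypothetical. In practice, the vectors would come from an embedding endpoint and the final prompt would be sent to an LLM endpoint.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, index, k=2):
    """Return the k passages whose vectors are most similar to the query vector."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, passages):
    """Assemble retrieved passages and the question into one LLM prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy vectors standing in for embedding-model output (illustrative only)
index = [
    ("Refunds are processed within 5 days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on Sundays.", [0.0, 0.2, 0.9]),
    ("Refund requests need an order ID.", [0.8, 0.3, 0.1]),
]
query_vec = [1.0, 0.2, 0.0]  # pretend embedding of the question below
passages = retrieve(query_vec, index, k=2)
print(build_prompt("How do refunds work?", passages))
```

The two refund passages rank highest here, so only they reach the prompt. Keeping the context this focused is what lets the LLM answer from retrieved facts rather than guesswork.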

Real-Time Speech Recognition with FastConformer

Voice-based AI is gaining adoption across industries—from smart assistants to real-time transcription tools for meetings and customer service. Using NIM’s optimized FastConformer model, teams can transcribe voice data with high accuracy and sub-second latency.

- Latency: 200–400ms per 10s audio

- Cost: ~$0.002 per 15s audio chunk

Document Understanding with Transformer-based OCR

Many businesses still struggle to extract structured information from scanned forms, invoices, or handwritten notes. NIM packages models like PaddleOCR that are designed for end-to-end document parsing without external OCR tools. GreenNode deploys these models in containerized microservices, giving you a fast, reliable API to turn raw documents into structured JSON outputs.

- Latency: 500ms – 1.2s per page

- Cost: ~$0.005 per page processed

Vision AI and Image Classification

Computer vision tasks, including defect detection, object recognition, and product classification, demand high-speed inference and batch processing capabilities.

With NIM on GreenNode, vision models like EfficientNet and FastViT are optimized to deliver low-latency predictions on large image volumes. Whether you're processing single images or running thousands in batch, you get consistent throughput without GPU waste or manual tuning.

- Latency: 30ms – 100ms per image (batch supported)

- Cost: ~$0.001 – $0.002 per image

Final Thoughts

Production AI shouldn't be this hard—but it often is. From DevOps overhead to infrastructure setup, getting models into real-world applications is still one of the biggest bottlenecks for AI teams.

With NVIDIA NIM integrated into GreenNode's GPU-native platform, deploying optimized inference pipelines becomes fast, simple, and cost-effective. No manual setup, no idle fees—just high-performance, low-latency inference that scales with your needs.

Whether you’re serving LLMs, building chatbots, or launching real-time AI features, NIM on GreenNode gets you from model to production in minutes. Try NIM on GreenNode AI Platform today or contact us for a 1:1 consultation, with a reply within 30 minutes.