The integration of Artificial Intelligence, especially Generative AI (GenAI) and Large Language Models (LLMs), into modern technologies is no longer a novelty. The application of these models in robotics and automation, however, is only now seeing rapid growth, particularly in service robotics across industries such as hospitality, healthcare, smart manufacturing, and retail.

Despite the potential, adopting GenAI and LLMs in robotics still faces major hurdles, including compute-intensive training requirements, lack of scalable infrastructure, and fragmented toolchains. According to a 2024 report by Boston Consulting Group (BCG), 74% of companies have yet to show tangible value from their use of AI, highlighting the challenges organizations face in achieving and scaling AI value across functions.

In this article, we’ll walk through a real-world use case: how a major robotics and automation client set out to train and fine-tune Large Language Models (LLMs), scale its operations, and unlock a new generation of AI-powered service robots.

Let’s dive in.

A Snapshot of the Robotics and Automation AI Application Market Size

Generative AI is redefining what robots can do—empowering them with enhanced perception, contextual decision-making, and natural interaction with humans. From multimodal understanding to autonomous task execution, LLMs are making it possible for machines to "understand" and "respond" intelligently to real-world environments.

The global AI in robotics market is expanding rapidly. According to Grand View Research, it is projected to reach $124.77 billion by 2030, growing at a CAGR of 38.5% from 2024 to 2030. This growth is driven by advances in hardware, Generative AI, and increasing automation across sectors such as:

- Smart Manufacturing – Automating inspection, assembly, and maintenance with AI-powered vision and decision-making systems

- Healthcare Robotics – Enabling service robots to assist with logistics, sanitation, and patient interaction

- Hospitality & Retail – Enhancing customer service through conversational AI, autonomous delivery, and real-time analytics

- Warehouse & Logistics – Optimizing inventory management, route planning, and robotic picking through predictive and generative models

These technologies are enabling robots to perform tasks autonomously, interact with humans naturally, and adapt to changing environments. Yet, while the opportunities are vast, many companies still face significant challenges in realizing AI’s full potential. Limited access to scalable infrastructure, the complexity of training large-scale models, and a shortage of AI expertise remain key obstacles, particularly in industries relying on real-time, mission-critical robotics.

In line with global trends, our client, a leading robotics and automation company in Vietnam, is actively exploring the integration of Generative AI and Large Language Models to improve robotic perception, autonomous task execution, and natural interaction with humans. This is where the journey begins.

Building Intelligent Robotic Assistants With Large Language Models (LLMs)

The journey began when our customer set out to develop and deploy AI-powered assistants for integration into service robots, targeting applications in smart transportation and autonomous virtual assistants. Their core objective was to build multimodal Vision-Language Models (VLMs) capable of understanding visual cues, processing natural language, and delivering context-aware responses in dynamic, real-world settings.

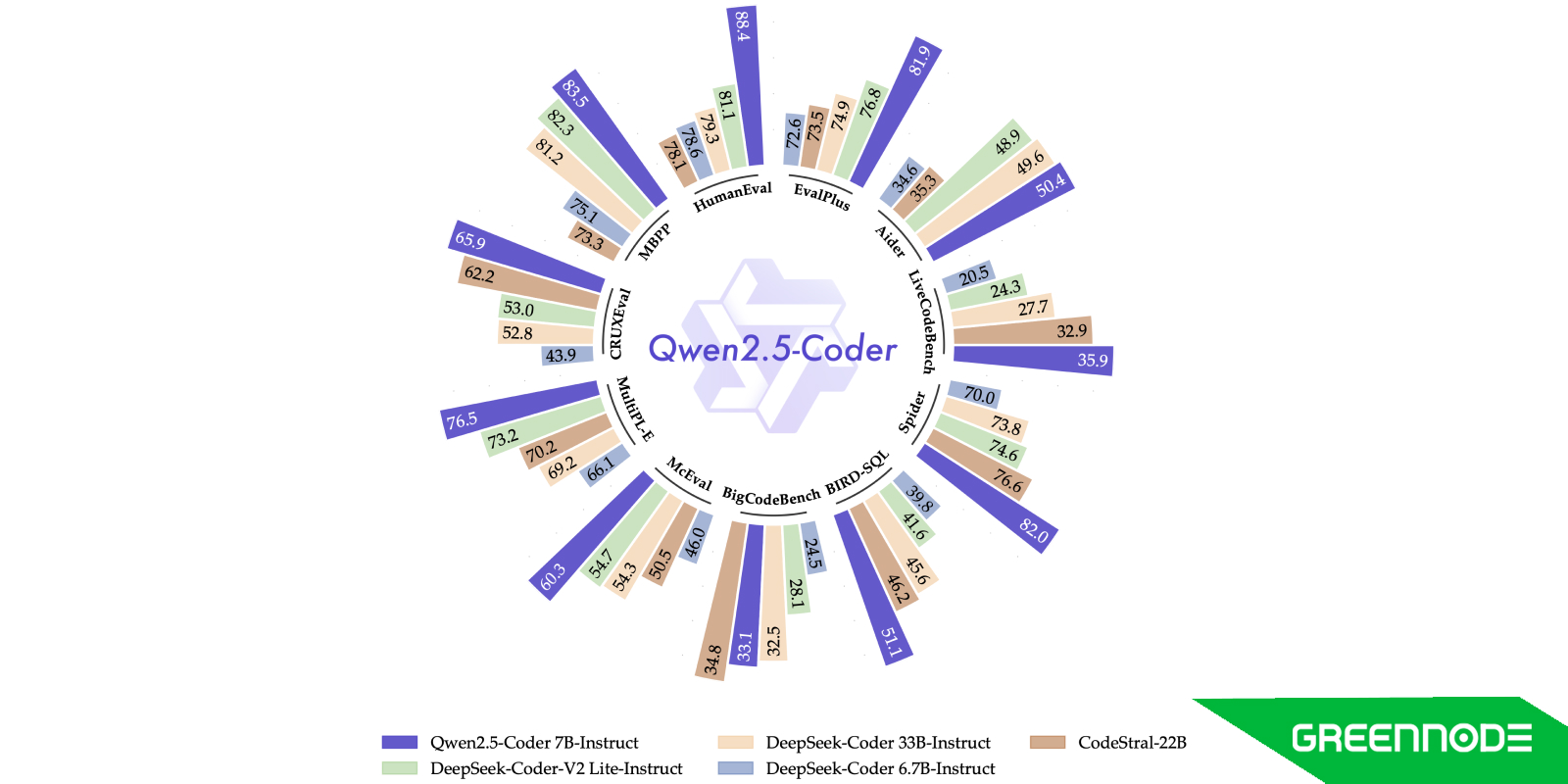

To achieve this, the team needed to train models from scratch and fine-tune them, including variants of Qwen-2.5-VL at 1B, 3B, and 7B parameters. These models form the foundation of robotic intelligence, enabling real-time decision-making and multimodal interaction.
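To give a rough sense of why these model sizes call for multi-GPU infrastructure, here is a minimal sketch of a common rule of thumb for training memory. It assumes mixed-precision training with the Adam optimizer, at about 16 bytes of model state per parameter, and it ignores activations entirely. The customer's actual training configuration is not described in this article, so treat the numbers as illustrative only.

```python
# Rule-of-thumb memory for model states only (activations are NOT included).
# Assumption: mixed-precision training with Adam, i.e. per parameter
#   2 bytes of 16-bit weights + 2 bytes of 16-bit gradients
#   + 12 bytes of fp32 optimizer state (master weights, momentum, variance).
BYTES_PER_PARAM = 2 + 2 + 12


def model_state_gb(num_params: float) -> float:
    """Return the approximate model-state footprint in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM / 1e9


# Parameter counts quoted in the article.
MODEL_SIZES = {"1B": 1e9, "3B": 3e9, "7B": 7e9}
ESTIMATES_GB = {name: model_state_gb(n) for name, n in MODEL_SIZES.items()}

for name, gb in ESTIMATES_GB.items():
    print(f"{name}: ~{gb:.0f} GB of model states")
```

Under these assumptions, the 7B variant needs roughly 112 GB for model states alone, which is more than a single 80 GB GPU can hold before activations are counted. That is one reason sharded, multi-GPU training becomes necessary at this scale.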

However, they encountered significant roadblocks when trying to meet the following requirements with traditional cloud providers:

- Multi-node compute with high-bandwidth interconnects for training models from scratch

- A data pipeline able to handle over 12TB of raw multimodal data, with the final processed dataset potentially growing to 3x that size

- More than 50TB of high-performance storage

- The ability to handle uploads, downloads, and file operations while scaling compute dynamically
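The storage figures above fit together with some simple arithmetic. The article does not say how the 50TB requirement was derived, so the sketch below shows only one plausible reading: keep the raw data alongside a processed dataset three times its size. The `keep_raw` and `headroom` parameters are hypothetical knobs added for illustration, not customer settings.

```python
# Back-of-the-envelope storage sizing from the figures quoted in the article.
RAW_TB = 12.0            # raw multimodal data
EXPANSION_FACTOR = 3.0   # processed dataset may grow to ~3x the raw size


def required_storage_tb(raw_tb, expansion, keep_raw=True, headroom=0.0):
    """Estimate capacity in TB for the processed data, optionally plus raw data.

    keep_raw and headroom are illustrative assumptions.
    """
    processed_tb = raw_tb * expansion
    total_tb = processed_tb + (raw_tb if keep_raw else 0.0)
    return total_tb * (1.0 + headroom)


# Raw (12 TB) + processed (36 TB), with no spare capacity.
BASELINE_TB = required_storage_tb(RAW_TB, EXPANSION_FACTOR)
# The same estimate with a hypothetical 10% headroom for checkpoints and scratch space.
WITH_HEADROOM_TB = required_storage_tb(RAW_TB, EXPANSION_FACTOR, headroom=0.10)

print(f"baseline: {BASELINE_TB:.1f} TB, with headroom: {WITH_HEADROOM_TB:.1f} TB")
```

Under this reading, the baseline comes to 48 TB, and even modest headroom pushes the total past 50 TB, which is consistent with the requirement listed above.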

To meet these demanding requirements, we worked closely with the customer to provide the necessary resources. At its core, the GreenNode AI Platform is designed to accommodate such high-performance workloads. The customer started by independently provisioning notebook instances and network volumes directly through the platform, without any need for technical support, demonstrating the platform’s ease of use and self-service capabilities.

Given the scale of their model training needs, elasticity and infrastructure readiness were critical. To ensure this, we prepared a tailored solution that included:

- Multi-node training architecture that enabled the customer to seamlessly scale GPU compute resources while maintaining full cost transparency through automated pricing estimation

- Integrated storage across tiers, offering a hybrid setup that spanned from CPU-based VM processing to high-performance storage tightly coupled with GPU nodes, capable of opening and processing nearly 1,000 files simultaneously

- Flexible data handling pipelines that allowed input and output data to be collected from multiple sources, such as Google Drive, GCP, AWS, and Hugging Face, directly into virtual machines (VMs)

- Streamlined local-to-cloud data transfer that enabled continuous streaming of collected data from local PCs to the cloud for centralized training
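To make the idea of processing many files at once more concrete, here is a minimal sketch using only the Python standard library. It is not the platform's implementation. It fans file reads out across a bounded thread pool, so it overlaps I/O rather than literally holding 1,000 file handles open. The function names, the worker count, and the generated sample files are all assumptions made for illustration.

```python
import hashlib
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def summarize_file(path: Path) -> tuple[str, int, str]:
    """Read one file and return (name, size in bytes, SHA-256 hex digest)."""
    data = path.read_bytes()
    return path.name, len(data), hashlib.sha256(data).hexdigest()


def process_files(paths, max_workers=64):
    """Process many files concurrently; threads suit I/O-bound reads."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves input order, so results line up with paths.
        return list(pool.map(summarize_file, paths))


def demo(num_files: int = 1000):
    """Create num_files small files in a temporary directory and process them."""
    with tempfile.TemporaryDirectory() as tmp:
        paths = []
        for i in range(num_files):
            path = Path(tmp) / f"sample_{i:04d}.bin"
            path.write_bytes(f"record {i}".encode())
            paths.append(path)
        return process_files(paths)


results = demo()
print(f"processed {len(results)} files")
```

On real high-performance storage, the right `max_workers` value depends on the file sizes and the storage backend, so it is worth measuring rather than guessing.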

Overall, we established a complete data pipeline that supports both storage and data refinement for model training. This pipeline allows seamless ingestion of input data from local PCs or various custom data sources, optimizes storage capacity and cost, and enables standardized data formatting for effective visualization on local machines.
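One building block of such an ingestion pipeline is moving data in chunks and verifying it on arrival. The sketch below illustrates that idea with the standard library only. It assumes a local destination path as a stand-in for cloud storage, and the chunk size and function names are hypothetical, not part of the GreenNode platform.

```python
import hashlib
import tempfile
from pathlib import Path

CHUNK_SIZE = 1024 * 1024  # 1 MiB per chunk; an arbitrary illustrative value


def copy_in_chunks(src: Path, dst: Path, chunk_size: int = CHUNK_SIZE) -> str:
    """Stream src to dst chunk by chunk; return the SHA-256 of the bytes sent."""
    digest = hashlib.sha256()
    with src.open("rb") as reader, dst.open("wb") as writer:
        while chunk := reader.read(chunk_size):
            writer.write(chunk)
            digest.update(chunk)
    return digest.hexdigest()


def sha256_of(path: Path, chunk_size: int = CHUNK_SIZE) -> str:
    """Hash a file without loading it fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as reader:
        while chunk := reader.read(chunk_size):
            digest.update(chunk)
    return digest.hexdigest()


def transfer_and_verify(src: Path, dst: Path) -> bool:
    """Copy src to dst and confirm the destination matches what was sent."""
    sent_digest = copy_in_chunks(src, dst)
    return sent_digest == sha256_of(dst)


# Usage: the destination is a local file standing in for a cloud volume.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "capture.bin"
    target = Path(tmp) / "uploaded.bin"
    source.write_bytes(b"\x01" * (3 * CHUNK_SIZE + 123))
    print("verified:", transfer_and_verify(source, target))
```

Reading in fixed-size chunks keeps memory use flat regardless of file size, which matters when individual captures are large.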

Why GreenNode AI Platform Was Chosen

As a leading local player in developing AI-driven automation and machine learning applications, our customer brought deep expertise and innovation to the table. It was an honor for GreenNode to collaborate with such a forward-thinking R&D team on a project pushing the boundaries of robotics and AI integration.

GreenNode was selected for this initiative due to a combination of competitive pricing and immediate access to high-performance H100 GPU resources—critical for training large-scale vision-language models (VLMs) from scratch. Another decisive factor was our intuitive AI portal, which empowered the customer to self-provision and manage infrastructure—from launching notebook instances to configuring network volumes—without the need for deep DevOps intervention.

Following a successful proof-of-concept (POC) phase, the models developed are now on track to move into production, hosted directly on the GreenNode infrastructure. With an SLA of up to 99.5%, we are committed to delivering high-availability compute resources and seamless service continuity, ensuring our customers can scale confidently while optimizing both performance and cost.
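For readers who want to translate an availability percentage into time, the arithmetic is simple. The sketch below assumes the SLA is measured over a 30-day month or a 365-day year, and it uses the best-case 99.5% figure. The actual measurement window and terms are defined by the service agreement, not by this example.

```python
def allowed_downtime_hours(sla_percent: float, period_hours: float) -> float:
    """Return the maximum downtime in hours that still meets the SLA."""
    return period_hours * (1.0 - sla_percent / 100.0)


HOURS_PER_30_DAY_MONTH = 30 * 24    # 720 hours (assumed measurement window)
HOURS_PER_365_DAY_YEAR = 365 * 24   # 8,760 hours (assumed measurement window)

MONTHLY_HOURS = allowed_downtime_hours(99.5, HOURS_PER_30_DAY_MONTH)
YEARLY_HOURS = allowed_downtime_hours(99.5, HOURS_PER_365_DAY_YEAR)

print(f"per month: {MONTHLY_HOURS:.1f} h, per year: {YEARLY_HOURS:.1f} h")
```

At 99.5% availability, this works out to about 3.6 hours of permissible downtime in a 30-day month, or about 43.8 hours over a year.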

Final Verdict

GreenNode AI Platform provides the robust technical foundation that robotics and automation teams need to stay ahead in the generative AI race. From training vision-language models (VLMs) from scratch to deploying context-aware AI assistants at scale, the platform empowers R&D teams to move faster, reduce reliance on DevOps, and bring cutting-edge AI applications into real-world environments.

If you're looking to build, fine-tune, or deploy your own AI models, whether for robotics, automation, or beyond, getting started with GreenNode takes just a few minutes. Create an account, launch your environment, and tap into high-performance H100 compute and scalable infrastructure tailored for AI workloads. Explore the GreenNode AI Platform today!